Apple offers Private Cloud Compute up for a security probe

Apple said at launch that Private Cloud Compute's security would be inspectable by third parties. On Thursday, it fulfilled that promise.

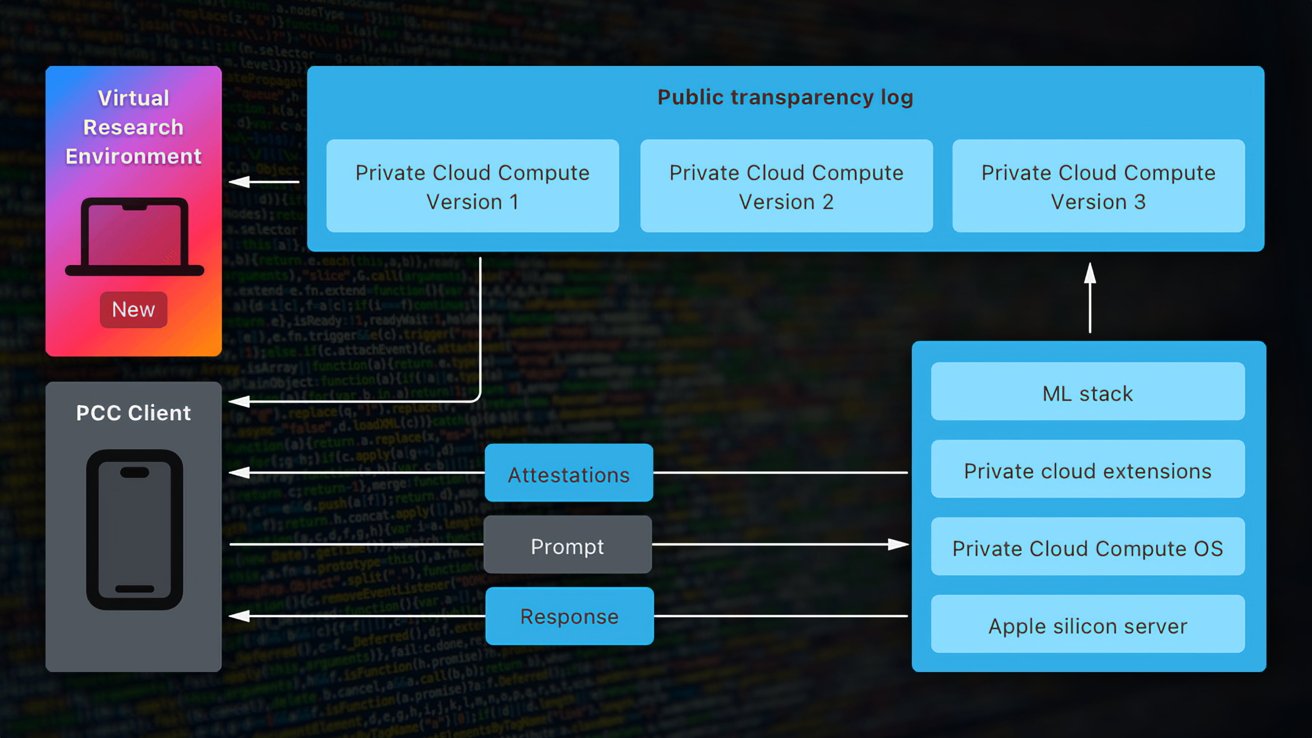

The virtual environment for testing Private Cloud Compute - Image credit: Apple

In June, Apple introduced Apple Intelligence and its cloud-based processing facility, Private Cloud Compute. It was pitched as a secure and private way to handle in-cloud processing of Siri queries under Apple Intelligence.

As well as insisting that it used cryptography and didn't store user data, Apple also insisted that the features could be inspected by independent experts. On October 24, it offered an update on that plan.

In a Security Research blog post titled "Security research in Private Cloud Compute," Apple explains that it provided third-party auditors and some security researchers with early access. This included access to resources created for the project, including the PCC Virtual Research Environment (VRE).

The post also says that the same resources are being made publicly available from Thursday. Apple says this allows all security and privacy researchers, "or anyone with interest and a technical curiosity" to learn about Private Cloud Compute's workings and to make their own independent verification.

Resources

The release includes a new Private Cloud Compute Security Guide, which explains how the architecture is designed to meet Apple's core requirements for the project. It includes technical details of PCC components and their workings, how authentication and routing of requests occur, and how the security holds up to various forms of attack.

The VRE is Apple's first ever for any of its platforms. It consists of tools to run the PCC node software on a virtual machine.

This isn't exactly the same code as used on servers, as there are "minor modifications" for it to work locally. Apple insists the software runs identically to the PCC node, with changes only to the boot process and the kernel.

A diagram showing how elements of Private Cloud Compute interact with the new virtual research environment - Image credit: Apple

The VRE also includes a virtual Secure Enclave Processor, and takes advantage of the built-in macOS support for paravirtualized graphics.

Apple is also making the source code for some key components available for inspection. Offered under a limited-use license intended for analysis, the source code includes the CloudAttestation project for constructing and validating PCC node attestations.

There's also the Thimble project, which includes a daemon for a user's device that works with CloudAttestation for verifying transparency.

PCC bug bounty

Furthermore, Apple is expanding its Apple Security Bounty. It promises "significant rewards" for reports of issues with security and privacy in Private Cloud Compute.

The new categories in the bounty directly align with critical threats from the Security Guide. These include accidental data disclosure, external compromise from user requests, and physical or internal access vulnerabilities.

The prize scale starts from $50,000 for the accidental or unexpected disclosure of data due to a deployment or configuration issue. At the top end of the scale, demonstrating arbitrary code execution with arbitrary entitlements can earn participants up to $1 million.

Apple adds that it will consider any security issue that has a "significant impact" to PCC for a potential award, even if it's not lined up with one of the defined categories.

"We hope that you'll dive deeper into PCC's design with our Security Guide, explore the code yourself with the Virtual Research Environment, and report any issues you find through Apple Security Bounty," the post states.

In closing, Apple says it designed PCC "to take an extraordinary step forward for privacy in AI," including verifiable transparency.

The post concludes: "We believe Private Cloud Compute is the most advanced security architecture ever deployed for cloud AI compute at scale, and we look forward to working with the research community to build trust in the system and make it even more secure and private over time."

Read on AppleInsider

Comments

I'd rather see this type of cautious, measured approach than see companies throw caution to the wind and push out consumer-facing AI features that are barely early beta quality.

And yes, I'm talking about every single LLM-powered AI chatbot service for consumers. They are all alpha or early beta quality. There is nothing that remotely resembles release quality software.

Security should be first and foremost. We have seen a massive brain drain at OpenAI because the current senior management did not prioritize this. We also saw Microsoft delay its Copilot desktop rollout after a disastrous response to the original unveiling.

There are too many people scrambling to be "First" without taking any care to do things securely.

One thing is for sure: I will wait until June 2025 to install iOS 18 and Sequoia on my main devices. And then I will be sure to turn off all AI functionality initially. I plan to trial desktop AI on a separate throwaway non-admin account on my Mac.

I actually bought an iPhone 16 and have yet to make it my primary phone; it mostly sits unused on a counter. I am strongly inclined to do a factory wipe and go through setup using an alternate identity to trial the Apple Intelligence features without putting any of my personal information on the line.

Trust is earned.

Apple needs to prove to me (and security experts) that they have been thinking carefully about security in Apple Intelligence.

As I’ve said before I see all consumer-facing AI features as alpha or early beta “quality” -- not just Apple’s.

I know I will eventually use some of them. I know I can turn them all off just like I disabled Siri after an extremely short period.

We already know Apple software engineering struggles to ship quality code. They paused development for a week last fall to tackle their bug list.

I was on iOS 16 and macOS Ventura, so again I was running mature and stable OSes. I never had to endure any of the bugs they cleared late last year, since I upgraded a week before WWDC 2024. Apple’s software QA started declining about 10 years ago, which is when I started delaying upgrades, first by a month or so, but the postponements got longer and longer as the code quality continued to slide.

For what it’s worth there has been a Mac in my house for over 30 years. The only OS that I never installed on my daily driver was Crapalina. Tried it several times on an external test drive, but it was always rubbish so I went from High Sierra to Big Sur.

I have no doubt that Apple will eventually get it right, the key word being "eventually." Here in 2024, my opinion is that iOS and macOS releases are beta quality up until about the x.5 release. Since Apple offers security updates for the previous two versions of their operating systems, it's not like I'm putting myself at risk staying on the previous release. I can enjoy both stability and security by being patient for code maturity from Apple.

And it's not like I'm discriminating against Apple here. I do the same thing with Microsoft Windows. I'm running Windows 11 23H2 on a couple of systems and Windows 10 22H2 on another. I'll likely upgrade one system to the recently released 24H2 sometime around June 2025. Like Apple, Microsoft offers security patches for the previous two releases (22H2 and 23H2).

I don't think that Apple is willingly misleading shareholders (like most American adults with a retirement plan, I am an indirect shareholder), but mistakes can be made. Waiting for software maturity is one way to reduce chances of being caught in a situation that threatens personal privacy and security. There's no way anyone in 2024 can reduce that to zero percent but for sure holding back on iOS and macOS upgrades is a choice that Apple customers have at their disposal. On the vast beach of risk assessment everyone draws a different line in the sand. For me, stability, security and privacy far outweigh being the first on the block to run the new features.

It's worth pointing out that I am most certainly not alone or even an oddball exception. There is always a sizable percentage of people who stay behind on the previous operating system for months even if their devices are eligible to run the latest and greatest code. Every single operating system survey shows this.

Anyhow, returning to the discussion topic, I appreciate Apple's willingness to let third-party security researchers kick the tires. I'm not a security expert myself, so I have to rely on third parties to assess Apple's prowess in maintaining a high level of security and privacy. Apple Marketing can toot its horn, but third-party verification is more useful.

Based on what metrics? And I thought you haven’t run the betas yet, so it can’t be anecdotal. Methinks I smells a concerned troll.

I’ll tell you what my experience has been, living on the bleeding edge (by comparison). Both the iOS 16 and 17 betas, and then the releases, caused configuration, automation and communication issues for a wide range of HomeKit devices. (For reference: I don’t generally test on the home setup, and the office/home networks are physically separate — two different vendors even. Mostly for billing purposes, but also for security.)

As for general stability, I’ll note that it’s really depended not just on the OS version, but the hardware platform. iPhones X through… I’d say 14, maybe 15 always had notable tendencies to hang and reboot, mostly with betas but also with releases. Less so with iPads. With the M Series iPads, that seems to have disappeared also. Ditto for the dreaded heat loop, where either a discharge or recharge phase causes heating to the point of uncomfortableness for an extended period or a dim and shutdown. Temperature also seems less a factor, particularly cold. Heat dissipation is still an issue for phones in cradles held in sunlight. Ventilation helps, different cases help. Mounting where an AC vent can reach a unit definitely helps.

But as for other types of stability issues … I think the only one I’ve really noted in the 18, 18.1, and 18.2 releases is the occasional Camera exception that leads to a quit. Previous software/hardware combos had this also, but more often than not you’d lose the image you just shot. Not so with recent iterations.

I suppose this is all a way to say that, in my experience, iOS/iPadOS/tvOS 18.x has been waaaaaaaay more solid than any others I’ve had to test. Ditto for macOS 15.

As for any quality slips … well, the industry in general has had a lot of growing pains around QA. I see no general trend downward from 2014 (?) on. My guess is you weren’t testing or broadly using the company’s products from the ‘70s thru the Aughts (despite having a token Mac), as there are some real quality doozies in there.

So. My anecdotal and abundant experience has been that Apple’s quality has remained around an expected range that leads the industry as a whole. That’s also in line with general consumer opinion. If there’s a production specialist in the house, I’d be curious to hear from them on hardware build qualities as they see it.

Anyway, the last thing we need is the opinion of yet another weak-kneed, change averse, safety curmudgeon. I think there’s plenty of those — devs that won’t move off their circa 2015 hardware and software and the like.

What we’d need is actual company metrics, which I don’t think we’ll get. Transparency is nice to have — getting sued over what the data shows by opportunists looking for a settlement ain’t. Correct me here anyone — is there any company that does detailed public reporting on their state of quality?