Apple sued over 2022 dropping of CSAM detection features

A victim of childhood sexual abuse is suing Apple over its 2022 dropping of a previously-announced plan to scan images stored in iCloud for child sexual abuse material.

Apple has retained nudity detection in images, but dropped some CSAM protection features in 2022.

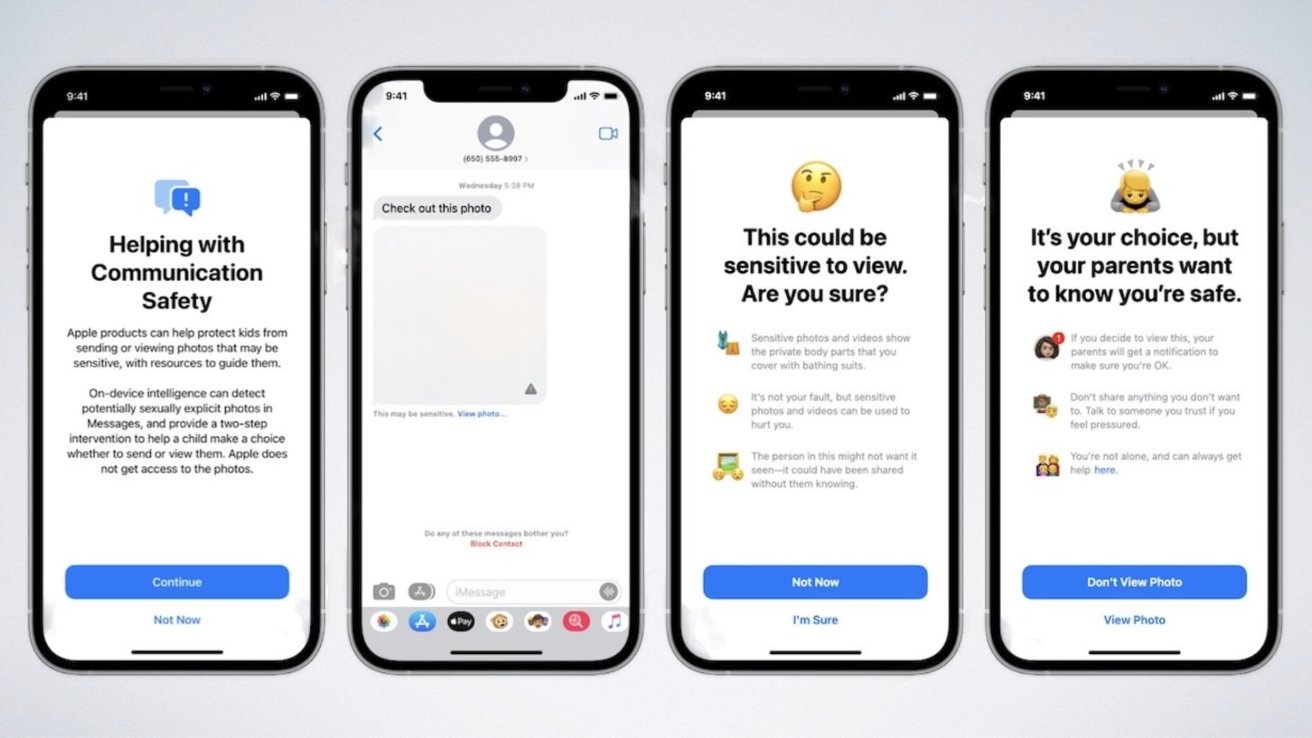

Apple originally introduced a plan in late 2021 to protect users from child sexual abuse material (CSAM) by scanning uploaded images on-device using a hashing system. It would also warn users before sending or receiving photos with algorithmically-detected nudity.

The nudity-detection feature, called Communication Safety, is still in place today. However, Apple dropped its plan for CSAM detection after backlash from privacy experts, child safety groups, and governments.

A 27-year-old woman, who was sexually abused as a child by a relative, is suing Apple under a court-allowed pseudonym over its decision to stop the CSAM-detection feature. She says she previously received a law-enforcement notice that images of her abuse had been found stored on iCloud via a MacBook seized in Vermont when the feature was active.

In her lawsuit, she says Apple broke its promise to protect victims like her when it eliminated the CSAM-scanning feature from iCloud. By doing so, she says that Apple has allowed that material to be shared extensively.

She therefore argues that Apple is selling "defective products that harmed a class of customers" like herself.

More victims join lawsuit

The woman's lawsuit against Apple demands changes to Apple practices, and potential compensation to a group of up to 2,680 other eligible victims, according to one of the woman's lawyers. The lawsuit notes that CSAM-scanning features used by Google and Meta's Facebook catch far more illegal material than Apple's anti-nudity feature does.

Under current law, victims of child sexual abuse can be compensated at a minimum amount of $150,000. If all of the potential plaintiffs in the woman's lawsuit were to win compensation, damages could exceed $1.2 billion for Apple if it is found liable.
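The $1.2 billion figure does not follow from the two numbers above on their own. As a rough check using only the figures quoted in this article, the sketch below multiplies the minimum award by the maximum number of potential plaintiffs and then works out what multiplier would be needed to reach the reported total. A roughly threefold multiplier would close the gap, but that is an inference from the arithmetic, not something stated in the text.

```python
# Figures as quoted in the article.
MIN_DAMAGES_PER_VICTIM = 150_000    # dollars, minimum compensation per victim
POTENTIAL_VICTIMS = 2_680           # upper bound on other eligible victims
REPORTED_TOTAL = 1_200_000_000      # "damages could exceed $1.2 billion"

# Simple product of the two quoted figures.
base_total = MIN_DAMAGES_PER_VICTIM * POTENTIAL_VICTIMS

# Multiplier needed to get from the simple product to the reported total.
# What legal mechanism (if any) supplies it is an assumption left open here.
implied_multiplier = REPORTED_TOTAL / base_total

print(f"Minimum damages x potential victims: ${base_total:,}")
print(f"Multiplier implied by the reported total: {implied_multiplier:.2f}")
```

On these numbers, the simple product comes to $402 million, about a third of the reported total.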

In a related case, attorneys acting on behalf of a nine-year-old CSAM victim sued Apple in a North Carolina court in August. In that case, the girl says strangers sent her CSAM videos through iCloud links, and "encouraged her to film and upload" similar videos, according to The New York Times, which reported on both cases.

Apple filed a motion to dismiss the North Carolina case, noting that Section 230 of the Communications Decency Act protects it from liability for material uploaded to iCloud by its users. It also said that it was protected from product liability claims because iCloud isn't a standalone product.

Court rulings soften Section 230 protection

Recent court rulings, however, could work against Apple's claims to avoid liability. The US Court of Appeals for the Ninth Circuit has determined that such defenses can only apply to active content moderation, rather than as a blanket protection from possible liability.

Apple spokesman Fred Sainz said in response to the new lawsuit that Apple believes "child sexual abuse material is abhorrent, and we are committed to fighting the ways predators put children at risk."

Sainz added that "we are urgently and actively innovating to combat these crimes without compromising the security and privacy of all our users."

He pointed to the expansion of the nudity-detecting features to its Messages app, along with the ability for users to report harmful material to Apple.

The woman behind the lawsuit and her lawyer, Margaret Mabie, do not agree that Apple has done enough. In preparation for the case, Mabie dug through law enforcement reports and other documents to find cases related to her clients' images and Apple's products.

Mabie eventually built a list of more than 80 examples of the images being shared. One of the people sharing the images was a Bay Area man who was caught with more than 2,000 illegal images and videos stored in iCloud, the Times noted.

Comments

I can't say about Samsung's efforts because I don't own any Samsung products, but those products using Android (from any manufacturer) probably rely on Google's anti-CSAM measures.

Luxury cars typically have more safety features or more advanced ones than basic cars. A manufacturer adds pedestrian sensing with auto-braking to avoid hitting someone who steps in front of or behind the car when a collision is likely. A person is hit by a car that does not have the pedestrian-sensing auto-braking feature. Should the person who was hit be able to sue the auto manufacturer because they chose to exclude this safety feature for whatever reason (price/cost, model differentiation, design limitations, waiting for a major redesign, supply issues, etc.)? I see no difference.

It's usually the blood-sucking lawyers who get people to ponder these brilliant ideas. After all, their main job is to use the law to line their pockets. That's a big part of my strong stance against lawsuits in general.

However, this particular lawsuit is worse because it potentially harms us all. I was against the CSAM spyware from the get-go because it cannot be made fail-safe. Some people would be wrongfully charged with crimes they didn't commit, yes, even with those promised "human reviews." This is a big reason why many were against it, not just me.

So basically, someone who was abused is abused yet again, albeit in a different way, by lawyers, who get them involved in these crazy lawsuits which have the potential to later harm a larger percentage of the population, if indeed Apple loses and is strong-armed to implement CSAM spyware.

Leave it to Americans to ALWAYS cast blame when something very bad happens to you. We need to eliminate the criminals without potentially making innocent people into criminals. Stop the endless suing!

Now lots of perfectly innocent pictures contain some level of nudity, so (again, guessing here) that is combined with where the image was sent and/or who uploaded it. If any of the recipients or senders is a child account, that plus the nudity might flag it, and then law enforcement could be contacted to obtain a court order to decrypt.

If this lawsuit succeeds, people playing the victim to a third party will go on and on. The way the Internet is today, people who host websites and advertisers like to be able to send anything and everything back and forth, so naturally they’re not gonna want a locked-down, on-the-edge type of solution. But something like this means Apple is gonna have to design something that makes individual users take some type of responsibility (by jumping through hoops loading up new programs). Not an iCloud user storing personal Photos or Pages text files online; working on the edge is fine.

I look forward to the creation of laser-focused AI agents that will eliminate junk mail, junk messages, and much of the other driftwood that’s on the Internet. The scam, advertising, and telephone companies are not gonna support the elimination of that crap. (Such a program won’t come from Google, Microsoft, or Meta; however, they will build a pseudo me-too program after Apple that claims to do something.)

This will also affect all of the other photo and text file programs made by third parties beyond Apple. Back to the edge may be coming, like it or not.

The concerns of having CSAM on our devices as part of the detection were unwarranted and based on a misunderstanding of how the system works. A potential valid concern is the probability of hash collisions. I recall Apple's response on that was that they weren't going to alert on single matches.

In a truly E2E system (iCloud photos are not unless you turn it on), hash scanning doesn't work, as Apple never sees the original content on their servers. The only way to do it is to scan on device before anything goes anywhere. Apple's original announcement around this effort was somewhat convoluted (but actually pretty smart) and didn't trigger on single matches (for the false-positive reasons you mention), but it did have to do with scanning actual content, not just hashes, which is why there's probably confusion.
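The "don't alert on a single match" idea discussed above can be illustrated with a minimal sketch. This is not Apple's design: it uses an exact SHA-256 digest as a stand-in for a perceptual image hash, and the function names, sample data, and threshold value are all hypothetical. The real proposal was considerably more involved; the only point here is that an account is flagged once the number of matches reaches a threshold, so a lone hash collision does nothing.

```python
import hashlib


def digest(data: bytes) -> str:
    # Stand-in hash: exact SHA-256 of the bytes. A real system would use a
    # perceptual hash that tolerates resizing and re-encoding (assumption).
    return hashlib.sha256(data).hexdigest()


def count_matches(images: list[bytes], known_hashes: set[str]) -> int:
    # Count how many images hash to a value in the known-hash set.
    return sum(1 for img in images if digest(img) in known_hashes)


def should_flag(images: list[bytes], known_hashes: set[str], threshold: int) -> bool:
    # Flag only when the match count reaches the threshold, so a single
    # match (or a single false positive) never triggers an alert on its own.
    return count_matches(images, known_hashes) >= threshold


# Hypothetical data: two "known" items and a small photo library.
known = {digest(b"known-image-1"), digest(b"known-image-2")}
library = [b"holiday-photo", b"known-image-1"]

print(should_flag(library, known, threshold=2))  # False: only one match
```

Raising the threshold trades slower detection for fewer false alarms, which is the trade-off the comments above are arguing about.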

Essentially, Apple has landed on the position that it will cooperate with law enforcement in existing investigations into CSAM material, but will not be the SOURCE of such an investigation.

Thank you for the correction and enlightenment.