Apple Intelligence summaries are still screwing up headlines

The UK's BBC is continuing to complain about the notification summaries created by Apple Intelligence, with iPhone users being misinformed by misinterpreted headlines.

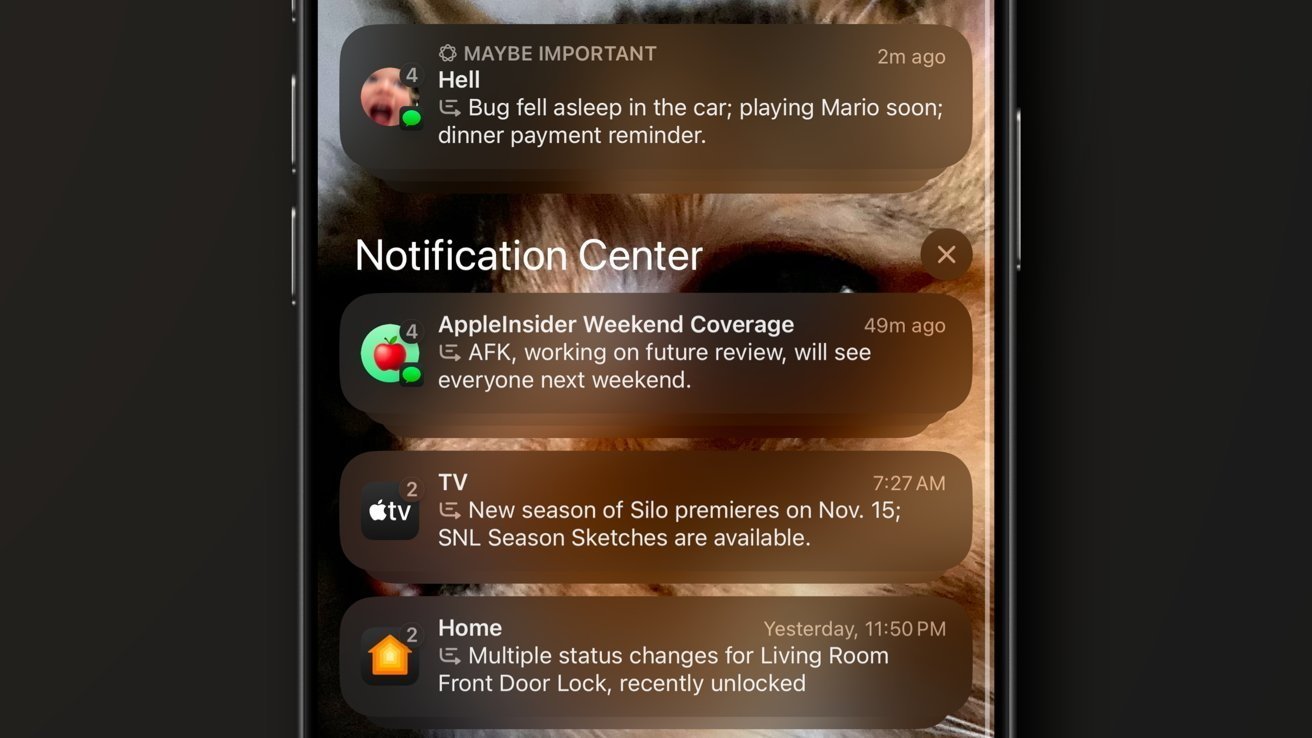

Examples of notification summaries on an iPhone

In December, the BBC complained to Apple about the summarization features of Apple Intelligence. Although the summaries are meant to save users time, they sometimes get things badly wrong, as they did with news headlines from the UK broadcaster.

In a new complaint on January 3, the BBC said it had been made aware of further summaries appearing on iPhones that were highly inaccurate.

One summary, based on a headline from Thursday evening, claimed that darts player Luke Littler had "won PDC World Championship." In reality, Littler had only completed a semi-final, with the final itself to be held on Friday night.

In a second example, headlines from the BBC Sport app were condensed into the claim that "Brazilian tennis player Rafael Nadal comes out as gay." The original headline is believed to have been about Brazilian tennis player Joao Lucas Reis da Silva, who is gay, not the Spanish player Nadal.

"As the most trusted news media organisation in the world, it is crucial that audiences can trust any information or journalism published in our name and that includes notifications," said a BBC spokesperson. "It is essential that Apple fixes this problem urgently - as this has happened multiple times."

The BBC is not the only organization complaining about the lack of accuracy from Apple Intelligence when it comes to headlines. Reporters Without Borders said in December that it was "very concerned by the risks posed to media outlets" by the summaries.

The group said the errors showed that "generative AI services are still too immature to produce reliable information for the public."

Apple has yet to comment publicly on the current complaints, but in June, CEO Tim Cook acknowledged that inaccuracies could be an issue. While the results would be of "very high quality," he admitted they may fall "short of 100%."

In August, it was reported that Apple had preloaded instructions to counter hallucinations, instances in which an AI model fabricates information on its own. Phrases such as "Do not hallucinate. Do not make up factual information" are among the instructions fed to the model when it acts on prompts.

Improving accuracy may also be difficult for Apple because, under its user privacy policies, it does not actively monitor what users see on their devices. Its preference for on-device processing wherever possible makes the problem harder still to diagnose and fix.

Read on AppleInsider

Comments

People will look back in years to come and laugh at how dumb we must have been to trust an AI to tell us the important stuff. Honestly, this will only end when people start getting killed because of this misinformation and companies start getting sued.

When BBC makes a mistake it is on BBC. Did anyone ask for Apple to make a fake news generator while using corporate logos?

Hire a person or three to write unbiased, accurate summaries based on them actually reading the stories = better solution for Apple (we expect this type of crap from Google)

Someday AI will be able to do accurate summaries. But if today isn't that day, don't use AI for a job it clearly can't yet do.

It will harden ... eventually.

But it will still be Jell-O.

Don't sweat the small stuff.

A news summary like "Brazilian tennis player Rafael Nadal comes out as gay." would get flagged as sensitive and raised for human review. Until the review is done, it can show an important sentence from the article cropped to fit the space.