Behind the scenes, Siri's failed iOS 18 upgrade was a decade-long managerial car crash

The long-delayed overhaul of Siri was hit by repeated failures to progress, with leadership problems making it harder to execute than it should've been.

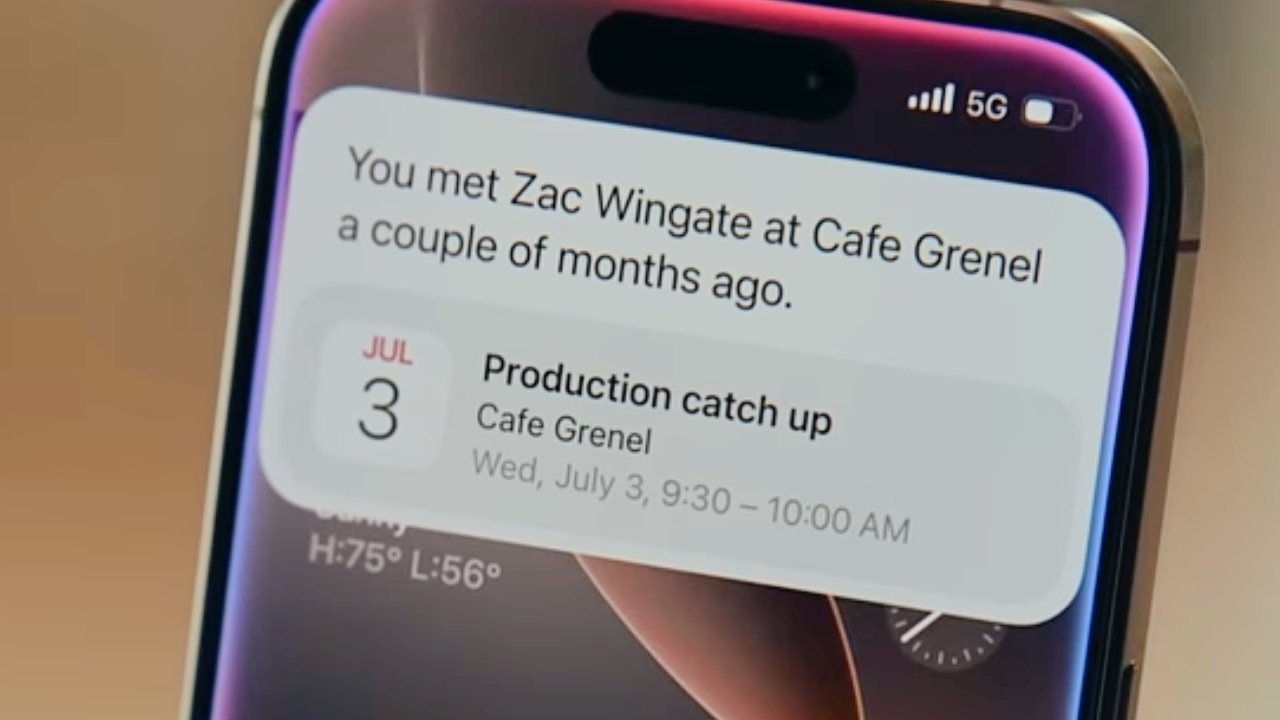

An example of a contextual query Siri will be able to answer, eventually. - Image Credit: Apple

In March, Apple admitted that its attempt to make Siri more personalized and up to date was far behind schedule. It confirmed that there were delays in getting Siri to the state where the company wanted it to be, and that it would be sorted out in the coming year.

Since that rare admission from Apple, the company has done what it can to fix the situation. This included a managerial reshuffle, pushing John Giannandrea out of the top Siri role in favor of Mike Rockwell.

It was a major event in a situation that was extremely embarrassing to Apple. However, the entire affair is something that could have been avoided, reports The Information, had Siri not fallen victim to poor leadership choices.

Siri hot potato

Multiple people who worked in the AI and software engineering groups within Apple told the report that conflicting personalities were a problem. Some who worked in the AI and machine learning group under Giannandrea said that poor leadership was at play.

The sources also identified Robby Walker, who worked under Giannandrea, as being one of the reasons for the issues, due to an apparent lack of ambition and willingness to take risks on future Siri designs.

The employees also referred to Siri as a "hot potato" within Apple, due to it being passed between the different teams over the years. With the latest reshuffle putting Siri under the oversight of software boss Craig Federighi, they have some hope that favorable changes will be made.

A lengthy Siri issue

The problems getting Siri modernized started years ago, in 2018, when Giannandrea moved from Google to work on Apple's new AI group. At a time when Siri was beginning to stagnate, Giannandrea took an interest in managing the digital assistant.

Before then, engineers working on Siri felt like second-class citizens within Apple. They were frustrated that the software engineering team, which controlled iOS updates, failed to prioritize Siri fixes. Those software engineers, in turn, felt the Siri team wasn't able to keep up with new features being developed in their group.

Giannandrea's plan was to make Apple's own AI-based voice assistant, using a playbook he gleaned from Google. He believed Apple needed better training data and had to do better at web scraping for answers.

However, when urged to shake up Siri's leadership, he declined to do so.

Walker's safe work

The lack of risk-taking by Walker was a problem, the group of sources added. Walker was believed to have downplayed efforts to swing for the fences, focusing instead on other, less meaningful metrics.

This apparently included celebrating small wins, like reducing the response time for user queries. His work to remove "Hey" from "Hey Siri" also took more than two years to pull off, with little real benefit in the end.

In 2023, Walker also dismissed one team's attempt to use LLMs to give Siri emotional sensitivity, such as detecting whether a user is in distress. He said he would rather focus on the next Siri release than commit resources to the effort.

The team pursued the project anyway, through the software engineering group's safety and location team, without his knowledge.

Increased tension

The software engineering group and the AI team had a dysfunctional relationship, with respective leaders Federighi and Giannandrea having dramatically different managing styles.

Resentments also built up over differences in pay, the speed of promotions in the AI group, and vacation periods.

Eventually, Federighi's groups began assembling hundreds of machine learning engineers to work on their own models, separate from the main AI team. This included building demos to voice-control apps without Siri, which the Siri team didn't appreciate.

An attempt to introduce voice control for apps on the headset that would become the Apple Vision Pro was also fraught with problems. Hostility between Walker and others in the group, as well as the general slowness of the Siri group, once again became a major point of friction.

When ChatGPT was released in 2022, the AI group didn't respond with any real urgency, the engineers claim. By contrast, the software engineering group was far more interested, presenting Federighi with demos of what could be accomplished.

In 2023, Apple's managers eventually said that engineers couldn't include external models in Apple products. Instead, building the models was the responsibility of the AI group.

Those in-house models also apparently didn't perform as well as OpenAI's offerings, employees explained.

The current shakeup

Despite years of problems, the new arrangement, in which Federighi's software engineering group oversees the AI work led by Rockwell, should be a fruitful one for Siri's progress.

Federighi is viewed as having more knowledge of technical details than many of the people under him. He has also told Siri's machine learning engineers to do whatever they need to build the best AI features, even if that means using open-source models from other companies.

Meanwhile, Rockwell, who has a good track record within the company, is viewed as someone with vision, which Walker certainly lacked.

Read on AppleInsider

Comments

"Among members of the Siri team at Apple, though, the demonstration was a surprise. They had never seen working versions of the capabilities, according to a former Apple employee. At the time, the only new feature from the demonstration that was activated for test devices was a pulsing, colorful ribbon that appeared on the edges of the iPhone’s screen when a user invoked Siri, the former employee said."

In other words, the demo was faked and Siri had no such capabilities. It's good to see Federighi jump in and take point, particularly in relaxing the privacy focus just a tiny bit and allowing open-source third-party models. New leadership from the executive team: kudos. Perhaps something will actually be developed now.

Giannandrea came from Google, but he was never trained to think the Apple way. One of the reasons Apple worked so well for so long is that it thought different, a mindset Steve Jobs instilled deeply in the company. That way of thinking shouldn’t be assumed; it must be taught. If Apple wants to keep its soul intact, it needs to train new people in the culture that made it great. I hope they learn from this.

Cook never should have structured the org such that AI and software are separate organizations. AI, ML and LLM algorithms seem pretty intricately tied to software. If there needed to be a Siri service owner, shouldn't it have been Product Marketing (Joswiak)? Second, Giannandrea was not good at his job. This is me looking from the outside. Maybe he was a good leader, but his org hasn't been very productive. I don't know who's responsible, but my experience with the software keyboard has not been good. It's been passable, at best. At times, its key-tapping and word-prediction performance has regressed. Was that ML task under Federighi or Giannandrea? Either way, it's now an ML-based keyboard that doesn't seem any better than before.

Then, the overarching umbrella here is LLMs and chatbots. Everyone is desperate to create AI features. Most of them are of dubious benefit, but everyone has to be in the race. That's basically not Apple's MO, where they try to create beneficial features at their own pace. The company is in conflict with itself with AI. Not a good place to be.

Giannandrea had a good track record at Google so clearly he's smart. But being a great researcher is not the same as being a manager who will be rightfully judged by making product deadlines.

This isn't entirely Giannandrea's fault. Ultimately, Apple failed to put someone in charge of maintaining and improving Siri after acquiring it, and now that hopping aboard the AI bandwagon is a top priority, a decade's worth of neglect has come back to bite them hard. Apple created its current conundrum by mismanaging Siri for years before handing Giannandrea the torch.

They cannot fix Siri in a year and it's clear as day now.

My guess is they tried and belatedly came to the conclusion that they have to rewrite Siri from scratch to interface correctly with current and emerging AI technologies. This is far harder for Apple than most other companies because Apple has placed security and privacy as two pillars of their core values.

The peanut gallery who has been whining for years that Siri was falling behind the competition was right all along.

Someday Apple will put out a useful Siri but it will trail the competition for several more years. It's notable that Apple Maps has never caught up to Google Maps especially when you look at the two side-by-side in many places outside of the USA.

My hunch is that Apple is rewriting Siri from scratch with text as the primary input method not unreliable voice. After all, LLMs are based mostly on text data so there's no surprise that LLM-powered AI chatbots such as ChatGPT, Claude, Perplexity, Gemini, etc. have all debuted with text-centric interfaces.

I presume it's also faster to deploy AI chatbots in other languages if text, rather than voice, is the primary input method.

Even in 2025, when I find myself navigating through some company's telephone customer service system, I will always lean toward keypad input (account numbers, menu selections, etc.) rather than voice commands when offered the choice. Keypad entry is still miles better in accuracy than voice.

I remember using some startup's voice-operated TV remote control back in the late Eighties or early Nineties. It was terrible and, unsurprisingly, the startup failed. My experience with voice commands in 2025 shows very little real progress. Voice-to-text transcription is barely any better today than it was 30 years ago. It seemingly works only part of the time for part of the users. It really needs to be 99.9% accurate for pretty much everyone (speaking the supported language) for it not to be a waste of time.

My guess is that accurate realtime voice-to-text transcription is still about 20 years away. It might happen in my lifetime or maybe again it might not. But I'm certainly not stupid enough to hold my breath for it.

Every time I go to my clinic, the doctor asks if they can use an AI medical notes transcription service. I always decline, and I will likely decline until the day I die. Accuracy is just one concern. Privacy and data security are two others.

If anyone from Apple is reading this (which I highly doubt), I am not singling your company out. Basically everyone sucks at voice-to-text transcription in 2025, regardless of market capitalization, and regardless of whether it's realtime or the system can spend some time processing the input. It is ALL rubbish.

What was shown in that now-deleted advertisement video was pure science fiction or so horribly unreliable that it's worthless for daily use by Joe Consumer. Bring us an AI assistant that we can count on. And "fake it until you make it" is not a sound policy. We (well those amongst us who are sane) don't have the time for that sort of nonsense.

Too much of this AI stuff is released to the public half-baked. Do you know what a half-baked loaf of bread is? It's inedible. At least Apple labels Apple Intelligence as "Beta", because it's light-years away from being release quality right now.

However, John failed to answer the question of how AI should be rolled out at Apple. He did not deliver any helpful hardware with AI features.

He is a smart guy for sure, but does he fit in at Apple? Questionable.

But this issue shows the general problem inside Apple.

They have failed with Vision Pro.

They have failed with Apple Car.

They have failed with Apple CarPlay.

Recently, we have seen more failures, or at least more mediocre product features.

Apple was reborn in the middle of the 2008 financial crisis.

There has always been a big hit in the middle of a crisis.

Now we have a crisis. Who knows? Maybe another company will bring out a shocking product that eats into Apple's market share, or one so revolutionary that it shakes up the entire mobile sector.

In the middle of this tariff crisis, Cook will be busy optimizing his supply chain. At the same time, he needs to care about Siri.

Apple is facing a lot of escalated issues at the same time, each of which has long been a ticking time bomb. Now all the bombs have exploded.

It does not look good for Apple.

My take is that Apple's Siri will always be behind.

Remember that Apple sells more iPhones than any other platform device. Apple Intelligence needs to work on the iPhone first. They don't need to release new specific hardware, the Neural Engine has been on the iPhone since 2017, almost eight years ago. Apple already knows this which is why Apple Intelligence features trail on macOS Sequoia compared to iOS 18.

Remember how Steve described the iPhone: "the computer for the rest of us." He was absolutely, 100% correct.

The world does not need some dorky Star Trek-like AI communicator badge. We have phones. Smart glasses will never have the same consumer acceptance and smart watches have too many limitations (e.g., cameras).

Voice assistants have improved, continue to improve and will improve well into the future because, ultimately, things like Jarvis are the future.

Voice is a key interface in smart vehicles and any situation where your hands are busy or when you are away from other input mechanisms.

Luckily, in AI, natural language processing and generation are seeing incredible improvements on a daily basis. Those are the basics of getting something into and out of voice assistants. In between, we have the query processing functions, 'reasoning' and the data pool.

It's all coming along at a healthy pace and even if things still aren't perfect they are very usable. That can't be said of Siri.

Siri has basically been in stagnation mode for years and we always knew things weren't as good as they should be. For example, Siri should have been universal across all Apple devices and not give the impression of there being multiple Siri 'personalities' depending on the device you are aiming the query at. Rumours of management issues have also been constant.

On the purely AI front it's clear to me that Apple was caught well off guard and that is a management issue more than a technical issue.

This past weekend I had a friend over and she's been off work for two years battling with some lower limb issues. She is still being paid but her national insurance contributions aren't being paid by her employer so she now has payment gaps. She will have to cover that herself but her local union and the social security office seem perplexed by her current situation. It is all quite complex so we decided to throw a voice query at Perplexity Pro and see what it returned. A bit of a wild card but we were running around in circles.

It took less than five seconds to answer the query in a clear and concise manner including the necessary timeframes, penalisation situation and even returned the name of the government form she had to use to get things looked at including the postal address and internet links.

I don't use Perplexity Pro as a voice assistant, obviously, but that is where we are going with these LLMs, which are ever-improving, and we are seeing new advances released almost daily. Dream 7B (Diffusion Reasoning Model) just got a lot of attention. Google one day, Meta another, Microsoft...

Not perfect but absolutely usable for many people. Siri isn't in the same league.

I asked 6-7 AI chatbots when the Super Bowl kickoff was about ten days before the event. Not a single one got the simple question correct because AI has zero contextual understanding, no common sense, no true reasoning, no design, no taste, nothing. It's just a probability calculator. They all just coughed up times from previous Super Bowls. They can't even correctly process a question that a second grader could understand.

About six weeks later, I asked 3 AI chatbots to fill out an NCAA men's March Madness bracket the day after the committee finalized the seeds. All three of them failed miserably. They all fabricated tournament seeds, and one was so stupid that it filled out its bracket of fake seeds with *ZERO* upsets. It predicted every higher seed to win its game.

Appalling.

I stopped after three chatbots because going through another 5-6 is just a waste of time. Asking questions to 7-8 chatbots and hunting for the correct/best response is NOT sustainable behavior. IT'S A BIG WASTE OF TIME and that's what we have in 2025. Worse, each AI inquiry uses an irresponsibly large amount of water and electricity.

These are just two minor examples that show how little progress LLM-based AI has made. Advancements have stalled. Did I pick two questions that are unanswerable for today's AI chatbots? Well, they were two very, Very, VERY common topics at the time. It's not like I asked AI chatbots to cure cancer, solve world hunger or reverse climate change (which is probably what they should be working on).

It's trivial to show a couple of examples where AI works, or a couple more where it doesn't. Anyone can cherry-pick specific examples to advance their opinions. However, the key is for any given AI to be reliable >99.99% of the time. If you asked a human personal assistant to do three things every day (for example, pick up dry cleaning, send a sales contract to Partner Company A, and summarize this morning's meeting notes) and they consistently failed at one of the three, you'd fire them within a week. A previous article said that Apple was seeing only a 60% success rate for Siri with Apple Intelligence. That's way too low, and none of their competitors are anywhere close to 90%, let alone 99%.

Here's a big part of the problem in 2025: no one knows what questions AI chatbots can handle and what they will stumble at. It's all a big resource-draining crapshoot. Again, this is not sustainable behavior. My guess is in the next 2-3 years we will see some very large AI players exit the consumer market completely. More than a couple of big startups will run out of funding and fold.

Worse, AI chatbots are utterly unembarrassed by giving out bad advice, like eating rocks or putting glue on pizza. They also have apparently zero ability to discern sarcasm, irony, parody, or other jokes in their datasets. If I write "Glue on pizza? Sounds great. You'll love it." online some AI chatbot's LLM will score that as an endorsement for that action even though any sane person would know I'm joking.

That's a fundamental problem with today's LLMs. They "learn" from what they find online. And computer scientists have a very old acronym for this: GIGO (Garbage In, Garbage Out).

And I'm not all that convinced that the engineers who program consumer-facing LLMs are all that perturbed when their precious baby makes extremely public mistakes. All capped off by hubris-laden AI CEOs who swear that AGI is coming next year. Sorry guys, "fake it until you make it" is not a valid business plan. Go ask Elizabeth Holmes. Scratch that, don't ask her. Ask former Theranos shareholders.

You can say that 2025 LLMs have more parameters than before but based on a few simple queries there is very little real progress in generating correct answers repeatedly, consistently, and reliably. All I can surmise is that AI servers are just hogging up more power than before while spewing out more nonsense. That's GIGO in action, running in a bigger model on larger clusters of Nvidia GPUs in more data centers. But it's still GIGO.

I adamantly believe that LLMs and other AI technologies have fabulous uses in enterprise and commercial settings, especially when the data models are seeded with specific, high-quality, verifiable and professionally qualified knowledge. But from a consumer perspective, 98% of the tasks on consumer-facing AI are just a massive waste of time, electricity and other resources. If consumer-facing AI doesn't make any headway toward 99.99% accuracy, at some point the novelty will wear off and Joe Consumer will move on to something else. The only people using AI will be some programmers, scientists and data analyst types in specific markets (finance, drug research, semiconductor design, etc.).

Sure, AI can compose a corporate e-mail summarizing points made in this morning's staff meeting but it doesn't actually make the user any smarter. It just does a bunch of tedious grunt work. If that's what consumer expectations of AI end up being, that's fine too.

I'm pretty sure that some of Apple's senior management team finally realize that consumer-facing AI has a high risk of turning into an SNL parody of artificial intelligence in the next couple of years. Not sure if anyone over at OpenAI gets this (and yes, ChatGPT was one of the AI assistants that fabulously failed the Super Bowl and March Madness queries).

More and more OEMs are refusing to support CarPlay. Look at GM. Mercedes-Benz has been backing its own MBUX.

It is ridiculous that Apple introduced the new version of CarPlay back in 2022, yet to this day it is still not available in Porsches.

In the end, it is not about AI or not AI, but rather the user perception of Siri improving. Right now the perception is that Siri has stagnated and is still failing at common tasks, even after 5 years, and that it hasn’t improved in its fun, gimmicky aspects either.

at Apple about this. I blame the overall leadership team.

I'm not interested in the smart Siri they showed off. The only feature I want added is the ability to ask it a question and get an answer instead of it saying “I found this on the web”. If I ask “are onions safe for dogs?”, I just want an answer spoken to me based on a web search, like Google Assistant does, with some results displayed on screen should I wish to view them on the web.

Google Assistant offline capabilities:

Basic Controls: You can use voice commands to control your phone, including setting timers, playing music, controlling smart home devices, and using Voice Access commands.

Simple Reminders: You can set basic reminders without an internet connection.

Offline Voice Input: You can use Google Assistant for offline voice input in supported apps, for example, the dictated message replies you mentioned.