When you report bugs on iOS, some content may be used for AI training

If you decide to report a bug on a beta version of iOS, you now apparently have to let Apple use the uploaded content for Apple Intelligence training with no way to opt out.


On Monday, Apple announced its plans for a new opt-in Apple Intelligence training program. In essence, users can let Apple use content from their iPhone to train AI models. The training itself happens entirely on-device, and it incorporates a privacy-preserving method known as Differential Privacy.

Apple took measures to ensure that no private user data is transmitted for Image Playground and Genmoji training, as Differential Privacy introduces artificial noise. As a result, individual data points cannot be traced back to their source.

Even so, some users are unhappy about the opt-in AI training program. While Apple said that it would become available in a future iOS 18.5 beta, one developer has already noticed a possibly related change to the Feedback app.

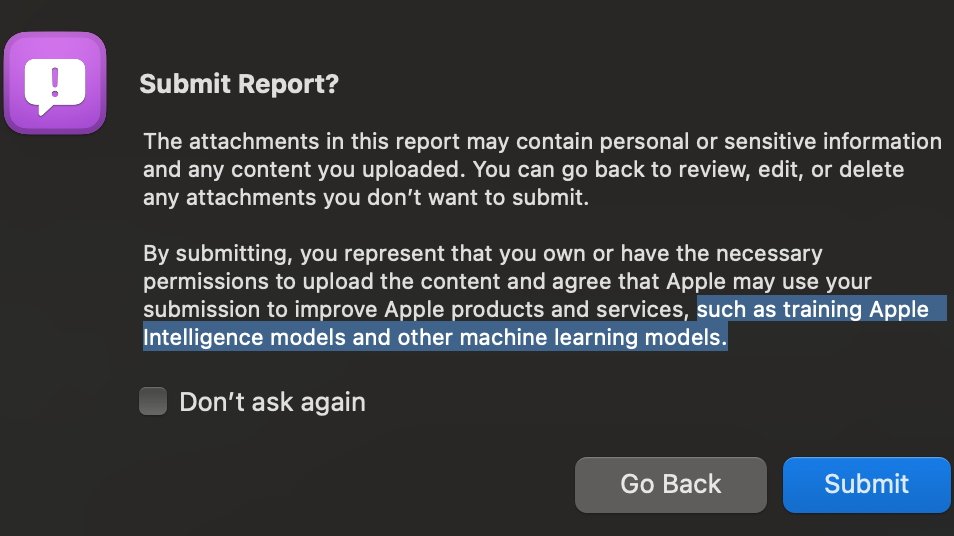

In a social media post, developer Joachim outlined a new section of Apple's privacy notice in the Feedback application. When uploading an attachment as part of a bug report, such as a sysdiagnose file, users now need to give Apple consent to use the uploaded content for AI training.

The privacy notice of the Feedback app now references AI training.

"Apple may use your submission to improve Apple products and services, such as training Apple Intelligence models and other machine learning models," the notice reads, in part.

The developer who spotted this addition criticized Apple for not including an opt-out option, saying that the only way users could opt out was by not filing a bug report at all.

They blasted Apple, expressing frustration that the iPhone maker decided to "hide it in the other privacy messaging stuff," and made it very clear that this is something they did not want.

Still, this is only one developer's reaction, with a few others chiming in with replies. It remains to be seen whether other devs will echo the sentiment, but it's likely given that there's no apparent opt-out button.

Anyone who wants to file a bug report will have to consent to Apple's AI training, and people will understandably be upset, even with privacy-preserving measures in place.

You can opt out of AI training, but not when reporting bugs

As time goes on, Apple's AI training program will expand to other areas of the iPhone operating system beyond bug reporting. The company wants to use Differential Privacy-based AI training for Genmoji, Image Playground, and Writing Tools.

It's possible to opt out of Apple's AI training program, but not if you want to report bugs on a beta version of iOS.

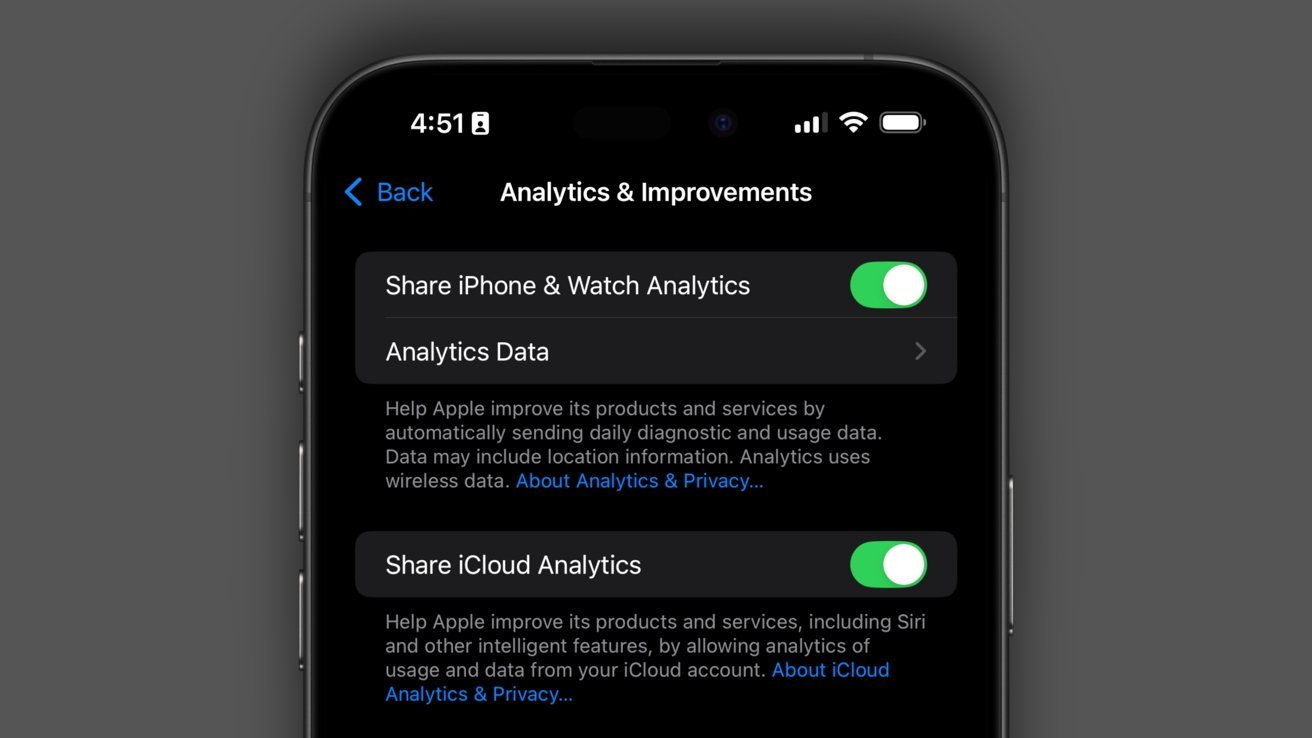

While nothing has yet been implemented on that front, users are able to opt out of the on-device Apple Intelligence training program by turning off analytics in Settings.

This can be done by opening Settings, scrolling down and selecting Privacy & Security, then Analytics & Improvements. Those who wish to opt out can do so by turning off the "Share iPhone & Watch Analytics" setting.

There appears to be no way for developers to opt out of training Apple's AI with their bug reports at this time.


Comments

Has this claim been validated? The dialog text specifically says the user can "delete any attachments" they don't want to submit, so wouldn't that include attachments related to the AI training, while still submitting the bug report?

Seems like opportunistic reporting here. Please try this yourself and let us know exactly what the situation is.

My experience over many years is mostly: no!

After all, a lot of the debugging data does say what the user was doing at the time and some of this might not be desirable to let idiot AI models parse through.

Just the fact that it generated some discussion and hesitation amongst AppleInsider forum participants is really enough to make having an AI training opt-out a necessity.