Jony Ive and Laurene Powell Jobs say 'humanity deserves better' from technology

As Jony Ive's AI startup is bought by OpenAI, he and investor Laurene Powell Jobs talk about collaboration, and how technology has damaged society as well as benefited it.

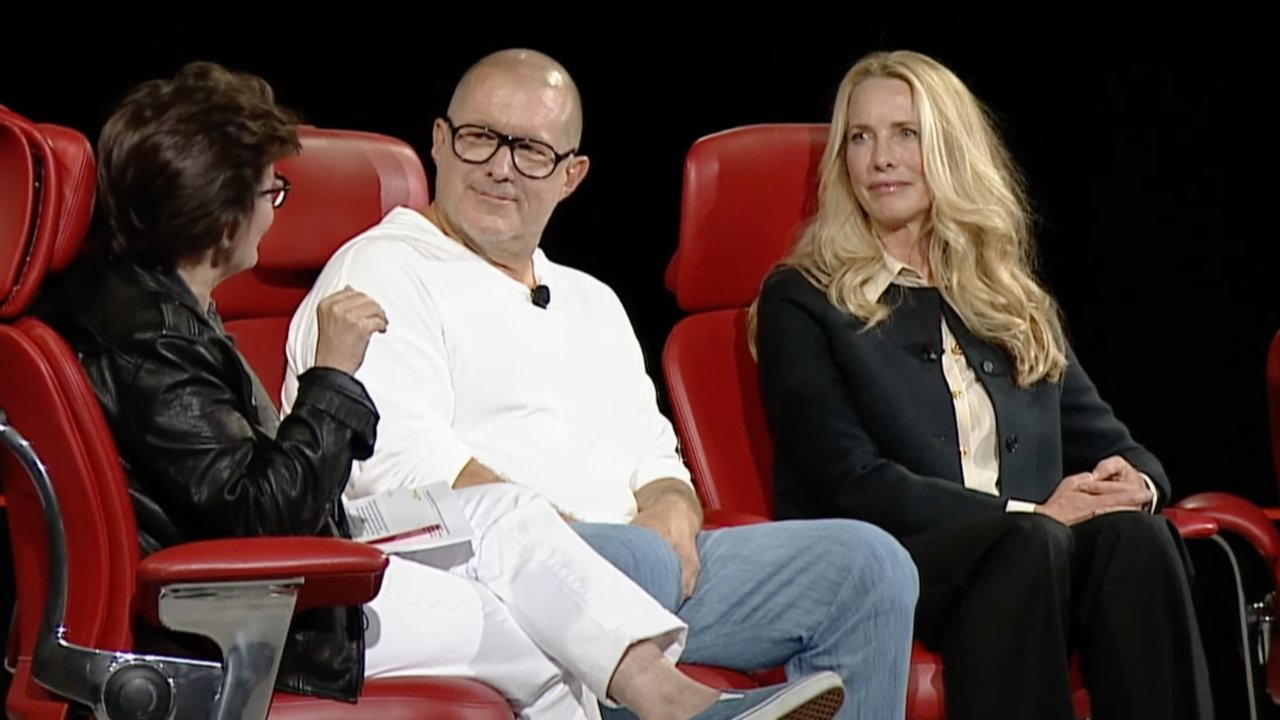

Jony Ive (center) and Laurene Powell Jobs (right) in 2022 -- image credit: Recode

Jony Ive and Sam Altman's startup "io" was bought in May 2025 by Altman's company OpenAI, and is working on a new AI device. Before the acquisition, Laurene Powell Jobs invested in "io," and in a new Financial Times interview with both of them, Ive reveals that she has been crucial to his post-Apple work, including the founding of his own company, LoveFrom.

"If it wasn't for Laurene," said Jony Ive, "there wouldn't be LoveFrom."

Alongside speaking of how they first met in the 1990s at Powell Jobs's and Steve Jobs's house, both also talked about how technology has not always been a force for good.

"We now know, unambiguously, that there are dark uses for certain types of technology," said Powell Jobs. "You can only look at the studies being done on teenage girls and on anxiety in young people, and the rise of mental health needs, to understand that we've gone sideways."

"Certainly, technology wasn't designed to have that result," she continued. "But that is the sideways result."

Ive echoes that point about how technology is usually developed with positive aims, yet it has gone wrong, or been misused. As a designer of the world-changing iPhone, Ive includes his own work in this.

"If you make something new, if you innovate, there will be consequences unforeseen, and some will be wonderful and some will be harmful," he said.

"While some of the less positive consequences were unintentional, I still feel responsibility," continued Ive. "And the manifestation of that is a determination to try and be useful."

Neither Ive nor Powell Jobs would be drawn on details of what the new OpenAI device will be, but Powell Jobs says she is following its development closely. "Just watching something brand new be manifested, it's a wondrous thing to behold."

The full interview also touches on Ive's personal investment in redeveloping parts of San Francisco, and Powell Jobs rescuing the San Francisco Art Institute from bankruptcy. It also covers the long-running friendship between the two.

"It's funny... as I've got older, to me, it's [about] who, not what," said Ive. "The very few precious relationships become so increasingly valuable, don't they?"

Separately, Ive and Powell Jobs -- together with Tim Cook -- launched the Steve Jobs Archive in 2022.


Comments

Yeah... "Humanity deserves better", but with Sam Altman? With OpenAI, which is not open source?? Data collection for better humanity??

This is a s*it show.

Here's the thing. Current LLM AI appears to be combining two relatively recent technological developments to essentially do what Eliza did a half century ago. Computational speed combined with terabytes of data is used to brute-force probabilistic mimicry of human language and thought. What's got everyone fooled is the fact that the mimicry is pretty good. What comes out of that process is something that looks like something a human thought and said or wrote. There is, however, no thought. It's an illusion. It is a much larger database doing Eliza's trick of coyly responding with "How does [regurgitate user input] make you feel?"

The tell, of course, is the fact that, despite the use of all the internet's data and massive computational power, these things still produce output that includes "hallucinations" that an average human with nominal subject-matter knowledge can spot.

Until such point that AI can be trained on relatively small amounts of data, you can be confident that it's all just advanced mimicry. It's not evident that Apple is doing that, and it's abundantly clear that the other well-known AI projects (including whatever it is Ive and Altman are up to) are still just brute-force mimicry. There's a utility and place for that, but none of it is really the paradigm shift or the impending robot overlords that the hype says is already here.

It seems Apple's targeting of the open web makes it the most conservative of the AI groups, and technically the most ethical, though arguments can be made that they shouldn't have bothered at all, to avoid the conundrum. However, Apple went a step further and spent piles of money on licensing resources.

So, Apple's AI data gathering and training base is a combination of public data and paid content. As far as I can tell, they're the only company that went so far out of its way to try and build its AI ethically.

Now they do it on a studio floor. And sadly, without the port.

So much said with authority, while possessing little to no clue about something that hasn't been announced.