Run ChatGPT-style AI on your Mac with OpenAI's new offline tools

One of the two new open-weight models from OpenAI can bring ChatGPT-like reasoning to your Mac with no subscription needed.

New models from OpenAI

On August 5, 2025, OpenAI launched two new large language models with publicly available weights: gpt-oss-20b and gpt-oss-120b. These are the company's first open-weight language models since GPT-2 in 2019.

Both are released under the Apache 2.0 license, which allows for free commercial use and modification. Sam Altman, CEO of OpenAI, described the smaller model as the best and most usable open model currently available.

Altman also said the new models deliver reasoning performance comparable to o4-mini and o3-mini, both of which are part of OpenAI's proprietary lineup.

The move follows growing pressure from the open-source AI community, particularly as models like Meta's Llama 3 and China's DeepSeek continue to gain attention. OpenAI's decision to release these models now is likely a response to that shift in momentum.

System requirements and Mac compatibility

OpenAI says the smaller 20 billion parameter model works well on devices with at least 16 gigabytes of unified memory or VRAM. That makes it viable on higher-end Apple Silicon Macs, such as those with M2 Pro, M3 Max, or higher configurations.

The company even highlights Apple Silicon support as a key use case for the 20b model. The larger 120 billion parameter model is a different story.

OpenAI recommends 60 GB to 80 GB of memory for the 120 billion parameter model, which puts it well outside the range of most consumer laptops or desktops. Only powerful GPU workstations or cloud setups can realistically handle it.

The 20b model can run well on many Apple and PC setups. The 120b model is better suited for researchers and engineers with access to specialized hardware.
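As a quick way to check a Mac against the 16 GB figure quoted above, here is a minimal sketch. It assumes macOS, where `sysctl -n hw.memsize` reports installed memory in bytes. The function names and the `MIN_GB_20B` constant are illustrative and do not come from OpenAI.

```python
import platform
import subprocess

# Threshold quoted in the article for the 20b model (an illustrative constant).
MIN_GB_20B = 16


def total_memory_gb():
    """Return installed memory in GiB on macOS, or None on other systems."""
    if platform.system() != "Darwin":
        return None
    result = subprocess.run(
        ["sysctl", "-n", "hw.memsize"],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip()) / 1024**3


def can_run_20b(memory_gb, minimum_gb=MIN_GB_20B):
    """True when the reported memory meets the quoted minimum."""
    return memory_gb is not None and memory_gb >= minimum_gb


# Example: can_run_20b(total_memory_gb())
```

Meeting the threshold only says the model should load. Other apps share the same unified memory, so real headroom will be smaller.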

Performance and developer options

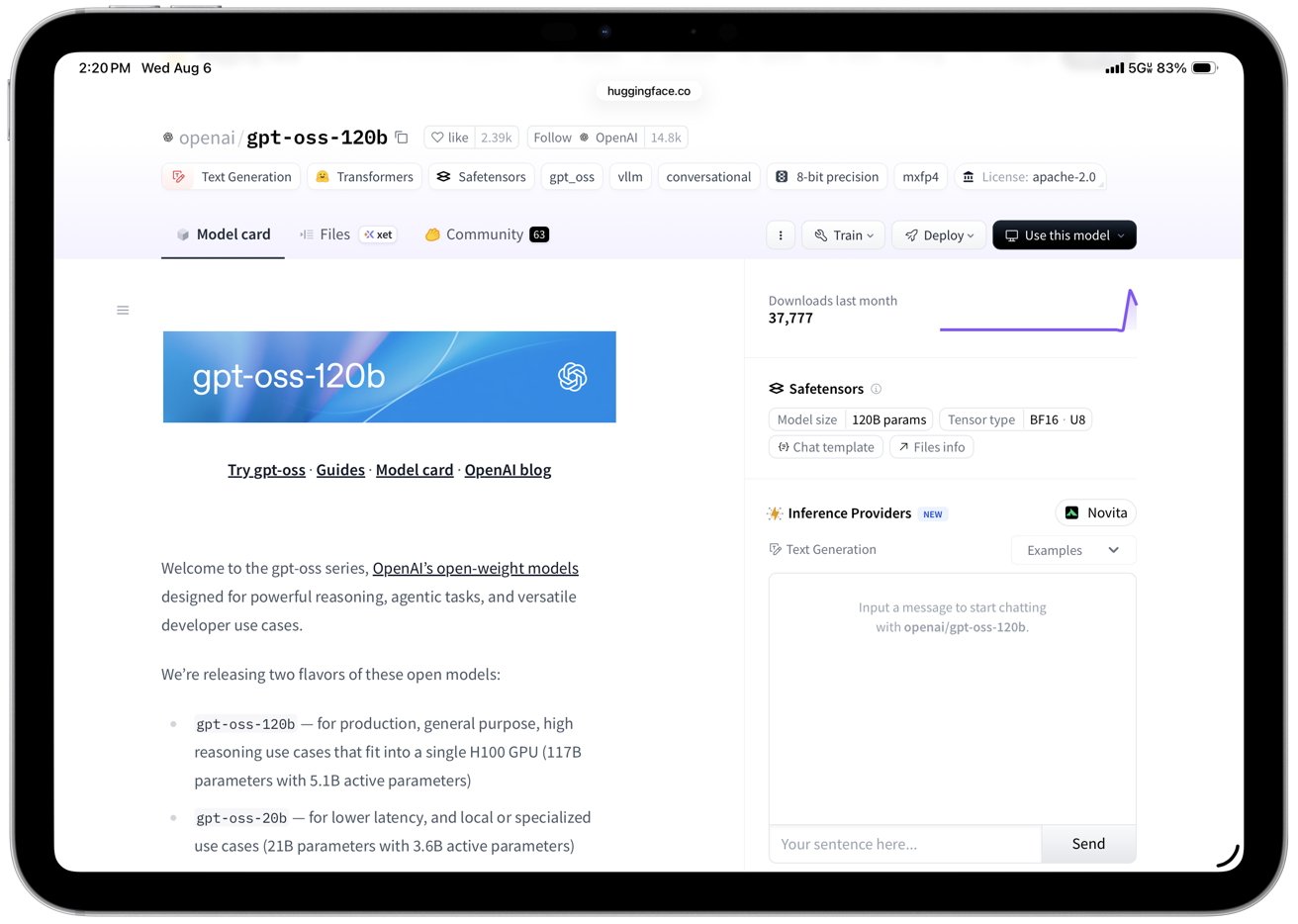

The gpt-oss models support modern features like chain-of-thought reasoning, function calling, and code execution. Developers can fine-tune them, build tools on top of them, and run them without needing an internet connection.

OpenAI model on HuggingFace

That customization opens new possibilities for privacy-focused apps, offline assistants, and custom AI workflows. OpenAI has provided reference implementations across several toolkits.

Developers can run the models using PyTorch, Transformers, Triton, vLLM, and Apple's Metal Performance Shaders. Support is also available in third-party tools like Ollama and LM Studio, which simplify model download, quantization, and interface setup.

Mac users can run the 20b model locally by using Apple's Metal framework and the unified memory built into Apple Silicon. The model's weights already ship quantized in MXFP4, a 4-bit format that reduces memory use and speeds up inference with little loss in output quality.
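The memory figures quoted earlier follow roughly from the parameter counts and the 4-bit weight format. The sketch below is back-of-the-envelope arithmetic only. It uses the nominal 20 billion and 120 billion figures from this article, assumes every weight is stored at the stated bit width, and ignores the context cache and runtime overhead, so the results are lower bounds rather than measurements.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Approximate weight storage in decimal gigabytes.

    Assumes all parameters use the same bit width, which is a
    simplification; real checkpoints keep some tensors at higher precision.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for name, params in [("20b", 20), ("120b", 120)]:
    four_bit = weight_memory_gb(params, 4)
    sixteen_bit = weight_memory_gb(params, 16)
    print(f"{name}: ~{four_bit:.0f} GB at 4-bit, ~{sixteen_bit:.0f} GB at 16-bit")
```

At 4 bits the weights alone come to about 10 GB and 60 GB, which is consistent with the 16 GB and 60 GB to 80 GB recommendations once working memory is added on top.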

It still takes a little technical work to set up, but tools like LM Studio or Ollama can help make that process easier. OpenAI has also released detailed model cards and sample prompts to help developers get started.
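For readers who go the Ollama route, a minimal sketch of sending a prompt to the local model from Python might look like the following. It assumes Ollama is installed and running on its default port (11434), and that the model has already been pulled under the tag `gpt-oss:20b`. The helper names `build_request` and `ask` are illustrative. Only the standard library is used.

```python
import json
import urllib.request

# Assumptions: default local Ollama endpoint and the 20b model tag.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gpt-oss:20b"


def build_request(prompt, model=MODEL_TAG, url=OLLAMA_URL):
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the generated text.

    Requires a running Ollama server; nothing leaves the machine.
    """
    with urllib.request.urlopen(build_request(prompt)) as response:
        return json.loads(response.read())["response"]


# Example (needs Ollama running locally):
# print(ask("Summarize unified memory in one sentence."))
```

Because the request goes to `localhost`, this works offline once the model has been downloaded.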

What it means for AI developers and Apple users

OpenAI's return to open-weight models is a significant shift. The 20b model offers strong performance for its size and can be used on a wide range of local hardware, including MacBooks and desktops with Apple Silicon.

The 20b model gives developers more freedom to build local AI tools without paying for API access or depending on cloud servers. Meanwhile, the 120b model shows what's possible at the high end but won't be practical for most users.

It may serve more as a research benchmark than a day-to-day tool. Even so, its availability under a permissive license is a major step for transparency and AI accessibility.

For Apple users, this release provides a glimpse of what powerful local AI can look like. With Apple pushing toward on-device intelligence in macOS and iOS, OpenAI's move fits a broader trend of local-first machine learning.

Read on AppleInsider

Comments

I really hope my next Mac is a 16GB one with 512GB of Storage

It runs very smoothly, definitely takes up about 60 for the App, but unlike running Llama3.3 (70b), the fan never kicks in. As much as I'd love to have a Studio Mac with an M3-Ultra, it is not necessary, as it works fine on a suitably equipped MBP.