Future Apple Vision Pro could take commands by just reading your lips

Apple has been researching how a future Apple Vision Pro could detect mouth movements so that it can take commands or dictation purely through lip reading.

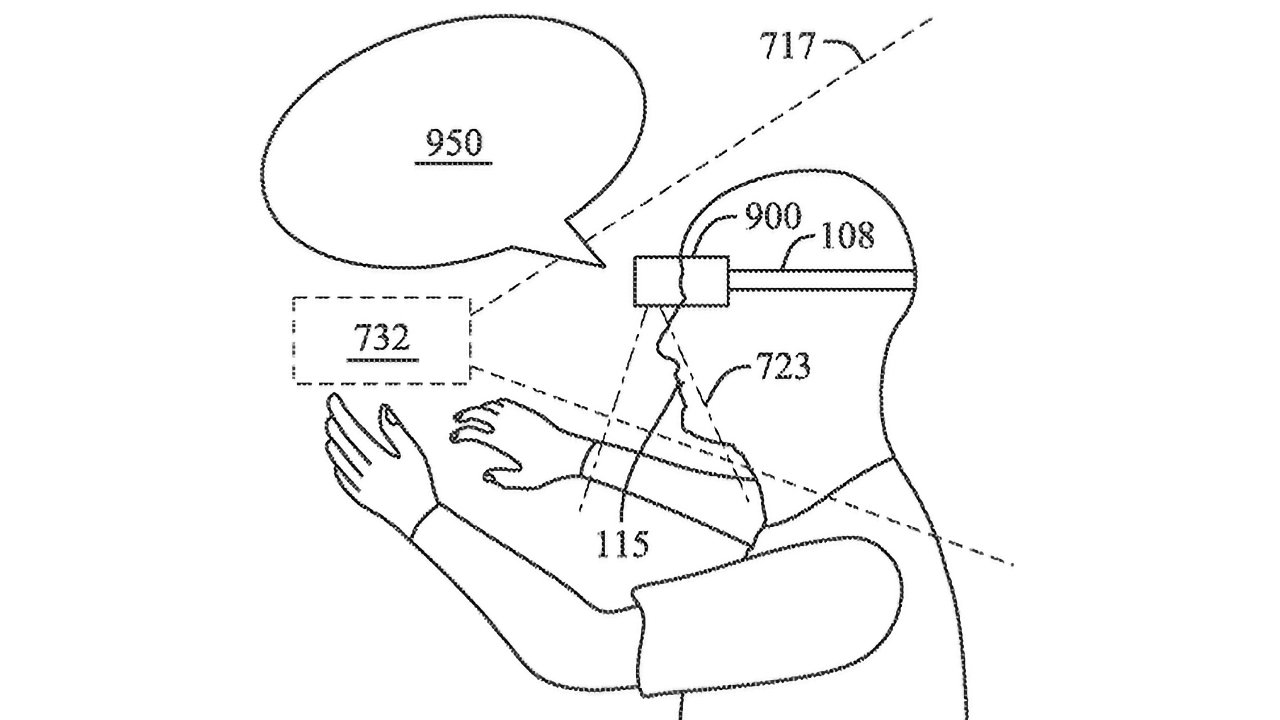

In case you ever want to conduct an orchestra while dictating a complaint against the cellist, Apple has you covered -- image credit: Apple

This is an extension of the existing AirPods Pro feature where a user can shake their head to cancel a call, or nod to accept it. In that case, the idea is that you can shake your head if you're somewhere that you can't speak, or nod if you can talk but your hands are full.

Now, in a newly revealed patent application called "Electronic Device With Dictation Structure," Apple wants you to be able to dictate even when you can't really speak.

"Audible dictation can be particularly inconvenient when the user is in a public or other environment where discretion, privacy, or quiet may be desired," says Apple's patent application for a future Apple Vision Pro feature.

Nowhere in its 21 pages does Apple suggest that standing around wearing a headset and silently mouthing off might not make you many friends. But in situations where those friends are being very loud, Apple does make a good point.

"Similarly, background noise in some environments can interfere with the ability of the head-mountable device to accurately and reliably recognize voice inputs from the user," it continues. "Therefore, there is a need for a head-mountable device which can allow the user to easily dictate inputs to the head-mountable device."

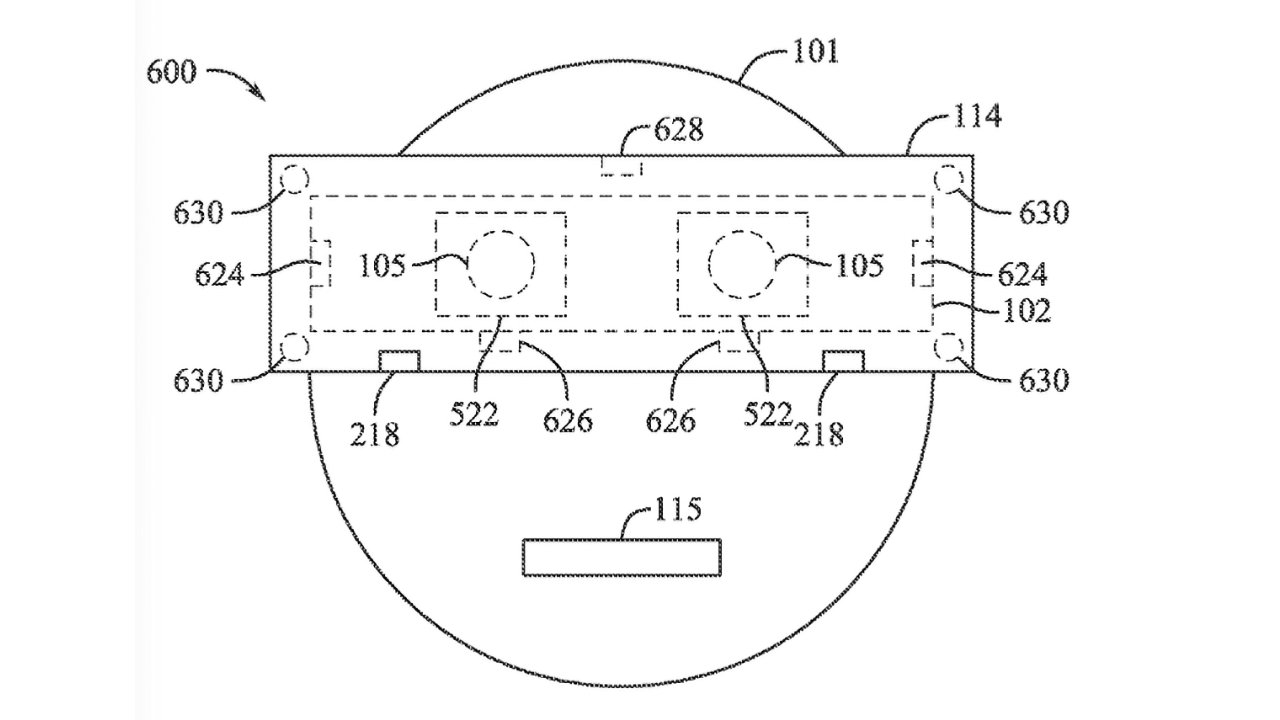

The proposal covers a great many combinations of possible options. One is that "a vision sensor carried by the display frame and oriented externally in a downward direction" could be "configured to detect mouth movement."

But then there could also be an "additional sensor configured to detect at least one of a facial vibration or a facial deformation." Then alongside either or both of these, the Apple Vision Pro could also use "an internal-facing camera to detect an input selection based on eye gaze."

And in case three options isn't sufficient redundancy, there could be yet another "sensor including an external-facing camera to detect a hand gesture indicating confirmation of the input selection."

The Apple Vision Pro Passthrough feature may not be sufficiently off-putting to people, so you could also mutter at them -- image credit: Apple

What that last part offers is that the wearer could make a hand signal to say they wanted to start dictating, or that they wanted to stop. Anything the wearer mouths between those two gestures would be taken as dictation.

There's no mention of whether the headset would also listen for regular spoken dictation. Without some kind of start-and-stop signal, though, the headset could end up taking dictation all the time, even when you were just muttering under your breath.

Apple does talk about using audio, though, as a way to train the Apple Vision Pro to recognize a user's speech patterns. "[The] training features can include audio recordings (e.g., audio clips at speaking volume between about 40 dB and about 70 dB, audio clips at whisper volume between about 20 dB and about 50 dB, etc.)."

"[Or] visual data can include different orientations or angles of a field of view that at least partially includes a user's mouth," it continues, "(e.g., a profile view from a user-facing device with a full field of view of the user's mouth, a downward angled view from a jaw camera with a partial field of view of the user's mouth, etc.)."

The patent application is credited to just one inventor, the prolific Paul X. Wang. His frankly myriad previous patents and patent applications for Apple include one for a game controller for the Apple Vision Pro.

Read on AppleInsider
