Amazon is watching and reviewing Cloud Cam security footage

Amazon has been hit by claims it is infringing on the privacy of its customers with its smart home security cameras, with a new report alleging Amazon workers are being given a glimpse into the homes of Cloud Cam users, under the guise of training the retailer's artificial intelligence systems.

Introduced in 2017, the Cloud Cam is a 1080p cloud-connected webcam that can offer live feeds of its view to homeowners via the Echo Show, Echo Spot, Fire range of devices, and an iPhone app. Artificial intelligence is used to monitor the video for triggerable events, in order to provide alerts and highlight clips of activity, such as an attempted robbery or the movements of a pet.

A new report from Bloomberg claims that video captured, stored, and analyzed by Amazon may not necessarily be seen just by software. Support teams working on the product are also given an opportunity to see the feeds, in order to help the software better recognize what it is seeing.

"Dozens" of employees based in India and Romania are tasked with reviewing video snippets captured by Cloud Cam, telling the system whether what takes place in the clips is a threat or a false alarm, five people who worked on the program or have direct knowledge of its workings told the publication.

Auditors can annotate up to 150 video recordings, each typically between 20 and 30 seconds in length. According to an Amazon spokeswoman, the clips sent for review come from employee testers, along with Cloud Cam owners who submit clips for troubleshooting, for example if there are inaccurate notifications or issues with video quality.

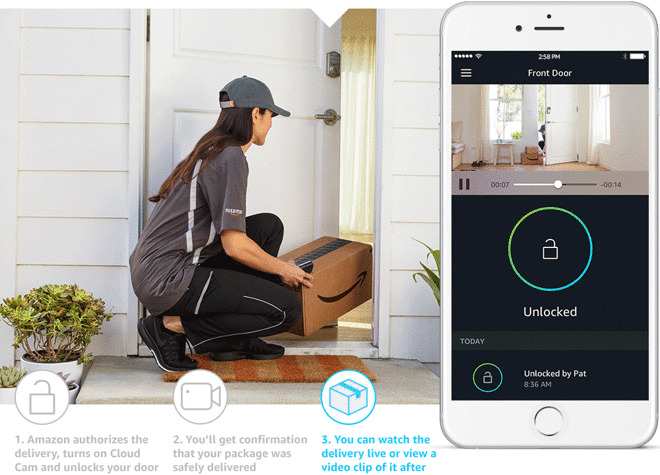

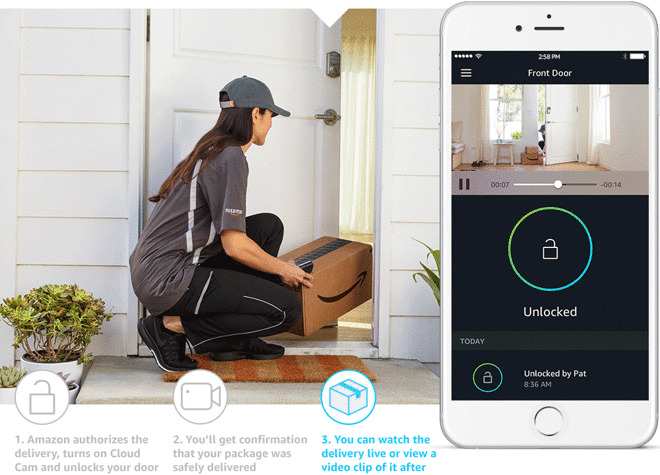

One of the marketed purposes of Cloud Cam was for alerts of deliveries through Amazon Key

"We take privacy seriously and put Cloud Cam customers in control of their video clips," the spokeswoman advised. Outside of submitting video for troubleshooting, she added, "only customers can view their clips."

The report goes on to assert that Amazon does not inform customers of the use of humans to train algorithms in the terms and conditions of the service.

Inappropriate or sensitive content does sometimes slip into the retraining collection, two sources advised. According to the same sources, the review team has on rare occasions viewed clips of users having sex. The clips containing inappropriate content are flagged and then discarded to avoid being used for training the AI.

The Amazon spokeswoman confirmed the disposal of the inappropriate clips. She did not, however, explain why the activity would appear in clips voluntarily submitted by users or Amazon staff.

Amazon does implement some security to prevent the clips from being shared, with workers in India located on a restricted floor where mobile phones are banned. Even so, one person familiar with the team admitted this policy didn't stop some employees from sharing footage with people not on the team.

The fallout from the report led to a similar claim from a "whistleblower" that Apple failed to adequately disclose its use of contractors to listen to anonymized Siri queries, though Apple does provide warnings in its software license agreements.

Both the report and the "whistleblowing" prompted Apple to temporarily suspend its Siri quality control program as it conducts a thorough review. Apple also advised a future software update would enable users to opt in for the performance grading.

Amazon and Google then followed suit, with Google halting one global initiative for Google Assistant audio, while Amazon provided an option to opt out of human reviews of audio recordings for Alexa.

Unlike Amazon, Apple is unlikely to face a similar situation, as it doesn't currently offer automated monitoring of video feeds to customers. While Apple does allow video camera to work through HomeKit, Apple does not perform any sort of quality control, triggerable actions, or other processing on live video feeds itself.

This does leave the door open to third-parties that take advantage of HomeKit's video feed functionality to perform processing on video they collect, but not Apple. At least not at this time.

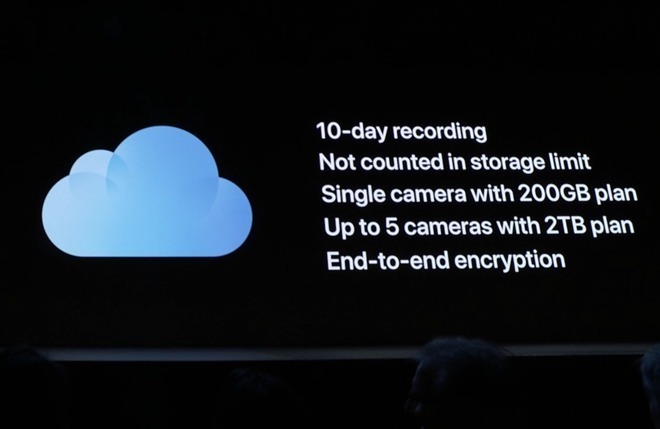

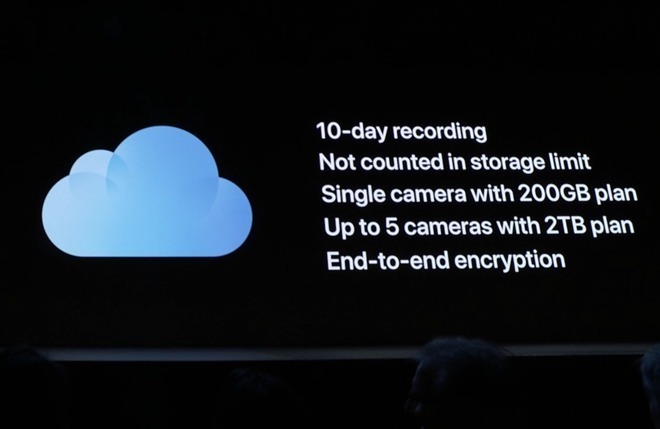

A WWDC presentation slide revealing elements of Apple's HomeKit Secure Video offering

Apple's HomeKit Secure Video feature, which was originally announced in June but has yet to become available to use, will allow for the storage of HomeKit video in iCloud. As end-to-end encryption will be used to secure the video before it leaves the user's home, it means not even Apple will be able to access the video at all.

One video leak allegedly showing the feature in action indicates it will offer some artificial intelligence-based processing, which includes detecting motion events and identifying the main subject of the video, such as a dog or car. Given the highly privacy-focused nature of Apple's offering, including the encryption element, it is likely this is locally-performed processing, rather than one performed in the cloud.

Introduced in 2017, the Cloud Cam is a 1080p cloud-connected webcam that can offer live feeds of its view to homeowners via the Echo Show, Echo Spot, Fire range of devices, and an iPhone app. Artificial intelligence is used to monitor the video for triggerable events, in order to provide alerts and highlight clips of activity, such as an attempted robbery or the movements of a pet.

A new report from Bloomberg reports that video captured, stored, and analyzed by Amazon may not necessarily be seen just by software. Support teams working on the product are also provided an opportunity to see the feeds, in order to help the software better recognize what it is seeing.

"Dozens" of employees based in India and Romania are tasked with reviewing video snippets captured by Cloud Cam, telling the system whether what takes place in the clips is a threat or a false alarm, five people who worked on the program or have direct knowledge of its workings told the report.

Auditors can annotate up to 150 video recordings, each typically between 20 to 30 seconds in length. According to an Amazon spokeswoman, the clips are sent for review from employee testers, along with Cloud Cam owners who submit clips for troubleshooting, for example if there are inaccurate notifications or issues with video quality.

One of the marketed purposes of Cloud Cam was for alerts of deliveries though Amazon Key

"We take privacy seriously and put Cloud Cam customers in control of their video clips," the spokeswoman advised. Outside of submitting video for troubleshooting, they added "only customers can view their clips."

The report goes on to assert that Amazon does not inform customers of the use of humans to train algorithms in the terms and conditions of the service.

Inappropriate or sensitive content does sometimes slip into the retraining collection, two sources advised. Also cited by the sources, the review team viewed clips of users having sex on a rare occasion. The clips containing inappropriate content are flagged and then discarded to avoid being used for training the AI.

The Amazon spokeswoman confirmed the disposal of the inappropriate clips. She also did not mention why the activity would appear in clips voluntarily submitted by users or Amazon staff.

Amazon does implement some security to prevent the clips from being shared, with workers in India located on a restricted floor where mobile phones are banned. Even so, one person familiar with the team admitted this policy didn't stop some employees from sharing footage to people not on the team.

Alexa, Siri, and Repetition

The story is reminiscent of an earlier Bloomberg report from April, which alleged Amazon used outside contractors and full-time employees to comb through snippets of audio from Echo devices to help train Alexa. Again, in that instance, inappropriate content made their way to employees, including one instance employees believed was evidence of sexual assault, and despite Amazon having security policies in place, content was allegedly shared between employees.The fallout from the report led to a similar claim from a "whistleblower" that Apple failed to adequately disclose its use of contractors to listen to anonymized Siri queries, though Apple does provide warnings in its software license agreements.

Both the report and the "whistleblowing" prompted Apple to temporarily suspend its Siri quality control program as it conducts a thorough review. Apple also advised a future software update would enable users to opt in for the performance grading.

Amazon and Google then followed suit, with Google halting one global initiative for Google Assistant audio, while Amazon provided an option to opt out of human reviews of audio recordings for Alexa.

Unlike Amazon, Apple is unlikely to face a similar situation, as it doesn't currently offer automated monitoring of video feeds to customers. While Apple does allow video camera to work through HomeKit, Apple does not perform any sort of quality control, triggerable actions, or other processing on live video feeds itself.

This does leave the door open to third-parties that take advantage of HomeKit's video feed functionality to perform processing on video they collect, but not Apple. At least not at this time.

A WWDC presentation slide revealing elements of Apple's HomeKit Secure Video offering

Apple's HomeKit Secure Video feature, which was originally announced in June but has yet to become available to use, will allow for the storage of HomeKit video in iCloud. As end-to-end encryption will be used to secure the video before it leaves the user's home, not even Apple will be able to access the video.

One video leak allegedly showing the feature in action indicates it will offer some artificial intelligence-based processing, which includes detecting motion events and identifying the main subject of the video, such as a dog or car. Given the highly privacy-focused nature of Apple's offering, including the encryption element, it is likely this processing is performed locally rather than in the cloud.

Comments

The number of companies worldwide involved with smart devices that haven’t used personal information is probably somewhere extremely close to zero.

Apple shared voice with outsiders. Bad. Google shared voice with outsiders. Bad. Microsoft shared voice with outsiders. Bad.

Now Amazon shares video with outsiders. Bad. I'll assume there will be another story in a few days where Google shares video with outsiders. Bad. Microsoft shares video with outsiders. Bad...

Common sense says humans are involved in the process some way or another. How else to tell if it's a cat or a small child detected at the door and do better at determining the difference, or what sound caused a security camera to trigger and avoiding future false events if possible?

The best thing going forward will be for the techs to bring all reviews in-house.

Yeah there will still be people, and people will sometimes make the wrong choices. At least those choices would better stay under the control of Apple or Google or Amazon or whoever. To its credit that's the direction Apple has said they'll take going forward and Google has signaled the same. I believe Amazon will too as will Microsoft. Stuff will still happen, a former employee will still claim this or that regularly goes on (whether it actually does or not) but at least it won't be put in the hands of a 3rd party contractor with less of a reputation to protect.

Oh, and just be more transparent from now on. If you gotta do something explain how it benefits the customer and why it's done the way it is. Then ask for their explicit consent BEFORE doing it. Not being upfront makes it look like there's something to be ashamed of.

Overused and meaningless.

Users were surprised, not advised.

There was nothing explicitly said about the process, and no one reading an Apple ToS doc would have expected that their own voice recordings were being sent outside the company and put in the hands of 3rd party contractors in off-site locations for review and transcription. Nor would they have thought there was no way to prevent it outside of not using Siri at all.

So no it was NOT properly disclosed. Now it has been and Apple has taken steps to allow users to have more say in it while being more transparent about the whole process. Users not being aware it was going on is what has led Apple to make the changes it has.

I remember people being amazed by the dinosaurs when Jurassic Park was first released. I had read an article about a bunch of the special effects used during production of the movie. I brought it up with a friend (who is not a techie), I said something along the lines of, “Did you see how they animated the dinosaurs?” His response was, “Yeah. With computers.” That’s it. To him, CGI consisted of typing into a computer: DRAW DINOSAUR and then MAKE DINOSAUR WALK. I am not exaggerating at all.

My point is, people like him, and there are many many like him, think that “computers” just do the work. If the marketing says that the camera’s AI scans the footage and determines what is in it then that’s the end of it. The computer in the camera is doing all the work, no human involved.

I agree on the transparency. My guess is that they aren’t up front about it because it sounds distasteful to many. How much harder would it be for a company to sell their camera to people if they have to explain that, yes, humans may be looking at the footage collected in your home? I bet that makes it a LOT harder. Better to just be vague and try to put that in the small print on the website whose address is on the bottom of the box and not linked from anywhere.

Apple please hurry up and take Home seriously! I'm at the point of thinking Apple needs to acquire some home security companies and make these spyware devices irrelevant. Like Siri, iPhone and podcasts, this whole industry was Apple's idea and they're laying back allowing everyone in.

And cry me a river about the Siri thing. All information was anonymous and untraceable. Apple could release these recordings to the public and it would do diddly-squat!

Unlike spyware companies like Facebook and Google who know who your children are, where you live and what you purchase.

Apart from that: it becomes more and more obvious that machine learning is so much easier when you give a damn about privacy.

Google anonymized their voice recordings too before sending them out for 3rd party transcription. It still did not prevent a dishonest contracted person from taking a few containing personal information such as an address or person's name to a newspaper reporter who dedicated themselves to identifying the person behind the voice, successfully I might add, allowing them to leap to the inference Google must be sharing personal information.

But Apple and Google were each doing the same thing, relegating a user to a number instead and otherwise attempting to separate the recordings from an identifiable person. So yeah it's not all "untraceable".

Side note: The same source claimed Apple also assigned certain demographics to the supplied voice recordings such as general location, male or female, what app the recording came from, that type of thing. So Apple does apparently keep track of a little more than you understand them to.

Unlike Facebook/Google/Amazon, Apple doesn't care what you do; they only care about improving their services.

Privacy is a big deal to Apple.

Mention Google and he will come....

*Gatorguy summon card activated*

The source claimed the recordings were completely anonymous and untraceable.

Also

Google isn't as good as you understand them to be.

The whistleblower said: “There have been countless instances of recordings featuring private discussions between doctors and patients, business deals, seemingly criminal dealings, sexual encounters and so on. These recordings are accompanied by user data showing location, contact details, and app data.”

Any recording that includes an address or name, and especially if combined with approximate location, is traceable. You knew that.

Apple's voice recordings were no less (or more) traceable than Google's. Gosh is that me throwing Google under the bus too?