Internal Apple memo addresses public concern over new child protection features

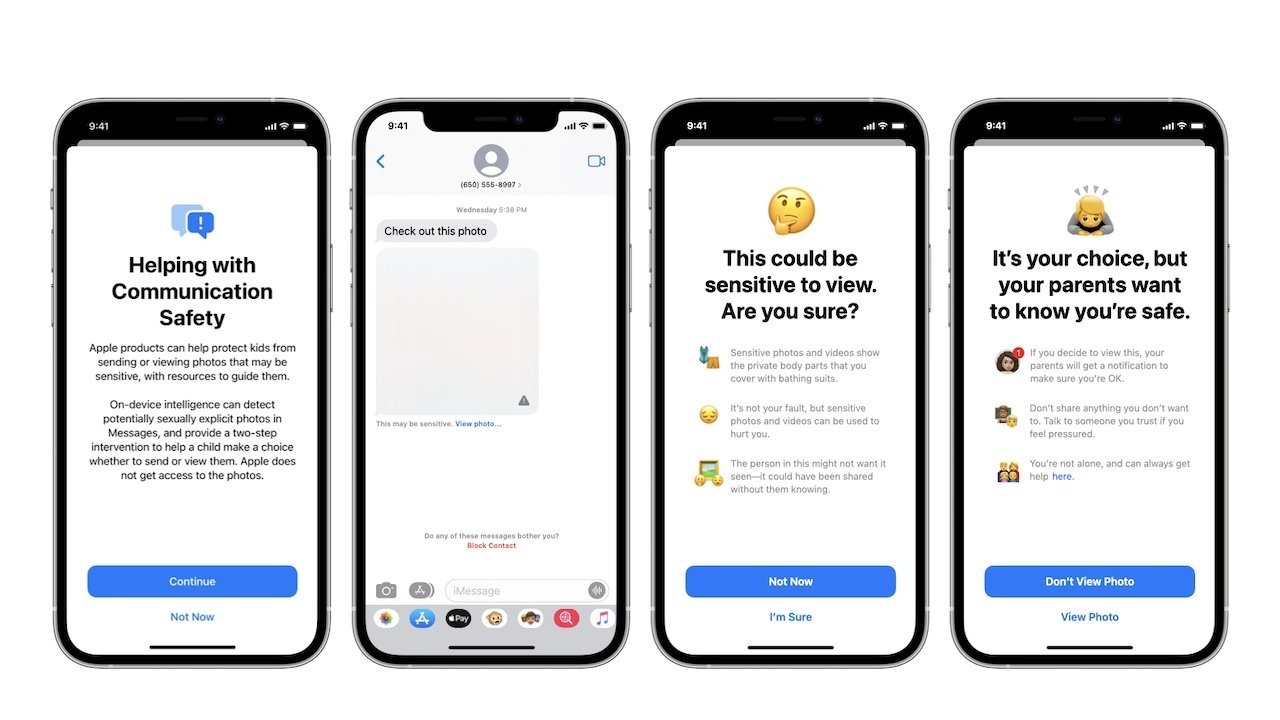

On Thursday, Apple announced that it would expand child safety features in iOS 15, iPadOS 15, macOS Monterey, and watchOS 8. The new tools include a system that uses cryptographic techniques to detect collections of child sexual abuse material (CSAM) stored in iCloud Photos and provide that information to law enforcement.

The announcement was met with a fair amount of pushback from customers and security experts alike. The most prevalent worry is that the implementation could, at some point in the future, lead to surveillance of data traffic sent to and received from the phone.

An internal memo, obtained by 9to5Mac, addresses these concerns to Apple staff. The memo was reportedly penned by Sebastien Marineau-Mes, a software VP at Apple, and reads as follows:

> Today marks the official public unveiling of Expanded Protections for Children, and I wanted to take a moment to thank each and every one of you for all of your hard work over the last few years. We would not have reached this milestone without your tireless dedication and resiliency.
>
> Keeping children safe is such an important mission. In true Apple fashion, pursuing this goal has required deep cross-functional commitment, spanning Engineering, GA, HI, Legal, Product Marketing and PR. What we announced today is the product of this incredible collaboration, one that delivers tools to protect children, but also maintain Apple's deep commitment to user privacy.
>
> We've seen many positive responses today. We know some people have misunderstandings, and more than a few are worried about the implications, but we will continue to explain and detail the features so people understand what we've built. And while a lot of hard work lays ahead to deliver the features in the next few months, I wanted to share this note that we received today from NCMEC. I found it incredibly motivating, and hope that you will as well.

The memo also included a message from Marita Rodrigues, the executive director of strategic partnerships at the National Center for Missing and Exploited Children:

> Team Apple,
>
> I wanted to share a note of encouragement to say that everyone at NCMEC is SO PROUD of each of you and the incredible decisions you have made in the name of prioritizing child protection.
>
> It's been invigorating for our entire team to see (and play a small role in) what you unveiled today.
>
> I know it's been a long day and that many of you probably haven't slept in 24 hours. We know that the days to come will be filled with the screeching voices of the minority.
>
> Our voices will be louder.
>
> Our commitment to lift up kids who have lived through the most unimaginable abuse and victimizations will be stronger.
>
> During these long days and sleepless nights, I hope you take solace in knowing that because of you many thousands of sexually exploited victimized children will be rescued, and will get a chance at healing and the childhood they deserve.
>
> Thank you for finding a path forward for child protection while preserving privacy.

Apple has yet to make a public-facing statement addressing the backlash.

Apple has long been viewed as a champion of user privacy. At WWDC 2021, Apple unveiled plans to radically expand privacy features across the Apple ecosystem.

Read on AppleInsider

Comments

This is essentially a warrantless search of a person's personal property.

We've already seen that the government is colluding with social media to censor posts which they deem unfavorable to them. We've already seen the government asking people to rat out their family members and friends for what they perceive as "extremist" behavior. Is it really that much of a stretch to think the government would ask tech companies to monitor emails and messages for extremist content?

Your answer to "where it ends" is beyond ridiculous. There is always a next. There are always enhancements. It's the nature of technology.

Sure, there's money in this kind of research and commercial software, so developers and companies will be more than happy to let someone else fix the major screwups new technology causes. It's not like the people in the country have any clue about how to make good decisions to begin with, so let the computer do it; it ain't my problem.

Your device scans your photos and calculates a photo “fingerprint” ON YOUR DEVICE. It downloads fingerprints of bad materials and checks them ON YOUR DEVICE.

The big question is who creates/validates the media fingerprints that get compared. Example “what if” concerns include a government like China giving Apple fingerprints for anti-government images.

And who will defend children from the mental and cultural castration produced by the gods' lunacy?

“In true Apple fashion, pursuing this goal has required deep cross-functional commitment, spanning Engineering, GA, HI, Legal…”

-Tim Cook

Maybe I’m reading it wrong, but “legal” is a government entity. I would bet some loonies from Congress or the FBI twisted Apple’s arm to provide this.

But the lack of transparency is the biggest concern. In the future, Apple will be given other databases to match against, and new laws will be drafted to prevent Apple from telling citizens of the country that they’re now searching for pictures of dissidents on their phones.

Anyone?

Thought not.