Apple 'poisoned the well' for client-side CSAM scanning, says former Facebook security chief

Alex Stamos, former Facebook security chief, says Apple's approach to CSAM scanning and to child exploitation in iMessage may have done the cybersecurity community more harm than good.

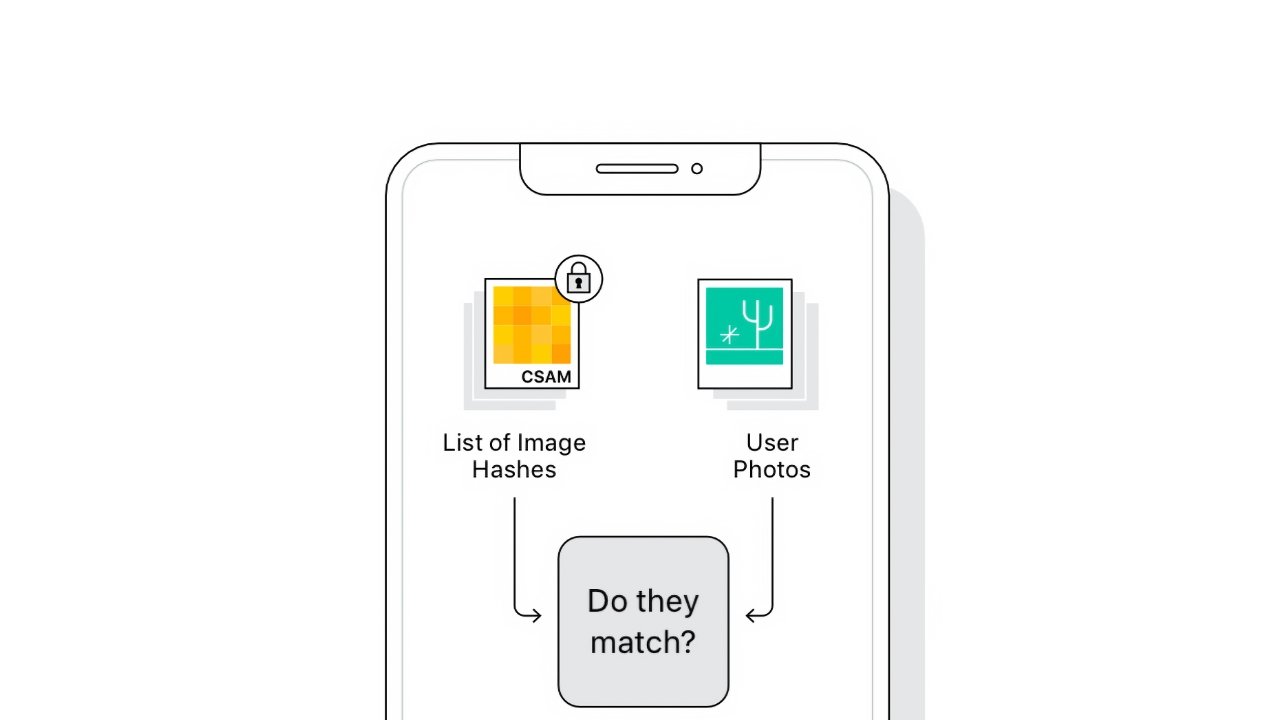

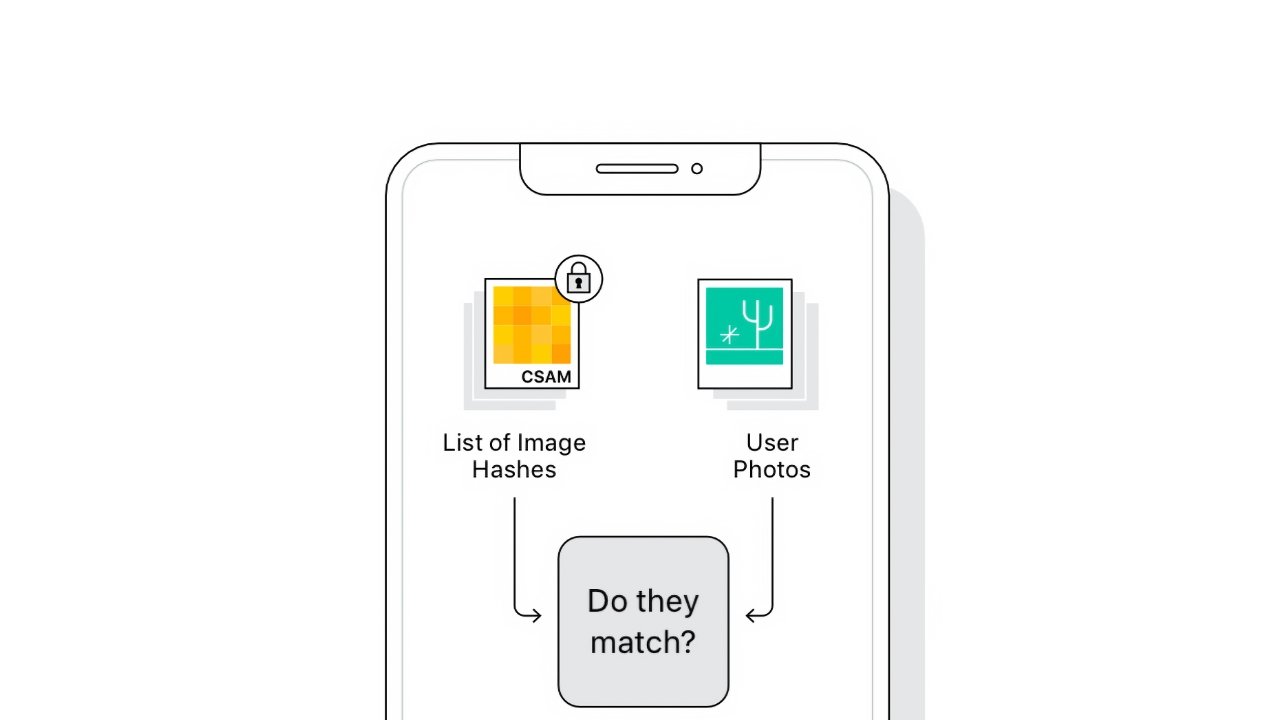

Apple's CSAM scanning tool has become a controversial topic

Once iOS 15 and the other fall operating systems are released, Apple will introduce a set of features intended to prevent child exploitation on its platforms. These features have sparked a heated online debate about user privacy and the future of Apple's reliance on encryption.

Alex Stamos is now a professor at Stanford and previously served as Facebook's security chief. During his tenure at Facebook, he encountered countless families damaged by abuse and sexual exploitation.

He stresses the importance of technologies such as Apple's in combating these problems. "A lot of security/privacy people are verbally rolling their eyes at the invocation of child safety as a reason for these changes," Stamos said in a tweet. "Don't do that."

His Twitter thread on Apple's decisions is extensive, but it offers insight into the issues raised by both Apple and outside experts.

In my opinion, there are no easy answers here. I find myself constantly torn between wanting everybody to have access to cryptographic privacy and the reality of the scale and depth of harm that has been enabled by modern comms technologies.

Nuanced opinions are ok on this. -- Alex Stamos (@alexstamos)

Many experts and concerned internet users have lost sight of the nuance in this discussion. Stamos says the EFF and NCMEC both reacted in ways that left little room for conversation, using Apple's announcement as a springboard to advocate for their equities "to the extreme."

Communication from Apple's side hasn't helped the conversation either, Stamos says. For example, he considers the leaked NCMEC memo calling concerned experts "screeching voices of the minority" harmful and unfair.

Stanford hosts a series of conferences on privacy and end-to-end encryption products. According to Stamos, Apple has been invited but has never participated.

Instead, Apple "just busted into the balancing debate" with its announcement and "pushed everybody into the furthest corners" without any public consultation, Stamos said. Introducing non-consensual scanning of local photos alongside client-side ML, he argues, might have "poisoned the well against any use of client side classifiers."

The implementation itself has left Stamos puzzled. He argues that on-device CSAM scanning isn't necessary unless Apple is preparing to end-to-end encrypt iCloud backups; otherwise, Apple could just as easily perform the scanning server-side.
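Stamos's point about where the scan happens can be illustrated with a toy model. This is a minimal sketch under loudly stated assumptions, not Apple's design: an exact SHA-256 digest stands in for the perceptual hash a real system would use, `KNOWN_HASHES` is a hypothetical one-entry database, and `toy_encrypt` is a throwaway XOR function rather than real encryption. It shows why the location matters: a server can match uploads against a hash list only while it can read them, so once backups are end-to-end encrypted, any matching would have to run on the device before encryption.

```python
import hashlib

# Hypothetical database of known-image fingerprints. Real systems use
# perceptual hashes that tolerate resizing and re-encoding; an exact
# SHA-256 digest is used here only to keep the sketch simple.
KNOWN_HASHES = {hashlib.sha256(b"known-bad-image").hexdigest()}


def fingerprint(data: bytes) -> str:
    """Exact-match fingerprint (placeholder for a perceptual hash)."""
    return hashlib.sha256(data).hexdigest()


def toy_encrypt(data: bytes, key: bytes) -> bytes:
    """Repeating-key XOR, for illustration only. NOT secure encryption."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def server_side_scan(upload: bytes) -> bool:
    """The server can only fingerprint the bytes it actually receives."""
    return fingerprint(upload) in KNOWN_HASHES


def client_side_scan(photo: bytes) -> bool:
    """The device fingerprints the plaintext photo before encrypting it."""
    return fingerprint(photo) in KNOWN_HASHES


photo = b"known-bad-image"
key = bytes(range(1, 17))  # fixed, all-nonzero demo key (hypothetical)

# No end-to-end encryption: the server sees plaintext and finds the match.
print(server_side_scan(photo))                    # True
# End-to-end encryption: the server sees only ciphertext, so the match fails.
print(server_side_scan(toy_encrypt(photo, key)))  # False
# On-device scanning still matches, because it runs before encryption.
print(client_side_scan(photo))                    # True
```

The first two calls capture the design question Stamos raises: as long as the server can read uploads, the client-side path does nothing the server-side path could not already do.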

The iMessage system doesn't offer users any way to report abuse, either. So rather than being able to alert Apple to people using iMessage for sextortion or to send sexual content to minors, the child is left with a decision, one Stamos says children are not equipped to make.

As a result, their options for preventing abuse are limited.

What I would rather see:

1) Apple creates robust reporting in iMessage

2) Slowly roll out client ML to prompt the user to report something abusive

3) Staff a child safety team to investigate the worst reports -- Alex Stamos (@alexstamos)
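Stamos's three steps describe a user-initiated flow rather than automatic scanning. The sketch below is one hypothetical way to wire them together; none of it is an Apple API or Stamos's own specification. `classify` is a keyword stub standing in for an on-device ML model, the 0.8 threshold, the 0.5 severity for manual reports, and the severity-ordered `ReportQueue` are illustrative assumptions, and `ask_user` represents the prompt shown to the user. The key property is that the classifier only suggests a report: nothing is submitted unless the user agrees, and manual reports work with or without a prompt.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class Report:
    # Severity is stored negated so heapq (a min-heap) pops the worst report first.
    neg_severity: float
    message_id: str = field(compare=False)
    source: str = field(compare=False)


class ReportQueue:
    """Hypothetical queue worked by a child safety team (step 3)."""

    def __init__(self) -> None:
        self._heap: list[Report] = []

    def submit(self, message_id: str, source: str, severity: float) -> None:
        heapq.heappush(self._heap, Report(-severity, message_id, source))

    def next_for_review(self) -> Report | None:
        return heapq.heappop(self._heap) if self._heap else None


def classify(text: str) -> float:
    """Placeholder for an on-device model: returns an abuse score in [0, 1]."""
    return 0.9 if "send me pics" in text.lower() else 0.1


def report_manually(queue: ReportQueue, message_id: str) -> None:
    """Step 1: the user can always report a message, prompted or not."""
    queue.submit(message_id, source="manual", severity=0.5)


def on_message_received(
    queue: ReportQueue,
    message_id: str,
    text: str,
    ask_user: Callable[[str], bool],
    threshold: float = 0.8,
) -> bool:
    """Step 2: a high local score only prompts the user; the report is
    submitted solely if the user agrees. Returns True if a report was filed."""
    score = classify(text)
    if score >= threshold and ask_user(message_id):
        queue.submit(message_id, source="prompted", severity=score)
        return True
    return False
```

With these placeholder numbers, a prompted report the user accepts (score 0.9) reaches reviewers ahead of a manual report filed at 0.5, while a message the user declines to report never leaves the device.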

At the end of the thread, Stamos suggested that Apple may be making these changes in response to the regulatory environment. The UK's Online Safety Bill and the EU's Digital Services Act, for example, could both have influenced Apple's decisions.

Alex Stamos isn't happy with the conversation surrounding Apple's announcement and hopes the company will be more open to attending workshops in the future.

The technology will be introduced in the United States first, then rolled out on a per-country basis. Apple says it will not allow governments or other entities to coerce it into changing the technology to scan for other content, such as terrorism-related material.

Read on AppleInsider

Comments

Nice intentions…

…not worth the bits they are written with.

I just want to see Cook explain to shareholders the crash in Apple’s stock price as he announces leaving the Chinese market for refusing to scan for pictures of the Dalai Lama, Pooh, HK protests, etc.

You know, just like Apple left the Chinese market when China asked that VPN apps be removed from the App Store, or when China and Russia mandated that all iCloud servers for their countries’ users be located within their jurisdictions, or when the US government wanted iCloud backups to remain unencrypted.

Apple is always quick to point out that they comply with all the laws of the countries they operate in, so they will punt and point the finger at the authoritarian regimes’ laws as they obediently comply. And US authorities will use this as an excuse, claiming they can’t afford national security disadvantages relative to other countries, etc.

In the good old days before electronic communications, law enforcement couldn’t tap into anything, and they still managed to prosecute crime; they just had to put more actual shoe leather into investigations. These days, some guy thinks the only time he should get up from his office chair is to present evidence in court (unless that’s a video conference, too). The demands of law enforcement are simply an expression of laziness.

So even without E2E, this makes sense.

Talk about an overblown "first world" problem.

I think he’s saying that there is a case for client-side scanning, but it should be for protecting users. No one complains when a virus checker scans files. That’s probably because you put it there yourself, and because it reports to you before it reports to the mothership.

This isn’t about “some very technical and narrow definition of privacy”; this is about an infrastructure that can be used for arbitrary things. Just because they initially limit it to child abuse, to sell it to the public, doesn’t mean there’s anything technical that prevents its expansion to other domains.

To adapt your Brazilian saying: “It’s stupid to think that just because a jaguar was slain, the jungle is now free of dangers.”

“People with real jobs and live worries” are bred in a way that prevents problems from being solved. Poor people desperate for a job won’t demonstrate against or block the deforestation of the Amazon; they didn’t prevent Hitler’s rise to power; they were easily recruited by the Stasi in exchange for a little privilege and a small pay raise. They are the henchmen of any evil system, and then they act surprised when the powers show up at the door and drag them to a KZ or gulag.

Tell the GeStaPo you’re not a criminal when they knock at your door for having looked at the wrong website. Tell yourself “me being deported is just an overblown first world problem.”

He's guest hosted the Risky Business security podcast numerous times. If you want to get a sense of the guy, I recommend listening to those episodes.

Governments have and do force the inclusion/exclusion of Apps and App-level functionality.

An infrastructure matter is hard to force, because a company like Apple can say it doesn’t fit into its OS architecture, etc.

None of these apply once the infrastructure is actually in place. China, to stick with the example, may simply demand that they be in charge of the database and that they (rather than some NPO/NGO) be notified, citing “privacy laws,” “sovereign jurisdiction over criminal matters,” and “national security concerns” as reasons, and on the surface, they are correct. After all, who guarantees that the NPO isn’t an NSA front and that the hash database doesn’t contain items of concern to the Chinese government?

Once China is in charge of the hash database used in China, and notifications of violations go not to Apple or an NGO but to Chinese authorities, it’s game over. After all, what counts as a human rights violation and what counts as a legitimate national security concern is just a matter of perspective. We get (rightly) outraged at how Russia treats Navalny or China treats supporters of the Dalai Lama, yet many are blind to the plight of Snowden and Assange.

It’s one thing to architect a system that has no provisions for backdoors, it’s another to try to deny a government access to a back door that actually exists.

What you keep on your phone/computer, which in essence is a brain prosthetic, is by its very nature private and not shared.

Imagine Apple scanning your diary or your thoughts! We’re literally just a technological gap distanced from “Minority Report”.

What if machine learning derives personality profiles of rapists? What if social media profiles allow the creation of very detailed psychological personality profiles? (They do!) Do you want Apple to scan people’s social media interactions (on device, of course, for the protection of your privacy) and alert an NGO to people’s sex offender potential?

What if someone plants a hidden folder of offending files on another person’s device, and that person gets flagged and denies any knowledge? Whom do you think the courts will believe?

The problem is not what Apple is doing now, but what it opens the door to.

We can only hope that some organization with deep pockets sues Apple, gets a temporary restraining order, and then finally wins the case on a wide-ranging constitutional foundation.

Personally, I’d also be happy for a general CSAM ML model to flag/alert me about potential CSAM content hitting my daughter’s account, without reporting it further.

As for server-side scanning, I don’t see how mandatory scanning differs depending on whether the code runs in the cloud or on device. Either way, it’s out of my control.

OK, now we're going off the deep end with the argument. While I have no doubt that Google, Amazon, and Facebook have personality-profiling AI that would shock most people (à la the infamous Eric Schmidt "creepy line" quote from 2010), Apple comparing image hashes is far, far from that. If you're seriously worried about that kind of future, you'd do far better to battle all the tracking and profiling advertisers do on you every time you use a web browser (or web-based app), open an email, etc.