Apple employees express concern over new child safety tools

Apple employees are voicing concern over the company's new child safety features set to debut with iOS 15 this fall, with some saying the decision to roll out such tools could tarnish Apple's reputation as a bastion of user privacy.

Pushback against Apple's newly announced child safety measures now includes critics from its own ranks who are speaking out on the subject in internal Slack channels, reports Reuters.

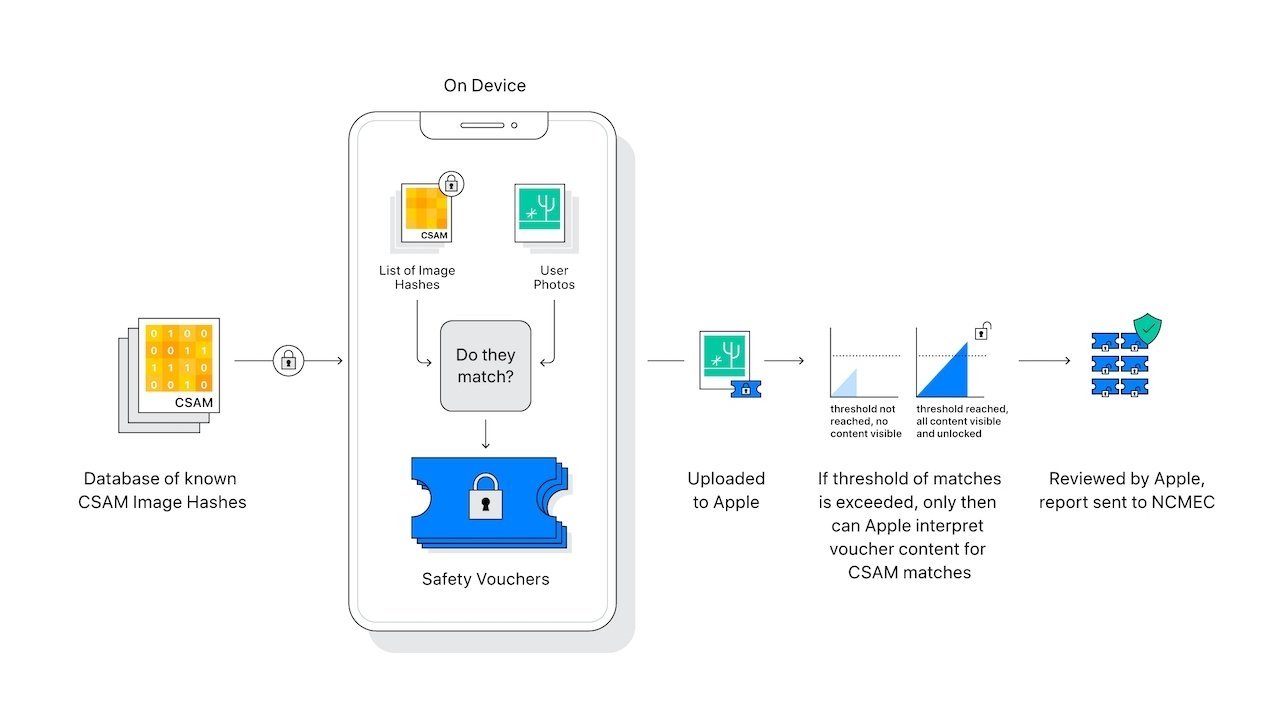

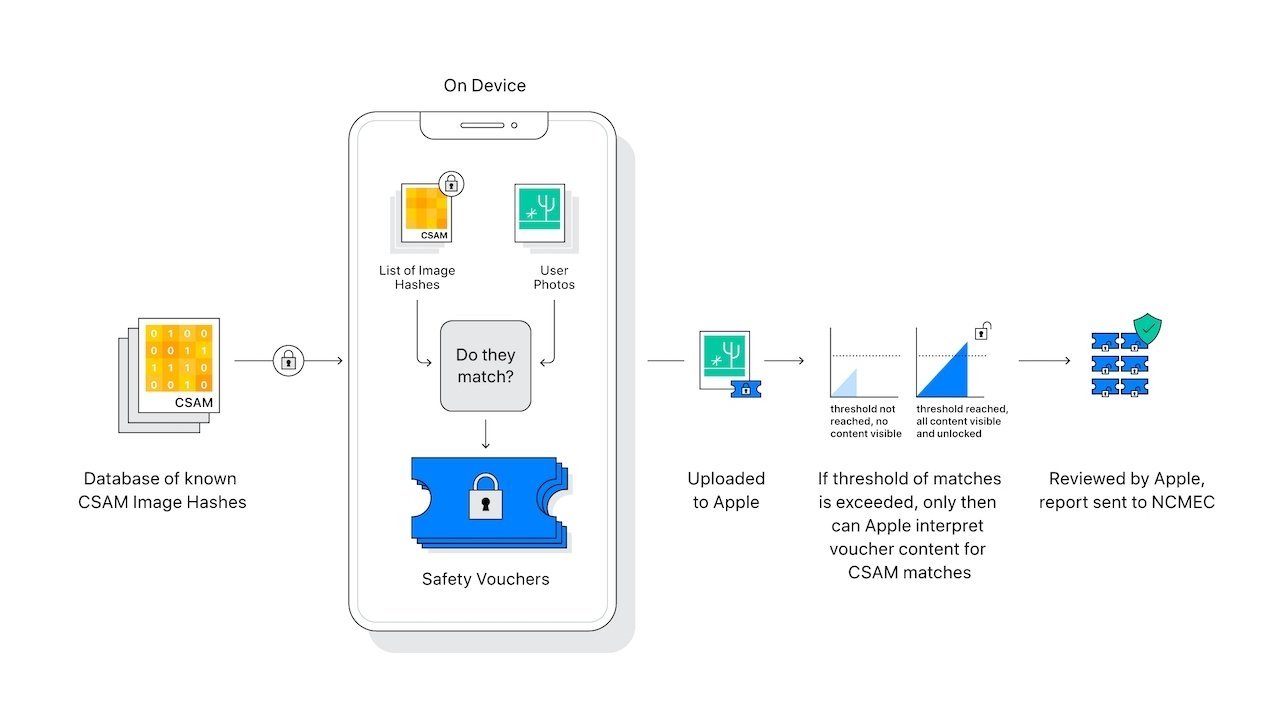

Announced last week, Apple's suite of child protection tools includes on-device processes designed to detect and report child sexual abuse material uploaded to iCloud Photos. Another tool protects children from sensitive images sent through Messages, while Siri and Search will be updated with resources to deal with potentially unsafe situations.

Since the unveiling of Apple's CSAM measures, employees have posted more than 800 messages to a Slack channel on the topic that has remained active for days, the report said. Those concerned about the upcoming rollout cite common worries pertaining to potential government exploitation, a theoretical possibility that Apple deemed highly unlikely in a new support document and statements to the media this week.

The pushback within Apple, at least as it pertains to the Slack threads, appears to be coming from employees who are not part of the company's lead security and privacy teams, the report said. Those working in the security field did not appear to be "major complainants" in the posts, according to Reuters sources, and some defended Apple's position by saying the new systems are a reasonable response to CSAM.

In a thread dedicated to the upcoming photo "scanning" feature (the tool matches image hashes against a hashed database of known CSAM), some workers have objected to the criticism, while others say Slack is not the forum for such discussions, the report said. Some employees expressed hope that the on-device tools will herald full end-to-end iCloud encryption.

Apple is facing down a cacophony of condemnation from critics and privacy advocates who say the child safety protocols raise a number of red flags. While some of the pushback can be chalked up to misinformation stemming from a basic misunderstanding of Apple's CSAM technology, other critics raise legitimate concerns about mission creep and violations of user privacy that were not initially addressed by the company.

The Cupertino tech giant has attempted to douse the fire by addressing commonly cited concerns in a FAQ published this week. Company executives are also making the media rounds to explain what Apple views as a privacy-minded solution to a particularly odious problem. Despite its best efforts, however, controversy remains.

Apple's CSAM detection tools launch with iOS 15 this fall.

Read on AppleInsider

Comments

Sadly, due to this CSAM scanning, I will almost certainly be moving away from Apple. I have already disabled auto-upgrade on our dozen or so iOS-based devices. When iOS 15 comes out, that will be the end of updates for us. Since we don't use iCloud and rarely text, this "feature" would have little impact on us. However, the very notion that a company (any company) believes it has the right to place what amounts to spyware on devices I own is completely unacceptable! If this means eventually going back to a "dumb" phone, then so be it. Even if Apple were to state it will not include this "feature", I would probably not trust them enough to believe them... perhaps they would just do it anyway without telling anybody.

In fairness, I grew up consuming more than my fair share of dystopian novels and movies which might be influencing my position a bit. :-)

https://www.apple.com/child-safety/pdf/CSAM_Detection_Technical_Summary.pdf

The hash list is provided by the National Center for Missing and Exploited Children (NCMEC). The images, or more likely just the hashes, would have been provided by prosecutors, police agencies, or child welfare organizations.

The article above describes the hash algorithm. The algorithm creates a hash value for each image. The hash values computed for the photos in the iCloud library are compared to the hash values in the list.

An account is only flagged if there is a certain number of such hash matches.
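
The matching-and-threshold idea described above can be sketched roughly as follows. This is a minimal illustration, not Apple's implementation: SHA-256 over raw bytes stands in for the perceptual hash (the linked summary calls it NeuralHash), the lookup is a plain set comparison rather than the cryptographic protocol in the summary, and the names `image_hash`, `count_matches`, `account_flagged` and the threshold values are all hypothetical.

```python
import hashlib


def image_hash(data: bytes) -> str:
    # Assumption: SHA-256 of the raw bytes is a stand-in only.
    # A perceptual hash would also match resized or re-encoded copies;
    # a cryptographic hash like this one matches exact bytes only.
    return hashlib.sha256(data).hexdigest()


def count_matches(photos, known_hashes):
    # Count how many photos hash to a value present in the known list.
    return sum(1 for photo in photos if image_hash(photo) in known_hashes)


def account_flagged(photos, known_hashes, threshold):
    # The account is flagged only once the number of matches
    # reaches the (hypothetical) threshold.
    return count_matches(photos, known_hashes) >= threshold


# Toy data: byte strings stand in for image files.
known = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}
library = [b"holiday", b"known-1", b"cat", b"known-2"]

print(count_matches(library, known))       # number of matching photos
print(account_flagged(library, known, 3))  # below the threshold
print(account_flagged(library, known, 2))  # at the threshold
```

In this toy version a single match never flags the account; only the running count crossing the threshold does, which is the point the comment above is making.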

Apple, as another poster said, is still by far the best game in town.

CSAM is probably not the hill to fight the privacy battle. Be sure that government will come to Apple and push for more. That's a better hill to fight the battle.

Problem is at the top of that hill is not only CSAM, but behind a chain-link fence is all legal data that every iOS user owns. Whilst the government may not have the means to climb the hill, they do hold a pair of wire cutters.

I don't have much respect for Cook at the best of times, but how he can stand up and tell barefaced lies that "privacy is in our DNA" is nigh on criminal IMO. They've blown people's trust overnight.

The iPhone has top notch photo and video capabilities and is very portable.

As such, it can easily be a portable tool to abuse children.

Apple wants to stop that. Go Apple go go go!

This is building in that back door that was requested some years ago.

Hashes of images of dissidents or foreign terrorists (not domestic of course) might accidentally work their way into the database. But probably only in countries with authoritarian governments.

If you happen to be misidentified as a 'bad guy', I'm sure they'll send you a registered letter to come down to the 'office' and help them clarify the situation. They'd never start surveilling you or break down your door over something like that... Right?

I am against this because of the way it is being done. Apple is putting in plumbing that can later be abused when circumstances change, despite its best intentions today.

However, relying on misinformation does not help the discussion against this in any way.

From Wikipedia:

The National Center for Missing & Exploited Children (NCMEC) is a private, nonprofit organization established in 1984 by the United States Congress. In September 2013, the United States House of Representatives, United States Senate, and the President of the United States reauthorized the allocation of $40 million in funding for the National Center for Missing & Exploited Children as part of the Missing Children's Assistance Reauthorization Act of 2013.

What an emotive button to press!

Once this pathway is enabled in the OS, how can Apple say no? It won’t be able to.