Apple wipes on-device CSAM photo monitoring from site, but plans unchanged

Apple removed all signs of its CSAM initiative from the Child Safety webpage on its website at some point overnight, but the company has made it clear that the program is still coming.

Apple announced in August that it would be introducing a collection of features to iOS and iPadOS to help protect children from predators and limit the spread of Child Sexual Abuse Material (CSAM). Following considerable criticism of the proposal, it appears that Apple is pulling away from the effort.

The Child Safety page on Apple's website had a detailed overview of the upcoming child safety tools up until December 10. After that date, MacRumors reports, the references were wiped from the page.

It is unusual for Apple to completely remove mentions of a product or feature that it couldn't release to the public, though it famously did so with the AirPower charging pad. Such a move could be read as Apple publicly giving up on the feature, though a second attempt in the future is always possible.

Apple's proposal consisted of several features, each protecting children in a different way. The main CSAM feature used on-device systems to detect abuse imagery stored in a user's Photos library. If a user had iCloud Photos enabled, the feature would have scanned the user's photos on-device and compared them against a hash database of known abuse material.

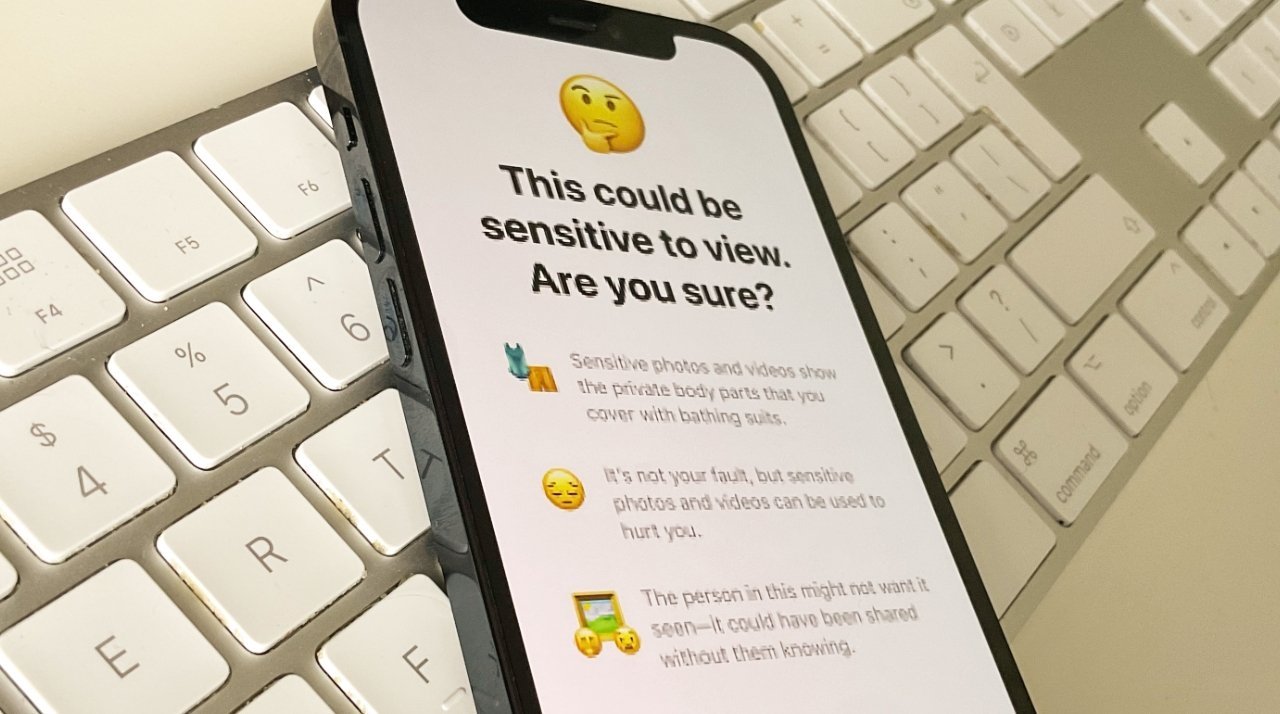

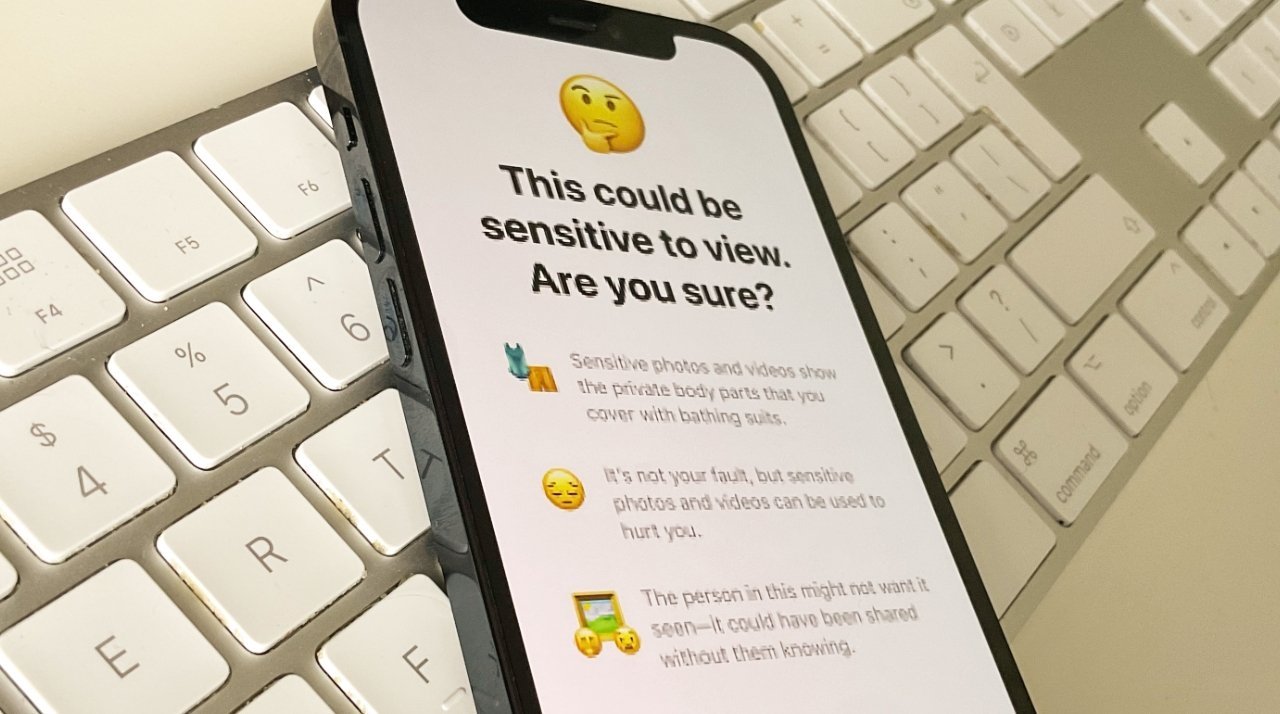

A second feature, which has already shipped, is intended to protect young users from seeing or sending sexually explicit photos in iMessage. Originally, it was going to notify parents when such an image was found, but as implemented, it sends no external notification.

A third feature updated Siri and Search to offer additional resources and guidance.

Of the three, the latter two made it into the release of iOS 15.2 on Monday. The more contentious CSAM element did not.

The Child Safety page on Apple's website previously explained that Apple was introducing on-device CSAM scanning, which would compare image file hashes against a database of known CSAM image hashes. If a sufficient number of files were flagged as CSAM, Apple would have contacted the National Center for Missing and Exploited Children (NCMEC).
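As a rough illustration of the match-then-threshold idea, here is a minimal Python sketch. It is not Apple's implementation: Apple's published design used a perceptual hash called NeuralHash together with cryptographic techniques (private set intersection and threshold secret sharing), so that individual match results were not visible on the device or to Apple below the threshold. This sketch substitutes exact SHA-256 hashes and a plain counter, and `MATCH_THRESHOLD`, `file_hash`, `count_matches`, and `should_escalate` are hypothetical names chosen for the example.

```python
from __future__ import annotations

import hashlib

# Hypothetical value: the article only says "a sufficient quantity" of matches.
MATCH_THRESHOLD = 3


def file_hash(data: bytes) -> str:
    """Exact SHA-256 digest, used here as a stand-in for a perceptual hash."""
    return hashlib.sha256(data).hexdigest()


def count_matches(photos: list[bytes], known_hashes: set[str]) -> int:
    """Count photos whose hash appears in the database of known hashes."""
    return sum(1 for photo in photos if file_hash(photo) in known_hashes)


def should_escalate(photos: list[bytes], known_hashes: set[str],
                    threshold: int = MATCH_THRESHOLD) -> bool:
    """Flag the library only once the match count reaches the threshold."""
    return count_matches(photos, known_hashes) >= threshold


# Usage: two matches against a threshold of three does not trigger a report.
known = {file_hash(b"known-1"), file_hash(b"known-2"), file_hash(b"known-3")}
library = [b"known-1", b"known-2", b"holiday.jpg"]
print(should_escalate(library, known))  # False
```

An exact hash like SHA-256 changes completely if a single byte of the file changes, which is why Apple chose a perceptual hash that tolerates resizing and recompression instead.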

The updated page removes not only the section on CSAM detection but also references to it in the page's introduction and in the Siri and Search guidance, along with a section linking to PDFs that explained and assessed the CSAM process.

On Wednesday afternoon, Apple told The Verge that its plans were unchanged and that the pause to rethink the system is still just that: a temporary delay.

Shortly after announcing the proposed tools in August, Apple had to respond to claims from security and privacy experts, as well as other critics, about the CSAM scanning itself. Critics viewed the system as a privacy violation and the start of a slippery slope that could lead governments to use an expanded form of it to perform on-device surveillance.

Feedback came from a wide range of critics, from privacy advocates and some governments to a journalist association in Germany, Edward Snowden, and Bill Maher. Despite making its case for the feature and admitting that it had failed to communicate it properly, Apple postponed the launch in September to rethink the system.

Update December 15, 1:39 PM ET: Updated with Apple clarifying that its plans are unchanged.

Read on AppleInsider

Comments

I always thought iCloud screened for CSAM, after all MSFT and Google have been doing it for years.

Freedom has its limits.

I see no reason for the move. As some people have previously stated, “maybe Apple is going to e2e encrypt iCloud photos”. The rub here is that it would not be e2e encrypted either way. Scanning and reporting necessitates access to the data. E2E encryption is only E2E encryption IFF there is no process to circumvent it (including at either end) to send the data to someone else outside of the authorized recipients intended by the sender. This very fact alone means that iCloud photos will never be e2e encrypted as Apple needs to do CSAM scanning.

All things considered, I’m fine with the current state of server-side scanning, as it’s not on my device and the scanning and reporting only apply IFF you use the service (some may argue that’s how it would work on device too, but that is subject to change, whereas if it’s on the server, they can’t extend it to scan more than what’s sent to iCloud).

But I'll also be real, I am 1000% sure Google, Microsoft, etc. are already scanning my data for illicit content and for ways to sell me more stuff anyhow lol. I agree with an earlier poster that Apple will likely limit their hash scanning to iCloud data stored on their own servers and just not publicize it, while leaving CSAM scanning off people's local hardware (which, as more customers move to storing 100% of their data in the cloud, would presumably be nearly as effective. I fully migrated everything on my MacBook Pro/iPad/iPhone into iCloud myself this past summer, with just the occasional Time Machine backup taken to the HDD in my fire safe).

Either way you look at it, there is a growing percentage of people, of a particular ideological persuasion, that would have us all believe these predators (pedophiles) are just merely "minor attracted persons" and seem to want to normalize this deranged euphemism for them. Sickening.

To protect against one of those partial directions being used as evidence of possession, they also set the system up to emit fake partial directions which are indistinguishable from real ones until after you have uploaded enough real ones.
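What this commenter describes resembles threshold secret sharing combined with decoy ("synthetic") vouchers, as Apple's technical documents described them. Below is a minimal Python sketch of the threshold part, using Shamir's secret sharing as a textbook stand-in; it is not Apple's construction, and `make_shares`, `fake_share`, `recover`, and the choice of prime are illustrative assumptions. Any `threshold` real shares reconstruct the secret exactly, while fewer shares, or a set padded with decoys, interpolate to a value that reveals nothing about it. A decoy is just a random field element, so on its own it looks like any real share.

```python
import secrets

# Illustrative choice: the Mersenne prime 2**127 - 1 defines the finite field.
PRIME = 2**127 - 1


def make_shares(secret: int, threshold: int, count: int) -> list[tuple[int, int]]:
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's method, evaluated mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def fake_share(x: int) -> tuple[int, int]:
    """Hypothetical decoy: a random point that is not on the secret polynomial."""
    return (x, secrets.randbelow(PRIME))


def recover(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Usage: 3-of-5 sharing of a random key.
key = secrets.randbelow(PRIME)
shares = make_shares(key, threshold=3, count=5)
print(recover(shares[:3]) == key)                    # True
print(recover(shares[:2] + [fake_share(9)]) == key)  # False (decoy poisons it)
```

A real system also has to weed out the decoys once enough genuine shares exist; this sketch does not model that step.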

The clear solution is to spin all of the iCloud-related functionality out of Photos and into a separate application. CSAM scanning then goes in that application. If you want to upload stuff to iCloud, you need that application, which has the CSAM scanning. If you don't want the CSAM scanning, don't load the application (or remove it if it comes preinstalled). Done. Addresses everybody's concerns.

Right now, Apple employees can view photos you store in iCloud. Presumably they are prohibited from doing this by policy, but they have the technical capability. With the endpoint CSAM scanning as explained in the technical papers Apple presented, they would no longer have that capability. That's because the endpoint CSAM scanning intrinsically involves end-to-end encryption.

We already trust that Apple won't rework their on-device content scanning. Or did you forget what Spotlight and the object recognition in Photos are? Not like you can disable either of those.

Note: I made a typo: “reshape” should have been “re-shared.” And yes, I’m well aware encryption keys need to be stored safely. Now I ask you this: once data is encrypted to be sent, how does one decrypt it? With the corresponding decryption key (which is different from the encryption key, of course, but the two come as a pair).
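The commenter is describing public-key (asymmetric) encryption, where the decryption key differs from the encryption key but the two are generated together. Textbook RSA with tiny primes shows that relationship in a few lines of Python. This is purely illustrative: the primes are far too small, there is no padding, and it says nothing about how Apple actually manages iCloud keys.

```python
# Textbook RSA with deliberately tiny primes: for illustration only, never real use.
P, Q = 61, 53
N = P * Q                # public modulus (3233), shared by both keys
PHI = (P - 1) * (Q - 1)  # Euler's totient of N (3120)
E = 17                   # public (encryption) exponent
D = pow(E, -1, PHI)      # private (decryption) exponent (2753), Python 3.8+


def encrypt(m: int, e: int = E, n: int = N) -> int:
    """Anyone holding the public pair (e, n) can encrypt."""
    return pow(m, e, n)


def decrypt(c: int, d: int = D, n: int = N) -> int:
    """Only the holder of the private exponent d can decrypt."""
    return pow(c, d, n)


ciphertext = encrypt(65)
print(ciphertext, decrypt(ciphertext))  # 2790 65
```

The two exponents are different numbers, but D is derived from E and the secret factors of N, which is what makes them a pair.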

Once a threshold is met, what happens then? Do we trust Apple to handle the data correctly? Do we trust the authorities will handle the data correctly? Do we trust individuals at these places not to share it or leak it? Do we trust that the thresholds won’t change? Do we trust that other types of images won’t be deemed “illicit” in the future? Do we trust a similar threshold algorithm won’t be applied to text in iMessages, looking for messages of terrorism or “hate” speech? Do we trust that it will only be done for photos uploaded to iCloud?

I for one am fine with my images not being e2e encrypted in iCloud, as I consider it public space anyway and act accordingly. I would expect Apple is employing encryption for data at rest, with one or more departments holding the keys. So which would you prefer: “wish it were e2e” or a select few with access to the data at rest? Six of one, half a dozen of the other (except one, in my opinion, has far more bad implications for the future)… both ways still involve trust, however.

https://forums.appleinsider.com/discussion/comment/3342666/#Comment_3342666