Future HomeKit could track you through your house and predict your needs

A future version of HomeKit may keep track of where the people in the house are, and learn user habits to figure out when to automatically take actions, without you having to ask Siri.

The temperature and humidity sensors in the new HomePod, and now enabled in the HomePod mini, are designed to be used as part of automated systems. Right now, you can create a Shortcut that says if the indoor temperature falls below a certain point, you want your heater turned on.

That does require a heater you can control remotely, or perhaps just a smart outlet, plus the HomePod. But it also requires you to set up that Shortcut, and "Using In-home Location Awareness," a newly-granted patent, suggests that Apple wants to move away from that.

Rather than you having to create a Shortcut or to set up a HomeKit automation, Apple wants it all to just happen for you. By itself.

"Users often perform the same or repeated actions with accessory devices while in a particular location," says the patent. "For example, every time a user comes home from work, they may close the garage door when they are in the kitchen."

"Therefore, certain activities with respect to devices in a home may be performed regularly and repeatedly (e.g., daily, several times throughout a day) while the user is in a certain location," it continues. "This can be a time consuming and tedious task for a user since these tasks are performed regularly or several times throughout the day."

"Thus... it is desired for the home application on the mobile device to be able to determine a location of the user," says Apple, "and suggest a corresponding accessory device that a user may want to control or automatically operate a corresponding accessory device based on the location of the mobile device of the user."

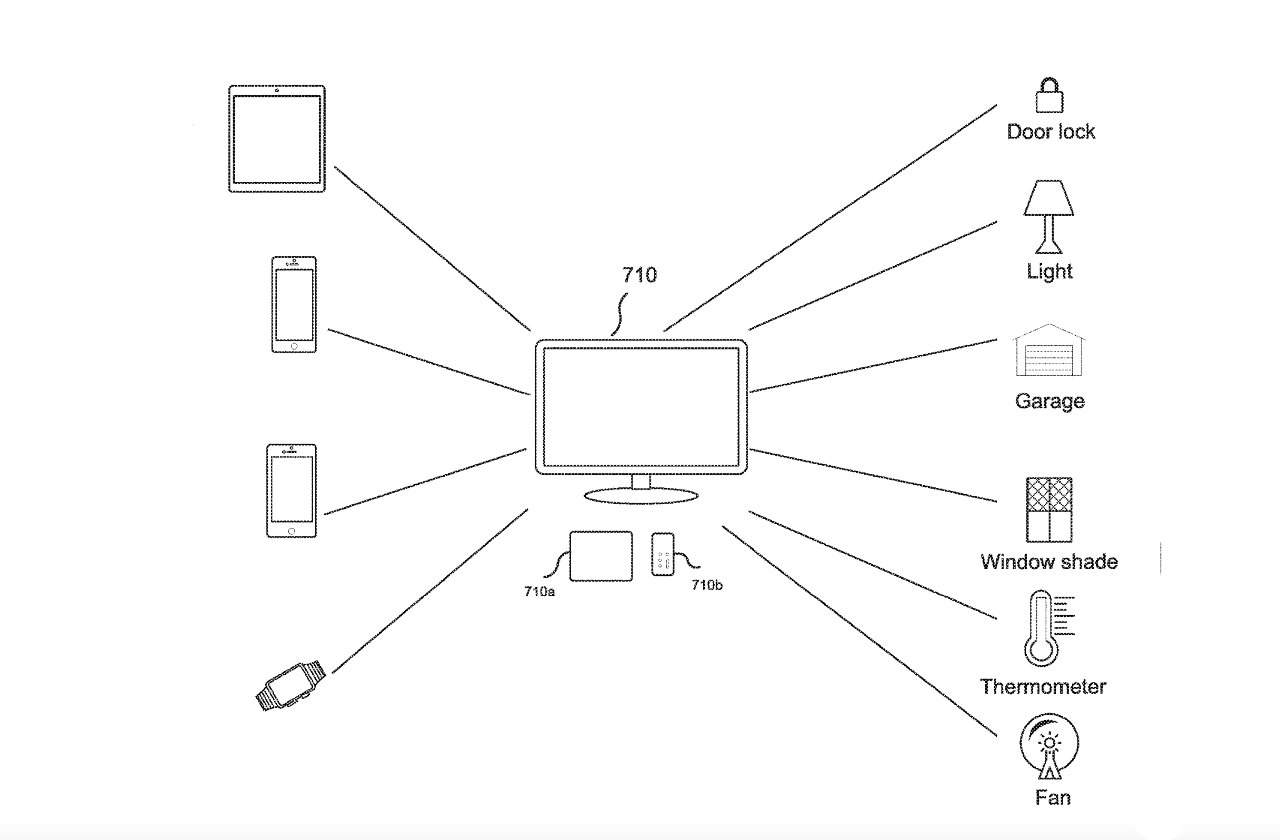

Detail from the patent showing a range of connected devices

Apple doesn't want to remove the user from the decision to turn on a heater, or a light, but rather to remove any effort.

"[When] a user is at a determined location according to detected sensor information, one or more associated accessory devices can be suggested to a user, thereby enhancing a user's experience," says the patent. "Also, rooms and accessory devices associated with the room can be suggested to a user."

"In addition, scenes and accessory devices associated with the scenes can be suggested to the user," it continues. "The suggestions regarding accessory devices and scenes can be learned or can be rule based."

The key word there is learned. Currently an iOS widget can offer you different information at different times of the day, and it learns that, for instance, you check your calendar first thing, but you like news headlines at the end of the day.

That's exactly what Apple wants with your smart home devices. They're smart, you use them all the time, they should just work.

"It would be beneficial if accessory devices and/or scenes can be automatically suggested to a user based on their current location," says the patent, "or if an accessory device is automatically controlled based on a current location of the mobile device based on the user's history of activity with an accessory device."
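The patent does not say how such suggestions would be learned. As a rough illustration only, and not Apple's method, the idea of suggesting an accessory action from a user's history in a location can be sketched as a simple frequency count. The class name `SuggestionModel`, the `min_count` threshold, and the room and action strings are all hypothetical.

```python
from collections import Counter, defaultdict


class SuggestionModel:
    """Toy frequency model: remembers which accessory action a user takes in each room."""

    def __init__(self, min_count=3):
        # Hypothetical threshold: only suggest actions seen at least this often.
        self.min_count = min_count
        self.history = defaultdict(Counter)

    def record(self, room, action):
        """Log that the user performed `action` while located in `room`."""
        self.history[room][action] += 1

    def suggest(self, room):
        """Return the most frequent action for `room`, or None if there is too little history."""
        actions = self.history.get(room)
        if not actions:
            return None
        action, count = actions.most_common(1)[0]
        return action if count >= self.min_count else None


model = SuggestionModel(min_count=3)
for _ in range(4):
    model.record("kitchen", "close garage door")
model.record("kitchen", "turn on kettle")

print(model.suggest("kitchen"))  # the habitual action for this room
print(model.suggest("bedroom"))  # no history recorded for this room
```

A rule-based variant, which the patent also mentions, would replace the learned counts with a fixed mapping from room to action.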

The patent runs to more than 17,000 words, and while naturally a large part of that is to do with privacy, the majority is about precisely detecting a user's presence.

As is typical for a patent trying to cover as broad an idea as possible, it's a little vague on the specifics. But if machine learning can already provide services such as presenting a calendar in the morning, then precise location finding is the part that is new and key.

Geolocation is not yet precise enough

Currently, HomeKit is able to have an automation that, for instance, stops music playing when everyone has left the house. That's chiefly done by determining whether iPhones are connected to the home's Wi-Fi network, though.

Or there are motion sensors which can pick up when you enter a room. But the problem there is that, normally, they can only detect motion such as entering or leaving; they don't actually sense a presence.

Aqara has begun selling what it calls a "human presence" sensor, which is able to detect minute body movements, but its device is not yet commonly available.

Rather than look to any one specific new technology, Apple's patent suggests combining information from many sources, and then making calculations.

"For example, the mobile device can include sensors to measure the distance that a user is walking or stepping as they turn on lights in, for example, a room or a house," says Apple. "Motion detectors and motion sensors in, for example, the accessory devices, can also be used to aggregate data regarding a user's movement in a room or area."
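The patent is vague on how these sources would be combined. One possible reading, offered purely as a sketch under stated assumptions, is a weighted vote in which each source reports the room it believes the user is in. The source names, the weights in `SOURCE_WEIGHTS`, and the function `estimate_room` are hypothetical and do not come from the patent.

```python
from collections import defaultdict

# Hypothetical trust weights per evidence source; a real system would tune these.
SOURCE_WEIGHTS = {
    "uwb": 0.6,            # precise ranging, if the user's devices support it
    "motion_sensor": 0.3,  # fixed sensor in an accessory
    "step_count": 0.1,     # dead reckoning from the phone's motion sensors
}


def estimate_room(readings):
    """Combine (source, room) readings into a best-guess room.

    Returns (room, share_of_total_weight), or (None, 0.0) if no usable evidence.
    """
    scores = defaultdict(float)
    for source, room in readings:
        # Unknown sources contribute nothing rather than raising an error.
        scores[room] += SOURCE_WEIGHTS.get(source, 0.0)
    total = sum(scores.values())
    if total == 0:
        return None, 0.0
    best = max(scores, key=scores.get)
    return best, scores[best] / total


readings = [
    ("uwb", "office"),
    ("motion_sensor", "office"),
    ("step_count", "hallway"),
]
room, confidence = estimate_room(readings)
print(room, round(confidence, 2))
```

An automation could then act only when the confidence share clears some threshold, and fall back to a suggestion otherwise.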

The patent doesn't directly refer to the U1 ultra wideband chip, but if it's in a user's devices, it will clearly be used.

So if you enter a room and also head toward your desk lamp instead of the overhead light, HomeKit could know to turn on only that lamp.

Apple is adding the U1 chip to more devices, and HomeKit can already get information from sources such as motion sensors. Plus, Apple's support of Matter should mean such plans could extend to many more smart home devices.

This patent was previously granted. While no patent is a guarantee of an eventual product, the fact that Apple has re-applied for it means the company is at least refining and updating its research.

Comments

*Walk into a room wearing Apple Glass*

Basically: why not use the one sophisticated sensor a person is wearing + explicit intent vs. tens of fixed sensors + inferred intent?

At least I certainly don’t want to be tracked throughout my home just for the possibility that my home automation might be a wee bit helpful, when in all likelihood it would just be annoying or wrong.

I hate all forms of tracking and adverts, but as I'm a grumpy old boomer, I don't count, do I?

I actually have presence detection in my home right now (not motion detection) and it is truly great that lights follow you as you go through the house. Also things like: if the last person leaves a room whilst the TV is running, pause it, and then restart it when they return.

Wish I could get music to follow me.

Siobhan

"Open up your iPhone’s settings, tap “Privacy & Security,” and scroll down to “Analytics & Improvements.” There you’ll find a setting labeled “Share iPhone Analytics.” Toggle it on and off all you want. Tests from Mysk found that Apple collects device analytics data, no matter how you adjust the control."

I don't do social media

I don't use email on my phone.

I don't use Safari that much either.

When I'm driving, my phone is usually in Airplane mode.

Most of the apps I use are related to charging my EV (different charging networks) or, you know... speaking to someone on the phone.

The opportunity to fire ads at me is limited and I never respond to the few that I see anyway.

My phone is not the device that runs my life, it is a tool that is used when needed.

I've always found the user-accessible temperature and humidity sensors in a speaker to be bizarre world level stuff. Putting them in a smart thermostat or indoor air quality monitor would absolutely make sense. Imagine the confusion that would ensue if the temp sensor on a HomePod or Echo was tied to the temperature controller in your house and something went wrong with the sensing function and your heat control got whacked out. You call out an HVAC service technician and they finally figure out where the disconnect is and tell you something like "Sorry ma'am, but you have to replace the speaker in your family room for the heat to come back on." I can imagine the homeowner's response would be something like: "WTF, and you're probably going to tell me next that I need to replace the fan belt in my Subaru to get my ice maker to work."

I can totally understand where temperature and humidity sensors in a speaker would help with acoustic beamforming because both factors affect sound propagation. I suppose if those sensors were already there for sound processing, exposing them to users would be low-hanging fruit to add a couple of bullet points to the speaker's feature list. But from a smart home system perspective it is simply weird, especially for those folks who subscribe to the single responsibility principle for software design and architecture. Maybe I'm too much of a purist. I will go back to my cave and turn on my TV / lawn sprinkler controller.

I understand that Apple doesn't really want to be a system house for anything other than its own products and services. That makes sense for the way they've been doing business for decades, which is device centric, product centric, and with a service cloud that brings a high level of connectedness and interoperability between their devices, products, and services. Having to physically touch every Apple TV to manage it does not seem like a stretch under their current model. But for home users or business users who have multiple Apple TVs, having to physically visit every one of them to configure settings or force an update is kind of a pain. I only have 6 Apple TVs and it would be great to update any or all of them from one client or configure one of them to my liking, save the settings, and deploy the same configuration to the rest of them. Same deal with Time Machine configuration.

My hope is that the Matter standard will provide a way to build the kind of managed system I would like to have. I just hope that Apple plays along and doesn't lock us into using their Home client the way it currently works. If Matter provides a common, vendor-independent, and platform-independent way to create device profiles that can be used by any Matter-compliant console/client to manage any Matter-compatible device, this could happen. I just don't think Apple is able to put up with the typical timelines and analysis paralysis that sometimes slows standards development to a crawl. We will see.