iOS 18 Project Greymatter will use AI to summarize notifications, articles and much more

Apple's next-gen operating systems will feature Project Greymatter, bringing a multitude of AI-related enhancements. We have new details on AI features planned for Siri, Notes and Messages.

AI will improve several core apps with summarization and transcription features

Following widespread claims and reports about AI-related enhancements in iOS 18, AppleInsider has received additional information about Apple's plans in the area of AI.

People familiar with the matter have revealed that the company is internally testing a variety of new AI-related features ahead of its annual WWDC. Known under the project codename "Greymatter," the company's AI improvements will focus on practical benefits for the end user.

In pre-release versions of Apple's operating systems, the company has been working on a notification summarization feature known as "Greymatter Catch Up." The feature is tied to Siri, meaning that users will be able to request and receive an overview of their recent notifications through the virtual assistant.

Siri is expected to receive significantly updated response generation capabilities, through a new smart response framework, as well as Apple's on-device LLM. When generating replies and summaries, Siri will be able to take into account entities such as people and companies, calendar events, locations, dates, and much more.

In our earlier reports on Safari 18, the Ajax LLM, and the updated Voice Memos app, AppleInsider revealed that Apple plans to introduce AI-powered text summarization and transcription to its built-in applications. We have since learned that the company intends to bring these features to Siri as well.

This ultimately means that Siri will be able to answer queries on-device, create summaries of lengthy articles, or transcribe audio as in the updated Notes or Voice Memos applications. This would all be done through the use of the Ajax LLM or cloud-based processing for more complex tasks.

We were also told that Apple has been testing enhanced and "more natural" voices, along with text-to-speech improvements, which should ultimately result in a significantly better user experience.

Apple has also been working on cross-device media and TV controls for Siri. This feature would allow someone to, for instance, use Siri on their Apple Watch to play music on another device, though the feature is not expected until later in 2024.

The company has decided to embed artificial intelligence into several of its core system applications, with different use cases and tasks in mind. One notable area of improvement has to do with photo editing.

Apple has developed generative AI software for improved image editing

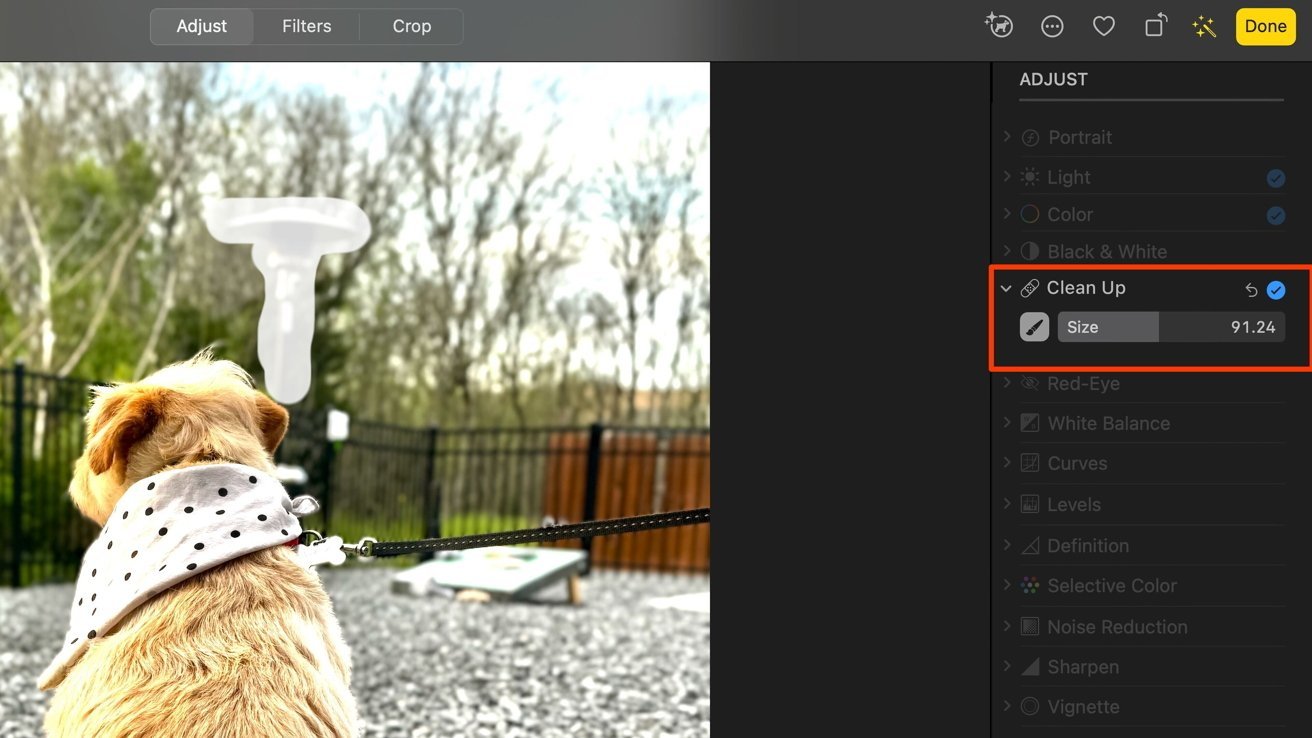

iOS 18 and macOS 15 are expected to bring AI-powered photo editing options to applications such as Photos. Internally, Apple has developed a new Clean Up feature, which will allow users to remove objects from images through the use of generative AI software.

The Clean Up tool will replace Apple's current Retouch tool

Also related to Project Greymatter, the company has created an internal-use application known under the name "Generative Playground." People familiar with the application revealed exclusively to AppleInsider that it can utilize Apple's generative AI software to create and edit images, and that it features iMessage integration in the form of a dedicated app extension.

In Apple's test environments, it's possible to generate an image through artificial intelligence and then send it off through iMessage. There are indications that the company has a similar feature planned for the end users of its operating systems.

This information aligns with another report claiming that users will be able to use AI to generate unique emojis, though there are additional possibilities for image-generation features.

According to people familiar with the matter, pre-release versions of Apple's Notes application also contain references to a Generation Tool, though it is unclear if this tool will generate text or images - as is the case with the Generative Playground app.

Notes will receive AI-powered transcription and summarization, along with Math Notes

Apple has prepared significant enhancements for its built-in Notes application, set to make its debut with iOS 18 and macOS 15. The updated Notes will receive support for in-app audio recording, audio transcription, and LLM-powered summarization.

iOS 18's Notes app will support in-app audio recording, transcription and summarization

Audio recordings, transcriptions, and text-based summaries will all be available within one note, alongside any other material users choose to add. This means that a single note could, for instance, contain a recording of an entire lecture or meeting, complete with whiteboard pictures and text.

These features would turn Notes into a true powerhouse, making it the go-to app for students and business professionals alike. Adding audio-transcription and summarization features will also allow Apple's Notes application to better position itself against rival offerings such as Microsoft's OneNote or Otter.

While support for audio recording at the application level, along with AI-powered audio transcription and summarization features, will greatly improve the Notes app, they're not the only things Apple has been working on.

Math Notes -- create graphs and solve equations through the use of AI

The Notes app will receive an entirely new addition in the form of Math Notes, which will usher in support for proper mathematical notation and enable integration with Apple's new GreyParrot Calculator app. We now have additional details as to what Math Notes will entail.

iOS 18's Notes app will introduce support for AI-assisted audio transcription and Math Notes

People familiar with the new feature have revealed that Math Notes will allow the app to recognize text in the form of mathematical equations and offer solutions to them. Support for graphing expressions is also in the works, meaning we could see something similar to the Grapher app on macOS, but within Notes.

Apple is also working on input-focused enhancements related to mathematics, in the form of a feature known as "Keyboard Math Predictions." AppleInsider was told that the feature would enable the completion of mathematical expressions whenever they're recognized as part of text input.

This means that, within Notes, users would receive an option to auto-complete their mathematical equations in a similar way to how Apple currently offers predictive text or inline completions on iOS - which are also expected to make their way to visionOS later this year.

Apple's visionOS will also see improved integration with Apple's Transformer LM - the predictive text model which offers suggestions as you type. The operating system is also expected to receive a redesigned Voice Commands UI, which serves as an indicator of just how much Apple values input-related enhancements.

The company is also looking to improve user input through the use of so-called "smart replies," which will be available in Messages, Mail, and Siri. This would allow users to reply to messages or emails with basic text-based responses generated instantly by Apple's on-device Ajax LLM.

Apple's AI versus Google Gemini and other third-party products

AI has made its way to virtually every application and device. AI-focused products such as OpenAI's ChatGPT and Google Gemini have seen a significant increase in popularity as well.

Google Gemini is a popular AI tool

While Apple has developed its own AI software to better position itself against the competition, the company's AI is not nearly as impressive as something like Google Gemini Advanced, AppleInsider has learned.

During its annual Google I/O developer conference on May 14, Google showcased an interesting use case for artificial intelligence - where users could ask a question in video form and receive an AI-generated response or suggestion.

As part of the event, Google's AI was shown a video of a broken record player and asked why it wasn't working. The software identified the model of the record player and suggested that it may not be properly balanced, which would explain why it did not work.

The company also announced Google Veo - software that can generate video through the use of artificial intelligence. OpenAI also has its own video-generating model known as Sora.

Apple's Project Greymatter and Ajax LLM cannot generate or process video, meaning that the company's software cannot answer complex video questions about consumer products. This is likely why Apple sought to partner with companies such as Google and OpenAI to reach a licensing agreement and make more features available to its user base.

Apple will compete with products like the Rabbit R1 by offering vertically integrated AI software on established hardware

Relative to physical AI-themed products, such as the Humane AI Pin or Rabbit R1, Apple's AI projects have a significant advantage in that they run on devices users already own. This means that users will not have to purchase a special AI device to enjoy the benefits of artificial intelligence.

Humane's AI Pin and the Rabbit R1 are also commonly regarded as unfinished or partly functional products, and the latter was even revealed to be little more than a custom Android application.

Apple's AI-related projects are expected to make their debut at the company's annual WWDC on June 10, as part of iOS 18 and macOS 15. Updates to the Calendar, Freeform, and System Settings applications are also in the works.


Comments

Which I hope will be part of a better designed UI component for text editing. Right now when I select text on my phone I’m given so many options that I sometimes need to tap 5 times to find the right option.

Which is a challenging component to improve since it needs to stay out of the way of editing and can only take up a little bit of space.

1. Try to complete the request on-device with a simple LLM/SLM.

2. If the request is outside the scope of on-device capabilities, send it to the cloud (which might be the rumored OpenAI partnership, and which Apple most likely cannot compete with).

For some functions (1) without (2) might suffice. It is actually very hard to detect whether a request is better off handled by the Cloud instead because a language model will always try to help the user despite the outcome. As a non-deterministic concept it cannot self-assess its output quality that easily.
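The two steps above can be sketched roughly as follows. This is only an illustration under stated assumptions, not a description of Apple's design: the task names, the character limit, and the `run_on_device` / `run_in_cloud` stand-ins are all hypothetical. Because a language model cannot reliably judge its own output, the sketch decides where to send a request from the request itself (task type and input size) rather than from the model's answer.

```python
from dataclasses import dataclass

# Hypothetical limits for illustration; nothing here reflects Apple's actual design.
ON_DEVICE_TASKS = {"summarize", "smart_reply", "transcribe"}
MAX_ON_DEVICE_CHARS = 4000


@dataclass
class Request:
    task: str
    text: str


def fits_on_device(request: Request) -> bool:
    """Judge scope from the request itself, not from the model's own output."""
    return (
        request.task in ON_DEVICE_TASKS
        and len(request.text) <= MAX_ON_DEVICE_CHARS
    )


def run_on_device(request: Request) -> str:
    # Stand-in for a small local model (hypothetical).
    return f"[on-device] {request.task}"


def run_in_cloud(request: Request) -> str:
    # Stand-in for a larger hosted model (hypothetical).
    return f"[cloud] {request.task}"


def route(request: Request) -> str:
    # Step 1: handle the request locally when it is within on-device scope.
    if fits_on_device(request):
        return run_on_device(request)
    # Step 2: otherwise fall back to the cloud.
    return run_in_cloud(request)
```

A rule-based check like this is crude, but it is deterministic, which sidesteps the self-assessment problem at the cost of occasionally sending a request to the wrong place.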

The last thing I want to see is Apple taking the same approach that Microsoft is taking by requiring substantial upgrades to even very recent hardware platforms to fully enjoy the anticipated benefits of AI.

I'm still waiting to see exactly what Apple can deliver under the AI umbrella, which seems more like a circus tent at times, but if it makes my life better, easier, frees me from repetitive and mundane tasks, or picks up the slack when I'm at risk of falling off the rails, I'm all in. I think the jury is still out when it comes to exactly how AI will personally impact our everyday lives. Huge potential, but what will it really deliver to ordinary users?

Amazon has been delivering AI-based summaries of user reviews in their store for some time. They clearly identify these summaries as being AI-generated, which is nice. Their AI summaries mostly roll up commonly mentioned attributes that appear in user reviews. Do these summaries benefit my purchase decision process? Maybe a little bit because most of the attributes that are pulled out, say performance, have positive and negative counts. The AI summaries do provide a finer-grained assessment of specific product qualities compared to the star ratings alone, which are an aggregate of all plusses and minuses at each star rating. This may save you some time digging into the individual reviews, for example the 1-star reviews, to see what each reviewer didn't like about the product. Without pulling out individual attributes you would have to read through multiple reviews to determine whether the issues mentioned are things that matter to you.

Amazon is obviously doing all of this on their end, not on the device. But to be perfectly clear, the quality of the summaries is heavily dependent on the quality of the inputs that drive the summarization process. What about fake reviews, seeded reviews, or AI-generated reviews? Hmmm.

I really hope that all people who are impacted by AI generated content learn and never forget the meaning of "garbage in - garbage out." A keen awareness of garbage in - garbage out is now a fundamental survival skill that everyone will need to have in order to move forward, not only for buying crap, but for most of what impacts us every minute of every day.