Apple is trying to reinvent group audio chat with no cell or WiFi needed

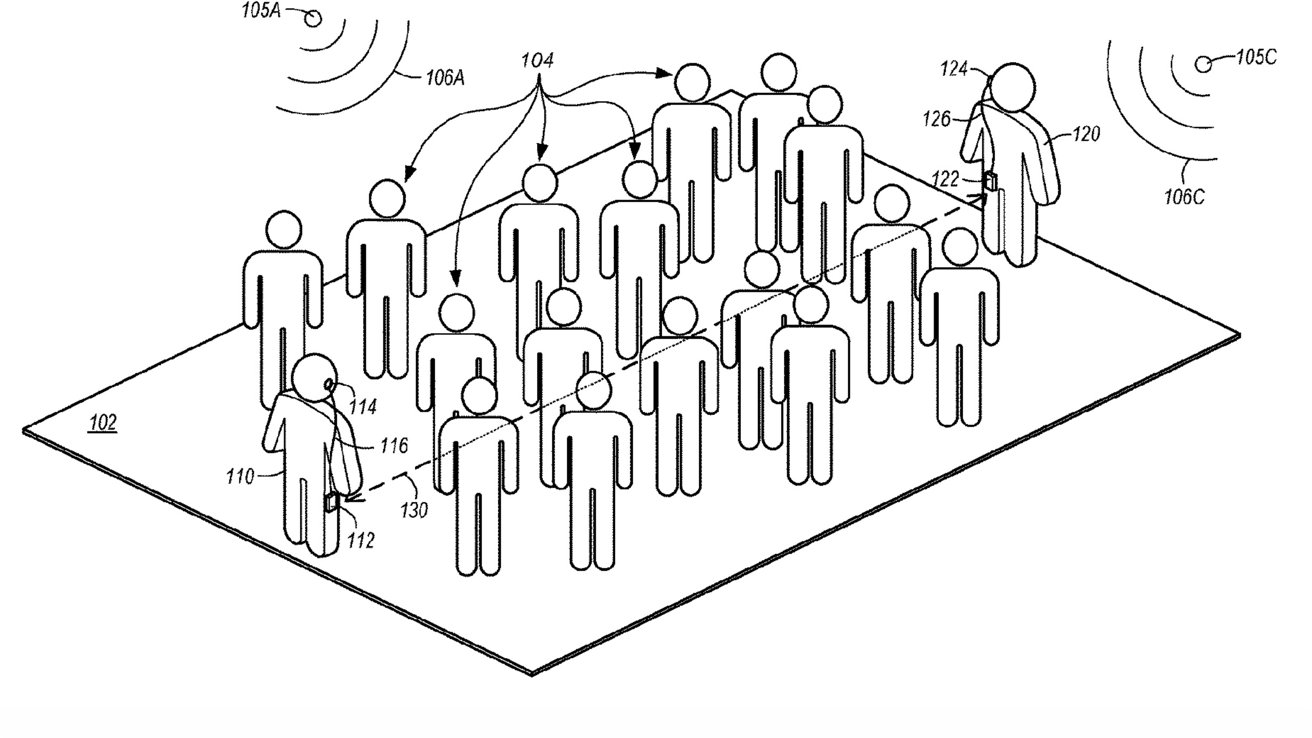

Apple is developing technology that would allow any number of willing people in close proximity to start an audio chat, using only an iPhone and a headset like AirPods, with no WiFi or cell service needed.

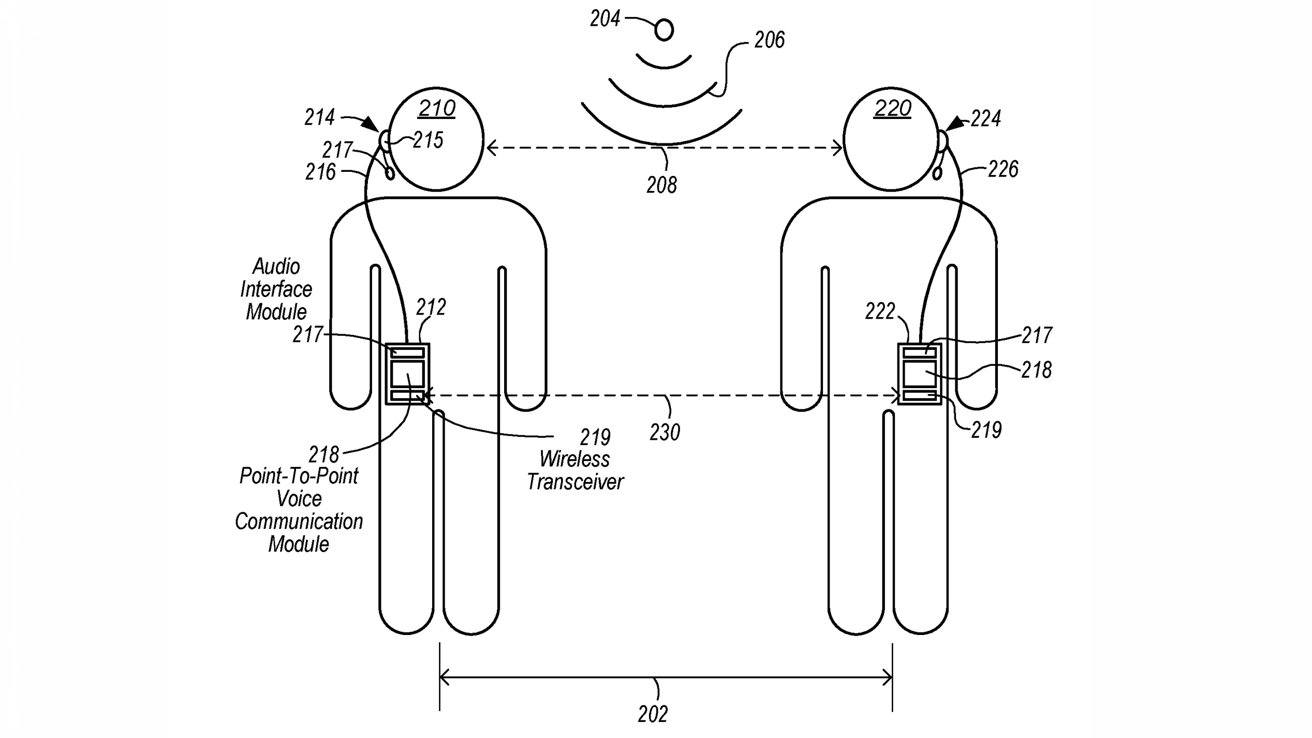

Using an iPhone and a headset, many people could talk with others nearby.

Instantaneous communication like the Walkie-Talkie feature on the Apple Watch would be possible but with groups of people instead of individuals.

Unlike the Walkie-Talkie feature, this new technology would eliminate the roundtrip to Apple's cloud servers, a step that makes Walkie-Talkie unreliable at times. Also, unlike Walkie-Talkie, this would allow groups of willing people to talk to each other simultaneously.

In a newly published patent application, Apple details a system for person-to-person communication in which devices talk to each other directly rather than using the internet to transmit messages.

This technology would usher in a new communication experience, allowing users to select one or more people to talk to with seamless functionality, using only the iPhone in their pocket.

In practice, this would function much like AirDrop, but for conversations. With AirDrop, iPhone users can connect to another user and share files without needing to connect to a network or use the cloud for transmission. This proposed technology would allow one-to-many connections, where all group members can talk to each other at the same time.

For example, a group of people at a crowded festival could communicate with each other to discuss where they are going to meet up. The iPhone would display how close people are based on the same technology that shows the direction and distance to your AirTag.

Apple's Ultra Wideband (UWB) technology already allows the iPhone and Apple Watch to detect the location of a person or object. UWB works a bit like echolocation, sending out radio waves and measuring the return signals.

The UWB chip is what gives iOS and watchOS the ability to get compass-like directions to devices like the AirTag, along with a live update of the distance.
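The echolocation comparison can be made concrete with a time-of-flight calculation. The following is a minimal sketch, assuming a simple two-way ranging exchange in which the responder's processing delay is known. The function name and the timing values are hypothetical and are not taken from the patent application or from any Apple API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # radio waves travel at the speed of light


def two_way_ranging_distance_m(round_trip_s: float, reply_delay_s: float) -> float:
    """Estimate the distance to a responder from one round-trip measurement.

    round_trip_s:  time between sending a pulse and receiving the reply
    reply_delay_s: processing delay inside the responding device (assumed known)
    """
    # The signal covers the distance twice, so halve the time spent in the air.
    time_of_flight_s = (round_trip_s - reply_delay_s) / 2
    if time_of_flight_s < 0:
        raise ValueError("reply delay cannot exceed the round-trip time")
    return SPEED_OF_LIGHT_M_PER_S * time_of_flight_s


# Hypothetical example: a responder 10 m away that takes 100 microseconds to reply.
reply_delay = 100e-6
round_trip = 2 * 10 / SPEED_OF_LIGHT_M_PER_S + reply_delay
print(round(two_way_ranging_distance_m(round_trip, reply_delay), 3))
```

At these distances the time in the air is only tens of nanoseconds, which is why ranging of this kind depends on very precise timing.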

This could also be used to meet new people. Those same festival goers could chat with other willing attendees, sharing tips or providing guidance on the best things to do. The distance and direction to other people could help groups meet up.

Apple's system could allow groups to talk to anyone nearby.

Another powerful use would be in emergency response situations. Teams could coordinate without needing the same physical walkie-talkie system and frequencies.

Cell phone networks are often overloaded or not functional in an emergency. Apple's technology would allow coordination even if cell connections are down.

The patent shows an interface where nearby people are displayed in a proximity circle. People inside the main circle are closest, and people farther away are shown in a larger circle.

The rings of the circle indicate the distance over which this ad-hoc network between people would work.
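One way to picture the proximity-circle interface is as a grouping of nearby people by measured distance. This is a rough sketch only: the ring radii, the `NearbyPerson` type and the function names are all hypothetical, since the patent application as described here gives no numbers or data structures.

```python
from dataclasses import dataclass


@dataclass
class NearbyPerson:
    label: str         # contact name, or a placeholder for an unknown person
    distance_m: float  # estimated distance, for example from UWB ranging


# Hypothetical outer radius of each ring in metres, innermost first.
RING_LIMITS_M = (5.0, 20.0)


def assign_ring(distance_m, limits=RING_LIMITS_M):
    """Return the index of the innermost ring containing the distance, or None if out of range."""
    for index, limit in enumerate(limits):
        if distance_m <= limit:
            return index
    return None


def group_by_ring(people, limits=RING_LIMITS_M):
    """Map each ring index to the labels of the people inside it, nearest first."""
    rings = {index: [] for index in range(len(limits))}
    for person in sorted(people, key=lambda p: p.distance_m):
        ring = assign_ring(person.distance_m, limits)
        if ring is not None:  # people beyond the outer ring are not shown
            rings[ring].append(person.label)
    return rings


people = [
    NearbyPerson("Alex", 3.0),
    NearbyPerson("Unknown person", 12.0),
    NearbyPerson("Sam", 50.0),
    NearbyPerson("Kim", 1.0),
]
print(group_by_ring(people))
```

In this sketch, known and unknown people are treated the same way and differ only in their label, matching the description of unknown people appearing based solely on distance.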

Like AirDrop, the new technology would allow people to add members to the chat even if they're not in a user's contact list. People known to the user would display their names, while unknown people would also appear on the interface based solely on distance.

Simply tapping on a name would add someone to the conversation, and users could tap as many nearby people as they'd like. People could be added to a conversation as they came into range.

Presumably, unknown people could share a contact card and photo, like when you start a Messages conversation with a group.

The patent application specifically shows an iPhone and a headset as the central technology for this to work. Still, it's not difficult to imagine using headsets like the Apple Vision Pro for these point-to-point communications.

As these systems get smaller, perhaps to the size of the much-rumored Apple glasses, having the ability to chat with people in your area directly would become even easier.

The patent application is credited to Esge B. Andersen and Cedrik Bacon. Andersen filed the original patent in 2022 under the same name.

Read on AppleInsider

Comments

I would be willing to bet a dollar to a donut that the devices would link directly using the iPhones' WiFi radios.

My vision was that one could create ad hoc voice groups in small geographies that allowed regular people to have the same sort of always on communications as you see for security teams (like in the movies, but in less exciting situations). My use case was for situations like crowds or walking with a group down a city street where, with noise cancellation, you could have a conversation without yelling or needing to stand right next to someone.

And, in some future vision, if AI got good enough, it might be able to guess whom you were speaking with (maybe the conversation starts with everyone but then narrows to a pair when it's obvious who the participants are). It could also dynamically adjust volume so others can sort of hear but assume they don't really need to pay attention until context makes it clear that something was meant for everyone.

And we know that Bluetooth works fine without WiFi or cellular data connectivity. Just switch your phone to airplane mode then enable Bluetooth.

https://www.rent2way.com/sprint-nextel-walkie-talkie/

Of course Apple can use Coded Phy for increased range, and send BLE advertisements when the screen is off - so their implementation will likely be free of the artificial restrictions on iOS.

There are also two levels of 'long range' in Bluetooth 5 - Coded Phy S2 and S8. They increase the range between handsets to ~100m. Apple doesn't expose these to developers for use in iOS - but all iPhones since iPhone X support it (they were briefly available in an iOS 13 beta, I think). Android does have APIs for using these long range modes. From first-hand experiments - Bluetooth LE Coded Phy S8 travels further than a WiFi hotspot from the same phone.

Another thing to bear in mind is that the codecs and bitrates chosen for Bluetooth headsets are mainly chosen to optimise battery life (and component cost) - so balancing the power needed for the codec complexity in the MCU to compress the audio vs the power needed to transmit the data. If you've already got a powerful CPU and a comparatively huge battery - and aren't trying to make the whole thing fit in someone's ear - you can make some different codec choices and really get the bitrate down on a phone.

The Opus codec ( https://opus-codec.org ) at 64kbps (around half of Coded Phy S8's 125kbps) is pretty much transparent (to me) 48kHz Stereo - which would be fine for a one-to-one call. This codec is used by Google's Pixel Buds Pro for its spatial audio implementation.

For group calls - and bearing in mind modern phones do not lack in compute - if you can tolerate a drop to wide-band (which most 'Classic' Bluetooth headphones would force anyway) Google has made their Lyra V2 codec open (and MIT licenced) - which goes down to just 3.2kbps - which would allow 40 channels on Coded Phy S8. It's worth checking the samples at ( https://opensource.googleblog.com/2022/09/lyra-v2-a-better-faster-and-more-versatile-speech-codec.html ).
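The channel count is a simple division of link rate by codec bitrate, which comes out just under 40. A back-of-envelope sketch using the figures quoted in this thread follows; the function name is made up for illustration, and the result is an upper bound because it ignores packet headers, retransmissions and any other protocol overhead.

```python
import math


def max_streams(link_kbps: float, codec_kbps: float) -> int:
    """Whole number of codec streams that fit in a link, ignoring all protocol overhead."""
    return math.floor(link_kbps / codec_kbps)


CODED_PHY_S8_KBPS = 125  # raw rate quoted above for Coded Phy S8

print(max_streams(CODED_PHY_S8_KBPS, 3.2))  # Lyra V2 at 3.2kbps -> 39
print(max_streams(CODED_PHY_S8_KBPS, 64))   # Opus at 64kbps -> 1
```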

Meta also has MLow - which at 6kbps also does wide-band, but at a lower complexity than Opus. Meta seem to be keeping this proprietary though ( https://engineering.fb.com/2024/06/13/web/mlow-metas-low-bitrate-audio-codec/ )

Another low bitrate codec is LMCodec (https://arxiv.org/pdf/2303.12984). This goes down to 0.5kbps, and still sounds very acceptable (there was a project page with very impressive samples at ( https://mjenrungrot.github.io/chrome-media-audio-papers/publications/lmcodec/ ) - but it's doing odd things today). Voice codecs below 0.7kbps come under export controls in the UK ( https://assets.publishing.service.gov.uk/media/660d281067958c001f365abe/uk-strategic-export-control-list.pdf ), and I've heard 3.2kbps for some other countries, so I'm not expecting to see that appear in the wild.

Google has made their Lyra V2 codec open (and Apache-2.0 licenced, not MIT as stated above)

Anyhow - no - not confused, perhaps not being clear though - I don't think we're in disagreement. Different audio codecs have different processing costs (and also latency), and for a given frequency can give higher or lower bitrates, or for a given bitrate give higher or lower signal bandwidth. As a very crude rule of thumb - You can pick two from:

- Low bitrate (Small packets on the network->short transmit duration->low radio transmit power consumption)

- High signal bandwidth (FullBand vs NarrowBand)

- Low processing cost (doesn't require expensive chips or a large battery to power it)

... depending on which codec you choose to encode/decode the audio. Decode is often simpler than Encode too - so on headphones for instance, it may only be feasible to receive in one codec (such as FullBand AAC), and transmit in another (such as WideBand SBC - which also has a lower latency).

My main point was that audio codecs are chosen to suit. Bluetooth Earbuds have tiny batteries, so they use codecs that optimise for power usage, and as they require FullBand (48kHz) audio for listening to music - the encoded bitrate is quite high. If you're transmitting from phone to phone though - with comparatively huge batteries and far more processing power - the constraints are different. You can use codecs that optimise for low bitrate instead, like LMCodec, and get a really low bitrate (0.5kbps) and still have WideBand (16kHz) voice - which is massively better than say, the G.711 codec, which is incredibly simple (low processing cost), but has a pretty high bitrate (64kbps) and only gives you NarrowBand (8kHz).
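To put the bitrate side of that trade-off in numbers, here is a small sketch that converts the bitrates quoted in this thread into the amount of data one minute of speech produces. The dictionary and function names are made up for illustration, and the figures count audio payload only, with no packet overhead.

```python
# Bitrates in kbps as quoted in this thread.
CODEC_KBPS = {
    "G.711 (NarrowBand)": 64.0,
    "MLow (WideBand)": 6.0,
    "Lyra V2 (WideBand)": 3.2,
    "LMCodec (WideBand)": 0.5,
}


def kilobytes_per_minute(bitrate_kbps: float) -> float:
    """Audio payload for one minute of speech, in kilobytes (1 kB = 1000 bytes)."""
    bits = bitrate_kbps * 1000 * 60  # kbps -> bits per minute
    return bits / 8 / 1000           # bits -> bytes -> kilobytes


for name, kbps in CODEC_KBPS.items():
    print(f"{name}: {kilobytes_per_minute(kbps):.2f} kB per minute")
```

On these figures G.711 produces 480 kB per minute against 3.75 kB for LMCodec, a factor of 128, while LMCodec still carries the wider voice band.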

In my opinion, Apple should consider reintroducing APIs for Coded Phy, as there are numerous applications that could benefit from the extended range it offers. Personally, I was working on an app for auto-tracking using an iPhone and Apple Watch. Now, with the addition of Apple DockKit and the Vision framework, this idea is even more appealing. You can combine object tracking with the Vision API and enhance it with BLE when the tracked subject moves out of range or behind an obstacle.

If you're familiar with the Soloshot product (https://soloshot.com/), which uses BLE for auto-tracking with a tag, you'll know the concept is great, but the product itself is difficult to use and suffers from poor hardware and software quality. As a surfer, I would love to see an app that can track me using person detection (via pose or other methods) and location with an Apple Watch.