Apple's fix for bad AI notification summaries won't actually improve results

In response to BBC concerns over incorrect Apple Intelligence notification summaries, Apple has promised to make it clearer when AI is used.

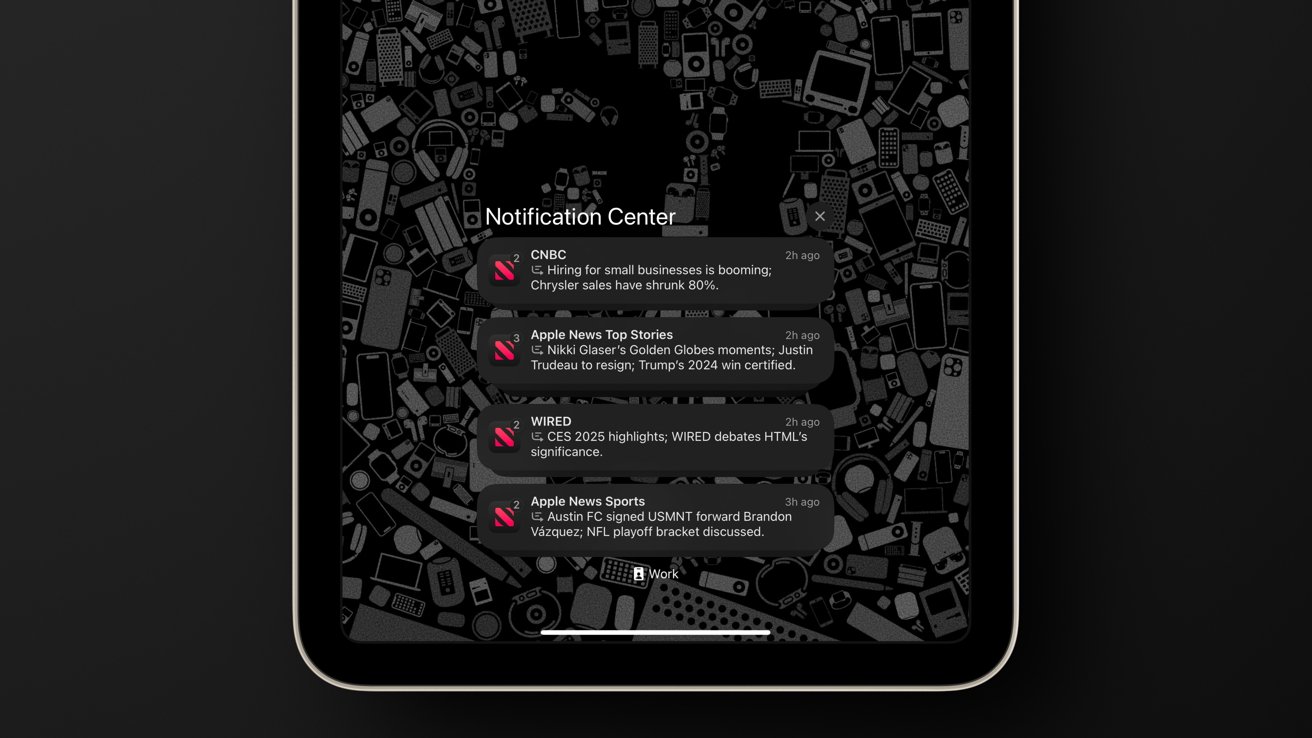

AI summaries don't always get the details right

Apple Intelligence rolled out to the public in October 2024 in a very early state. One of the primary features, notification summaries, often stumbles over nuances in language, which leads to incorrect or outlandish results.

Rather than promise to make notification summaries better, Apple has shared, through a statement to the BBC, that it will make it clearer when AI is used to generate a notification summary. The fix is meant to ensure users know that what they see may not be 100% accurate.

Apple's response comes after repeated complaints from the publication. Summaries have shown people being murdered, coming out as gay, or other shocking combinations that stun users and content publishers.

The fix will likely help reduce some of the concerns, as the current UI shows a tiny symbol of two lines and a curved arrow when a summary is performed. If users know a summary is AI-generated before they read it, it may help reduce confusion.

Apple could have promised to "fix" Apple Intelligence summaries, but it isn't as simple as that. The company is always working to improve the model, but there will always be a chance that different notifications coming in could create disastrous results.

A recent example being shared to social media is a summary suggesting Nikki Glaser was killed at the Golden Globes. The real headline read "Nikki Glaser killed as host of the Golden Globes."

As many non-native English speakers will tell you, there are way too many idioms and alternative meanings in the language. An artificial language generation tool looking for keywords to use in a summary can't pick up on subtleties or sarcasm.

It seems doubtful that Apple's fix will satisfy the complainers, but there is another option for users. Go to Settings -> Notifications -> Summarize Notifications to turn off the feature or at least toggle which apps use it.

Read on AppleInsider

Comments

@kkqd1337 never said Apple themselves were processing the data. It is being processed by on-device AI. You and Apple literally call it AI notification summaries.

I think the issue is that the summary is sometimes so short, and so limited in the number of words it can use, that it just picks what it thinks are the important points (in the previous BBC article, someone's name and "gay") and mangles them together, which completely loses context and often reality.

One thing that is crystal clear: LLM-powered AI is utterly devoid of common sense. Which actually makes sense if you think about it.

Consumer facing AI is really just a probability estimator based on data gathered from the Internet. There's a lot of B.S., parody, misinformation, whatever on the Internet and quite a few people with zero common sense. Boil all of this down and much of what floats to the top is nonsense.

There are also language subtleties that LLMs don't understand. Satire, sarcasm, parody, April Fools, whatever. In fact, that's why there are emojis and smileys: to communicate meanings that might be absent in written communication. Unfortunately, not everyone uses these additions consistently.

So "yeah, right" gets interpreted by LLMs as "yes, correct" because they are too dumb to understand sarcasm.

AI can't figure out whether something is poppycock or not. Hell, I can scan through my e-mail inboxes (including junk mail) and identify 98.5% of junk mail almost instantaneously without even opening up the message. There is no consumer facing AI that can do this. Because AI has zero common sense.

So you have AI generating notification summaries in 2025. They are going to be garbage because LLMs are trained off of what these service providers find online.

This is the classic computer science acronym: GIGO (Garbage In, Garbage Out). Only AI is doing this for us in 2025, not humans. Wow, progress.

Best course of action for current AI-powered notification summaries is to turn them off. 2025 LLMs simply aren't sophisticated enough to make sense of these language nuances. So you spend more effort deciphering/laughing at AI summaries versus just reading the original human-authored headlines/articles.

It's becoming more and more clear that AI's capabilities are actually very limited and require a *large* amount of user review, oversight, and often override. It's clearly more useful for things like mathematics and engineering problems at this point. It's actually pretty poor at language-based tasks.

Remember everyone, treat AI/LLM chatbots as what they truly are: alpha software. Not even beta. That's the sophistication of most of this stuff in most situations at this time. It'll get better in time but it will probably take longer than the folks at OpenAI, xAI, Google, Meta, Amazon, Apple, whoever think it will take.

Already we are seeing an enormous slowdown in LLM improvements. I'm no AI scientist myself but I have a strong suspicion that LLMs are a dead end, at least with the way current research is being done with today's popular algorithms.

And there's always the ever looming problem with GIGO. AI services need to find more accurate datasets. Just spidering the entire Internet is a great way for them to generate a large pile of worthless crap. All these weird AI hallucinations are a symptom of this fundamental issue.

At least for now, comedians don't need to create parodies of AI. Actual AI is already a joke so frequently that the media doesn't need to go far to find something preposterous that a working AI actually spewed forth.

Note that I still believe that AI has some bona fide real-world uses -- mostly in commercial/enterprise sectors -- especially when it revolves around a mostly numerical dataset or engineering problem (like semiconductor chip layout).

You don't understand why BBC is upset that Apple Intelligence is mischaracterizing BBC articles? How would you feel if someone summarized this very article as "Apple refuses to fix BBC article summaries"?

Again this goes back to the GIGO (Garbage In, Garbage Out) concept.

Worse, there are considerable differences between American English and British English. The initial version of Apple Intelligence is being written by American software engineers using an LLM that is highly skewed toward American English. This is why the BBC headlines are being butchered more than headlines from mainstream American media sources.

I don't know of an easy fix. And apparently neither does Apple because their response doesn't fix the problem. It's just a more obvious acknowledgement of Apple Intelligence's primitive capabilities.

As I mentioned earlier, AI has zero common sense. A relatively intelligent, well read, and thoughtful person can read a BBC headline and say "well, I think it actually means something considerably different than the literal prose". Brits do this all the time, using words that might mean the opposite of what they are trying to convey, because other Brits will understand.

If you source news from various media outlets, with a combination of American, British, and other English variants, I would expect these sorts of AI news summary errors to continue for the time being.

Not convinced that today's LLMs are sophisticated enough to discern and correctly process the nuanced differences. LLMs seem to do much better with numbers and hard science (mathematics, physics, engineering, maybe chemistry) where data is quantitative.

As 2025 goes on, I suspect we will see more shortcomings and clearer examples of limitations of today's AI implementations. That is, AI will bungle more stuff this year, not just news headline summaries.

In a couple of years it is likely that some of the tasks that AI is doing now will be abandoned because AI simply creates more problems than it fixes. Luckily iOS 18 allows users to disable AI notification summaries.