Siri in iOS 18.4 is getting worse before it gets better

Never mind that the beta of iOS 18.4 doesn't yet include the promised Siri improvements. Apple's voice assistant is now poorer than ever.

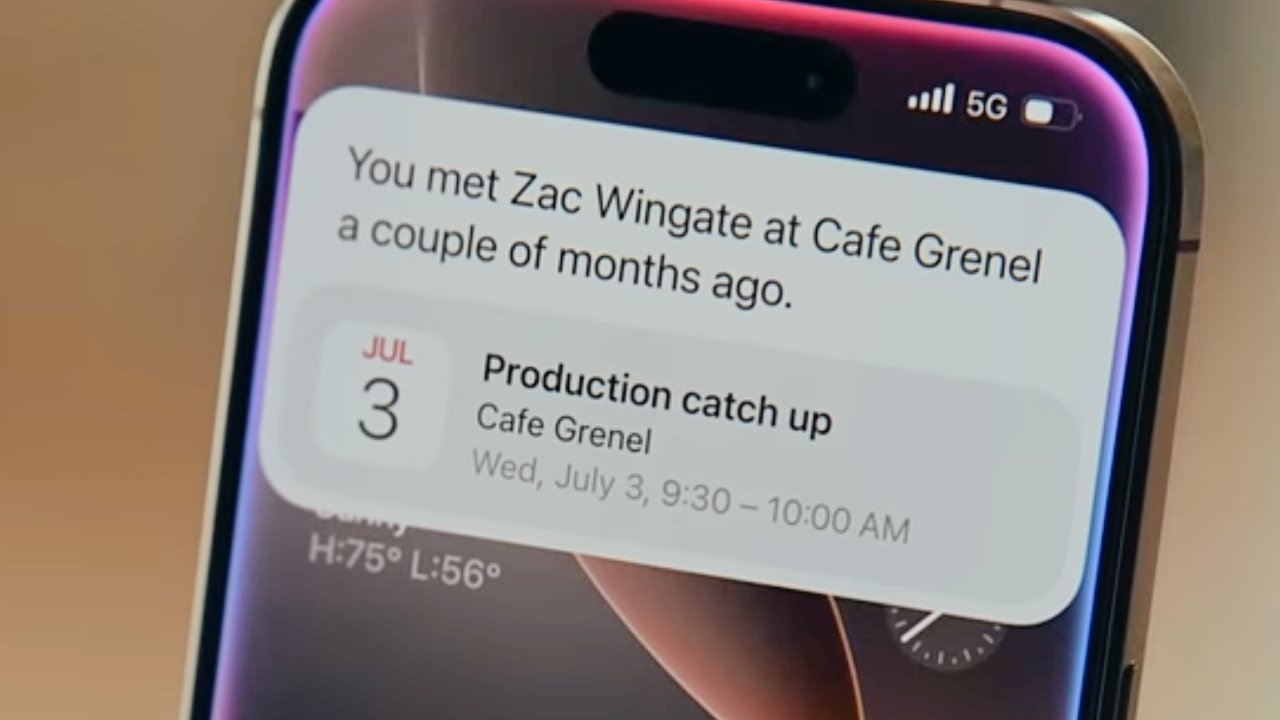

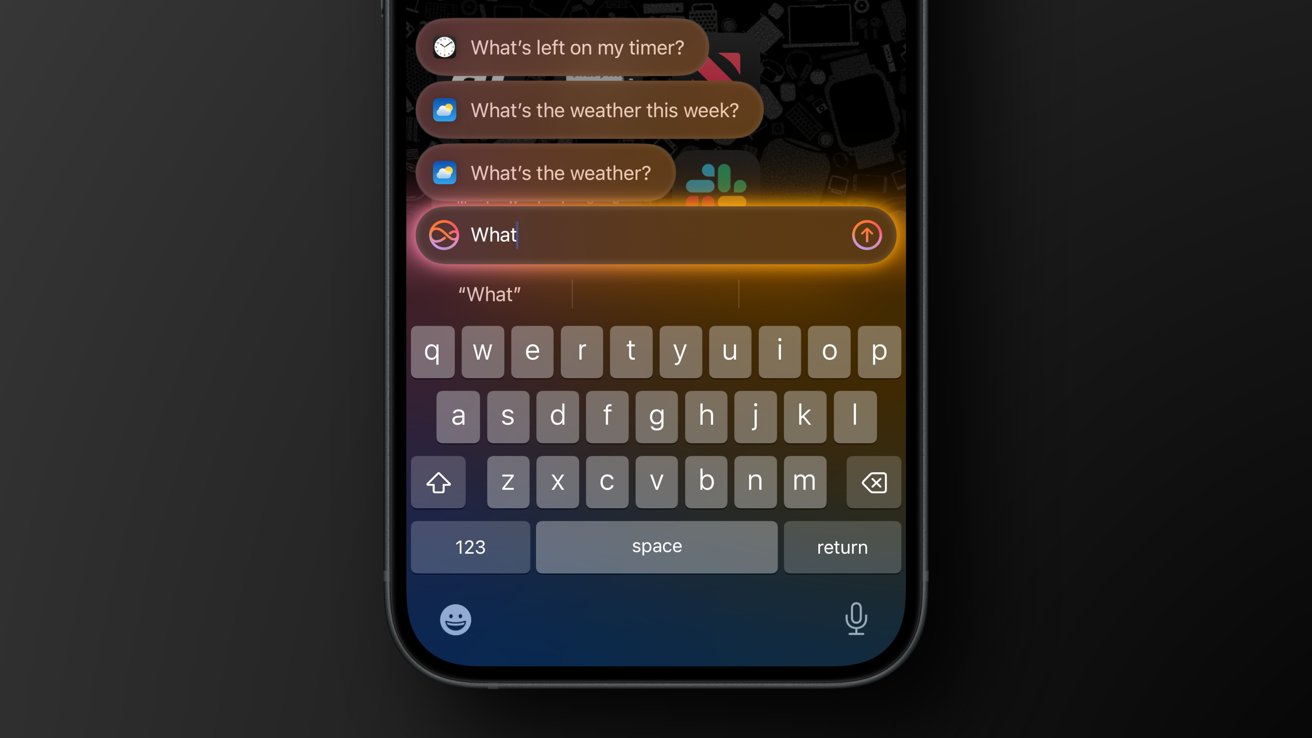

The type of question Apple promises Siri will be able to answer with Apple Intelligence. Image source: Apple

Right from the start of Apple Intelligence, a key part of its promise has been that Siri will be radically improved. Specifically, Apple Intelligence would give Siri a better ability to follow a series of questions, and even understand when we change our mind and correct ourselves.

Siri will be able to use the personal information in our devices, such as our calendars. And while never exactly becoming sentient, Siri will, with permission, be able to relay queries to ChatGPT if more resources are needed.

All of this was promised and still is, but practically from that moment, Apple has said the improvements would start with iOS 18.4.

That iOS 18.4 has now entered developer beta, and there is no sign of an improved Siri -- beyond its very nice new round-screen animation. Things slip, especially in betas, and there were already reports of problems delaying Siri, so as disappointing as it is to have to wait longer, it's not a surprise.

Siri has a new glow animation, but not much else

What is a surprise is that somehow the existing Siri is definitely worse than it used to be. In one case during AppleInsider testing, the problem was that Siri erroneously wanted to pass a personal information request on to ChatGPT, as if that functionality were in place and this was the correct thing to do.

But the rest of the time, Siri is simply often wrong.

However, as great as Siri can be, it does have the frustrating habit of suddenly being unable to understand something it has successfully parsed many times before. So your mileage may vary, but in testing we asked Siri the same questions over a couple of days and consistently got the same incorrect responses.

Keeping it simple

If you ask Siri, "What's the rest of my day look like?" then it will tell you what's left on your calendar for today. Or it did.

Ask Siri under iOS 18.4 and most of the time you get "You have 25 events from today until March 17." The end date keeps moving -- it's always a month out -- but the number of events is always 25, seemingly whether that's correct or not.

Type to Siri works great, but Siri doesn't

But of course, the key thing is that you ask about today, and you get told the next four weeks instead. Just occasionally and for no apparent reason, the same request doesn't get you the wrong verbal answer.

Instead, it gives you the wrong answer visually: you may get a dialog box showing today's and the next few days' events.

"Siri, what am I doing on my birthday?" ought to be a straightforward request because iOS has the user's date of birth in their contact card or health data, and it has the calendar. But no, "You have 25 events from today to March 17."

"When's my next trip?" also should be able to check the calendar, and it does. But it returns "There's nothing called 'trip' in your calendar."

Or rather, you can first get the absolutely maddening response of, "You'll have to unlock your iPhone first." Since there is a switch in Settings called Allow Siri While Locked, this is right up there with how Siri will sometimes say it can't give you Apple Maps directions while you're in a car.

Half integrated with ChatGPT

Much of the improvement with Siri is supposed to come with ChatGPT, and that isn't here yet -- except iOS thinks it is. You can try something that Siri definitely can't do now and should be able to do eventually, but which it has a go at answering anyway.

Apple Intelligence works with ChatGPT

"Siri, when was I last in Switzerland?" That's using personal on-device data, again really just checking the calendar. But instead, you get the prompt -- "Do you want me to use ChatGPT to answer that?"

If you then say why not, go on then, good luck with it, then Siri passes the request to ChatGPT. That either comes back saying it doesn't do personal information, duh, or sometimes asks you to tell it yourself when you were last there.

Mind you, it seems to always ask you that through a text prompt, and Siri is inconsistent here. Sometimes asking Siri to "Delete all my alarms" will solely get you a text prompt asking if you're sure, but "what's 4 plus 3" gets both text and a spoken response.

Then ChatGPT is also just in an odd place now. If you go to Apple's own support page about using Siri and recite all of its examples to your iPhone, most of them work -- but not in the way you might expect.

It used to be, for instance, that Siri would do a web search if you asked, as Apple suggests, "Who made the first rocket that went to space?" Now if you ask that, you are instead asked permission to send the request to ChatGPT.

Sending images and text to ChatGPT is a feature of Apple Intelligence, but also a crutch

The next suggestion in Apple's list, though, is "How do you say 'thank you' in Mandarin?" and the answer depends on whether you've just used ChatGPT or not.

If you haven't, Siri asks which version of Mandarin you want, then audibly pronounces the word. If on your immediately previous request you agreed to use ChatGPT, though, Siri now uses it again without asking.

So suddenly you're getting the notification "Working with ChatGPT" and no option to change that. Plus, ChatGPT gives you the answer to that question in text on screen, rather than pronouncing it aloud.

All of which means that Siri can be flat out wrong, or it can give you different answers depending on the sequence in which you ask your questions.

We are so far away from being able to ask "Siri, what's the name of the guy I had a meeting with a couple of months ago at Cafe Grenel?" -- like Apple has been advertising.

Some signs of improvement

To be fair, you can never know entirely for sure whether an issue with Siri is down to your pronunciation or the load on Apple's servers at the time you ask. But you can know for certain when a request keeps going right or wrong.

Or, indeed, when it suddenly works.

"Siri, set a timer for 10 minutes," has been known to instead set the timer to something random, such as 7 hours, 16 minutes, and 9 seconds. Since iOS 18.4, AppleInsider testing has not shown that problem again; the timer has always set itself correctly.

So there's that. But then there's all the rest of this about inconsistencies, wrong answers, and the will-it-never-be-fixed "You'll have to unlock your iPhone first."

Apple is right to regard the improved Siri as a great and persuasive example of Apple Intelligence, because it's the part that most users will benefit from, most visibly and most immediately. And it's nobody's fault that the improvements have been delayed.

Apple Intelligence is here, but Siri hasn't benefited from it yet

But Apple ran that ad about whoever the guy was from Cafe Grenel five months ago. Apple was telling us Siri is fantastically improved before it is.

Even this doesn't account for how Siri is worse than before, but new Apple Intelligence buyers will be disappointed. Long-time Apple users will understand things can get delayed, but still, there are limits.

Siri didn't get better at the start of Apple Intelligence as Apple's ads promised. It hasn't gotten better with the first beta release of iOS 18.4.

At some point, it will surely, hopefully, improve exactly as has so long been rumored -- but by then, there are bound to be users who won't ever try Siri again.


Comments

How exactly is that quality tech journalism?

The author is writing about observations from different sources as well as within the AppleInsider team that brings a variety of experience and skills to the table.

As for scrapping Siri, starting over, and waiting for a new one, as some elsewhere online have implied, Apple has to keep some core functions working that have been useful in things such as 'Apple Home' functions, albeit with new hiccups.

If AI is being trained on the vast ocean of garbage that resides in cyberspace, how exactly will it be able to attain near-perfect accuracy? In fact, what is AI's method for determining whether a piece of information is true or false? No, the most common or popular answer or opinion doesn't work in a country where the stupid and ignorant vastly outnumber the wise and learned.

AI is by far the most vast and pervasive instantiation of the Dunning-Kruger effect that humanity has ever seen. With the possible exceptions of Trump and Musk.

I read a book by some tech researcher who said "if you can replace a neuron with a man-made nano-device that did everything a neuron did, then would that brain function any differently?" (Or words to that effect.) He then added that logically then, you should be able to replace every neuron in the human brain with the same nano-device and have an artificial brain and AI that is indistinguishable from the human variety. That is his argument for why AI will eventually instantiate human intelligence.

Of course the main stumbling block in his argument is that he assumed that a man-made nano-device that does EVERYTHING that a neuron does is unquestionably attainable. We don't even know how neurons work. We don't even know if we will ever know enough to truly understand how a neuron works. This is the fallacy of assuming infinite future knowledge that a lot of futurists including AI advocates unwittingly commit.

Yes, R-AI, AI with Reasoning, would solve a lot of the criticisms leveled at AI. Only problem is, no one really knows how to get a machine to truly reason the way the smarter segment of the human population does. We don't even know if that is achievable, but some just power through with their arguments by treating it as a given. (Reasoning like the other, much larger, segment of humanity, on the other hand -- well, AI has already achieved that.)

I only use it for a count down timer for my coffee machine so I can work in the office and when the timer goes off on my watch, I go get a fresh cup and take it to my wife for her first cup of the day while in bed.

Based upon my limited use, Siri works.

It's hard not to think that there is truly something about Siri that is irreparably broken. One can only imagine how much money and resources Apple has thrown at this problem by now. How can we still be HERE after 15 years? How could the "Siri promised land" of 18.4 possibly be such an embarrassment upon its first release? How could Siri actually be WORSE? "Delayed" is an acceptable excuse for the launch of an all-new feature. But it's no excuse for Siri, who's about to celebrate her quinceañera.