OpenAI wants the US government to legalize theft to reach the AI promised land

OpenAI may have already crawled the internet for all the world's data to train ChatGPT, but it seems that isn't enough as it wants protection from copyright holders to allow it to continue stealing everything that both is and isn't nailed down.

OpenAI proposes freedom of intelligence in United States. Image source: ChatGPT

The latest manufactured goalpost OpenAI has set, dubbed "AGI," can't be reached unless the company is free to do whatever it takes to steal everything good and turn it into AI slop. At least, that's what the latest OpenAI submission to the United States Government suggests.

An OpenAI proposal submitted regarding President Trump's executive order on sustaining America's global dominance explains how the administration's boogeyman might overtake the US in the AI race. It seems that the alleged theft of copyrighted material by China-based LLMs puts them at an advantage because OpenAI has to follow the law.

The proposal seems to be that OpenAI and the federal government go into a kind of partnership that enables OpenAI to avoid any state-mandated laws. Otherwise, the proposal alleges that the United States will lose the AI race.

And to prove its point, OpenAI says the US will lose that race to the US government's favorite boogeyman -- China.

One bullet point reads:

Its ability to benefit from copyright arbitrage being created by democratic nations that do not clearly protect AI training by statute, like the US, or that reduce the amount of training data through an opt-out regime for copyright holders, like the EU. The PRC is unlikely to respect the IP regimes of any of such nations for the training of its AI systems, but already likely has access to all the same data, putting American AI labs at a comparative disadvantage while gaining little in the way of protections for the original IP creators.

Such a partnership would protect OpenAI from the 781 and counting AI-related bills proposed at the state level. AI companies could volunteer for such a partnership to seek exemption from the state laws.

The proposal also suggests that China and countries like it that don't align with democratic values be cut off from AI built in the United States. This would mean Apple's work with Alibaba to bring Apple Intelligence to China would be halted if required.

It also calls for a total ban on using equipment produced in China in goods that would be sold to Americans or used by American AI companies.

The section on copyright more or less calls for the total abandonment of any restriction on access to information. According to the proposal, copyright owners shouldn't be allowed to opt out of having their content stolen to train AI. It even suggests that the US should step in and address restrictions imposed elsewhere, such as in the EU.

The next proposal centers around infrastructure. OpenAI wants to create government incentives (that would benefit them) to build in the US.

Plus, OpenAI wants to digitize all the government information that is currently still in analog form. Otherwise, it couldn't crawl it to train ChatGPT.

Finally, the proposal suggests the US government needs to implement AI across the board. This includes national security tasks and classified nuclear tasks.

The lunacy of OpenAI

One of the US Navy nuclear trained staffers here at AppleInsider pointed this out to me, cackling as he did so. As the other half of the nuclear-trained personnel on staff, I had to join him in laughing. It's just not possible for so many reasons.

AI is a tool, not some kind of cataclysmic event in human history

Admiral Hyman G. Rickover, the father of the nuclear Navy, helped build not just the mechanical and electrical systems we still use today, but the policies and procedures as well. One of his most important mantras besides continuous training was that everything needed to be done by humans.

Automation of engineering tasks is one of the reasons the Soviets lost about a submarine a year to accidents during the height of the Cold War.

When you remove humans from a system, you start to remove the chain of accountability. And the government must function with accountability, especially when dealing with nuclear power or arms.

That aside, there are an incredible number of inconsistencies with the proposals laid out by OpenAI. Using China as a boogeyman only to propose building the United States policy on AI around China's is hypocritical and dangerous.

OpenAI has yet to actually explain how AI will shape our future beyond sharing outlandish concepts from science fiction about possible outcomes. The company isn't building a sentient, thinking computer, it won't replace the workforce, and it isn't going to fundamentally transform society.

It's a really nice hammer, but that's about it. Humans need tools -- they make things easier, but we can't pretend these tools are replacements for humans.

Yes, of course, the innovations created around AI, the increased efficiency of some systems, and the inevitable advancement of technology will render some jobs obsolete. However, that's not the same as the dystopian promise OpenAI keeps espousing of ending the need for work.

DeepSeek is an existential crisis for OpenAI's bottom line, not Democracy

Read between the lines of this proposal, and it says something more like this:

OpenAI got caught by surprise when DeepSeek released a model that was much more efficient and undermined its previous claims. So, with a new America-first administration, OpenAI is hoping it can convince regulators to ignore laws in the name of American exceptionalism.

The document argues that authoritarian regimes that let DeepSeek ignore laws will enable it to get ahead. So, OpenAI needs the United States to act like an authoritarian regime and ensure it can compete without laws getting in the way.

AI has an intelligence problem

Of course, the proposal is filled with the usual self-importance evoked by OpenAI. It seems to believe its own nonsense about where this so-called "artificial intelligence" technology will take us.

Someone should warn OpenAI that hallucinations like this won't get you sympathy from the US government

It had to adjust goalposts to suggest that the term "AI" wasn't the sentient computer it promised us. No, now we've got two other industry terms to target: Artificial General Intelligence and Artificial Superintelligence.

To be clear, none of this is actual intelligence. Your devices aren't "thinking" any more than a calculator is. It is just a much better evolution of what we had before.

Computers used to be more binary. A given input would give a predetermined output. Then, branching allowed more outputs to occur for a given input depending on conditions.

That expanded until we got to the modern definition of machine learning. That technology is still fairly deterministic, meaning you expect to get the same output for the given inputs.

The next step past that was generative technology. It uses even bigger data sets than ML, and outputs are not deterministic. Algorithms attempt to predict what the output should be based on patterns in the data.

That's why we still sarcastically refer to AI as fancy autocomplete. Generating text just predicts what the next token, a word or piece of a word, is most likely to be. Generating images or video does the same, but with pixels or frames.
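The "fancy autocomplete" idea can be illustrated with a toy sketch. This is not how ChatGPT or any production model is built: it assumes a tiny made-up corpus and simply counts which word most often follows another. The names `train_bigrams` and `predict_next` are hypothetical and exist only for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)

# "the" is followed by "cat" twice and "mat" once, so "cat" wins.
print(predict_next(model, "the"))
```

Always picking the most frequent follower makes this toy deterministic. Sampling from the counts instead would give different outputs for the same input, which is closer to the non-deterministic behavior described above.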

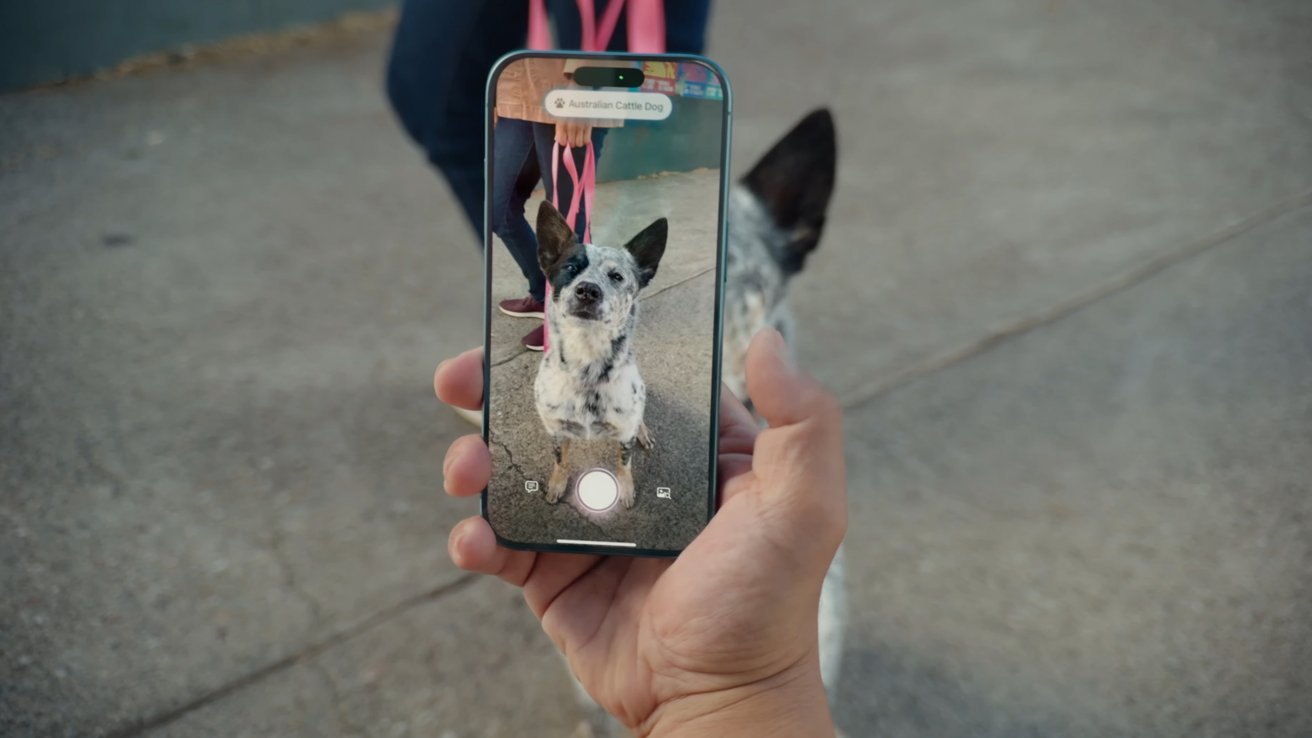

Human intelligence is asking the owner about the breed, artificial intelligence is awkwardly pointing your phone at it

The "reasoning" models don't reason. In fact, they can't reason. They're just more finely tuned to cover a specific case, which makes them better at that task.

OpenAI expects AGI to be the next frontier, which would be a model that surpasses human cognitive capabilities. It's this model that OpenAI threatens will cause global economic upheaval as people are replaced with AI.

Realistically, as the technology is being developed today, it's not possible. Sure, OpenAI might release something and call it AGI, but it won't be what they promised.

And you can forget about building a sentient machine with the current technology.

That's not going to stop OpenAI from burning the foundation of the internet down in the pursuit of the almighty dollar.

Apple's role in all this

Meanwhile, everyone says Apple is woefully behind in the AI race. That since Apple isn't talking about sentient computers and the downfall of democracies, it's a big loser in the space.

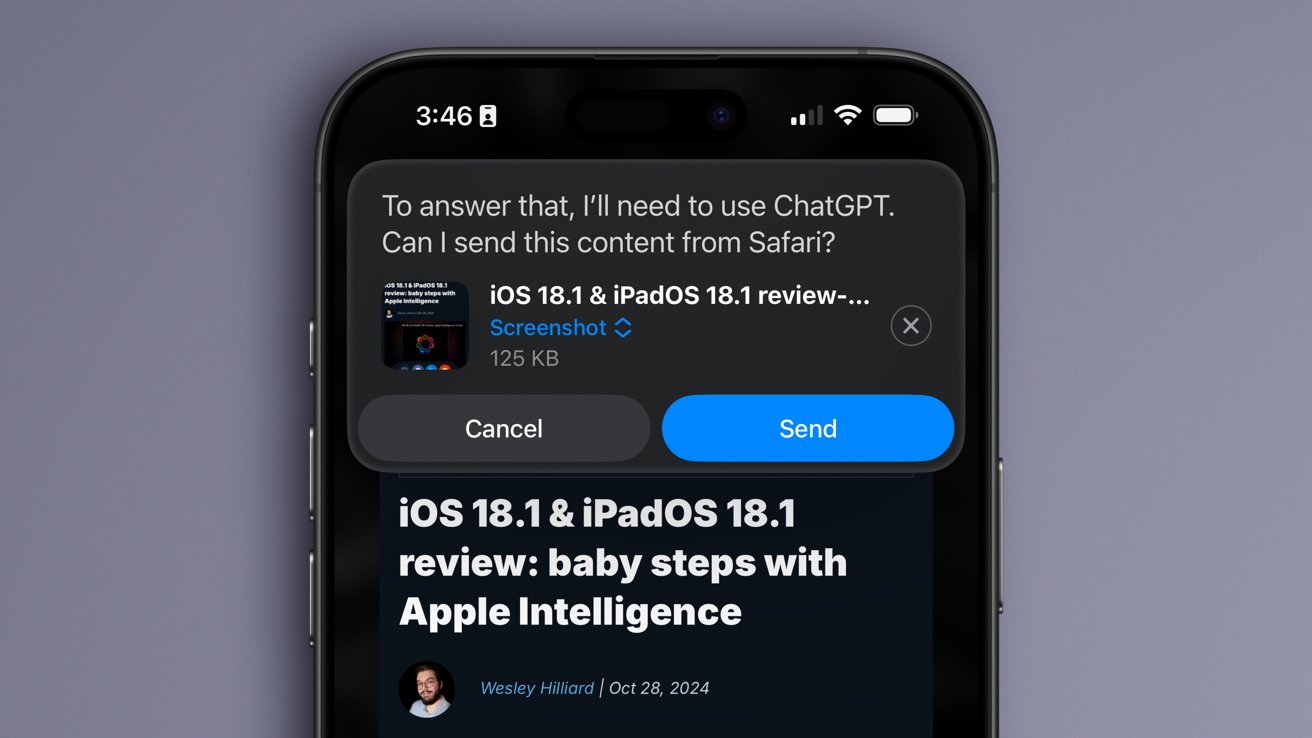

Perhaps Apple Intelligence can catch up by stealing copyrighted materials

Even if OpenAI succeeds in getting some kind of government partnership for AI companies, it is doubtful Apple would participate. The company isn't suffering from a lack of data access and has likely scraped all it needed from the public web.

Apple worked with copyright holders and paid for content where it couldn't get it from the open web. So, it is an example of OpenAI's arguments falling flat.

While OpenAI tries and fails to create something beyond the slop generators we have today, Apple will continue to refine Apple Intelligence. The private, secure, and on-device models are likely the future of this space where most users don't even know what AI is for.

Apple's attempt at AI is boring and isn't promising the potential end of humanity. It'll be interesting to see how it addresses AI with iOS 19 during WWDC 2025 in June after delaying a major feature.

Read on AppleInsider

Comments

First, I have four novels, hundreds of short stories, plus paintings, drawings, and a full 50-episode podcast series. They are all sitting on my system and not on the web. Over a year ago I started pulling anything and everything that I cared about off the web simply because I will not let the thieves steal and profit from my work. It is going to stay there until some kind of protections for copyright are in place.

Second, I don’t understand what you are trying to say with the Gulf of Mexico image. It IS the Gulf of Mexico regardless of what the moron in chief says.

so the only way we can win this race is if we cheat and steal?

Like, these AI companies are offering a service for subscription money. That type of business model will limit revenue and seems doubtful they can make money that way? It ultimately will be powered by ads. So the race is really to be in the position that Google is in right now? Ads, ads, ads?

The race is to build the software and hardware to replace humans in factories? Humans are probably cheaper. The race to replace desk and service workers? Desk work has been whittled down so much. So, it’s just the continuing trend. Humans are probably cheaper? Service workers? Humans are probably cheaper.

I think my position has settled on Butlerian jihad. It’s really just a normal resistance to robber barons, but Butlerian Jihad sounds apropos.

https://edition.cnn.com/2024/01/09/tech/duolingo-layoffs-due-to-ai/index.html

Klarna replaced customer service staff with AI:

https://www.cbsnews.com/news/klarna-ceo-ai-chatbot-replacing-workers-sebastian-siemiatkowski/

Employees are expensive:

100 employees x $50,000 = $5m/year

100 x M3 Ultra ($4k) = $400k over multiple years
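As a rough sketch of the arithmetic above, the two figures can be put on the same per-year footing. The four-year hardware lifespan is an assumption added here (the comparison only says "multiple years"), and the sketch ignores electricity, software, and the staff still needed to run the machines.

```python
# Figures taken from the comparison above.
employees = 100
salary_per_year = 50_000      # USD per employee per year
machines = 100
machine_price = 4_000         # USD per M3 Ultra, as quoted above

# Assumption for illustration only: hardware is replaced every four years.
assumed_lifespan_years = 4

annual_payroll = employees * salary_per_year
hardware_total = machines * machine_price
hardware_per_year = hardware_total / assumed_lifespan_years

print(f"Payroll per year:  ${annual_payroll:,}")
print(f"Hardware total:    ${hardware_total:,}")
print(f"Hardware per year: ${hardware_per_year:,.0f}")
print(f"Payroll is {annual_payroll / hardware_per_year:.0f}x the yearly hardware cost")
```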

The code-generating AI is very useful and boosts productivity:

https://arstechnica.com/ai/2024/10/google-ceo-says-over-25-of-new-google-code-is-generated-by-ai/

It's like having a user manual on steroids.

For media, it is being used for VFX and audio. The fake celebrity video on the following site was AI generated:

https://www.hollywoodreporter.com/news/general-news/kanye-west-viral-video-scarlett-johansson-1236134290/

It has been used for de-aging actors:

James Earl Jones who was the voice of Darth Vader died and AI is now being used to replicate his voice. The voice in the Obi-Wan TV show was AI:

https://www.youtube.com/watch?v=ogNzvIoD2Qo&t=51s

It's most useful and reliable as a tool to help with tasks that are tedious and repetitive. Transcribing audio for subtitles for example.

People are not aware just now of the impact AGI will have because AI doesn't seem reliable enough to replace a human. It doesn't have to, though. It only has to replace a job role, and it can replace a significant number of job roles that make up a huge segment of the economy. This will mainly benefit corporations at the expense of lower skilled jobs but it can also empower individuals to compete with companies. Game studios hire thousands of people. When an AI can replace a lot of the job roles, an individual will be able to spool up a digital army of workers and be able to manage a virtual game studio. This will allow individuals to make millions or even billions in revenue where they never could before. It will also create a flood of mediocre content way bigger than we see already.

I have no doubt that there has already been an impact to some job sectors. It's what happens when new technology comes into the mainstream. The problem is that the AI grifters are trying to convince people that they can replace people with AI when they actually can't.

I'm not sure how those companies are doing with their replacements or staff reductions, but that'll pan out in time. Many of the mid to small range businesses that have attempted to replace people with AI go out of business. Large companies can afford to experiment.

AI is a good tool. There will be some jobs lost because of it, but some jobs created because of it too. But it is in no way the existential crisis that OpenAI wants people to believe.

This so-called AGI is just more nonsense packaged in a way that means OpenAI can charge more money for whatever mediocre model they release under that guise. I can't comment on something that doesn't exist yet, so let's see how AGI goes if it ever really happens.

I have a feeling we're already way past peak AI and all that's left for the current iteration is refinement. No, that doesn't mean a new more capable model won't be out next week, what I mean is we've seen the peak of what it can do and it's just going to get better at those tasks. Like these examples, better movie deepfakes, better translation skills, better scientific research and data parsing.

I doubt we'll see something significantly different emerge beyond the AI slop images, video, and text. And we most definitely are not on the way to a sentient computer.

Then again the master of the felt pen Donald tRump can be bought, so they might have a chance.

Realistically ... comparing 'Artificial' vs. 'Natural' Intelligence, what would be the difference? Experts read books, articles etc. to form their knowledge base on which they reach new conclusions. That also happens in schools everywhere (or am I supposed to believe that kids have personally witnessed all the events they write essays about)!?

Humans then use this knowledge base to invent new stuff, improve on that and, last but not least, earn money for using that knowledge.

So what's the difference?

YES!!! 🤣😂🤣😂🤣

https://loris.ai/blog/klarna-chatbot-strategy-shift-why-companies-are-rebalancing-human-and-ai-customer-service/

"Klarna Chatbot Strategy Shift: Why Companies Are Rebalancing Human and AI Customer Service

My question, "going to benefit us", is referring to some of this. Even with Klarna's newly rebalanced AI chatbot support service, will there be a net benefit in service to Klarna's customers? Automated response systems suck. The chatbot AI serves as a hopefully more advanced automated response service that provides customers good answers and responses. Given the current inputs going into the LLM (thousands of interaction sessions between support and customers), I don't think it will be very good. Millions of specifically tuned sessions, curated with proper responses, will be needed to capture the potential scenarios customers have, and there is still going to be some uncertainty resulting in responses that the LLM can't interpolate to.

Fixing it requires more data, more rules, more everything. So, there is going to be a race for what is cheaper, people or the LLM service. I don't think anyone knows if an LLM service comparable to humans will get there. Same scenario for robots and stuff, except hardware has maintenance and stuff as well.

Yes, useful, but not national strategic initiative worthy. I liken it to more advanced grammar checkers, more advanced code completion. Great stuff, but that doesn't drive Nvidia to be worth $3T in the stock market. It's "robots replaced humans" and all the money that used to flow to humans will be flowing to AI service owners and AI hardware owners. So, concentrating billions for the many to a few.

Yes. Granted. Good set of tools. I totally expect and want artists to use state-of-the-art tooling for all their creations.

I would have preferred the zeitgeist at the time of hiring Sebastian Stan to play an older Luke Skywalker in The Mandalorian rather than digitally putting Mark Hamill's face on a stand-in actor or a mimic for James Earl Jones, but code and algorithms have given content producers a lot of options. But the question still begs, is it worth trillions?

The "worth trillions" question, a corollary to the benefit question, is big as it is giving OpenAI, Meta, etc, the pass to use copyrighted works, unlicensed. Not only that, it extends to every single thing we do now. Klarna has rebalanced their chatbot support service with more humans in the loop now, but their AI service - I don't know, but I really suspect it - is recording every single one of their human staff's support sessions now to feed their LLM. Day by day, year by year, their human support staff is talking themselves out of a job. There doesn't seem to be justice in that.

I don't begrudge Klarna or Loris.ai their endeavor here. They should try if that is what they want to do. But, I don't think their human support staff has much choice in the matter. Not an equitable balance there.

it’s all stealing. Just because a script is doing it doesn’t mean it’s not stealing. The sooner we get some serious laws in place to protect people, the better.

https://en.wikipedia.org/wiki/Artificial_general_intelligence

All that would be needed from an AGI initially is to replicate a human in a role:

https://en.wikipedia.org/wiki/Turing_test

AGI is described on the following page as doing all the work of a company of people independently and is generally expected within the next 5 years:

https://www.tomsguide.com/ai/chatgpt/sam-altman-claims-agi-is-coming-in-2025-and-machines-will-be-able-to-think-like-humans-when-it-happens

Online customer service is the most obvious use case as many of those roles are online and this is millions of jobs. AI is not at this level yet and it's obvious when an AI is being used but it can reach a level where it's not obvious.

Another example would be a film editor who would edit product review videos. This person would take a lot of raw footage, crop it, sort the files in order, drop them into a timeline and make a sequence where the dialog is cohesive.

Let's say Apple adds an AI feature to Final Cut Pro where you select a group of clips and ask it to make a review video. It would transcribe the audio from all the clips, do image recognition, abbreviate the text into an interesting short form and arrange the clips in Final Cut according to the audio timestamps. It can do this very quickly. This would be capable enough to replace the editor entirely.

It might be more apt to describe it as advanced automation vs intelligence but either way, it is replacing the human role entirely.

The more roles that a singular AI can replace, the more of a general intelligence agent it will be.

Then add it to a physical robot and it can be asked to make dinner, clean the house, take the dog for a walk, do the tax returns, wash the car:

This kind of personal robotics is huge for elderly people.

Nvidia is making a lot of revenue from hardware sales:

https://nvidianews.nvidia.com/news/nvidia-announces-financial-results-for-fourth-quarter-and-fiscal-2025

Their stock valuation is based on growth rate and earnings. Nvidia's latest earnings show $72b net income for the year (112% revenue growth), $2.9t market cap. Apple's last report showed $93b net income ($3.2t market cap, 2% revenue growth).
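One way to read those figures is as a rough market-cap-to-net-income multiple. This is a minimal sketch using only the rounded numbers quoted above. It is not a formal price-to-earnings calculation, which would use per-share data.

```python
# Rounded figures quoted above, in US dollars.
nvidia_market_cap = 2_900_000_000_000
nvidia_net_income = 72_000_000_000
apple_market_cap = 3_200_000_000_000
apple_net_income = 93_000_000_000

# Market cap divided by annual net income: how many years of current
# earnings the market value represents.
nvidia_multiple = nvidia_market_cap / nvidia_net_income
apple_multiple = apple_market_cap / apple_net_income

print(f"Nvidia: {nvidia_multiple:.1f}x net income")
print(f"Apple:  {apple_multiple:.1f}x net income")
```

On these numbers the multiples come out at roughly 40x for Nvidia and 34x for Apple, so the gap in valuation per dollar of earnings is much smaller than the gap in revenue growth.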

AI is well beyond simple tools and needs to be taken seriously at the national level. It poses a national security risk when it comes to cybersecurity. It has applications for everything: military strategy, security, facial recognition, medicine, it is discovering new materials, new drugs, new processes for diagnosing diseases.

https://moneywise.com/investing/stocks/bill-gates-says-this-new-technology-is-the-first-that-has-no-limit

People are being complacent with it now because it's at the stage of a child/adolescent where it makes a lot of mistakes. It will need the right training to correct this and this will need more research but once people reconcile with the fact that humans are just smarter apes that have evolved through massive training programs, it's easy to see that we can put machines through the same process and things accelerate quickly when the AI is used to train itself.

Look how advanced this video and audio generation is:

https://www.youtube.com/watch?v=EdQaiDT-Ecg&t=526s

The rate of improvement over the last few years has been very high. AI will go beyond basic tools. Add in augmented reality and robotics and there will be a lot of interesting directions to go in. Many possibilities won't happen for commercial reasons so healthy skepticism is justified but people are overly focused on the mistakes of the early products.