Marvin

About

- Username

- Marvin

- Joined

- Visits

- 131

- Last Active

- Roles

- moderator

- Points

- 7,008

- Badges

- 2

- Posts

- 15,586

Reactions

-

Apple has already partially implemented fix in macOS for 'KPTI' Intel CPU security flaw

macplusplus said: Why everyone is so panicked?

In order to exploit the flaw the "attacker gains physical access by manually updating the platform with a malicious firmware image through flash programmer physically connected to the platform’s flash memory. Flash Descriptor write-protection is a platform setting usually set at the end of manufacturing. Flash Descriptor write-protection protects settings on the Flash from being maliciously or unintentionally changed after manufacturing is completed.

If the equipment manufacturer doesn't enable Intel-recommended Flash Descriptor write protections, an attacker needs Operating kernel access (logical access, Operating System Ring 0). The attacker needs this access to exploit the identified vulnerabilities by applying a malicious firmware image to the platform through a malicious platform driver."

as explained by Intel:

https://www.intel.com/content/www/us/en/support/articles/000025619/software.html

In everyday language, the attacker needs physical access to your computer's interior. And all that effort for what? For accessing kernel VM pages into which macOS never puts critical information. Holding critical information in wired memory is the ABC of kernel programming in Apple's programming culture. Wired memory is the memory that cannot be paged to VM. When the computer is turned off, no critical information resides anywhere on your storage media.

That's a different issue. Intel's statement on this issue is here:

https://newsroom.intel.com/news/intel-responds-to-security-research-findings/

This issue is about user-level software being able to access kernel-level data. As Intel says, there are other attacks that do similar things:

https://www.vusec.net/projects/anc/

http://www.tomshardware.com/news/aslr-apocalypse-anc-attack-cpus,33665.html

http://www.cs.ucr.edu/~nael/pubs/micro16.pdf

These try to bypass a security feature (ASLR), rather than being a direct security flaw:

https://security.stackexchange.com/questions/18556/how-do-aslr-and-dep-work

According to the KAISER paper, one way they do it is to measure the time between a memory access and the error-handler callback. When the access hits the cache, the timing changes, so they can work out where the valid kernel memory addresses are:

https://gruss.cc/files/kaiser.pdf
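Here is a minimal sketch of the classification step such attacks rely on. It assumes the timings have already been collected. The function name, the threshold, the addresses and the cycle counts are all hypothetical and were made up for illustration, not measured. A real attack needs native code, many samples and per-machine calibration.

```python
from statistics import median


def find_mapped_addresses(timings: dict[int, list[int]], threshold: float) -> list[int]:
    """Return candidate addresses whose median fault-handling time is below `threshold`.

    A faster fault suggests the address translation was already cached,
    i.e. the kernel address is mapped.
    """
    # The median resists the occasional slow sample caused by an interrupt
    return sorted(
        addr for addr, samples in timings.items() if median(samples) < threshold
    )


# Hypothetical cycle counts for three candidate kernel addresses (not real measurements)
samples = {
    0xFFFF_FFFF_8100_0000: [212, 209, 215, 480, 211],
    0xFFFF_FFFF_8200_0000: [301, 298, 305, 299, 310],
    0xFFFF_FFFF_8300_0000: [300, 296, 302, 640, 304],
}
print([hex(a) for a in find_mapped_addresses(samples, threshold=250)])
```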

That paper suggests ARM isn't affected, as it uses a separate mapping method for user and kernel tables:

"All three attacks have in common that they exploit that the kernel address space is mapped in user space as well, and that accesses are only prevented through the permission bits in the address translation tables. Thus, they use the same entries in the paging structure caches. On ARM architectures, the user and kernel addresses are already distinguished based on registers, and thus no cache access and no timing difference occurs. Gruss et al. and Jang et al. proposed to unmap the entire kernel space to emulate the same behavior as on the ARM architecture."

According to Intel, the flaw doesn't allow write access. It may still be possible to get read access to sensitive data, and the system updates suggest that's the case. They are trying to come up with an industry-wide solution. Maybe there's a way to obfuscate data in memory using a random key, e.g. with a bit-shift operation, and hide the key. It might be too slow for some things but worthwhile for sensitive data like passwords and encryption keys, which don't need to be accessed frequently. OS developers can do this themselves.
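Here is a minimal sketch of that masking idea, written in Python only for readability. It swaps in XOR for the bit shift. A plain shift discards bits and can't be undone, whereas XOR with the same key restores the original exactly. `MaskedSecret` is a hypothetical helper. This is obfuscation rather than protection: a read primitive that can reach both the key and the masked bytes still recovers the secret. Python also gives no control over copies in memory, so a real version would live in C or in the kernel.

```python
import secrets


class MaskedSecret:
    """Hypothetical helper: keep a secret XOR-masked with a random key."""

    def __init__(self, secret: bytes) -> None:
        # Fresh random key, same length as the secret
        self._key = secrets.token_bytes(len(secret))
        self._masked = bytes(s ^ k for s, k in zip(secret, self._key))

    def reveal(self) -> bytes:
        # XOR with the same key restores the original bytes
        return bytes(m ^ k for m, k in zip(self._masked, self._key))

    def rekey(self) -> None:
        # Re-mask under a new key so a previously leaked key stops working
        plain = self.reveal()
        self._key = secrets.token_bytes(len(plain))
        self._masked = bytes(p ^ k for p, k in zip(plain, self._key))


pw = MaskedSecret(b"correct horse")
pw.rekey()
print(pw.reveal())
```

Rekeying periodically limits how long any single leaked key is useful. The masked bytes alone reveal nothing about the secret.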

-

Tesla stops accepting BitCoin, nearly entire cryptocurrency market hammered

Beats said: Can’t believe Dogecoin is an actual thing...

All the coins make as much sense as each other. They are all useful as an IOU system.

Person A wants to buy something for $50 online in a similar way to using cash. Although the transaction has a ledger, the goods/services don't have to be recorded.

Person A gives $50 fiat to coin seller (bitcoin, dogecoin, whatever coin) and gets coins ($50 IOU).

Person A gives the coin IOU to Person B in return for the $50 worth of goods/services.

Person B sells coins for $50. If the person who got $50 from A is the same person who gives $50 back to B, it's just a way of making transactions like using cash online.
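The four steps above can be traced as balance changes. This simplified sketch assumes one exchange both sells A the coins and buys them back from B. It also assumes the coin price doesn't move in between, so coins are counted in dollars. `settle` is a hypothetical name.

```python
from collections import Counter


def settle(price: int) -> tuple[Counter, Counter]:
    """Trace A -> exchange -> B and return net (fiat, coin) changes per party."""
    fiat: Counter = Counter()
    coins: Counter = Counter()
    # A swaps fiat for coins (an IOU worth `price`)
    fiat["A"] -= price
    fiat["exchange"] += price
    coins["exchange"] -= price
    coins["A"] += price
    # A hands the coins to B for the goods/services
    coins["A"] -= price
    coins["B"] += price
    # B sells the coins back to the exchange for fiat
    coins["B"] -= price
    coins["exchange"] += price
    fiat["exchange"] -= price
    fiat["B"] += price
    return fiat, coins


fiat, coins = settle(50)
print(dict(fiat), dict(coins))
```

Every coin balance nets to zero and the exchange's fiat nets to zero. The only lasting effect is $50 moving from A to B, the same as a cash payment.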

The mining aspect is silly and is a flawed way to verify transactions. The idea behind it is that people who invest more in computing would be more trusted, but that has proven not to be the case: people have managed to get over 50% of the compute power and have been able to double-spend their coins.
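To put the 50% threshold in numbers, the Bitcoin whitepaper models the chance that an attacker with a share `q` of the hash power ever overtakes an honest chain that is `z` blocks ahead. Below is a minimal Python transcription of that calculation. The function name is illustrative.

```python
import math


def attacker_success(q: float, z: int) -> float:
    """Chance that an attacker with share q of the hash power ever catches up
    from z blocks behind, following section 11 of the Bitcoin whitepaper."""
    p = 1.0 - q
    if q >= p:
        # At 50% or more, the attacker eventually overtakes the honest chain
        return 1.0
    lam = z * q / p  # expected attacker progress while honest miners find z blocks
    total = 1.0
    for k in range(z + 1):
        poisson = math.exp(-lam) * lam**k / math.factorial(k)
        total -= poisson * (1 - (q / p) ** (z - k))
    return total


for z in (0, 1, 6):
    print(f"q=0.10, z={z}: {attacker_success(0.10, z):.7f}")
print(attacker_success(0.51, 6))  # 1.0
```

Below 50%, the odds fall off quickly as confirmations accumulate. At or above 50%, the attacker eventually wins however many confirmations the victim waits for.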

Proof of Stake crypto will eventually take over from Proof of Work. People are investing more in Proof of Work systems because they can make more money: they are effectively generating money. The rate has been deliberately slowed over time to make it more stable, and mining drives energy usage to crazy levels as well as causing GPU supply shortages. Proof of Stake doesn't need miners.
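A toy proof of work shows where the energy goes. Miners hash repeatedly until the output falls below a target, and each extra bit of difficulty doubles the expected number of attempts. This sketch uses a single SHA-256 over made-up block data. It is not Bitcoin's actual block header format or difficulty encoding, and `mine`/`verify` are hypothetical names.

```python
import hashlib


def mine(block_data: bytes, difficulty_bits: int) -> tuple[int, str]:
    """Toy proof of work: find a nonce whose SHA-256 digest has at least
    `difficulty_bits` leading zero bits (about 2**difficulty_bits tries on average)."""
    target = 1 << (256 - difficulty_bits)  # digest must be numerically below this
    nonce = 0
    while True:
        digest = hashlib.sha256(block_data + nonce.to_bytes(8, "big")).hexdigest()
        if int(digest, 16) < target:
            return nonce, digest
        nonce += 1


def verify(block_data: bytes, nonce: int, difficulty_bits: int) -> bool:
    # Checking the work costs one hash, however long it took to find
    digest = hashlib.sha256(block_data + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big") < 1 << (256 - difficulty_bits)


for bits in (8, 12, 16):
    nonce, _ = mine(b"A pays B 50", bits)
    print(bits, nonce + 1)  # attempts needed; on average ~16x more per extra 4 bits
```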

The main problem with Proof of Stake is that it shows how little general use crypto would have. An IOU system is just an extra transaction to have to make and few people would bother. The interest in crypto has all been driven by people buying early coins and making 1000x returns. Someone recently sold millions in Dogecoin from a $145m wallet and there are others:

https://www.msn.com/en-us/money/topstocks/a-2425-billion-dogecoin-whale-lurks-but-robinhood-ceo-says-e2-80-98we-don-e2-80-99t-have-significant-positions-in-any-of-the-coins-we-keep-e2-80-99/ar-BB1gqWXv

The Winklevoss twins are sitting on a few billion in coins. Crypto is often lauded as a currency that frees people from the corrupt system of government-controlled finance, but many of the people who made huge gains were already rich and had the capital to invest. If someone with $10m had invested $100k in each of the top 10 coins, they could easily have made $100m, and those people are selling the coins for fiat now that everyone has access to buying coins. It'll end up as another system for transferring money from poor people to rich people.

Elon Musk may be doing this to promote Dogecoin more, since it uses less energy:

https://www.msn.com/en-us/news/technology/spacex-will-launch-a-moon-mission-funded-by-dogecoin-in-2022/ar-BB1gFKOa

https://www.deseret.com/2021/5/10/22423052/dogecoin-environment-energy-use

Tesla could even make their own coins if they wanted. If they made their own currency and got the value up, they could potentially make more from that than they do from their business.


-

Intel's Alder Lake chips are very powerful, and that's good for the entire industry

DuhSesame said: The only advantage for Apple Silicon on paper seems to just be the power efficiency.

The biggest advantages are the GPUs and special silicon for hardware encoding.

Intel's GPUs are still terrible. This is their latest and greatest IGP, and it has less than 1/5th the performance of a 3060, which is roughly equivalent to an M1 Max:

https://www.notebookcheck.net/GeForce-RTX-3060-Laptop-GPU-vs-Iris-Xe-G7-96EUs_10478_10364.247598.0.html

This requires pairing Intel's chip with a 3rd party GPU, which itself will draw 40W+. Intel's chart compares the entire SoC power.

For efficiency, Apple's node advantage contributes the most, and Intel will eventually catch up. Intel plans to match them by 2025, but they're still more than a full node behind in density.

Also, the only reason they are improving now is that Apple dropped them; their roadmap before was half the pace.

The other downside to Intel was the price of their higher-end chips. People will be surprised when the Max Duo and possibly higher chips come out and they compare performance per dollar against Intel.

-

EA reportedly tried to sell itself to Apple

22july2013 said: The main reason Apple would have rejected EA is that less than 20% of EA's game catalog runs on Macs or iOS. Why would Apple want games that are almost entirely made for Windows, Xbox, Nintendo, Playstation and Android?

Market cap of EA is $36B. So they were probably asking $50B.

They also make most of their revenue from Live Service games rather than outright purchases: $1.6b from game sales (FIFA 20/21, Madden NFL, Star Wars, Need for Speed) and $4b from Live Service games (FIFA Ultimate Team, Apex Legends, The Sims 4). They have 11,000 employees. Net income was $837m in 2021, $3b in 2020 (due to an income tax benefit of $1.5b) and $1b in 2019. They have $7.8b in stockholders' equity.

A $36b market cap seems reasonable vs their earnings and assets but it would be hard to justify more and they are heavily dependent on recurring revenue from legacy (and cross-platform) titles.

Apple was obviously courted for its deep pockets and would have been a much easier ride. They couldn't give them the Apple CEO position, but they could have an SVP of games or run EA as a subsidiary. Phil Spencer is CEO of Microsoft Gaming, which is a subsidiary of Microsoft.

They would get a game engine (Frostbite: https://www.ea.com/frostbite/engine), but there are reports EA will use Unreal Engine 5 for some upcoming titles like Mass Effect and The Sims. This would create a dependence on Epic, which has a lawsuit against Apple.

Smaller purchases would probably suit Apple's gaming aspirations better. Ubisoft is only valued at around $6b and can deliver similar quality to EA. They also have their own game engines: Dunia (forked from CryEngine) and Snowdrop.

These companies make a lot of revenue from shooter games, whereas Apple Arcade content tends toward family-friendly games. Apple might be able to commission exclusive games like the console manufacturers do, which would get them exclusives across multiple franchises. At $200m per exclusive, the $36b it would take to buy EA would fund 180 exclusive titles.

-

Redesigned Mac Pro with up to 40 Apple Silicon cores coming in 2022

pizzaboxmac said: Ok, so how much will this $40k MacPro cost?

The good thing is that pricing is entirely up to Apple now, whereas before they were charging a markup on top of Intel's and AMD's prices.

Intel charges a few thousand dollars for high-end Xeons; this is the one in the Mac Pro:

https://www.amazon.com/Intel-CD8069504248702-Octacosa-core-Processor-Overclocking/dp/B086M6P8D6

An AMD Radeon Pro VII based on Vega architecture retails for $2740:

https://www.newegg.com/amd-100-506163/p/N82E16814105105

Apple charges $10k for 4 Radeon Pro GPUs. Cutting out Intel and AMD instantly removes around $15k of costs from a $24k Mac Pro. If Apple kept charging that, the extra $15k would all be profit.

For the easiest manufacturing, they'd use multiple units of the chips that go in their laptops and iMac models. An entire MBP/iMac sells for under $2.5k; with a 30% margin, that's about $1,750 to manufacture. The CPU/GPU part would be well under $500. It will have bundled memory, though, up to 64GB per chip. Using 4 of them shouldn't cost more than $2k with 64GB RAM total. The whole machine will likely start around $5k, but the lowest quad-chip model will perform like the $24k Mac Pro.
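Here is the estimate above as a quick calculation. Every input is the post's own guess (retail price, margin, per-chip cost), not Apple data. The function name is illustrative.

```python
def quad_chip_estimate(retail: float, margin: float, chip_cost: float, chips: int = 4) -> tuple[float, float]:
    """Back-of-envelope figures; all inputs are guesses, not Apple data."""
    build_cost = retail * (1 - margin)  # manufacturing cost of one MBP/iMac
    silicon = chip_cost * chips         # upper bound for the CPU/GPU packages in a multi-chip Mac Pro
    return build_cost, silicon


build, silicon = quad_chip_estimate(retail=2500, margin=0.30, chip_cost=500)
print(f"MBP/iMac build cost: ~${build:,.0f}; four-chip silicon: up to ${silicon:,.0f}")
```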

If they price it too high, people will just buy multiple MBPs/iMacs. I would guess the price range to start around $5k and go up to $10k with maximum memory (256GB), and a bit higher for 8TB+ SSDs. Hopefully the XDR display will come down in price a bit too, so that a decent Mac Pro with an XDR comes in under $10k.

It may still have PCIe slots to support things like the Afterburner card and other IO cards but I don't see any reason to include slots for GPUs and they can always build an external box for IO cards with a single internal slot.

zimmie said: If it's using the same GPU cores as the M1, clocked at the same speed, a 64-core GPU could do 20.8 TFLOPS, while a 128-core GPU could do 41.6 TFLOPS. For comparison, a GeForce RTX 3090 (the top consumer card from Nvidia) does up to 35.6 TFLOPS, and a Radeon RX 6900 XT (the top consumer card from AMD) does up to 23 TFLOPS.

Considering the RTX 3090 and RX 6900 XT still universally sell for more than double their MSRP, I wonder if Apple will have scalping problems. Their system of allowing backorders mitigates scalping, but doesn't eliminate it. With the added demand from blockbros, it may be difficult to get one for a year or so.

randominternetperson said: It would be a terrible shame if a significant use of these machines is for block chain mining. (But if it boosts my AAPL investment, I'll be crying all the way to the bank.)

I could see them being used in render farms and servers. They would be extremely efficient and cost-effective machines.

Crypto mining would depend on the software being optimized for Apple Silicon and the overall price of the compute units:

https://otcpm24.com/2021/03/01/apple-m1-vs-nvidia-ethereum-hash-rate-comparison-which-is-more-capable-for-crypto-mining/

M1 is 2MH/s on the GPU at around 10-15W; Nvidia's CMP 90HX goes up to 86MH/s at 320W. A 3090 can do over 100MH/s under 300W.

A Mac Pro with 16x the M1's GPU cores (128 vs 8) would be expected to get around 16x this, so 32MH/s at around 200W.
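Here is a quick check of the scaling and efficiency figures above. It assumes the M1's 8 GPU cores scale linearly to a hypothetical 128-core Mac Pro GPU (hence 16x). It takes 12.5W as the midpoint of the M1's 10-15W. Real mining software may not scale that cleanly.

```python
def mh_per_watt(mh_s: float, watts: float) -> float:
    """Mining efficiency in MH/s per watt."""
    return mh_s / watts


M1_GPU_CORES = 8
MAC_PRO_GPU_CORES = 128           # hypothetical top configuration
m1_mh_s, m1_watts = 2.0, 12.5     # ~2 MH/s at 10-15 W (midpoint assumed)

scale = MAC_PRO_GPU_CORES / M1_GPU_CORES  # 16x, assuming linear scaling
mac_pro_mh_s = m1_mh_s * scale            # ~32 MH/s

for name, mh_s, watts in [
    ("M1", m1_mh_s, m1_watts),
    ("Mac Pro (est.)", mac_pro_mh_s, 200),
    ("RTX 3090", 100, 300),
]:
    print(f"{name:<15} {mh_s:6.1f} MH/s  {mh_per_watt(mh_s, watts):.2f} MH/s per W")
```

On these numbers, the estimated Mac Pro matches the M1's efficiency (0.16 MH/s per watt). The 3090 delivers roughly twice that, at a lower price.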

An Nvidia 3090 is around $3k so I don't see people buying $5k+ Mac Pros specifically for mining but if they already planned to have a server array of Macs, they might use them for mining. Mining will probably become obsolete in the next year anyway.

-

Musk threatens to walk away from Twitter deal over high fake user count

hodar said: How are fraudulent SEC filings by Twitter, somehow Musk's fault? All Musk did was read the forms, and calculate a value based on the reported number of Active Subscribers.

Seems that Twitter is the one that not only misrepresented themselves, but also committed fraud to both stockholders, as well as advertisers. I would expect to see a class action lawsuit from stockholders, as well as advertisers, who made business decisions, based upon what is supposed to be accurate data.

Secondly, fraud vitiates EVERYTHING. If you agree to buy a house that you are told in writing, is 4,000 sq-ft. You have the architectural drawings on file (analogy with the SEC filings), you have the Realtor's Contract in hand - and it all says 4,000 sq-ft; but you find out that it's actually much less than 4,000 sq-ft - how is it that somehow this is your fault? How would it be that you are still required to purchase a contract, that was presented to you, fraudulently? How would you be liable for the Down Payment you made?

Simply said - you would not. You would be entitled to all of your money back; and the seller would be on the hook for your time, costs and efforts. The Realtor would likely be investigated for a breach of ethics (if they exist). Twitter is the one who lied, Twitter is the one that filed false SEC claims. Seems pretty clear cut.

Stabitha_Christie said: There is no evidence that Twitter filed a false claim other than Elon Musk saying they did. He isn’t a particularly reliable source of information.

Twitter stated in their earnings report that false or spam accounts make up fewer than 5% of their mDAU (monetizable daily active users):

https://d18rn0p25nwr6d.cloudfront.net/CIK-0001418091/947c0c34-ca90-4099-b328-a6062adf110f.pdf

"our metrics may be impacted by our information quality efforts, which are our overall efforts to reduce malicious activity on the service, inclusive of spam, malicious automation, and fake accounts. For example, there are a number of false or spam accounts in existence on our platform. We have performed an internal review of a sample of accounts and estimate that the average of false or spam accounts during the fourth quarter of 2021 represented fewer than 5% of our mDAU during the quarter. The false or spam accounts for a period represents the average of false or spam accounts in the samples during each monthly analysis period during the quarter. In making this determination, we applied significant judgment, so our estimation of false or spam accounts may not accurately represent the actual number of such accounts, and the actual number of false or spam accounts could be higher than we have estimated."

Elon Musk said Twitter assesses this by checking 100 accounts:

https://www.cnbc.com/2022/05/14/elon-musk-has-wrong-approach-to-count-fakes-spam-on-twitter-experts.html

"'I picked 100 as the sample size number, because that is what Twitter uses to calculate <5% fake/spam/duplicate.' Twitter declined to comment when asked if his description of its methodology was accurate."

If his description was inaccurate, they could easily have said so. When others have run similar tests, the share came out higher, around 15-20%, and as much as 40% for high-profile users.

If a large share of their mDAU are bots, removing them would cause a significant drop in ad revenue (billions), so it's an important measure to know accurately when someone has a business plan to remove them. The problem is that if they knew which accounts were spam/bots, they'd presumably be able to ban them already. The CEO said it's not as simple as detecting automation, because some people are hired to post spam-like content, so they are humans but are misusing the platform.

All Twitter has to do is apply the methodology for checking fake accounts to a larger sample size and have it audited by a 3rd party.
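Here is a rough sketch of why sample size matters. It assumes accounts are drawn uniformly at random and each one is labeled correctly, which sidesteps the harder question of how to classify a human who posts spam. It computes a 95% Wilson score interval for the spam share. With 5 spam accounts out of 100, the interval runs from roughly 2% to 11%, so a 100-account check can't rule out a share more than twice the reported figure.

```python
import math


def wilson_interval(hits: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion from a simple random sample."""
    p = hits / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


for n in (100, 1_000, 10_000):
    lo, hi = wilson_interval(round(0.05 * n), n)  # 5% of the sample flagged as spam
    print(f"n={n:>6}: {lo:.1%} to {hi:.1%}")
```

Growing the sample 100-fold narrows the interval roughly 10-fold, which is why a larger, audited sample would settle the question.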

-

Copyright laws shouldn't apply to AI training, proposes Google

22july2013 said: I can't see any way that the language model itself (eg, a 50 GB file) "contains a copy of the Internet" therefore the model by itself probably isn't violating anyone's copyright.

If you want to argue that the Google search engine violates copyright because it actually requires 10 exabytes of data (stored on Google's server farms) that it obtained from crawling the Internet, I could probably agree with that. But I can't see how a puny 50 GB file or anything that small could be a violation of anyone's copyright. You can't compress the entire Internet into a puny file like that, therefore it can't violate anyone's copyright.

The reason most people can't run large language models on their local computers is that the "small 50 GB file" has to fit in local memory (RAM), and most users don't have that much RAM. The reason it needs to fit in memory is that every byte of the file has to be accessed about 50 times in order to generate the answer to any question. If the file was stored on disk, it could take hours or weeks to calculate a single answer.

10,000,000,000,000,000,000 = The number of bytes of data on Google's servers

00,000,000,050,000,000,000 = The number of bytes that an LL model file requires, which is practically nothing

GPT-3 was trained on 45TB of uncompressed data; GPT-2 was trained on 40GB:

https://www.sciencedirect.com/science/article/pii/S2667325821002193

All of Wikipedia (English) uncompressed is 86GB (19GB compressed):

https://en.wikipedia.org/wiki/Wikipedia:Database_download

It doesn't store the data directly, but it stores patterns in the data. This is much smaller than the source: GPT-3 seems to be around 300-800GB. With the right input, it can reproduce the text it has scanned. It has to, or it wouldn't generate any correct answers.

https://www.reddit.com/r/ChatGPT/comments/15aarp0/in_case_anybody_was_doubting_that_chatgpt_has/
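The size range follows from parameter count times bytes per parameter. This sketch assumes GPT-3's reported 175 billion parameters and counts weights only, with no optimizer state or runtime overhead. The function and constant names are illustrative.

```python
def weights_gb(params: float, bytes_per_param: int) -> float:
    """Storage for model weights only (no optimizer state or runtime overhead), in GB."""
    return params * bytes_per_param / 1e9


GPT3_PARAMS = 175e9        # parameter count reported for GPT-3
TRAINING_TEXT_GB = 45_000  # the 45TB figure above, in GB

for precision, nbytes in [("FP32", 4), ("FP16", 2)]:
    size = weights_gb(GPT3_PARAMS, nbytes)
    print(f"{precision}: {size:,.0f} GB ({TRAINING_TEXT_GB / size:.0f}x smaller than the raw text)")
```

That puts the weights at roughly 350-700GB depending on precision. This is far smaller than the raw training text, but not "practically nothing".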

If it's asked directly to print copyrighted text, it says "I'm sorry, but I can't provide verbatim excerpts from copyrighted texts", but this is because some text has been flagged as copyrighted; it still knows what the text is. Sometimes it can be tricked into printing it anyway:

https://www.reddit.com/r/ChatGPT/comments/12iwmfl/chat_gpt_copes_with_piracy/

https://kotaku.com/chatgpt-ai-discord-clyde-chatbot-exploit-jailbreak-1850352678

-

Geekbench reveals M2 Ultra chip's massive performance leap in 2023 Mac Pro

mikethemartian said: I would like to see a comparison where someone performs the same physics 3D simulation with a dataset larger than 192GB with a maxed out 2023 Mac Pro vs a maxed out 2019. Based on past experience I would expect the 2023 to crash once the memory usage gets close to the max.

twolf2919 said: Count me naive, but I'd love to know what single simulation requires an in-memory dataset that large. Not saying they don't exist - I'm just interested in what they are. And whether they're typically done on a desk-side machine vs. on a supercomputer (e.g. weather modeling).

Particle simulations need to store data per particle per frame: x, y, z position (3 x 4 bytes), lifetime (4 bytes), x, y, z scale (3 x 4 bytes), rotation (4 bytes) and color (4 bytes), which comes to 36 bytes. For 1 billion particles, that's 36GB of simulation data per frame, or 72GB if it needs to refer to the last frame for motion. 192GB still has a lot of headroom.

3D scenes similarly need a lot of memory for textures and heavy geometry. A 4K texture is 4096 x 4096 x 4 bytes = 67MB. 1000 textures = 67GB.
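The per-particle and texture sizes above can be checked with Python's `struct` module. The field order and the packed 4-byte RGBA color are assumptions, since the post only gives the field sizes. Textures are counted uncompressed with no mipmaps.

```python
import struct

# One particle as listed above: xyz position, lifetime, xyz scale, rotation (float32s)
# plus color packed as RGBA8 in a 4-byte word. The field order is an assumption.
PARTICLE = struct.Struct("<3f f 3f f I")


def frame_bytes(particles: int, frames_kept: int = 1) -> int:
    """Bytes needed to hold `frames_kept` frames of particle data."""
    return PARTICLE.size * particles * frames_kept


def texture_bytes(width: int, height: int, bytes_per_texel: int = 4) -> int:
    """Uncompressed texture without mipmaps."""
    return width * height * bytes_per_texel


print(PARTICLE.size)                           # 36
print(frame_bytes(1_000_000_000) / 1e9)        # 36.0 GB for one frame
print(frame_bytes(1_000_000_000, 2) / 1e9)     # 72.0 GB with the previous frame kept
print(texture_bytes(4096, 4096) * 1000 / 1e9)  # about 67 GB for 1000 4K textures
```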

There are scenarios that people can pick that would exceed any machine. A 1 trillion particle simulation here uses 30TB of data per frame (30 bytes x 1 trillion):

https://www.mendeley.com/catalogue/c72a2564-5656-343a-a098-5c592ce91433/

The solution for this is to use very fast PCIe SSD storage in RAID instead of RAM, e.g. 4 x 32TB boards at 6GB/s each = 128TB of storage at 24GB/s. It's slower to use, but a 1TB-per-frame simulation (30b particles) would take about 80 seconds per frame to read and write. It's still doable, just slower, and it has to write to disk anyway. Not to mention that even with 10:1 compression, that data would still need hundreds of TBs of storage.
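Here is the storage arithmetic as a sketch. It assumes ideal striping, where throughput adds up across drives, and one full read plus one full write per frame. Real RAID arrays and file systems lose some of that bandwidth, so treat the result as a best case.

```python
def raid0(drives: int, tb_each: float, gb_s_each: float) -> tuple[float, float]:
    """Capacity (TB) and ideal sequential throughput (GB/s) of a striped array."""
    return drives * tb_each, drives * gb_s_each


def frame_io_seconds(frame_tb: float, gb_s: float) -> float:
    """Time to read one frame's data and write the next, at full throughput."""
    return 2 * frame_tb * 1000 / gb_s


capacity_tb, throughput = raid0(drives=4, tb_each=32, gb_s_each=6)
frame_tb = 30e9 * 30 / 1e12  # 30 billion particles x 30 bytes = 0.9 TB
print(capacity_tb, throughput)  # 128 24
print(round(frame_io_seconds(frame_tb, throughput)), round(frame_io_seconds(1.0, throughput)))  # 75 83
```

The result is 75 seconds for 0.9TB and 83 seconds for a full 1TB, in line with the roughly 80-second figure.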

Some types of computing tasks are better suited to server scale. For workstation visual effects, 192GB is fine, and the big thing is that it's GPU memory too. Nobody else is making GPUs with unified memory like this. The Apple execs said there are real-world scenarios where you can't even open projects on competing hardware because it runs out of video memory.

-

Compared: Apple's 16-inch MacBook Pro versus MSI GE76 Raider

libertymatters said: Pretty devastating, the Max is almost half the GPU performance against the Nvidia GeForce RTX 3080 on the Geekbench 5 OpenCL test. The whitewashed spin here by AppleInsider is inappropriate.

OutdoorAppDeveloper said: Yes and for ray tracing the RTX 3080 will wipe the floor with the M1X Max GPU.

It depends on which raytracing setup is used. A real-time raytracing setup here, comparing a 2070 using DirectX raytracing with the M1, shows the 2070 over 30x faster. With the M1 Max, the difference should be under 10x:

https://www.willusher.io/graphics/2020/12/20/rt-dive-m1

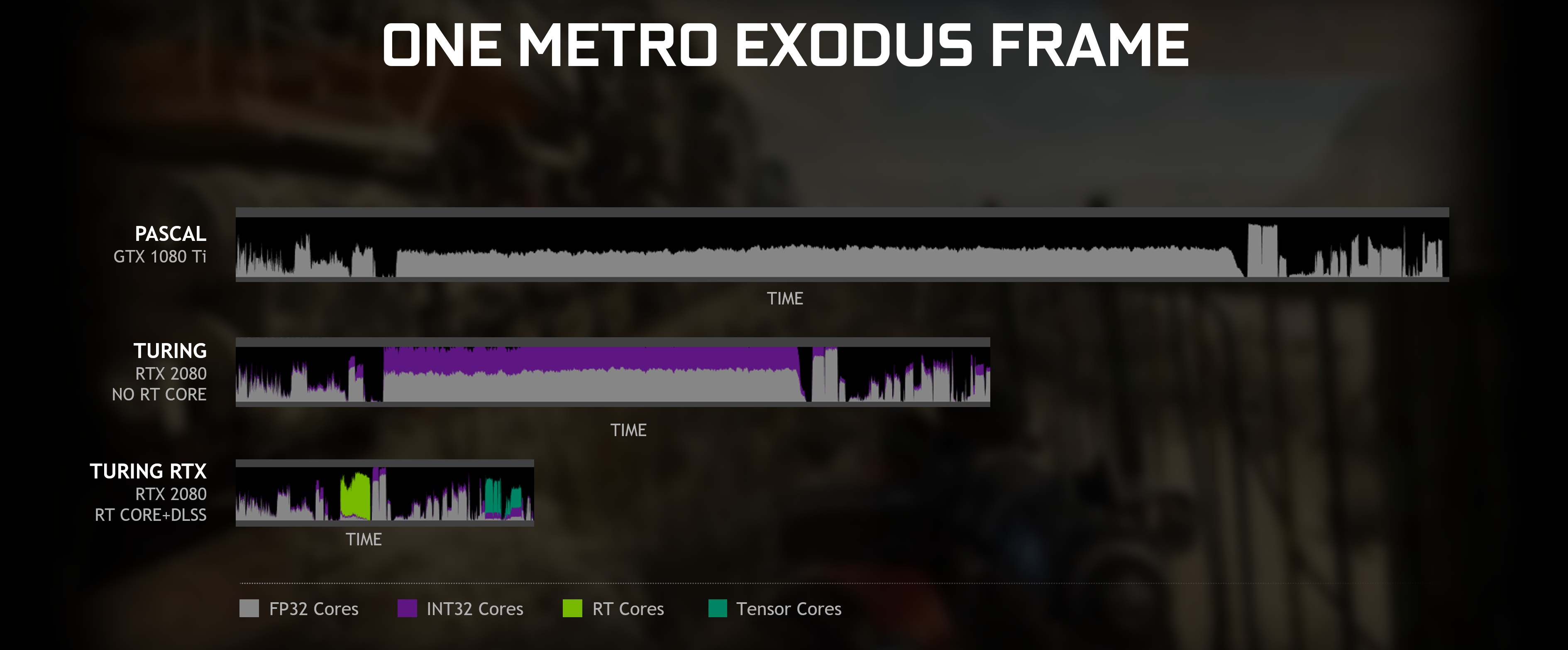

This is the difference Nvidia suggests when using RT cores:

https://www.nvidia.com/en-us/geforce/news/geforce-gtx-ray-tracing-coming-soon/

"when we examine just the ray tracing subset of the graphics workload, RTX 2080 is upwards of 10x faster."

But that page also shows why the gain depends so much on how it's used. Ray casting is only one segment of the frame, so only that part gets faster; the rest changes much less, so the overall frame time only drops to just under half.
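This is Amdahl's law: if only a fraction of the frame is accelerated, the untouched remainder caps the overall speedup. The 60% ray-casting share below is a hypothetical value chosen for illustration, not a figure from Nvidia's page.

```python
def amdahl_speedup(accelerated_fraction: float, factor: float) -> float:
    """Overall speedup when only `accelerated_fraction` of the work runs `factor` times faster."""
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / factor)


# Hypothetical split: ray casting is 60% of the frame and RT cores make it 10x faster
overall = amdahl_speedup(0.60, 10)
print(f"overall speedup {overall:.2f}x, frame time {1 / overall:.0%} of the original")
```

With a 10x faster ray-casting stage covering 60% of the frame, the frame takes 46% of its original time. That is about a 2.2x overall gain rather than 10x.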

Post-production raytracing in Redshift doesn't show the same difference, and this is compared with a desktop 3080, which came out around 25% faster.

In Octane, the gap was a bit bigger: a desktop 2080 Ti was around 2x faster and a 3080 Ti 3x faster. The M1 Max roughly matched a desktop 1080 Ti.

For interactive 3D, Apple Silicon runs smoother and quieter.

The Apple engineers said they had to justify every piece of dedicated processing in the chip. For them to justify raytracing cores like Nvidia's RT cores, there would have to be a use case, and due to the lack of gaming, there isn't one just now. Video codecs are used far more widely, so special media-processing hardware is much easier to justify and would be noticeable to most users.

If people have the money to buy both a PC and a Mac, they can easily buy both for their strengths. The Mac is clearly the better option for most use cases: development, video editing, photography, pretty much any professional workflow, especially mobile. The PC is better for gaming, some compute tasks and some special use cases for Nvidia GPUs. Buying both gives the best of both: do most work on the Mac, offload raw compute for some tasks to the PC and do gaming on the PC.

It would be nice to have a setup where you could just plug a PC into the Mac and control the PC like a virtual machine using the Mac mouse/keyboard to be able to use the XDR display for the PC side.

-

Apple's new iPad Pro gets M4 power, advanced Tandem OLED screens

discountopinion said: Woah.. NPU in M4 seems to be 2x of TOPS than M3... Memory bandwidth is also up 20%. GenAI here we go.

Can't wait to see an Ultra or Extreme version of this baby.

nubus said: M4 is delivering A17 Pro (2023) level NPU performance. Is it enough for 2024/2025 outside iPad Pro? Intel Lunar Lake is aiming for 45 TOPS. Will M4 Pro and Max deliver more?

TOPS numbers are not quoted the same way by different manufacturers. If they use INT8, they can double the number over FP16; if they use INT4, they can quadruple it.

Nvidia claims their 4090 is 1321 TOPS, but in practice it is only 2-3x faster than the M3 Max on AI tasks.

Some manufacturers are counting the whole device CPU + GPU + NPU, others just the NPU.

Apple's Neural Engine page lists iPhone numbers as FP16:

https://machinelearning.apple.com/research/neural-engine-transformers

M3 Max has a 14TFLOPS FP32 GPU, which is theoretically 28TFLOPS FP16 and 56TOPS INT8.

If the M4 Neural Engine is 38TOPS FP16, then it's 76TOPS INT8, and an M4 Max GPU would be 17TFLOPS FP32 = 68TOPS INT8, so the M4 Max total = 144TOPS INT8.

Nvidia's claim suggests the 4090 is nearly 10x faster (1321 vs 144), but it clearly isn't, and the following page shows numbers with INT4:

https://wccftech.com/roundup/nvidia-geforce-rtx-4090/

Further confusing the issue, some numbers include sparsity, a technique that skips zero-valued entries in matrices so more useful work fits into each operation, and that doubles the numbers again:

https://blogs.nvidia.com/blog/sparsity-ai-inference/

For the Nvidia 3090, it's described as 35TFLOPS FP32, 71TFLOPS FP16, 284TOPS INT8, 568TOPS INT8 with sparsity, 568TOPS INT4 and 1136TOPS INT4 with sparsity.

If Apple did the same, they'd go 144TOPS INT8 -> 288TOPS INT4 -> 576TOPS INT4 with sparsity. They could put 576 AI TOPS on a slide, but it's not a meaningful number.

For marketing, companies like Nvidia promote the highest numbers because they are trying to convince big data center contractors to go with their product over AMD/Intel and the latter do the same.

Intel could easily be quoting INT4-with-sparsity numbers, in which case divide them by 8 when comparing them with FP16 numbers.
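The normalization described above can be written as a small helper. It assumes the idealized rule from this post: each halving of precision doubles throughput, and sparsity doubles it again. Real hardware doesn't always follow that exactly; for example, FP16 throughput can depend on the accumulation mode. The table and function names are illustrative.

```python
# Throughput multiplier relative to dense FP16, using the idealized
# "halve the precision, double the number" rule
PRECISION_FACTOR = {"FP32": 0.5, "FP16": 1.0, "INT8": 2.0, "INT4": 4.0}


def fp16_equivalent(quoted: float, precision: str, sparse: bool = False) -> float:
    """Convert a quoted TOPS/TFLOPS figure to a dense-FP16-equivalent figure."""
    factor = PRECISION_FACTOR[precision] * (2.0 if sparse else 1.0)
    return quoted / factor


print(fp16_equivalent(14, "FP32"))                # 28.0 (M3 Max GPU)
print(fp16_equivalent(144, "INT8"))               # 72.0
print(fp16_equivalent(576, "INT4", sparse=True))  # 72.0: same hardware, inflated label
```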

It's not entirely wrong for them to quote lower-precision numbers, because some AI models can run at those precisions. But you can't compare values at different precisions to assess new hardware; you have to compare performance at the same precision.