wizard69

About

- Banned

- Username: wizard69

- Visits: 154

- Roles: member

- Points: 2,255

- Badges: 1

- Posts: 13,377

Reactions

-

How Apple Silicon on a M1 Mac changes monitor support and what you can connect

Mike Wuerthele said:

We're not happy about how Apple has labeled this either. We shouldn't have had to hammer on PR folks to find out.

Hap said:

You're right. I missed that Apple called them USB4 above. I think what is somewhat deceiving about them, though, is that they don't support a lot of USB4 speeds: only 10Gb/s and not the 20Gb/s or 40Gb/s. Note I'm talking about USB speeds and not TB speeds.

Mike Wuerthele said:

They are USB4.

Hap said:

To be clear. The ports are still USB3/TB3. They are not USB 4.

USB 4 would mean USB data rates of 40Gbps regardless of TB support. That is not the case for these ports.

According to Apple, the ports support this:

- Thunderbolt 3 (up to 40Gb/s)

- USB 3.1 Gen 2 (up to 10Gb/s)

Yes, they have TB3, but USB4 does not require TB to operate at those speeds.

While the TB3 thing is correct, Apple has told us that they support full USB4 speeds, and use a USB 4 controller, making them, beyond a shadow of a doubt, USB4. Just because a port supports a speed does not mean it is limited to that speed.

For the time being, though, there isn't very much around in regards to peripherals that support USB4.

Would be nice if they actually said it supports DisplayPort 2.0, which is part of the USB4 spec as well.

You are not the only ones that are not happy with Apple and how they have handled this release. It would be nice if you and AI can use whatever influence you have to express how revolting the dog and pony show was. Much of the confusion we are seeing would never exist if Apple had decent spec sheets for the machines, and spent far more time in the video concisely relaying information. Instead they went way out of their way to commit to nothing. As far as conveying useful information, the show is the worst I've seen from Apple.

This is really disgusting because M1 deserves better. I really believe we are at the foot of a steep hill of new innovation from Apple. At least that is my hope for the future of the company, because innovation in the Mac sector has been nonexistent for some time. The problem is Apple can make or break any product, and frankly they need to pull head from butt and stop treating their customers like idiots.

-

First Apple silicon Mac could debut on Nov. 17

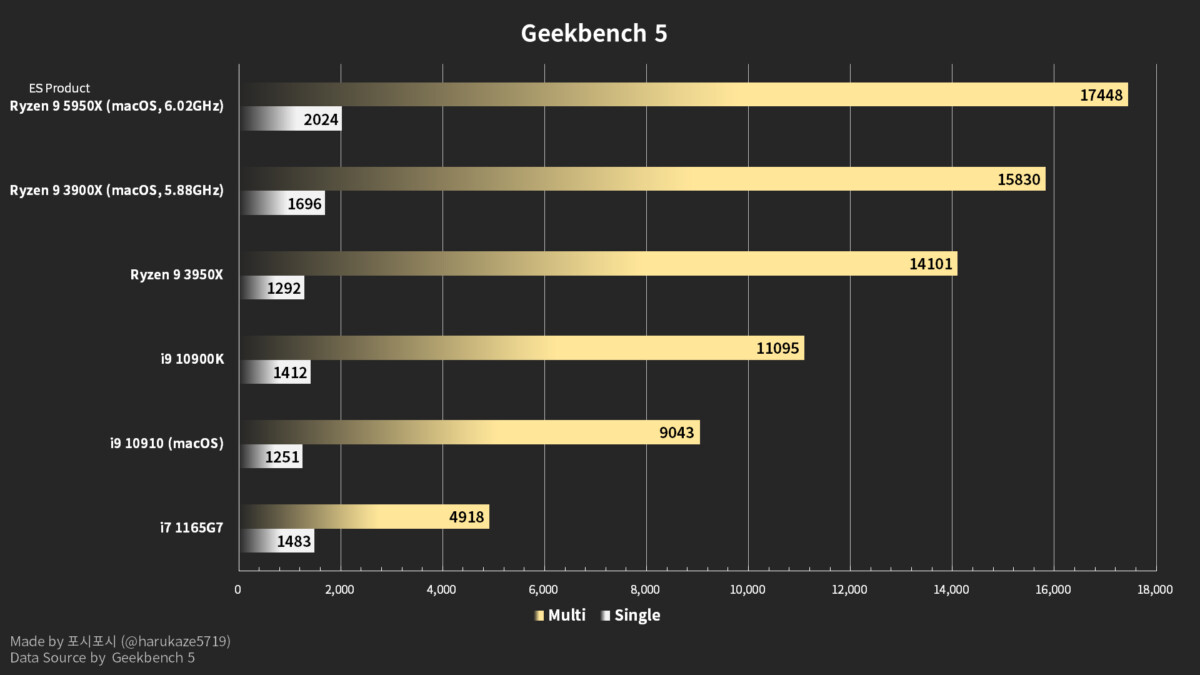

I don’t think a lot of people understand that beating Intel based hardware is easy. The real task for Apple will be competing against high performance AMD based hardware in 2021.

mdriftmeyer said:

From TechPowerUp article on a Hackintosh using the new Ryzen 9 5950X

https://www.techpowerup.com/273426/amd-ryzen-9-5950x-16-core-zen-3-processor-overclocked-to-6-ghz-and-geekbenched

This is without any Zen optimizations running on Apple Hardware with the latest Catalina, using an AMD Ryzen 9 5950X engineering sample.

Any questions? This is why Apple should have dumped Intel, gone AMD as it matured Apple Silicon for a few more years at least.

Personally I don’t see Apple having a problem here. The A Series is already a pretty good design to grow from. Simple things like clock rate increases and larger caches will only improve the base hardware. There are many unanswered questions, though, such as whether Apple adds SMT or blows out the number of cores available. Also, the area dedicated to the Neural Engine indicates to me that they will quickly flip the common performance metrics over. That is, general CPU cores may not have a big future at Apple.

-

First Apple silicon Mac not expected to launch until November

mjtomlin said:

It's rumored to sport the A14X processor, a custom GPU, and a battery life between 15 and 20 hours.

Umm, who started that rumor? And why am I hearing that the first ASi Mac will have an A14X in it? Apple has already said they were working on a new family of SoCs for the Mac.

I’m guessing there will be two new series; X-series for consumer and Z-series for Pro systems. And they will have an “M” variant for mobile (laptop). And they will use the same CPU and GPU cores as the A-series, as well as other logic units.

Here’s what I think we’ll get...

consumer desktop... X8, X12, X20

consumer laptop... X8M, X12M

pro desktop... Z16, Z20, Z28

pro laptop... Z16M, Z20M

The # in the name represents the CPU core count

X @ 3GHz, and Z @ 4GHz

Desktop SoCs will have only 2 efficiency cores, the rest will be performance cores.

Mobile SoC cores will be half performance and half efficiency.

GPU cores will vary from 8 to 16 (maybe higher on Pro systems).

Actually this is rather simple to address: the first Apple Silicon Mac, using the A14X, is likely to be the MacBook. The A14X would be a perfect fit for this micro laptop with just a few tweaks over the A13 series. These are tweaks that are likely to happen anyway to move the iPad Pros forward, and on 5nm they will not be a negative. In a nutshell, the A13 already has everything the MacBook requires; an A14X would just make it more compelling.

Now I'm in agreement that we will likely see a "laptop" processor real soon now. Laptop is in quotes because they will likely just run it at higher power levels for the Mac Mini and the entry level iMac (if those models remain in the lineup). What is really interesting is the core counts and whether SMT will be supported on these advanced processors. If there is no SMT then I can see Apple shipping lots of cores (fairly easy with ARM cores). I'm seriously thinking 16 cores as a minimum if there are no low power cores. However, 12 could be a minimum in an 8 + 4 configuration where 8 is the performance variant. The next step up would add 8 more cores to either approach, so we could see 24 core chips real early in Apple's silicon.

It gets even more interesting if you start to think about how Apple will implement these higher end SoCs. I can see Apple going the chiplet route, similar to AMD's approach, with possibly a different partitioning of responsibilities. An 8 + 4 chiplet with the GPU on the "I/O" die would make for a very interesting start. However, 8 + 4 would not be leveraging the 5nm process very well, which is why I can see 16 core chiplets as a minimum chiplet size. If they put the low power cores (a common need) on the I/O die / GPU, they would be able to scale to many cores quickly (think Mac Pro processor). So we would have 16, 32, 48 & 64 cores + 4 configurations.

This should be a no brainer for 5nm space wise. There will be issues with data transfers, but 5nm also allows for big caches. Also, the I/O die could completely delete the GPU for systems not needing it. In a nutshell, huge flexibility for Apple's more demanding Macs. And yes, this could mean a laptop with 32 cores, as the use of ARM plus 5nm should keep the power profile in check.
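The chiplet arithmetic above can be sketched as a quick enumeration. This is only an illustration of the speculation in this post, not anything Apple has announced: the names `CORES_PER_CHIPLET`, `IO_DIE_EFFICIENCY_CORES`, and `chiplet_configs` are made up for the example, and the 16 core chiplet plus 4 low power cores on the I/O die are the guesses stated above.

```python
# Hypothetical values taken from the speculation above, not from any Apple spec.
CORES_PER_CHIPLET = 16        # assumed performance cores per chiplet
IO_DIE_EFFICIENCY_CORES = 4   # assumed low power cores living on the I/O die


def chiplet_configs(max_chiplets=4):
    """Return (performance, efficiency, total) core counts for 1..max_chiplets chiplets."""
    configs = []
    for n in range(1, max_chiplets + 1):
        performance = n * CORES_PER_CHIPLET
        total = performance + IO_DIE_EFFICIENCY_CORES
        configs.append((performance, IO_DIE_EFFICIENCY_CORES, total))
    return configs


for performance, efficiency, total in chiplet_configs():
    print(f"{performance} + {efficiency} = {total} cores")
```

With up to four chiplets this lists the 16 + 4, 32 + 4, 48 + 4 and 64 + 4 configurations mentioned above.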

-

Apple rebuts House antitrust report, says developers 'primary beneficiaries' of App Store

Honestly Apple needs to be screwed over royally. A different management team could have taken the company in a less controversial direction and made more money. Instead they drove the company with anti-consumer policies, a massive amount of corporate double speak and frankly a lot of crappy products that didn’t live up to Apple’s legacy (Mac).

-

First Apple silicon Macs likely to be MacBook rebirth, iMac with custom GPU

The number one issue is that GPUs scale very well, and if you make a big GPU chip you have far more computational power.

Rayz2016 said:

I have a question, as a GPU layperson.

foregoneconclusion said:

I would imagine that the MacBook would continue to use the SoC style GPU just like the iPad. It will be interesting to see what Apple does for higher end hardware like the MBP or iMac line. Will it still be integrated, or will they do a discrete version of the GPU?

Rayz2016 said:

An in-house GPU eh?

This is where the bun fight starts.

If they’re using their own tech for GPUs then what is the advantage of a discrete GPU?