auxio

About

- Username: auxio
- Joined
- Visits: 142
- Last Active
- Roles: member
- Points: 5,065
- Badges: 2
- Posts: 2,796

Reactions

-

Instagram chief's mic drop: 'Android's now better than iOS'

ok, so now we get a debate with more than talking points...

I have a combination of many new and old systems of all kinds. Over the years I've used Commodore, Atari, Intel PC, Mac, SGI, Sun (probably more I can't remember).

avon b7 said:

I'm pretty much 'old school' in many ways and all my Macs are vintage now. Like me! LOL.

muthuk_vanalingam said:

You are not alone on the bolded part. It is my experience as well.

avon b7 said:

Personally I much prefer Android, but Android comes in infinitely more flavors than iOS, so it's impossible to have used them all to any real degree. The same applies to iOS and the changes from one version to another.

I'd say that over recent years, iOS has taken a huge amount of influence from Android. Although it is a two-way street, it definitely looks like Apple is loosening its grip on key areas like personalisation.

For me, as someone who constantly has to dip into iOS to resolve issues, it can be very frustrating to see how some things seem so kludgy there.

That could be due to my wife's particular situation/configuration or how certain apps 'behave' but it doesn't feel intuitive to me.

"how certain apps 'behave' but it doesn't feel intuitive to me" - I also have the same feeling but I always thought it was due to me being born and brought up with Windoze OS. I am surprised to see this from you, a person who has primarily MacOS experience.

I've used Windows from 3.1 to 11, but stopped using it daily when I discovered Linux (way before Android was a thing). It was nice to have a computer which didn't need to be rebooted daily, and I was studying computing science at the time, so it fit well. After that I only used Windows for games and cross-platform testing.

I have never used Windows though as a daily driver. I only touch Windows to try and solve problems.

I see things differently too.

I tend to see things differently to the majority. For example, I was overjoyed when Intel hit their performance bottlenecks from around 2009 onwards.

It kind of killed the need to upgrade to more modern systems just so that the system wouldn't creak under the weight and lack of RAM!

After studying many operating systems in school and coming to understand the design behind them, plus working on rock solid UNIX systems, I really wanted to support the systems which had the best design and thinking behind them. At the time, Macs were still stuck on the old Mac OS, which was archaic, and I could see that the execs had no vision (just riding out existing technology). I was pretty keen on a DEC Alpha running Linux, but it was prohibitively expensive for a student.

The Wintel monopoly was in full force, and I certainly wasn't a fan of their business tactics (locking PC vendors into paying for Windows even if they didn't bundle it).

Then I read about Rhapsody (what eventually became Mac OS X) and how it used the Mach microkernel (great OS design at the time) and I knew I had to get a Mac as soon as it was released. And yes, Windows NT was also based on this kernel design (probably the only version of Windows I enjoyed using), but the business tactics are what kept me from supporting Microsoft.

So the TiBook was where I started with Mac.

I only ever owned one iMac (27" i7 circa 2011), and it still works to this day, though the fan rattles terribly. Can't say that it was my favourite Mac, but it was a powerhouse and the big screen was beautiful for its time. The only Mac I've ever seen with hardware problems was the infamous MacBook Pro that switched GPUs between Intel integrated graphics and NVIDIA (it would crash shortly after switching to NVIDIA). That's the one which led to a class action lawsuit and to Apple cutting ties with NVIDIA.

I literally had no need for a new system for a full 10 years, and surely more if Apple's terrible iMac thermal design hadn't killed the graphics card in it. That was unheard of in those days. For my needs it would have sufficed for many more years (full disclosure: it was an I and powerful from the get-go).

I've designed Mac apps which follow Apple UI guidelines for years and don't remember removing 'save as...' ever being something Apple pushed. They did make it so that Mac apps would automatically save the application state periodically (autosave became a default behaviour if your app used their APIs), and I remember some apps replacing save functionality with that feature so that users didn't have to remember to hit save all the time. But it was never mandated that apps remove "save" or "save as..."

From a software perspective things were different, and I really hated the changes that appeared from around when they got rid of 'save as...'
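The autosave behaviour mentioned above can be illustrated with a minimal sketch in Python. This is not Apple's API: on the Mac, an NSDocument subclass opts in through the `autosavesInPlace` class property and AppKit then saves edits periodically on the app's behalf. The `AutosavingDocument` class, its `interval_seconds` parameter and the injectable `clock` are hypothetical names chosen for illustration. The sketch tracks unsaved edits, writes them out once an interval has passed, and swaps in a temp file so a crash mid-write can't leave a half-written document.

```python
import os
import tempfile
import time


class AutosavingDocument:
    """Hypothetical document model: edits mark it dirty and a periodic
    check writes the contents to disk, so the user never has to hit Save."""

    def __init__(self, path, interval_seconds=30.0, clock=time.monotonic):
        self.path = path
        self.interval = interval_seconds
        self.clock = clock          # injectable so behaviour can be tested without waiting
        self.text = ""
        self.dirty = False          # True when there are edits not yet on disk
        self.last_save = clock()

    def edit(self, new_text):
        self.text = new_text
        self.dirty = True

    def autosave_if_needed(self):
        """Save only when there are unsaved edits and the interval has elapsed."""
        if not self.dirty or self.clock() - self.last_save < self.interval:
            return False
        self._write_atomically()
        self.dirty = False
        self.last_save = self.clock()
        return True

    def _write_atomically(self):
        # Write to a temp file in the same directory, then replace the target,
        # so readers only ever see the old or the new contents.
        directory = os.path.dirname(os.path.abspath(self.path))
        fd, tmp_path = tempfile.mkstemp(dir=directory)
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            f.write(self.text)
        os.replace(tmp_path, self.path)
```

A real app would call `autosave_if_needed()` from a timer or run loop; the user just keeps editing and the file on disk catches up.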

Not sure why you didn't just use Terminal for everything. Apple realized that most power users were just using the command line for advanced functionality, and so they stopped making things which could accidentally damage the OS/system easily available to everyone (probably because of the support costs and/or lawsuits). But it was always there if you knew the commands. And you could find apps which wrapped those commands if you really wanted (I downloaded a nice firewall rule management app which wrapped the ipfw commands).

I didn't like many things after that. Sometimes little things like how Disk Utility, erm, had its 'utility' taken away. Hiding the System Folder etc.

iTunes was breaking under its own bloat at the time and the whole syncing process was getting terribly out of control.

I remember once just adding a book (a .pdf), less than 1MB, and watching in agony as iTunes went through its seven- or eight-step sync routine - and failed! Utterly.

A long story but definitely a WTF! moment.

I'll agree that iTunes was a bit of a mess for a while. But no one else at the time had anything better. I remember a few mobile phone manufacturers were actually hijacking the syncing functionality from it for their own phones instead of writing their own syncing software. Now THAT behaviour (much like the clone and own behaviour of Google) is something which really makes me say "WTF! Pay for the development of your own damn software!"

With iOS, Apple was trying to remove as much complexity as possible from using computers. Part of removing that complexity was realizing that most people who use computers end up with a mess of files everywhere that they don't know how to navigate (or don't care). And navigating them on a small screen would just make it more difficult. So they were designing the system to automatically route files/documents into the apps which could handle them. Just choose the right app to open the document in, and each app manages a set of documents related to it. Global search allows you to find your documents no matter which app they're in. I actually thought it was a pretty clever system, but obviously limiting for some scenarios, which is why they eventually created the Files app (where you can see the underlying folders each app is using for its files). But I'd be willing to bet that most iPhone users barely touch the Files app, if at all.

That all bled into iOS.

I just wanted to be able to copy a file over to an iPhone and have it read but Apple was saying 'no' you MUST jump through all these hoops and do it 'OUR' way.

This kind of situation has been seen too often and in the end Apple has changed how it does things. But only after a lot of suffering.

Now we have the 'Files' app but for far too long people defended the 'Apple Way'. Attachments being forced into the cloud? Why?

<many more points related to wanting features to stay the same>
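The app-centric document model described above (files routed to an app that can handle them, each app managing its own documents, and global search across all of them) can be sketched roughly as follows. This is a toy Python illustration under simplifying assumptions, not how iOS implements it: real iOS matches documents to apps by declared document types (Uniform Type Identifiers) rather than bare file extensions, and the `App`/`DocumentRouter` classes and the app names used with them are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class App:
    """A hypothetical app that declares which file types it can open."""
    name: str
    handled_extensions: set
    documents: list = field(default_factory=list)  # documents this app manages


class DocumentRouter:
    """Toy model: files are handed to an app instead of living in a shared folder tree."""

    def __init__(self, apps):
        self.apps = apps

    def open_in(self, filename, app_name=None):
        """Route a file to an app that can handle it and return that app's name."""
        ext = filename.rsplit(".", 1)[-1].lower() if "." in filename else ""
        candidates = [app for app in self.apps if ext in app.handled_extensions]
        if app_name is not None:
            # The user picked a specific app ("Open in..."); it must support the type.
            candidates = [app for app in candidates if app.name == app_name]
        if not candidates:
            raise ValueError(f"no suitable app can open {filename!r}")
        target = candidates[0]
        target.documents.append(filename)
        return target.name

    def search(self, query):
        """Global search: find documents no matter which app owns them."""
        q = query.lower()
        return [
            (app.name, doc)
            for app in self.apps
            for doc in app.documents
            if q in doc.lower()
        ]
```

The user never sees a folder hierarchy; they only pick an app and search. The trade-off, as the thread points out, is that anything which doesn't fit those routes needs an escape hatch like the Files app.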

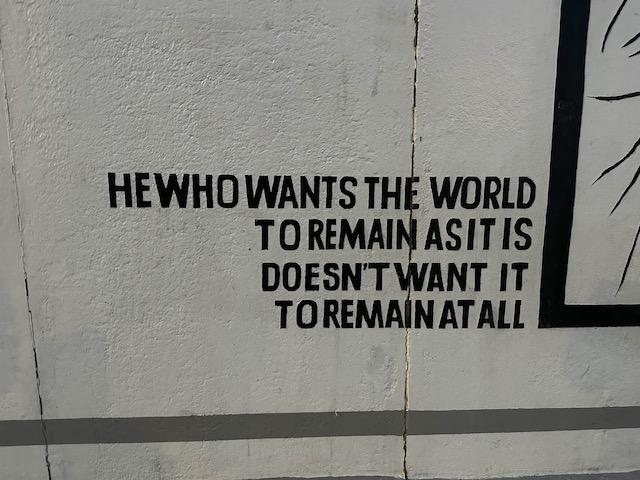

Just as we moved away from computers using punch cards, added screens, put all the components in one box, added a graphical interface, etc., etc., things need to move forward and become easier to use. Apple is one of the few companies willing to take risks, potentially making mistakes along the way, to move things forward. But there are always some people desperately wanting things to stay the same because they only see one way to do things. They aren't willing to shift their perspective and embrace new ways of doing things, as they likely did when they were younger and still learning about life.

Here's a relevant piece of art which caught my eye in Berlin while I was there recently:

-

Instagram chief's mic drop: 'Android's now better than iOS'

From an application developer perspective (both Android and iOS), Android's technology stack is a mess compared to iOS's. They put Kotlin on top of Java, which is basically just a coating of syntactic sugar (Java was already a pretty high-level language). Java has no direct interoperability with native languages like C/C++ (calls have to go through the Java Native Interface, JNI), so if you do need to do more advanced things, or make things work cross-platform, it takes 2-3x more effort. And don't even get me started on how awful Android Studio is ("Repair IDE" is one of the menu items in it, no joke).

avon b7 said:

Personally I much prefer Android, but Android comes in infinitely more flavors than iOS, so it's impossible to have used them all to any real degree. The same applies to iOS and the changes from one version to another.

I'd say that over recent years, iOS has taken a huge amount of influence from Android. Although it is a two-way street, it definitely looks like Apple is loosening its grip on key areas like personalisation.

For me, as someone who constantly has to dip into iOS to resolve issues, it can be very frustrating to see how some things seem so kludgy there.

That could be due to my wife's particular situation/configuration or how certain apps 'behave' but it doesn't feel intuitive to me.

Not surprisingly, most people just create web apps in JavaScript on Android. That is likely Google's plan anyway, since it's easier for them to gather rich data on customers via the web than by monitoring via Android itself (where figuring out what people are doing is more complex).

Apple's equivalent technologies (Objective-C and Swift) are very cleanly designed and intuitive, and integrate easily with cross-platform code, which isn't surprising since they were designed in-house rather than via clone and own (Java) or by a 3rd party (JetBrains created Kotlin).

I think most consumer problems with iOS stem from the fact that Google invests heavily in Chromium/web technologies at the expense of its native technologies, whereas Apple does the reverse. So if you spend your whole life in web apps and/or a browser, the experience on Android is going to be better. Also, most web apps completely disregard any sort of platform UI standards. So again, if you're used to the wild west of web app/page interfaces, the native platform interfaces are going to seem less intuitive.

As someone who tries to avoid using web apps as much as possible because I find them slower to use, less intuitive, and battery killers, I find iOS very intuitive to configure and navigate.

-

Apple's Windows Game Porting Toolkit gets faster with new update

Computer graphics is definitely one of the more complex areas of programming. I remember first trying to learn it (and now I'll be dating myself) from Michael Abrash's articles on the Quake engine back in the late 1990s, and having a tough time wrapping my head around it. I eventually learned it from the CG bible, where it's presented more formally and without the complexity of clever optimizations.

jblongz said:

How are people making high-end games for iOS/MacOS without tools like Unreal Engine or Unity? I've read some introductory documents about Metal and it seems so complex just for that one area of game development. Does anyone know what the Apple pipeline really looks like?

Anyway, once you wrap your head around the concepts and how GPUs work, Metal is no different from other modern GPU rendering architectures like Vulkan. I actually find MSL (Metal Shading Language) to be much easier to use than GLSL and other shader languages. And Apple's rendering pipeline makes problems much easier to debug than DirectX does (at least, when I used it about 10 years ago). But yes, if you're only used to a prepackaged 3D engine like Unreal or Unity, the internals of how they work (using DirectX/Vulkan/Metal) can certainly be daunting.
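As a small illustration of the kind of concept that carries over between Metal, Vulkan and DirectX, here is the perspective projection a vertex shader typically applies, written in Python rather than MSL so it runs anywhere. It is a sketch under stated assumptions: a right-handed view space with the camera looking down -z, and depth mapped to [0, 1], which matches the clip-space depth convention of Metal, Vulkan and Direct3D (OpenGL uses [-1, 1]). The function names are hypothetical. The vertex shader outputs the clip-space position; the divide by w is done afterwards by the GPU's fixed-function hardware.

```python
import math


def perspective_rh_zo(fovy_radians, aspect, near, far):
    """Right-handed perspective matrix (camera looks down -z), depth mapped to [0, 1].
    Row-major 4x4, applied to column vectors as M @ v."""
    f = 1.0 / math.tan(fovy_radians / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, far / (near - far), near * far / (near - far)],
        [0.0, 0.0, -1.0, 0.0],  # w_clip = -z_view, used for the perspective divide
    ]


def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def project(point, proj):
    """View-space point -> clip space (vertex shader output) -> normalized device coordinates."""
    x, y, z = point
    cx, cy, cz, cw = mat_vec(proj, [x, y, z, 1.0])
    return (cx / cw, cy / cw, cz / cw)  # perspective divide (done by the GPU)
```

With a 90° field of view, a point on the near plane lands at depth 0 and one on the far plane at depth 1. The APIs mostly differ in conventions like this depth range and the direction of the y axis, not in the underlying math.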

-

Why Apple uses integrated memory in Apple Silicon -- and why it's both good and bad

And this really gets at the core of the mindset of PC users who have an irrational hatred of Apple: we do it this way, so why does Apple think they're special?

lam92103 said:

So every single PC or computer manufacturer can use modular RAM. Including servers, workstations, data centers, supercomputers. But somehow the Apple chips cannot and are trying to convince us that it is not just plain & simple greed??

That mindset typically carries into other areas of life too: why doesn't everyone speak the same language, worship the same god, look the same, etc., etc. They feel a need for everything to be the same, and for some reason want to force everything to be that way. I'd really like to know the reason why, because for me, diversity is what makes life interesting. And in both nature and technology, it has proven to have great benefits for survival and progress.

-

China tells Apple to beef up its data security practices

Enshrined might not be the right word, but Tim Cook has called privacy a "fundamental human right" on a number of occasions. It's an important statement to make given how many other companies (and governments) have turned the internet into one big tracking and profiling system.

chutzpah said:

What?

AppleInsider said:

There have been lapses, such as lawsuits over alleged data collection practices, but it has typically enshrined privacy as a human right.

They have a decent track record for privacy and take it seriously, but "enshrine privacy as a human right" is abject nonsense.