Marvin

About

- Username: Marvin
- Visits: 131
- Roles: moderator
- Points: 7,013
- Badges: 2
- Posts: 15,588

Reactions

-

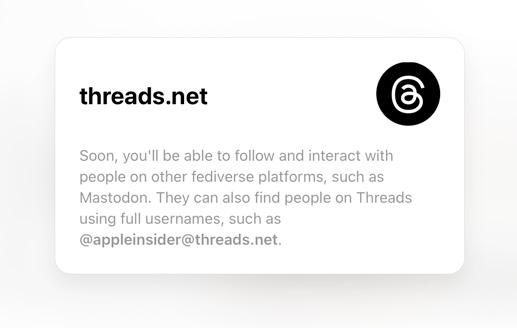

Meta's Instagram soft-launches Threads to take on Twitter

ericthehalfbee said: I see AppleInsider already has over 2,000 followers. Curious what you think of that?

jSnively said: More please?

Alex1N said: Not on ‘Threads’ for me, see above. Mastodon/Toot!, ok, but not on Meta.

They have an interesting setup:

https://www.slashgear.com/1332608/meta-threads-fediverse-new-explained/

Bluesky seems to be set up in a similar way:

https://educatedguesswork.org/posts/atproto-firstlook/

Federated networks look like the popular way forward for social media: each network can communicate with the others, and you sign up to whichever one you feel most comfortable with.

Threads looks very clean and fast, and Meta can market it directly to billions of people. They can scale this up very quickly.

The main thing that people need from chat networks is ease of use and reliability; few people are aware of the privacy implications of any online service.

A lot of companies could get in on this, even services like YouTube with video comments. You could have an account on Threads, Mastodon or Bluesky and leave a comment on a YouTube video. The video viewer can choose where to see comments from.

Apple App Store reviews/ratings could be posted from a social network. They'd just need to get a token to verify that a user has an app. The review/rating could be seen by the person's followers.
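A minimal sketch of the kind of token that could work, assuming the store signs a (user, app) pair with a secret key and checks that signature when a review arrives from another network. The key, the ID formats and both function names are hypothetical, not an actual App Store API; a real system would more likely use public-key signatures so that other parties could verify the token too.

```python
import hashlib
import hmac

# Hypothetical signing key held only by the store (not a real Apple API).
STORE_KEY = b"example-store-secret"


def issue_ownership_token(user_id: str, app_id: str) -> str:
    """Store side: sign the (user, app) pair to attest that the user has the app."""
    message = f"{user_id}:{app_id}".encode()
    return hmac.new(STORE_KEY, message, hashlib.sha256).hexdigest()


def verify_ownership_token(user_id: str, app_id: str, token: str) -> bool:
    """Store side: accept a review posted from a social network only with a valid token."""
    expected = issue_ownership_token(user_id, app_id)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, token)


if __name__ == "__main__":
    token = issue_ownership_token("alice@threads.example", "com.example.app")
    print(verify_ownership_token("alice@threads.example", "com.example.app", token))  # True
    print(verify_ownership_token("alice@threads.example", "com.example.other", token))  # False
```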

This moves social media closer to email, where the user signs up to any mail service and uses any mail server. A message is tagged with a sender and recipient address; the server identifies the recipient and delivers it. The same can be done with public messages, where people have an identity and can pick any number of destinations. The destinations would be more granular, like apple/appstore/appid/reviews, apple/appstore/appid/complaints or threads/account/commentid/replies.

A single app like Apple Messages could let you post to all destinations. If an account is blocked on any service, its messages get rejected at that destination.
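A rough sketch of that email-style routing, assuming the first path segment of a destination names the service and each service keeps its own block list. The `Message` and `Service` classes and the `deliver` function are hypothetical and don't correspond to any existing protocol such as ActivityPub or the AT Protocol.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    sender: str              # the poster's identity, e.g. "alice@threads.example"
    destinations: list[str]  # e.g. "apple/appstore/appid/reviews"
    body: str


@dataclass
class Service:
    name: str
    blocked: set[str] = field(default_factory=set)                 # blocked sender identities
    inbox: dict[str, list[Message]] = field(default_factory=dict)  # path -> accepted messages

    def receive(self, path: str, message: Message) -> bool:
        """Accept a message at `path` unless the sender is blocked on this service."""
        if message.sender in self.blocked:
            return False
        self.inbox.setdefault(path, []).append(message)
        return True


def deliver(message: Message, services: dict[str, Service]) -> dict[str, bool]:
    """Route a message to every destination; the first path segment picks the service."""
    results = {}
    for destination in message.destinations:
        service_name, _, path = destination.partition("/")
        service = services.get(service_name)
        # Unknown services and blocked senders both count as rejected.
        results[destination] = service is not None and service.receive(path, message)
    return results


if __name__ == "__main__":
    services = {
        "apple": Service("apple", blocked={"spammer@example"}),
        "threads": Service("threads"),
    }
    post = Message(
        "alice@threads.example",
        ["apple/appstore/appid/reviews", "threads/account/commentid/replies"],
        "Great app",
    )
    print(deliver(post, services))
    # {'apple/appstore/appid/reviews': True, 'threads/account/commentid/replies': True}
    spam = Message("spammer@example", ["apple/appstore/appid/reviews"], "Buy now")
    print(deliver(spam, services))
    # {'apple/appstore/appid/reviews': False}
```

One message fans out to several services, and each service applies its own block list independently, so a block on one service doesn't stop delivery elsewhere.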

This kind of messaging could replace everything - email, Slack, SMS, social media. It just needs a robust protocol, ease of use and reliability.

-

Getting started with Apple Vision Pro developer software

jellybelly said: Can Parallels or VMWare be used to run the beta development tools? (and beta MacOS needed?)

There's a page here saying macOS 14 can be used with Parallels as long as the host is on macOS 13 Ventura:

https://kb.parallels.com/129770

Another route is to set up dual boot:

Dual boot will probably give the best performance, but shutting down the system to switch can get annoying because all the main apps and data are on the other partition.

-

Mac Pro M2 review - Maybe a true modular Mac will come in a few more years

9secondkox2 said: Your argument is basically that Apple should not have waited and just released the same lame Mac Pro earlier. If this is all the Mac Pro is meant to be - a Mac Studio with more ports and PCI-E slots, then sure. And it’s obvious they absolutely could have done that. But they didn’t. And it’s likely for a few reasons combined: The only problem is that it is such a disappointment that they may have done more harm than good. When the Mac Studio was announced, we were given the expectation that this was somehow the iMac replacement when coupled with the Studio Display. Shortly thereafter, we were told that the Mac Pro story would continue later. The iMac was never a Mac Pro level machine and the Mac Studio wasn't either. Hence the wait. When the AS Mac Pro launched and was revealed to be on the level of an iMac replacement, it was bound to disappoint. And it did/does. Apple really truly should have waited until they were ready to shock the world. Instead they took the pinnacle of computing and made it ordinary and vastly underwhelming. Such a shame. Next year, there is an opportunity to reclaim some honor, but the shame of this launch will haunt them for at least a year.

There's no shock going to happen with a boring tower PC; nobody cares about these legacy types of computer any more.

Whenever people describe what Apple should do to make a 'real Mac Pro', all they ever say is make it faster. But most Mac Pro buyers don't buy the higher-end models. The vast majority of Mac Pro users bought mid-range models, and the Ultra chips are way beyond those at the same price point.

Apple delivered all they needed to for most of their customers.

9secondkox2 said: As far as Unity, it’s lower tier stuff. Your argument amounts to comparing a turd made with gold to a crown made with bronze. You can point out examples of stuff made with superior tools that look as bad as Unity graphics, but we both know that’s a disingenuous argument as art direction, budget, and creative talent have everything to do with that. The capability ceiling for Unreal 5 and even CryEngine is light years above Unity. There is a reason most of the Mandalorian “CGI” is made with Unreal 5. It looks indistinguishable from real life.

The realism of the virtual sets is primarily due to the assets. Photogrammetry assets in Unity look photoreal too.

https://www.youtube.com/watch?v=d4FGrVh5ZrI&t=24s

Unreal is the better engine for visual quality, but the difference isn't as big as you are suggesting. With good assets, shaders and lighting, Unity can render photorealistic results; Unreal just tends to have that out of the box.

https://www.youtube.com/watch?v=SDN22snbfZA

Unreal also isn't excluded, there will be Unreal content made for Vision Pro.

-

Reducing crushed black levels on XDR displays

chutzpah said: Maybe I'm alone, but I can see almost no difference, and if anything the images on the left look better.

This was common with OLED phones at first: people initially preferred the more saturated colors, but after a while, heavily saturated colors look unnatural.

Some of the visual quality difference here is due to YouTube compression. Brighter images show the compression noise. Crushing the black levels hides the noise but also the detail. With higher-quality video compression, it just hides the detail.

One of the most obvious differences is the steps in the second image. The top step is much less visible on the left side.
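A toy illustration of why crushed blacks hide both the noise and the detail: if a tone curve maps every value below some black point to 0, dark values that used to differ slightly, whether from real edges like a step or from compression noise, all end up identical. The black point of 16 and the stretch back to 0-255 are assumptions for illustration, not how any particular display or video pipeline works.

```python
def crush_blacks(value: int, black_point: int = 16) -> int:
    """Toy tone curve on an 8-bit value: everything below `black_point`
    becomes pure black, and the remaining range is stretched back to 0-255."""
    if value < black_point:
        return 0
    return round((value - black_point) * 255 / (255 - black_point))


if __name__ == "__main__":
    # Slightly different dark values, standing in for shadow detail
    # (like the edge of a dark step) and for compression noise.
    shadows = [4, 7, 10, 13]
    crushed = [crush_blacks(v) for v in shadows]
    print(crushed)  # [0, 0, 0, 0]
    print(len(set(shadows)), "->", len(set(crushed)))  # 4 -> 1
```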

You have to view these images on an XDR display to see the difference. The images will look fine on an IPS display.

-

Geekbench reveals M2 Ultra chip's massive performance leap in 2023 Mac Pro

mikethemartian said: I would like to see a comparison where someone performs the same physics 3D simulation with a dataset larger than 192GB with a maxed out 2023 Mac Pro vs a maxed out 2019. Based on past experience I would expect the 2023 to crash once the memory usage gets close to the max.

twolf2919 said: Count me naive, but I'd love to know what single simulation requires an in-memory dataset that large. Not saying they don't exist - I'm just interested in what they are. And whether they're typically done on a desk-side machine vs. on a supercomputer (e.g. weather modeling).

Particle simulations need to store data per particle per frame: x, y, z position (3 x 4 bytes), lifetime (4 bytes), x, y, z scale (3 x 4 bytes), rotation (4 bytes) and color (4 bytes), for 36 bytes per particle. With 1 billion particles, that's 36GB of simulation data per frame, or 72GB if it needs to refer to the last frame for motion. 192GB still has a lot of headroom.
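The same particle arithmetic as a quick script, assuming each value is a 32-bit float (4 bytes, as in the breakdown), color is packed into 4 bytes, and decimal units (1GB = 10^9 bytes):

```python
FLOAT32_BYTES = 4

# Per-particle attributes from the breakdown above.
attribute_bytes = {
    "position (x, y, z)": 3 * FLOAT32_BYTES,
    "lifetime": FLOAT32_BYTES,
    "scale (x, y, z)": 3 * FLOAT32_BYTES,
    "rotation": FLOAT32_BYTES,
    "color": 4,  # assumption: packed RGBA, one byte per channel
}

bytes_per_particle = sum(attribute_bytes.values())
particles = 1_000_000_000
frame_gb = bytes_per_particle * particles / 1e9
two_frames_gb = 2 * frame_gb  # current frame plus the previous one for motion

print(bytes_per_particle)       # 36
print(frame_gb, two_frames_gb)  # 36.0 72.0
print(192 - two_frames_gb)      # 120.0 GB left on a 192GB machine
```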

3D scenes similarly need a lot of memory for textures and heavy geometry. A 4K texture is 4096 x 4096 x 4 bytes = 67MB. 1000 textures = 67GB.
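The texture numbers worked through, assuming uncompressed 8-bit RGBA (4 bytes per pixel), no mipmaps and decimal units:

```python
def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Uncompressed size of one texture without mipmaps (assumes 8-bit RGBA)."""
    return width * height * bytes_per_pixel


one_4k = texture_bytes(4096, 4096)
print(one_4k)                           # 67108864 bytes, ~67MB
print(round(1000 * one_4k / 1e9, 1))    # 67.1 (GB for 1,000 textures)
```

A full mipmap chain would add roughly another third on top of each texture, and GPU texture compression would shrink it, so this is a ballpark rather than an exact budget.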

There are scenarios that people can pick that would exceed any machine. A 1 trillion particle simulation here uses 30TB of data per frame (30 bytes x 1 trillion):

https://www.mendeley.com/catalogue/c72a2564-5656-343a-a098-5c592ce91433/

The solution for this is to use very fast PCIe SSD storage in RAID instead of RAM, e.g. four 32TB boards at 6GB/s each = 128TB of storage at 24GB/s. It's slower to use: a 1TB-per-frame simulation (about 30 billion particles) would take around 80 seconds per frame to read and write. It's still doable, just slower, and it has to write to disk anyway. Not to mention that even with 10:1 compression, that data would still need hundreds of TBs of storage across a sequence of frames.
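The storage arithmetic as a quick script, assuming ideal RAID 0 scaling across the four boards, 30 bytes per particle as in the trillion-particle example, decimal units, and a hypothetical 100-frame sequence for the compressed total:

```python
GB = 1e9
TB = 1e12

# Four SSD boards striped together (RAID 0), figures from the post.
boards = 4
board_capacity_tb = 32
board_speed_gb_s = 6

capacity_tb = boards * board_capacity_tb  # 128
speed_gb_s = boards * board_speed_gb_s    # 24, assuming perfect scaling


def frame_tb(particles: float, bytes_per_particle: int = 30) -> float:
    """Simulation data per frame, in TB."""
    return particles * bytes_per_particle / TB


def frame_io_seconds(size_tb: float) -> float:
    """Time to write a frame out and read it back at the array's speed."""
    return 2 * size_tb * TB / (speed_gb_s * GB)


print(capacity_tb, speed_gb_s)        # 128 24
print(frame_tb(1e12))                 # 30.0 (1 trillion particles)
print(round(frame_tb(30e9), 2))       # 0.9 (30 billion particles)
print(round(frame_io_seconds(1.0)))   # 83 seconds for a 1TB frame
print(frame_tb(1e12) / 10 * 100)      # 300.0 TB for 100 frames at 10:1
```

The ~83 seconds for a 1TB frame lines up with the rough 80-second figure, and the compressed total shows how a modest frame count already reaches hundreds of TBs.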

Some types of computing tasks are better suited for server scale. For workstation visual effects, 192GB is fine, and the big thing is that it's GPU memory too. Nobody else is making unified-memory GPUs like this. The Apple execs said there are real-world scenarios where you can't even open projects on competing hardware because it runs out of video memory.