Inner workings of Apple's 'Face ID' camera for 'iPhone 8' detailed in report

Apple is widely rumored to integrate a cutting-edge facial recognition solution in a next-generation iPhone many are calling "iPhone 8," and a report published Friday offers an inside look at the technology before its expected debut on Tuesday.

In a note to investors seen by AppleInsider, KGI analyst Ming-Chi Kuo details the components, manufacturing process and behind-the-scenes technology that make Apple's depth-sensing camera tick.

Referenced in HomePod firmware as Pearl ID, and more recently as "Face ID" in an iOS 11 GM leak, Apple's facial recognition system is expected by some to serve as a replacement for Touch ID fingerprint reader technology. As such, the underlying hardware and software solution must be extremely accurate, and fast as well.

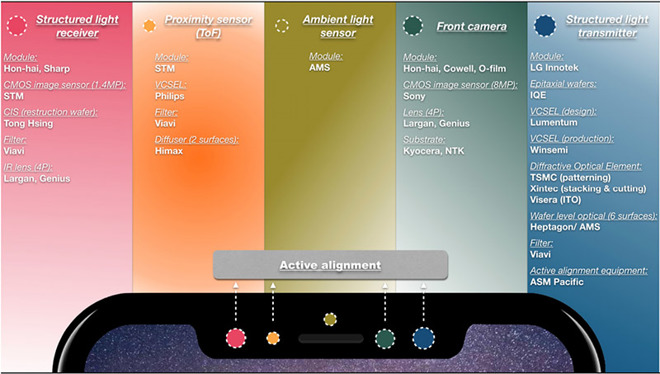

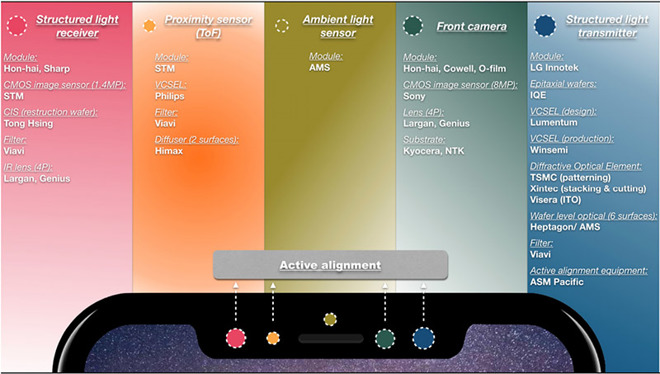

According to Kuo, Apple's system relies on four main components: a structured light transmitter, structured light receiver, front camera and time of flight/proximity sensor.

As outlined in multiple reports, the structured light modules are likely vertical-cavity surface-emitting laser (VCSEL) arrays operating in the infrared spectrum. These units are used to collect depth information which, according to Kuo, is integrated with two-dimensional image data from the front-facing camera. Using software algorithms, the data is combined to build a composite 3D image.
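One common way to combine a depth map with a 2D image is to back-project each pixel through a pinhole camera model and attach that pixel's color to the resulting 3D point. The sketch below illustrates that general idea only. The function name, the intrinsics (`fx`, `fy`, `cx`, `cy`) and the assumption that the depth map and color image are already aligned pixel for pixel are all hypothetical, and nothing here is taken from Kuo's note or from Apple's software.

```python
# Illustrative sketch only: a generic depth + color fusion, not Apple's algorithm.
# All names and parameters below are hypothetical.

def colored_point_cloud(depth, image, fx, fy, cx, cy):
    """Back-project an aligned depth map and color image into colored 3D points.

    depth: 2D list of distances along the optical axis (0 or less = no reading)
    image: 2D list of color tuples, same shape as depth
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels
    Returns a list of (x, y, z, color) tuples in the depth map's units.
    """
    points = []
    for v, (depth_row, color_row) in enumerate(zip(depth, image)):
        for u, (z, color) in enumerate(zip(depth_row, color_row)):
            if z <= 0:
                # Skip pixels where the depth sensor returned no usable value.
                continue
            # Pinhole model: pixel offset from the principal point, scaled by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, color))
    return points
```

A real system would also need to calibrate and register the infrared and color sensors against each other, which is presumably part of what the alignment step in manufacturing is meant to make possible.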

Kuo points out that structured light transmitter and receiver setups have distance constraints. With an estimated 50 to 100 centimeter hard cap, Apple needs to include a proximity sensor capable of performing time of flight calculations. The analyst believes data from this specialized sensor will be employed to trigger user experience alerts. For example, a user might be informed that they are holding an iPhone too far from or too close to their face for optimal 3D sensing.
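As a rough illustration of the time of flight idea, the sketch below converts the round-trip travel time of a light pulse into a distance and maps that distance onto a simple "too close" or "too far" hint. The function names and the 20 centimeter lower bound are hypothetical. The 100 centimeter upper bound is simply the top of Kuo's estimated range, and none of this reflects how Apple actually implements the feature.

```python
# Illustrative sketch only: names and thresholds are assumptions, not Apple's implementation.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_cm(round_trip_seconds: float) -> float:
    """Distance to the target, in centimeters, from a pulse's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so halve the total path.
    meters = SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0
    return meters * 100.0


def sensing_hint(distance_cm: float, min_cm: float = 20.0, max_cm: float = 100.0) -> str:
    """Classify a distance against a hypothetical usable range for 3D sensing."""
    if distance_cm < min_cm:
        return "too close"
    if distance_cm > max_cm:
        return "too far"
    return "ok"
```

For example, a round trip of 4 nanoseconds corresponds to roughly 60 centimeters, which falls inside the assumed range, so `sensing_hint(tof_distance_cm(4e-9))` returns `"ok"`.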

In order to ensure accurate operation, an active alignment process must be performed on all four modules before final assembly.

Interestingly, the analyst notes the ambient light sensor deployed in the upcoming iPhone will support True Tone display technology. Originally introduced in 2016, True Tone tech dynamically alters device display color temperatures based on information gathered by ambient light sensors. This functionality will also improve the performance of 3D sensing apparatus, Kuo says.

The analyst believes the most important applications of 3D sensing to be facial recognition, which could replace fingerprint recognition, and a better selfie user experience.

Kuo believes all OLED iPhone models -- white, black and gold -- will feature a black coating on their front cover glass to conceal the VCSEL array, proximity sensor and ambient light sensor from view. The prediction is in line with current Apple device designs that obscure components from view.

Comments

Maybe they were waiting on revealing the software API for this kind of thing after the HW is available so people don't know about this capacity months in advance.

9to5Mac also provides hints that computational photography may enable portrait or scene "relighting" thanks to this enhanced depth sensing.

It's going to be a VERY interesting iPhone 8 announcement.

Clean AF

The back cameras can do this, by using computational imaging from the two cameras (and perhaps a time of flight sensor, which might be included). The front camera can provide a color and texture overlay to the 3D point cloud data from the structured light sensor and the time of flight sensor. Given one of the cheap solar rotary tables that you can find on Amazon, you could create assets for games, AR, and accurate models for 3D printing, which is what I'm looking for.

It's been a published rumor for months that no, you would not have to hold it in front of your face, akin to the ARKit document scanning in iOS 11.

What else? Anything new about the double camera? Photo enhancements based on feature recognition. OK I get that.

Maybe an ML chip supporting both Face ID and photo enhancements? I hope so. But an ML chip is a core tech and Apple would put it in all models to push the platform forward as a whole. Restricting it to only the OLED model is very unlikely, similar to restricting the Motion chip to only one model.

Inductive charging is something, fortunately all models will have it. What else?

Sorry for scrutinizing the ultimate popular tech dream of the day, but just remember how an unimportant issue like MacBook keyboard key travel distance has created so much controversy, and it comes up again and again at every MacBook launch. People can't even tolerate a sub-millimeter change in their interaction with their MacBooks. Does anyone think that they will give up their bezels, clickable Home button and TouchID so easily?

Such an automatic authorization won't take long to be disputed in courts. Just remember how software license agreements are accepted. One must explicitly click on "I agree". There are legal reasons for that, and the history goes back to the '90s.

Because of that implicit authorization issue, I think Apple won't take such a legal risk with Face ID and I'm afraid it will ask for a password in those explicit authorization instances.

Any hint about that in iOS 11 Golden Master?

Now, if the rumours play out, then there will be no need to hold the phone up to your face. If this thing can identify you from 1 metre away, then it can certainly identify you while you're holding the phone near an NFC terminal.

I have a feeling that the recognition will not be automatic; that would mean your phone unlocking every time you walked past it. It would also mean that the cameras would need to be switched on all the time which would raise eyebrows around privacy and would also drain the battery. I think you will need to touch the screen, or that massive power button before the phone tries to identify you.

Another thing: people have expressed concern that someone could grab your phone, unlock it by pointing it at you, then run off. Or law enforcement officers could try to unlock your phone by pointing it at you. The first case is unlikely, the second is a real possibility. How will the phone know? Orientation, possibly? No idea.

I read something (and I've lost the URL for it), which may explain why they're abandoning TouchID. They may have realised that a separate keyboard with TouchID would be too expensive to sell. But what they can do is build FaceID into an iPhone, an iPad, a MacBook, an iMac … and maybe a high-end standalone monitor.

Abandoning TouchID is as unlikely as FaceID arriving in all products is likely. If the keyboard cost were a source of concern, Apple wouldn't have launched it in the first place. There is a reason the FaceTime camera is driven by the same T1 chip that also drives TouchID in the new MBPs. When launching the new MBPs with TouchID they knew in advance that they'd also implement FaceID in the future. I believe multi biometrics is the goal, and all products will include both TouchID and FaceID sooner or later. And despite the evil hopes of all those naysayers, the Touch Bar hopefully is not going anywhere.

(I'm mostly being facetious here ... no need to remind me that the Touch ID will still work after Face ID is launched.)