Enterprise certificates still being abused to spy on iPhone users

Apple's Enterprise Certificate program continues to be abused for unauthorized purposes. Researchers have discovered a disguised spyware app that can acquire a considerable amount of data from a user's iPhone, and it may have been created by a developer of government surveillance software.

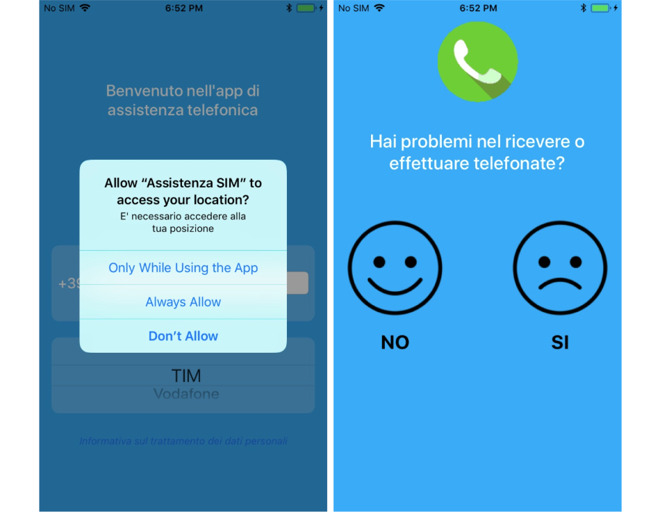

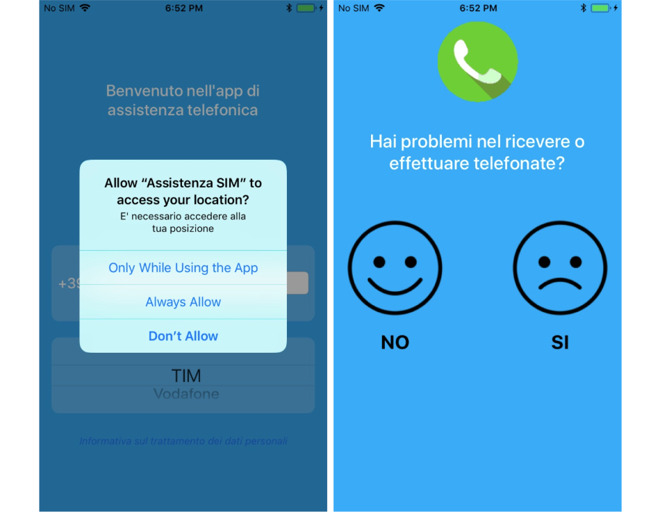

Screenshots of the disguised spyware (via TechCrunch)

Apple's program enables enterprise customers to create and distribute apps within an organization without being subject to the App Store's content guidelines. The system allows apps far greater access to data within iOS than normal consumer apps have, but the program's rules mean it cannot be used to distribute apps outside of an organization.

Although this is against the rules, it hasn't stopped unscrupulous organizations from taking advantage of the Enterprise Certificate system to distribute apps that don't have to abide by the consumer-protecting App Store guidelines.

Mobile security firm Lookout told TechCrunch it had discovered a spy app posing as a carrier assistance app for mobile networks in Italy and Turkmenistan. Once installed, the app is capable of quietly acquiring contacts stored on an iPhone, as well as audio recordings, photos and videos, and real-time location data, and it can even be used to listen in on conversations.

It is believed to have been developed by Connexxa, the creator of a similar Android app named Exodus that has been used by Italian authorities for surveillance purposes. The Android version had greater reach than the newly discovered iOS version because it used an exploit to gain root access.

Both the iOS and Android apps used the same backend, indicating the two are linked. The use of certificate pinning and other techniques to disguise its network traffic is thought to be a sign that the app was created by a professional group.

Once researchers informed Apple of the app's unauthorized activity, Apple revoked the app's certificate, preventing it from functioning. It is unknown how many iOS users were affected by the attack.

The misuse of Apple's Enterprise Certificates program has become an issue for the company since the start of 2019. Early stories focused on how Google and Facebook were providing end users with Enterprise Certificate-equipped apps that monitored their usage habits, a situation that led to Apple revoking the certificates and, in Facebook's case, causing internal issues.

In February, it was discovered that developers were also abusing the program to offer apps that would normally be banned from the App Store, including porn and gambling apps. Many were found to have acquired the certificates using another firm's details, allowing them to work around limits on the number of users allowed under a certificate.

It was also found that some developers were distributing hacked versions of popular apps that let users stream music without paying subscription fees, block advertisements, and bypass in-app purchases. This meant the developers of the legitimate versions of the apps were missing out on revenue, and Apple was not receiving its usual 15 or 30 percent cut of App Store purchases.

Comments

In the iOS version, users had to grant permission for each type of data the app wanted to gather, which might raise suspicions ("why does my carrier need permission to access my voice memos or contacts?"). The Android version installed a rootkit, meaning it had full access to everything.

In addition, the iOS version of the app was not distributed through the App Store, even in Italy and Turkmenistan (where the app pretended to be the official app of one of the local carriers). The iOS version was given out only to people willing to "side-load" a dev-only app, whereas the Android version was distributed in the Play Store and other outlets for anyone to download.

Finally, Apple's revoking of the certificate means the app can no longer run at all, even if users granted permissions. The Android version was kicked off the Play Store, but the installed versions are still happily ticking along, having used the rootkit to gain full access.

If Apple makes it much more expensive (or difficult) to get an enterprise certificate then they’ll be criticized for making it “too hard”.

Uh, Apple does revoke their certificate. Then those people create a new fake company and apply for another one. Rinse and repeat. Not much Apple can do besides their current practice of revoking a certificate once they discover it. Not without making things tougher on the legitimate developers.

You have to be a legal entity (basically a corporation) for starters. You also need a DUNS (Dun & Bradstreet) number, a website attached to your company (this would be the easiest to fake) and the person applying needs to have legal authority to act on behalf of the company.

When you think about it, this is all you need to open a bank account. And once that bank account is open you could launder money through it or do any number of other illegal things. Yet the bank still accepted your credentials to grant you that account in the first place.

It’s not like Apple just hands these out to anyone who asks.

Verifying the App is the tricky one. Who’s going to verify it? Apple? So now Apple has to start checking on Apps that aren’t even being delivered through The App Store? Who will pay for this service? Most likely the developer, as an added fee on top of the yearly $299 enterprise developer fee.

About the only thing I could think of is requiring smaller companies to have someone “sign off” on an agreement that if they abuse their certificate they can be liable for a hefty fine/penalty. Though I imagine people are using fake IDs to set up these shell companies so you’d never be able to enforce/collect any fine. Perhaps a bond of $10K (or more) to be paid up front that you forfeit if you abuse your certificate. Then we’ll see smaller companies complaining about the high cost of entry to start developing enterprise Apps.

I just don’t see an easy way to stop criminals from abusing certificates without unduly affecting honest users.