Civil rights groups worldwide ask Apple to drop CSAM plans

More than 80 civil rights groups have sent an open letter to Apple asking the company to abandon its child safety plans for Messages and Photos, fearing that governments will push to expand the technology's use.

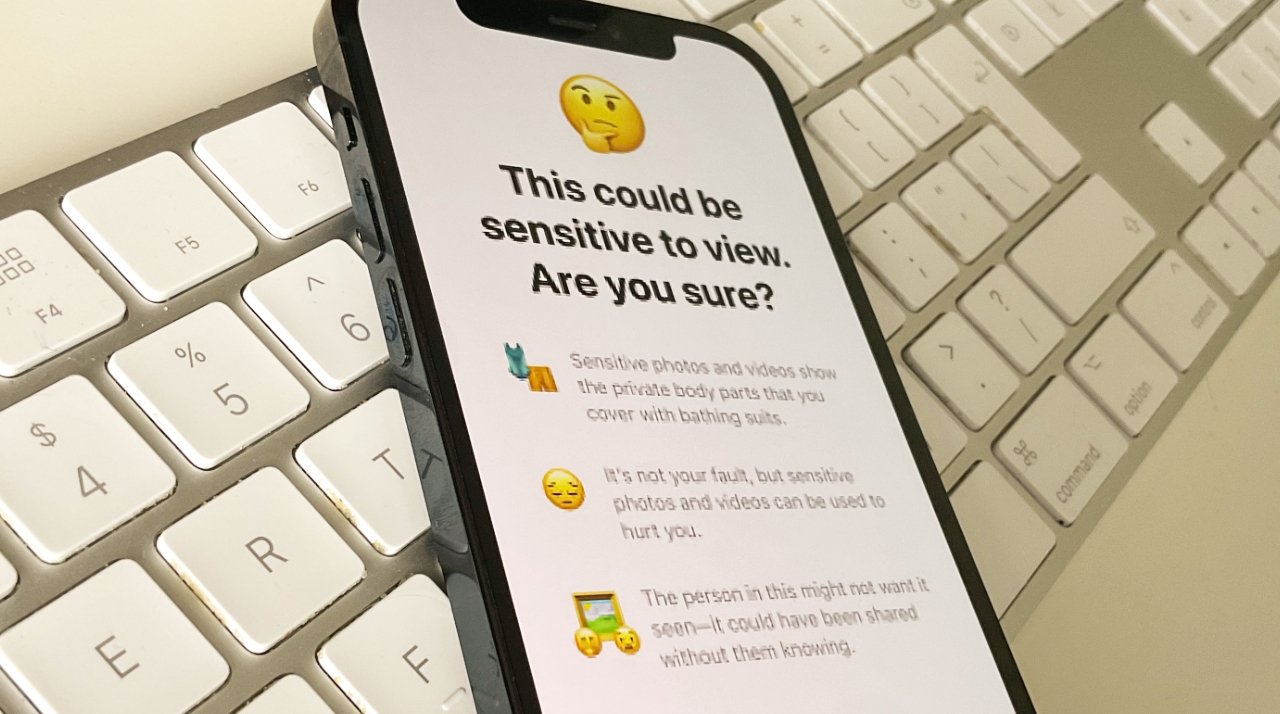

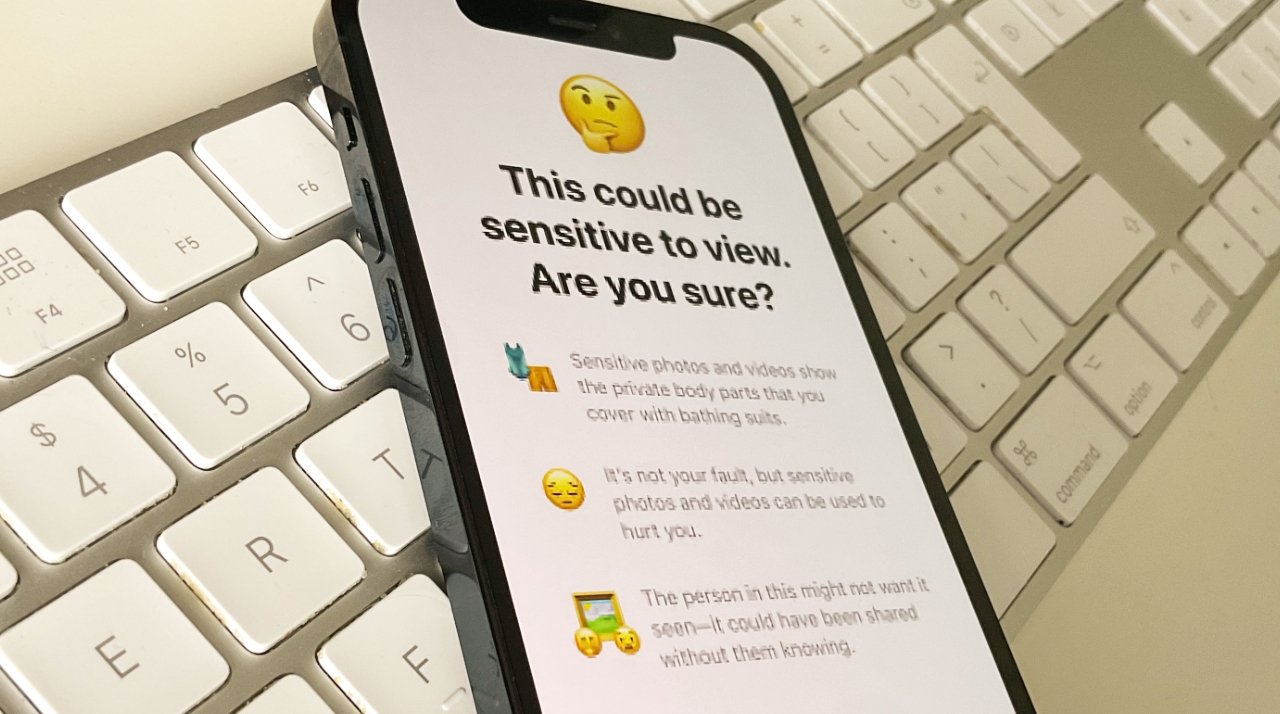

Apple's new child protection feature

Following the German government's description of Apple's Child Sexual Abuse Material (CSAM) plans as surveillance, 85 organizations around the world have joined the protest. The groups, including 28 based in the US, have written to CEO Tim Cook.

"Though these capabilities are intended to protect children and to reduce the spread of child sexual abuse material (CSAM)," says the full letter, "we are concerned that they will be used to censor protected speech, threaten the privacy and security of people around the world, and have disastrous consequences for many children."

"Once this capability is built into Apple products, the company and its competitors will face enormous pressure -- and potentially legal requirements -- from governments around the world to scan photos not just for CSAM, but also for other images a government finds objectionable," it continues.

"Those images may be of human rights abuses, political protests, images companies have tagged as 'terrorist' or violent extremist content, or even unflattering images of the very politicians who will pressure the company to scan for them," says the letter.

"And that pressure could extend to all images stored on the device, not just those uploaded to iCloud. Thus, Apple will have laid the foundation for censorship, surveillance and persecution on a global basis."

Signatories to the letter, including the Electronic Frontier Foundation, have also been promoting its criticisms separately.

Apple's latest feature is intended to protect children from abuse, but the company doesn't seem to have considered the ways in which it could enable abuse https://t.co/FjIJN8bKaL

-- EFF (@EFF)

The letter concludes by urging Apple to abandon the new features. It also urges Apple "to more regularly consult with civil society groups" in the future.

Apple has not responded to the letter. However, Apple's Craig Federighi has previously said that the company's child protection message was "jumbled" and "misunderstood."

Read on AppleInsider

Comments

Agreed - our ability to say, post, or store online what we want without consequence has gone too far. This has been caused by the tech companies, so it's really up to them to fix it.

...is about it falling into the wrong hands, or even, under government pressure, what else can be scanned on that device…

Again, not totalitarian governments, because they just do it. I am talking about every other government.

https://spectrum.ieee.org/hans-peter-luhn-and-the-birth-of-the-hashing-algorithm

The reality is that freedom must be fiercely protected. While there are many people who don’t understand this, there are many people who do. And that’s why we’re seeing the pushback against Apple’s surveillance technology. I’m glad there are still people in higher profile organisations who see it.

The idea that a temporary technological weakness (analog transmissions) leads to a permanent right to unfettered access to people's data is ridiculous.

Does the constitution make any exceptions about search and seizure? No, it doesn’t. Police can’t just randomly enter your house and say: “Oh, we’re just looking for child porn.”

Does the constitution allow torture or “truth serums”? No, it doesn’t. Modern computing devices, especially phones, are in essence brain prosthetics. Access to these is like tapping someone’s brain.

Our ability to say, post, or store online what we want without consequences doesn't go nearly far enough, as cancel culture, political correctness, executed atheists, incarcerated regime critics, and the cases of Snowden and Assange amply prove.

Apple is adding client-side file scanning on customers' devices, both with hashes (photos) and with ML matching (messages), and everyone's point is that it's almost certain to be abused. Once that 'protection' is added, governments will start pushing for matching content on the device regardless of whether it's being uploaded to iCloud, and push to expand the definition to include political content, copyrighted content, and anything else they want to control. You have humble Apple defending this idea, and rights groups, governments, technical experts, and regular people questioning it. It shifts the definition of "privacy" to include searching your content on your own device when there's no warrant and no reasonable suspicion.

That cloud-side content has been scanned for a while is well known, even if it seems like a questionable practice as well. If you put something in a bank vault, it typically at least takes a search warrant to get that opened. If a bank decided it was going to install cameras in your home - but would only use the cameras to inspect items you were going to bring to their vault, pinky promise that it wouldn't be abused! - most people would see that as overreach.

Others would explain that cameras aren't a new technology and that it's too late to worry about the implications of how they're used. 🤦‍♂️

Apple can get out of this: yank out the feature, and implement E2E encryption for all data, including iCloud backups.

Apple can say that they were emotionally swayed in their attempt to solve this problem, but became convinced that the feature could, and likely would, be abused, and that the resulting discussion convinced them to double down on user privacy.

They can. The question is: will they?

The only time hash scanning could possibly work is if someone shares an album with friends via the cloud, at which point it must be unencrypted or encrypted with a key that Apple has access to. But such scanning would then be stupid, because only the dumbest of the dumb would share questionable content directly in an open photo album rather than in an encrypted zip file via file sharing.

In other words, it's rather clear: unless there's on-device scanning, people's data can be kept safe by E2E encryption. If Apple won't do that, GrapheneOS will; so if Apple wants to remain competitive, it should do it, too.

On-device scanning opens the door to all sorts of devils; it's utterly irrelevant how old the basics of hash scanning are. Anyone who pretends otherwise either demonstrates a lack of understanding of security, or is a government/law enforcement troll who wants to spread FUD about the whole thing in an effort to save, and later expand on, Apple's efforts.

I took your response as sarcasm. If it wasn't, I apologize.

You clearly don’t understand the First Amendment.

"Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances."

Congress shall make no law... I have the right to censor speech in my own home. I get to decide who says what in my business. If you work for me and use my internet access, computer, phone, or email, I decide what's appropriate. I get to censor people on the social media platform I own. Only the government is prohibited by the First Amendment from doing so. And by the way, the government does restrict speech in certain situations, such as incitement to riot or violence.

I agree with the objections, especially since Apple has demonstrated that it lets its commercial interests take priority over its privacy mantra (forgoing end-to-end encryption after FBI objections; making concessions after objections from the Saudi Arabian, Chinese, and Russian governments).

At the very least, I hope Apple opens up the operating system to allow equal integration opportunities (e.g., storing photos with third-party cloud storage solutions that actually provide 100% privacy and security).

Bunch of hypocrites.