Apple backs down on CSAM features, postpones launch

Following widespread criticism, Apple has announced that it will not be launching its child protection features as planned, and will instead "take additional time" to consult.

Apple's new child protection feature

In an email sent to AppleInsider and other publications, Apple says that it has decided to postpone the features because of the reaction to its original announcement.

"Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material," said Apple in a statement.

"Based on feedback from customers, advocacy groups, researchers and others," it continues, "we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features."

Apple has given no further details of how it will "collect input," or of whom it will be working with.

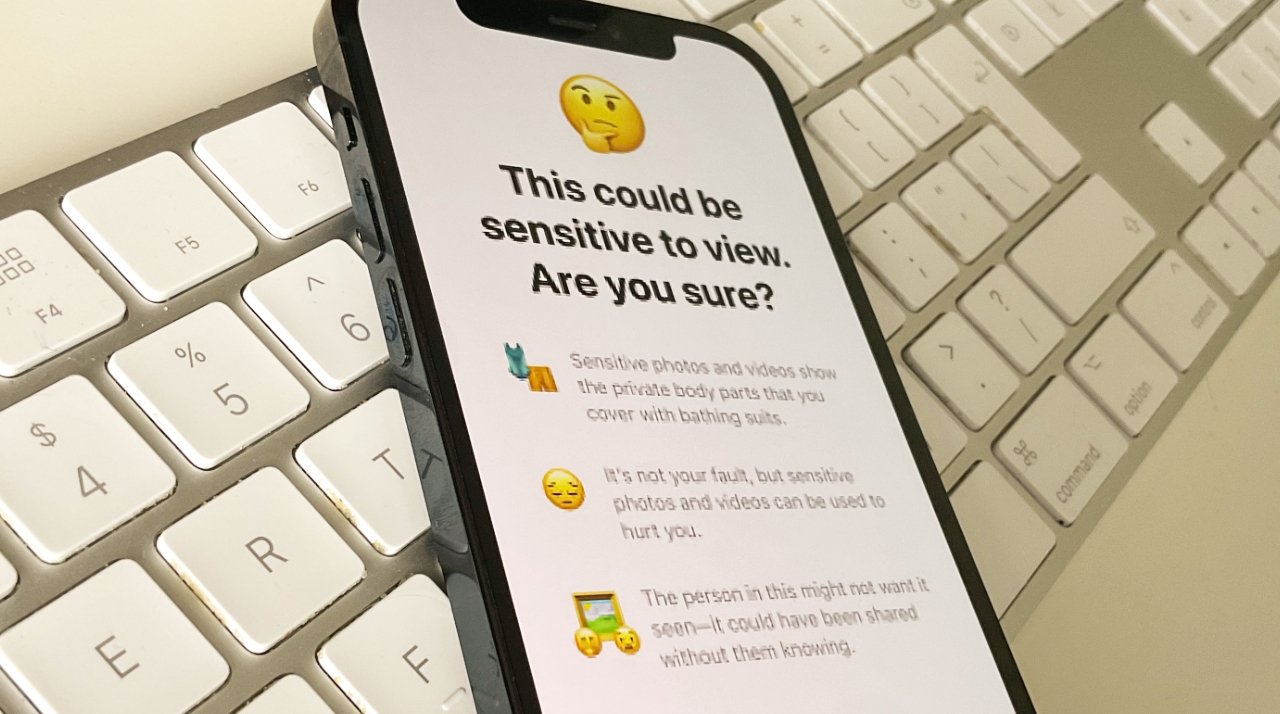

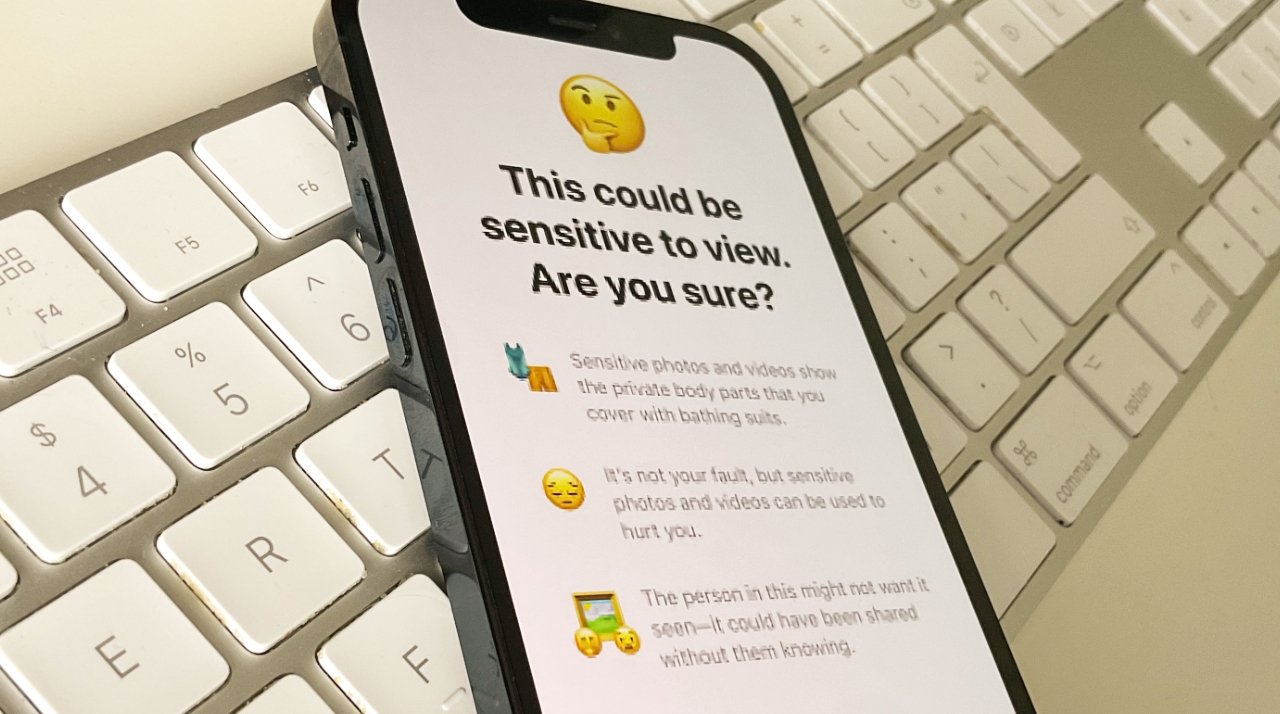

Apple originally announced its CSAM features on August 5, 2021, saying they would debut later in 2021. The features include detecting child sexual abuse images stored in iCloud Photos, and, separately, blocking potentially harmful Messages sent to children.

Industry experts and high-profile figures such as Edward Snowden responded with an open letter asking Apple not to implement these features. Their objection was that the features could be used for surveillance.

AppleInsider published an explanatory article covering both what Apple actually planned and why it was being seen as a problem. Apple then published a clarification in the form of a document detailing its intentions and broadly describing how the features would work.

However, complaints, both informed and not, continued. Apple's Craig Federighi eventually said publicly that Apple had misjudged how it announced the new features.

"We wish that this had come out a little more clearly for everyone because we feel very positive and strongly about what we're doing, and we can see that it's been widely misunderstood," said Federighi.

"I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion," he continued. "It's really clear a lot of messages got jumbled up pretty badly. I do believe the soundbite that got out early was, 'oh my god, Apple is scanning my phone for images.' This is not what is happening."

Read on AppleInsider

Comments

As soon as the uproar has died down and Apple thinks it is safe, they will be back. They will frame what they put forth next time as "look what we have done to protect you," when in reality it will likely just be cosmetic.

This terrible but well-intended idea should be buried under a mile of cement.

This is far from dead, and you laud them while forgetting the implications and actions that led to what they were going to do. It is like the convicted thief who repents after getting caught. He/she/they are still a thief until they establish otherwise by building a history of doing the right thing; one mere step back does not show that they have changed. It takes just one lie to make someone dishonest, but it takes a massive number of honest acts to reestablish yourself as trustworthy after you have violated the compact of trust.

Yes, I give them credit for pulling back, but does praising their "humility" and framing this as doing the right thing rather than the wrong thing really speak so highly of them? Come on, George. They screwed up big and only pulled back temporarily because the outrage and anger far exceeded anything they expected. This does not equate to "realizing that they are not perfect," or humility, or any of the intrinsic characteristics you suggest.

With that one simple act, Apple destroyed several decades of the good will and trust they had built on the subject of privacy. It is not mended by them pulling back temporarily.

I have no tolerance or use for people who exploit children - or adults - and would have no problem with the death penalty for such conduct.

But that does not justify such a practice as this. This is Apple’s attempt to cave to big brother government that wants your devices under constant surveillance. The potential for this technology to make false accusations and the resulting destruction of reputation is immense. Once your reputation is damaged by such an accusation it is simply beyond restoration - you will always be suspect.

The privacy of our digital devices has been litigated to death, and the current Chief Justice of the Supreme Court wrote the opinion telling the Donut Patrol to get a warrant. He stated that our digital devices have essentially become extensions of our homes with regard to privacy, and that protection should extend to the iCloud service. The cops want to sit back and surveil you 24/7/365, and that is not what our laws say our rights are. The cops need to get back to good old-fashioned police work and stop trying to create Orwell's Telescreen with our phones.

This was bungled from day one, both in announcement, and in the design of what they wanted to do. The stated goal is fine, but it was handled astoundingly badly, especially for a company like Apple that normally does these things so well.

As I’ve thought all along, if they want to scan what’s on their servers, in iCloud, I’m fine with that. They are liable for any such material they are hosting. It was the initial work to be done on our devices that was the problem. That was also what posed the most risk to human rights activists and others.

Now I will update to iOS 15 (at least using the first version until it's clear how this evolves)...