EFF urges Apple to drop CSAM tool plans completely

The Electronic Frontier Foundation has responded favorably to Apple's announcement it would delay implementing its CSAM tools, but still wants Apple to go further and give up on the plans entirely.

On September 3, Apple announced it was going to "take additional time" to consult about its plans to launch child protection features, with a view to improving the tools and implementing them within a few months. In response, the EFF says Apple should go further.

In its Friday response, the digital rights group said it was "pleased Apple is now listening to the concerns" of its users "about the dangers posed by its phone scanning tools." However, Apple "must go further than just listening, and drop its plans to put a backdoor into its encryption entirely."

The statement by the group recapped the criticism Apple had received from over 90 organizations around the world, asking the iPhone maker not to implement the features. The claims are that they could "lead to the censoring of protected speech, threaten the privacy and security of people around the world, and have disastrous consequences for many children."

A petition hosted by the EFF against Apple's initiative reached 25,000 signatures on September 1, and was nearing 27,000 at the time of publication. According to the EFF, the figure grows to "well over 50,000 signatures" when taking into account similar petitions from groups including Fight for the Future and OpenMedia.

"The enormous coalition that has spoken out will continue to demand that user phones - both their messages and their photos - be protected, and that the company maintain its promise to provide real privacy to its users," the EFF blog post ends.

It remains to be seen what changes Apple will make to its CSAM features, but given that it is opening itself up to consultation, it won't be short of suggestions and instructions from observers and critics.

Read on AppleInsider

Comments

I don't think you have any idea what you are talking about.

https://www.eff.org/

"no-compromise privacy zealots" is how a lot of people would describe Apple. Remember the San Bernardino case, where they refused to help decrypt the shooter's iPhone? What about when the FBI asked for a backdoor to help fight crime? In both cases, Apple clearly said "NO, we will not help you hack our phones because it would compromise our users' privacy." Beginning in macOS 10.8, Apple added privacy checks that required applications to ask permission to read your personal data. In Mojave (10.14), they ramped it up with the requirement to ask permission to use the camera and microphone, and in Catalina (10.15) they made apps ask permission to use screen recording or scan most files on your disk. They have made an entire series of commercials about privacy.

The one place, sadly, where Apple has "compromised" is in their dealings with China, where they contracted iCloud to GCBD, a company that is capable of being influenced by the Chinese Communist Party. Without this arrangement, the CCP would have embargoed ALL iPhone sales in mainland China. Period. This set a terrible precedent, and the EFF and others continue to give them flak for it. The CSAM image scanning would be a bridge too far, because scanning and reporting rules could be enforced by foreign governments looking to silence dissidents for sharing memes or pictures that match a "known database" of images.

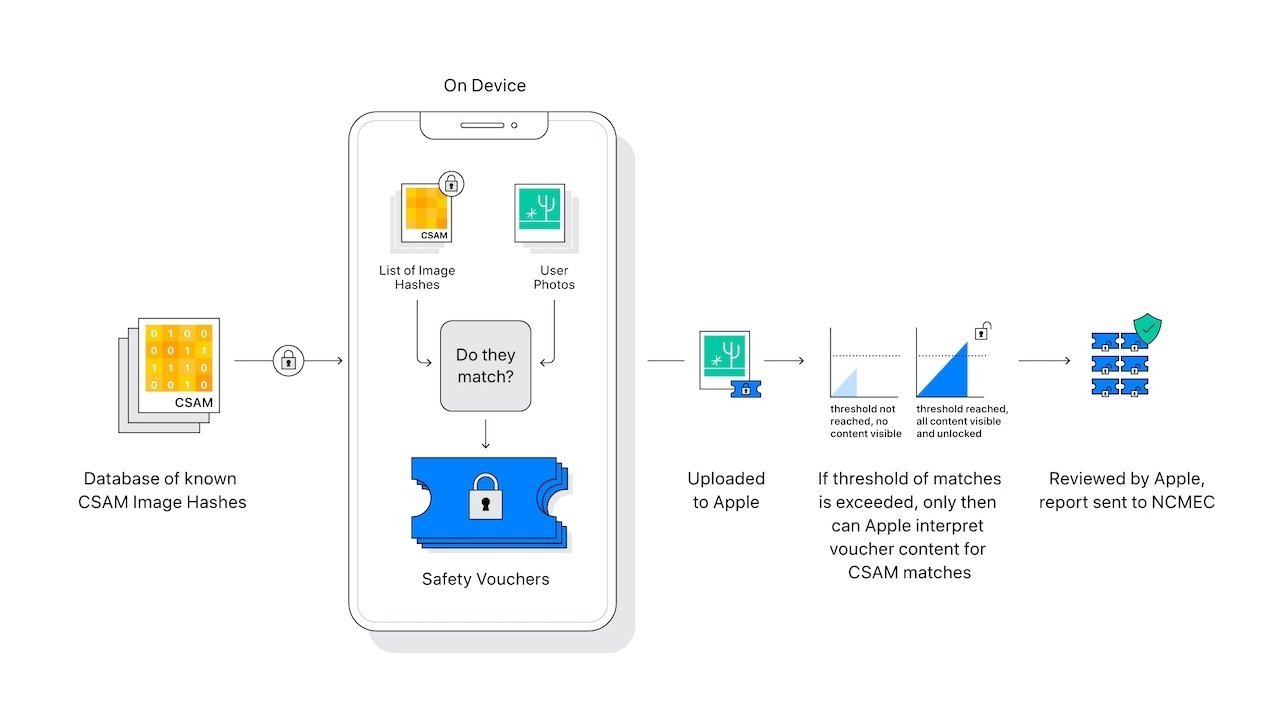

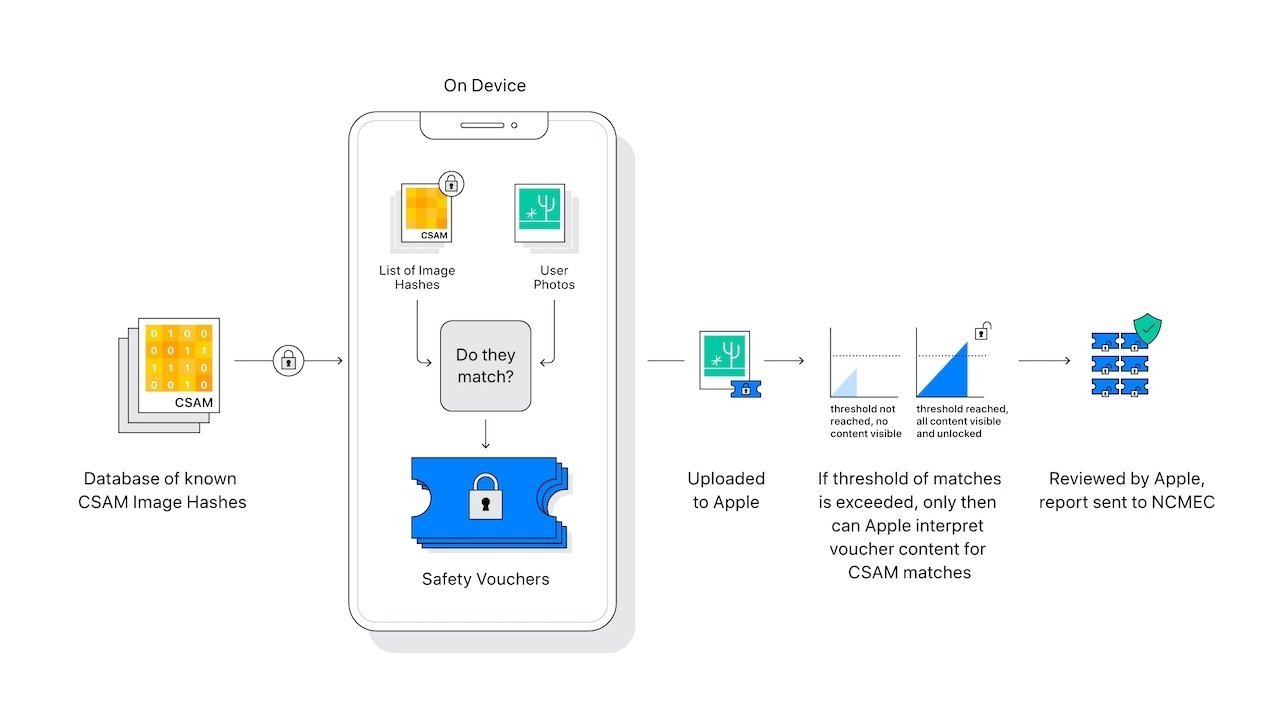

Scenario B. User agrees to iCloud terms of service which grant Apple the right to scan files from apps the user chooses to back up to iCloud. All the files coming from those user-designated apps are scanned on the device.

In both scenarios, the user has complete control over which apps/files are backed up and scanned. The files that get scanned are exactly the same. There is absolutely no difference at all in terms of user control or Apple's ability to scan files.

”I want to shackle you on hands and feet, blindfold and gag you!”

”I'd like to retain my freedom!”

”OK, let’s compromise. I’ll only gag you, and put you in handcuffs. I’ll also turn off the lights and cover the windows. But you’re going to be free to walk about the room.”

”No, I'd like to retain my freedom!”

”Well, that’s a surefire way to get yourself sidelined. If you can’t be reasonable and have a dialogue, then you’ll be treated as unreasonable and unworthy of dialogue.”

🤦🏻♂️