Wozniak, Musk & more call for 'out-of-control' AI development pause

Steve Wozniak, Elon Musk, and over 1,000 others have signed an open letter asking for an immediate six-month halt on AI technology more powerful than GPT-4.

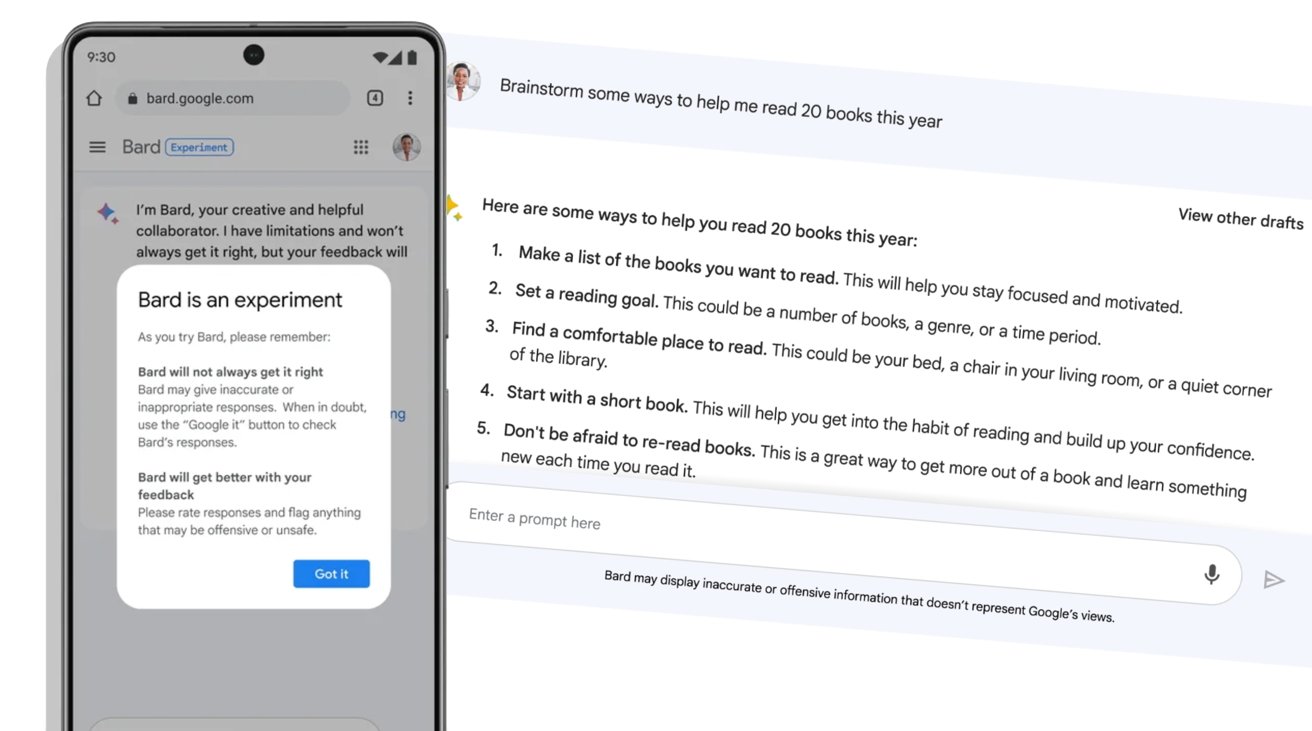

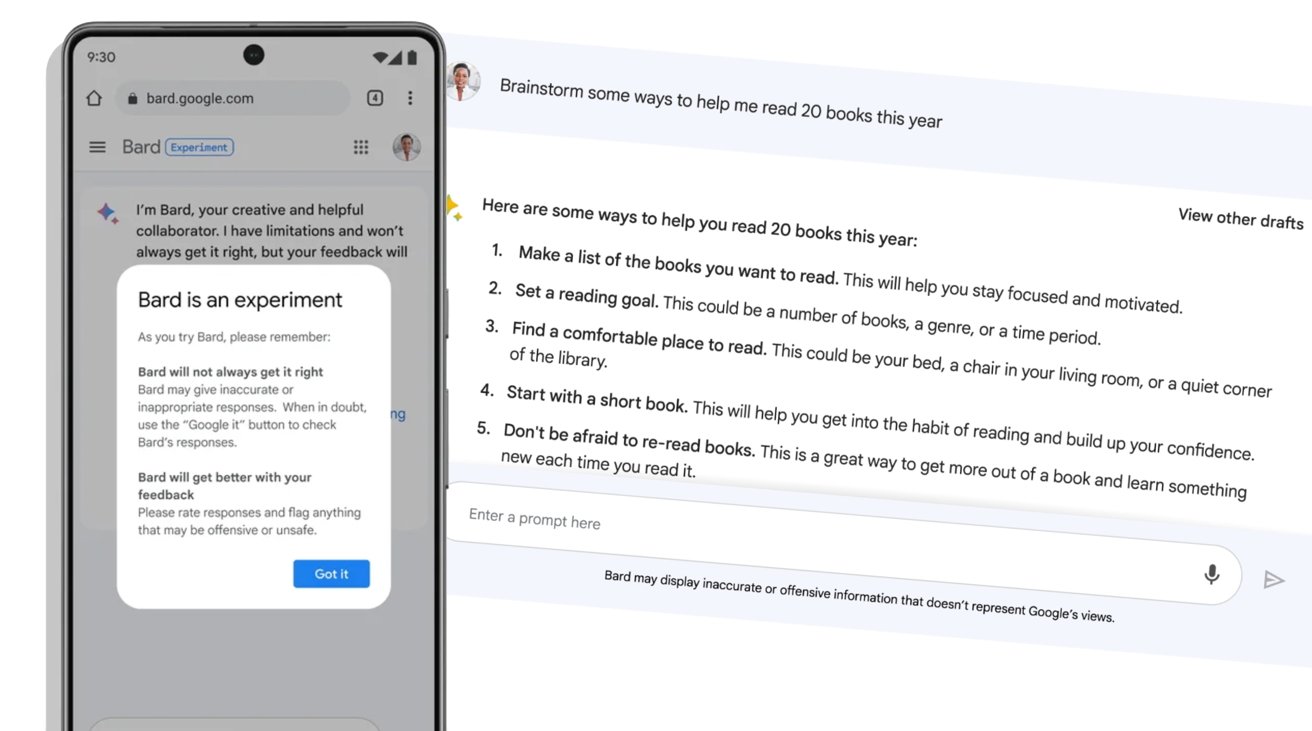

Google Bard is rolling out to users.

This has been the year that artificial intelligence agents such as ChatGPT and Google Bard became mainstream. Although AI companies all describe their products as experiments, or effectively beta releases, their language-processing features are already being integrated into Microsoft 365 and into search engines, including Bing.

Now the Future of Life Institute is asking for a "public and verifiable" pause that includes "all key actors" in the field. "If such a pause cannot be enacted quickly," it adds, "governments should step in and institute a moratorium."

The Future of Life Institute aims to "steer transformative technology towards benefitting life and away from extreme large-scale risks."

The 600-word letter, aimed at all AI developers, argues that a pause is needed because "recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one -- not even their creators -- can understand, predict, or reliably control."

"AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts," it continues. "These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt."

"This does not mean a pause on AI development in general," says the open letter, "merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities."

At the time of writing, the institute's open letter has 1,123 signatories. High-profile signatories include:

- Elon Musk, CEO of SpaceX, Tesla & Twitter

- Steve Wozniak, co-founder of Apple

- Jaan Tallinn, co-founder of Skype

- Evan Sharp, co-founder of Pinterest

Read on AppleInsider

Comments

https://www.cnbc.com/2023/02/08/chinese-tech-giant-alibaba-working-on-a-chatgpt-rival-shares-jump.html

Wasn't Elon Musk one of the founders of OpenAI, who then sold his stock (because he didn't think it would make any profit?), and now suddenly he's calling for them to pause development? Isn't he guilty of starting the OpenAI company, and isn't he planning to put brain implants in people as we speak?

Developers seem to think this can be controlled. Maybe it can but maybe it can't.

Only until the business users discover just how much of their company-sensitive data is being sent to the ChatGPT controllers.

The year of Linux on the desktop may well be here at last if that happens. Oh... sorry, I should have said...

The year of MacOS on the desktop (sic)

If there were a computer program smarter than a human, great. Ask it to solve difficult problems. Let it teach people's kids.

If it spits out misinformation, turn it off.

If it is attached to a time-traveling murder robot, turn it off.

The research papers linked from the letter discuss the same kinds of scenarios, such as misinformation spreading to cause political divisions that eventually lead to nuclear war:

https://arxiv.org/pdf/2209.10604.pdf

https://arxiv.org/pdf/2206.13353.pdf

https://arxiv.org/pdf/2303.12712.pdf

They also cover things like becoming dependent on AI. This would be similar to what already happens because we rely on computers for everything: when there's a power cut, everything grinds to a halt because nobody knows how to do anything without power or a network connection any more. If AI is eventually tasked with a lot of the complex jobs and humans aren't trained to do them any more, there would be some bad consequences.

https://nypost.com/2019/10/20/pentagon-scraps-obsolete-floppy-disk-system-controlling-us-nuclear-arsenal/

Not sure what they replaced it with, but hopefully it is just as unbreachable as the Gerald Ford-era tech was.

Rather than worrying about AI becoming sentient or taking over or destroying the world, many of these people are probably worried about humans relying on AI tech instead of their own brains. The world already has a drug problem, and AI could be seen as a new kind of drug that people will get hooked on. That doesn't mean the tech is bad or that it needs a halt. It means human beings need a kind of help that no AI can provide.