Apple's security message: Keep data safe by keeping it out of the cloud

Apple consistently beat the drum about data security at its Wonderlust event and it's a message that's worth emphasizing -- keeping your data safe sometimes means keeping it out of the cloud altogether.

Apple certainly has a better track record than some companies when it comes to corporate data security. And, the company has emphasized many times over the years that it doesn't try to monetize your info in the same was as Google and other companies.

The risk is always there that someone, somewhere may get access to your personal information in the cloud.

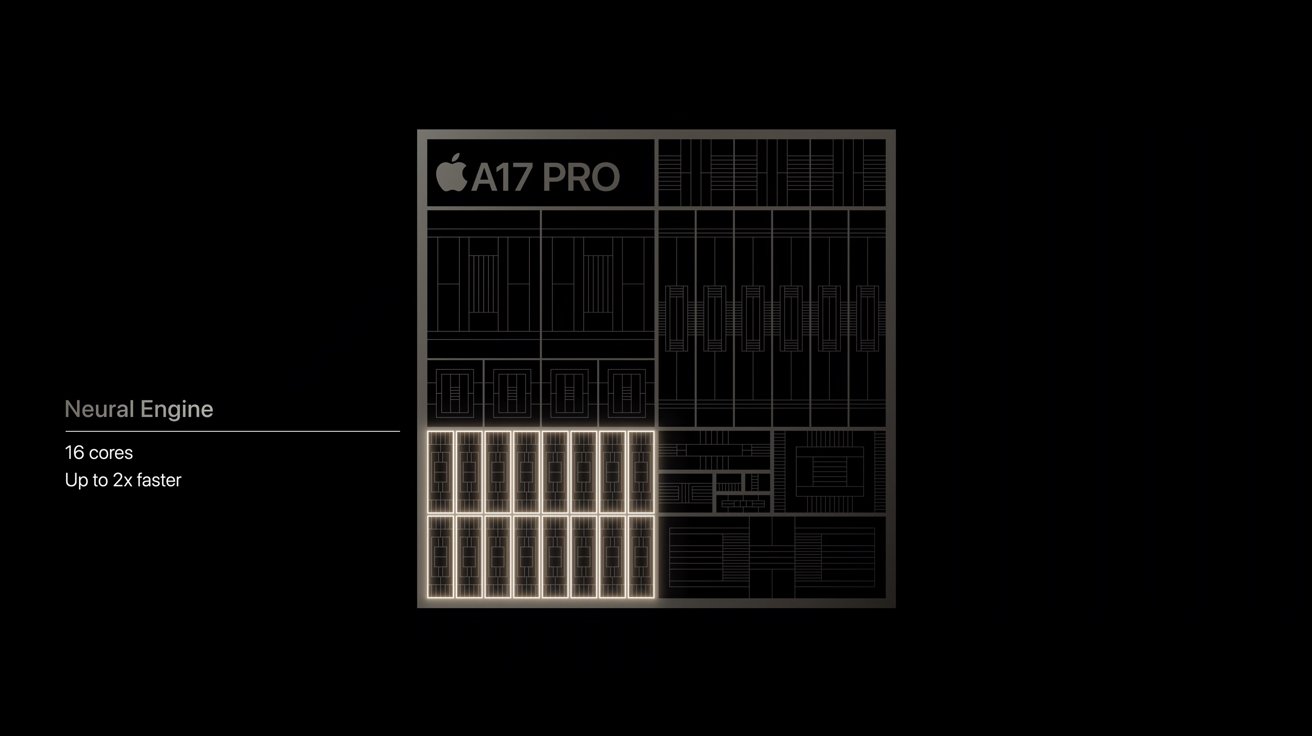

Doubling-, tripling-, and perhaps even quadrupling-down on the concept of data security this week, Apple emphasized features in both the A17 Pro System on Chip (SoC) powering the iPhone 15 Pro and the S9 System in Package (SiP) powering the Apple Watch Series 9 that enhance personal data security by helping to keep more of your most intimate health information local, and out of the cloud altogether.

The key is the increased capability of the Neural Engine components of both these systems - the part of both systems that manages Machine Learning (ML) functions.

Keeping data on device

During the event, Deidre Caldbeck, Apple's Director, Apple Watch Product Marketing, gave an explanation of how the S9 chip works.

"Thanks to the powerful new Neural Engine, Siri requests are now processed on-device, making them faster and more secure," said Caldbeck. "This means that the most common requests, like 'Siri, start an outdoor walk workout,' no longer have to go to the cloud, so they can't be slowed down by a poor Wi-Fi or cellular connection."

What's more, Caldbeck explained that Siri health queries are processed on-device by the S9, eliminating roundtrip cloud data movement to record and view personal health data like sleep schedule, medication info, workout routines or menstrual period data.

Siri Health data is processed on Apple Watch S9 instead of the cloud

Later on during the event, Sribalan Santhanam, Apple's VP, Silicon Engineering Group, echoed some of Caldbeck's comments to explain how the iPhone 15 Pro's A17 Pro chip worked.

"The Neural Engine uses machine learning on the device without sending your personal data to the cloud," said Santhanam. He couched his explanation with examples of convenience more than security, as the capability enables typing autocorrect functionality to work more accurately, or being able to mask subjects in photos from their background, or even to create a Personal Voice.

Regardless, the emphasis is the same. Both the new S9 and A17 Pro processors do more on-chip, to keep your data on the device instead of traveling to the cloud.

Inside Apple's Neural Engine

Apple introduced the Neural Engine with the A11 chip when it rolled out the iPhone 8 and iPhone X, and it's been a part of Apple Silicon ever since.

The A17 Pro features twice the Neural Engine performance as the M2

Apple assiduously avoids terms like "artificial intelligence" in its press releases and the scripts for its events, and it's easy to understand why -- the term is politically loaded and intentionally vague. Not that "Neural Engine" is any less vague, as it implies if not AI, something parallel.

Ultimately, whatever you call the technology, it's all about making machine learning more efficient. Apple's Neural Engine is a cluster of compute cores known generally as Neural Processing Units (NPUs).

In the same way that Graphics Processing Units (GPUs) are specialized silicon designed to accelerate the display and processing of graphics information, NPUs speed the processing of Machine Learning (ML) algorithms and associated data. They're both distinct from the more generalized designs of CPUs, aimed at handling massive amounts of high parallelized data processing quickly and efficiently.

The iPhone 15 Pro's A17 Pro chip sports a Neural Engine with 16 cores, the same number of cores as the Neural Engine found in the M2 and M2 Max chips powering newer Mac models.

While the M2 neural engine can process 15.8 billion operations per second, Santhanam confirmed that the A17 Pro's is much faster.

"The Neural Engine is now up to twice as fast for machine learning models, allowing it to process up to 35 trillion operations per second," said Santhanam, describing the A17 Pro.

Edge computing, straight to the device

The global trend in cloud computing continues to emphasize development of edge networks which move data and compute capabilities out of monolithic data centers clustered in specific geographic areas, and closer to where the user needs the functionality.

By doing so, cloud computing services can deliver much faster performance and considerably lower latency (by reducing the round-trip time it takes for packets of data to travel). In fact, this functionality is absolutely vital to getting so-called "metaverse" -- a word that Apple will also never say out loud -- to work like its makers envision.

In some ways, Apple's development of ML capabilities in its own silicon reflect this emphasis on moving data closer to the user. As these Apple executives noted during the event, leaning on ML capabilities on the device provides both greater security and faster performance.

In that respect, the data privacy aspect of this almost seems like an afterthought. But it's really a central part of the message implied by Apple -- your data is safer on our devices than others.

Ultimately this is a big win for any consumer who's worried about who might see their personal information along the way.

Read on AppleInsider

Comments

I have noticed that some of the new devices have thread capability, so does this mean future HomePods and Apple TVs will be able to function better with Homekit by using on-chip Siri on those devices?

The fact that the adjective "cloud" being used doesn't change anything. Hell, you could balance your checkbook on a standalone computer and still risk losing all of your records if the drive crashes, corrupts the data, and you have no backup.

Nothing new here from Apple, just reiterating what needs to be repeated occasionally to a new generation (or oldsters who have somehow forgotten how things were 10-20 years ago).

Writing your passwords down on a sheet of paper and storing it in a fire safe is less risky but more inconvenient. This is easier to do if you're single. It's much more challenging to do in a family where people are sharing accounts, passwords, and services.

Again, this is all about risk assessment and each person understanding the risks and accepting their decision to use/not use the network. It's a tradeoff between security and convenience. Time and cost are two other factors. There are probably more.

Remember that even a password written on a piece of paper can be unearthed on the server (your bank, insurance company, brokerage account, school, webmail provider, airline, Netflix, whatever).

And even if you don't use the Internet at all, your private data (name, SSN, DOB, sex, etc.) are still available on corporate machines like your bank, the government, healthcare providers, etc.

Redundancy never went out of fashion. Wear it with pride. Also consider acquiring your redundancies from more than one supplier to avoid having all of your redundancies ripping in the same place.

Contacts on your Windows Phone that you wanted to call, simply hold the button and say, "call Mike Jones on mobile"

Voice command would instantly respond with "Call Mike Jones on mobile?"

I respond, "Yes"

and the call would go through.

The whole idea of having my voice be telegraphed to some computer for processing 20 (it was November 2003 when Voice Command was released) years later is a bit ludicrous, especially when the Windows Phones at the time were still 5 years behind the first iPhone.

Voice recognition isn't new, by any stroke of the imagination.

The phone I had was pretty tricked out. I had the HTC TyTN, with 64MB of RAM and I did put Voice Command on my 128MB SD card.

The point of sending Siri commands to "some computer for processing" is to allow for far more sophisticated computational work to be applied in order to allow for different accents, interpretation of varied ways of stating commands, and for a significantly wider variety of tasks to be carried out. In 2003, sending voice commands over the cellular/wireless internet for processing wasn't even possible. BlackBerry was still cranking email through its proprietary centralized email system in order to minimize the use of a very, very limited capacity for data transmission over cellular networks.

By 2011, the calculus had flipped. With increased bandwidth, transmitting voice commands over the cellular internet for offsite processing allowed for significantly more robust voice recognition and linguistic interpretation than could have been handled by an A5 chip in an iPhone 4S.

Now, with significantly greater processing power in portable devices, things can flip again, and Siri commands can effectively be processed on-device without a loss of computational capacity. This, in turn, allows for the higher level of data security inherent in not transmitting the voice command data at all.