Hands on: Siri starts to get better thanks to Apple Intelligence

Apple has released the first beta of Apple Intelligence, giving us our first look at it and at a wholly refreshed Siri. Even though it's early, let's take a look at how Siri is getting better, and which features are still to come.

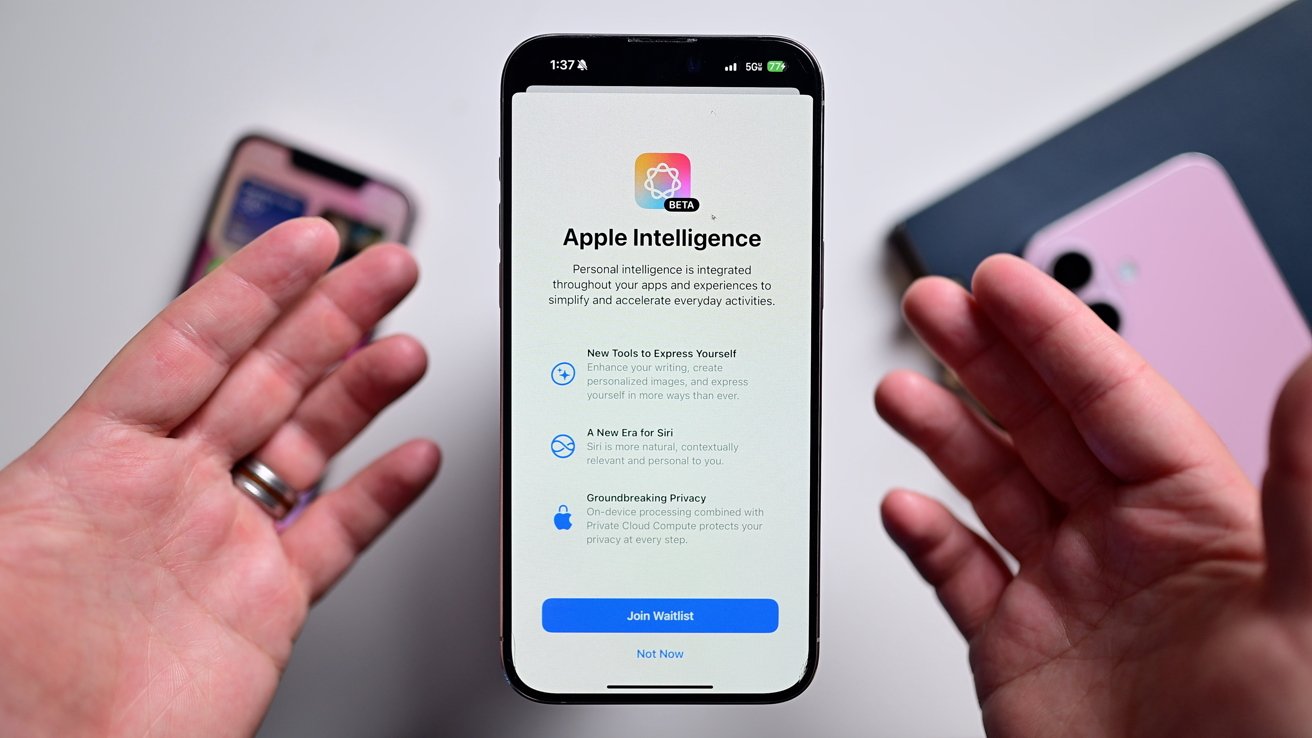

Apple Intelligence is now here

Based on the people we've talked to, the upgrade to Apple's long-running voice assistant Siri is the most anticipated change set to arrive with Apple Intelligence. It's long past time for Apple to upgrade Siri and make it a viable alternative to other virtual assistants.

Put charitably, Siri is pretty bad without the Apple Intelligence upgrade coming in some version of iOS 18.

We've updated our iPhone 15 Pro Max -- one of two supported iPhone models -- to the iOS 18.1 beta, the delivery vehicle for Apple's big AI push. The final release is likely to arrive by October.

It's important to note that while Siri has a new look, as of August 2, 2024, many of its new features aren't here yet. That makes it really hard to judge, this early, how good the new AI-powered Siri will be at full power.

Regardless, let's take a look at how Siri has gotten better and what we're still waiting for.

Apple Intelligence: Siri's new look

Siri has a whole new look. Gone is the small glassy orb and in its stead is a colorful animation emanating from the edges of your phone.

Siri is looking good with Apple Intelligence

The new animation appears on iPhone, on iPad, and even in CarPlay. The light reacts to your voice, moving like an on-screen visualizer.

If you invoke Siri using the side button, the animation sprawls out from the top-right, near the button, while if you use your voice or the keyboard, it comes in from the edges.

Speaking of the keyboard, this too is new. With a double-tap at the bottom of your phone screen, a keyboard appears so you can type your questions to Siri.

Double-tap the bottom of your phone to type to Siri

It's easy to move between typed and verbal queries. Just tap the microphone button on the bottom-right of the keyboard to move back to spoken if that's easier in the moment.

Apple Intelligence: A better understanding

Aside from a fresh coat of paint, Siri has improved awareness. It can retain context between queries, even when you stumble over your words.

"Can you check the weather tomorrow, actually, no, check it for Tuesday in Cupertino" returns the correct answer, while asking the legacy version of Siri returns a range of weather info.

Similarly, if you say "set a timer for three hours, wait I meant set an alarm for three," the old Siri somehow set a timer for about 5 hours while the new Siri correctly set an alarm for 3PM.

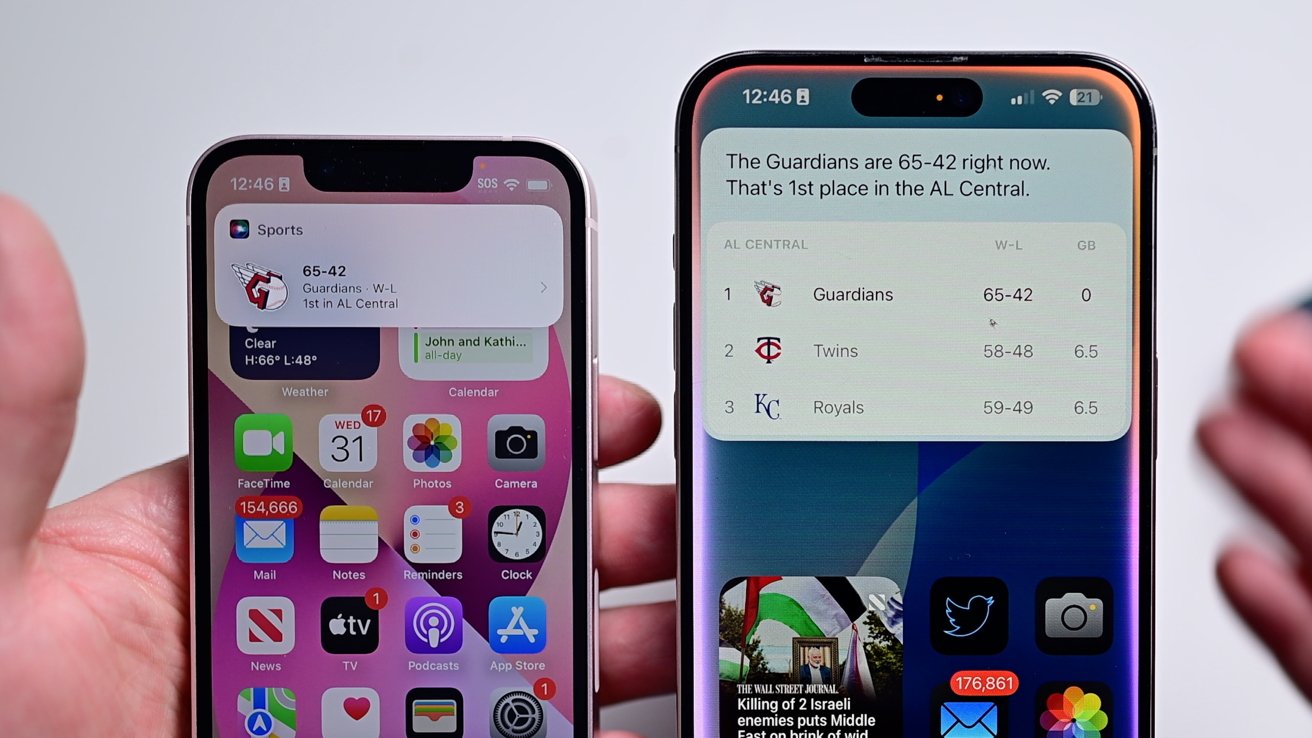

Beyond handling stutters, context is vastly improved. You can ask follow-up questions that require an understanding of the prior question and its answer.

For example, you can't ask "old" Siri "Check when the next Cleveland Guardians game is," and then follow it up with "That looks good, add that to my calendar."

The new Siri interface looks better for many searches

The soon-to-be outdated Siri gives you the Guardians' schedule and then creates a blank calendar appointment without any information attached. The new Siri knew exactly what to do.

We can ask follow-up questions with the updated Siri, like adding a game to our schedule after asking when the game was

It works for anything that has follow-up context. In the home, you can ask Siri to turn off the bedroom lights and follow it up by saying "and the living room ones too."

Compound questions still elude Apple's voice assistant, though. If you try to combine two requests into one, it can only handle one of them.

We wish this worked better with the upgraded Siri

"Text Faith and ask what she wants me to make for dinner, then set a reminder to start cooking at 5," gave us a single text message with the contents "what do you want me to make for dinner, then set a reminder to start cooking at 5."

Maybe this will get better as the beta progresses.

Apple Intelligence: Product knowledge

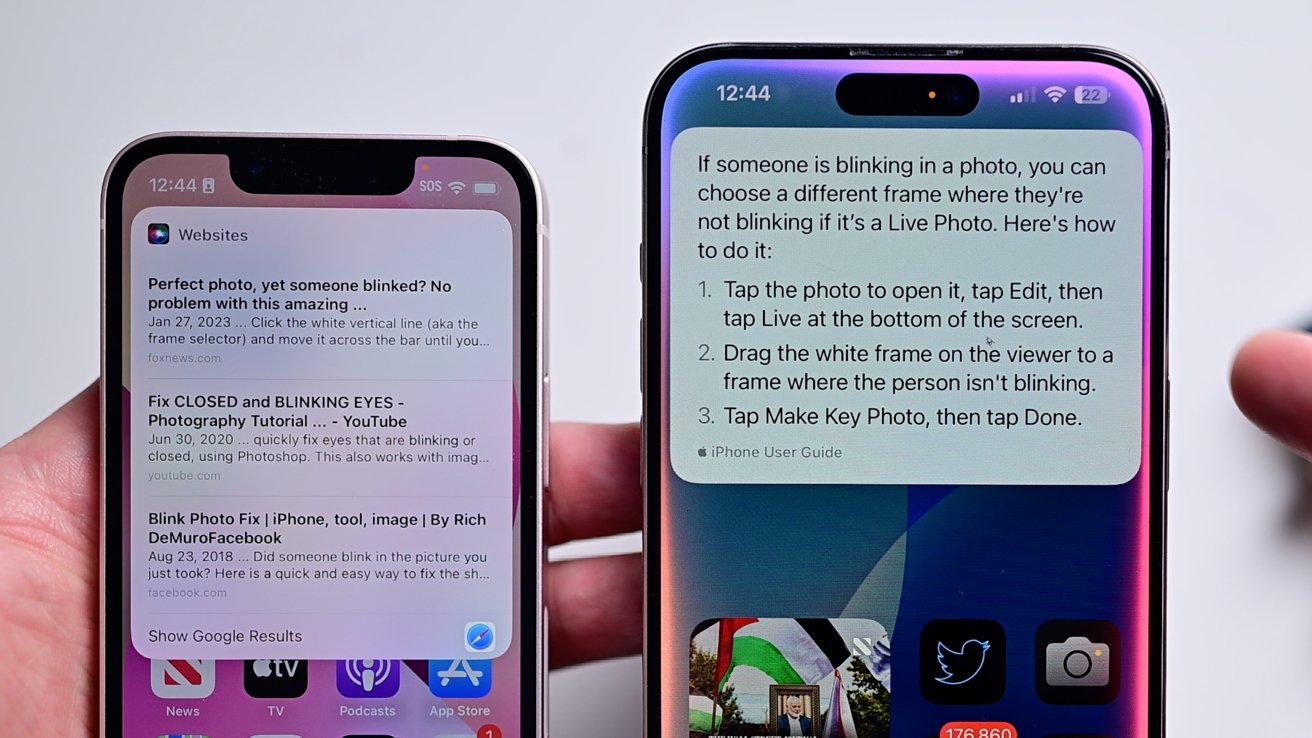

AppleInsider readers should appreciate the next new feature coming to Siri by way of Apple Intelligence. Siri will be able to answer questions about Apple products, helping curb those late-night support calls we're all familiar with.

Siri can answer many questions about your phone

You can ask things like "How do I send a message later?" or "How do I fix a photo when someone is blinking?" We tested a variety of these and have gotten hit-or-miss answers so far.

Old Siri just gave us search results for blinking in photos

When it worked, it was great, giving step-by-step instructions for whatever we asked, including the two examples above.

Asking something like "How do I log my omeprazole for the day" threw it for a loop. This isn't far off from "how do I log medicine on my iPhone," which gave good results.

Medicine databases are huge and readily available. Apple Intelligence should, in theory, understand context well enough to recognize that omeprazole is a medicine and what we were asking for. Average users may not know how to phrase a question to elicit the correct response, so Siri's ability to parse requests like this needs to improve.

Again, this is an initial beta so we're hopeful that these things do improve by the full launch. Apple does have a new feedback option when using Siri to help provide that necessary guidance.

Apple Intelligence: More of the same -- for now

The new keyboard and new design have prompted us to use Siri more over the past few days. But the more we use it, the clearer it becomes that much of Siri is still the same.

Many results, while they look better, still just show web views or open a browser

Many answers still send you to the web or inevitably require opening a browser window. Even though the changes make Siri easier to use, it doesn't yet know any more than it did before.

For now, Siri is clearly still Siri, and it is missing a lot of functionality that other virtual assistants bring to bear.

The old Siri orb is gone

The good news is most of those features are coming. Other than answering questions about your phone, few of the new features for Siri have been released.

In future updates, Siri will gain onscreen awareness, letting it take actions and respond based on what you're looking at. It'll also be able to understand personal context, pulling from your calendar, messages, emails, and more to become more personal.

Plus, it'll be able to use a dozen app intents to perform actions across third-party apps. These actions apply to browsers, eReaders, photo and camera apps, and more.

ChatGPT is coming to Siri later this year

Not to mention the hotly anticipated integration with ChatGPT. That, and other LLMs, will add a wealth of knowledge that's currently absent.

Together, these changes are poised to deliver a vastly improved Siri. Only time will tell if that will ultimately be enough.

Apple Intelligence and the upgraded Siri are set to arrive with iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1 this fall.

Read on AppleInsider

Comments

Now… or in the near future… AI-Siri will become a real ‘assistant.’

The best part is that it will preserve your privacy… like a real personal assistant. When your assistant does not know the answer… he/she/it will go to the library or the encyclopedia… well… to ChatGPT by now…

Have you enabled "Hey, Siri" in Settings, and is it enabled when locked? Also, there is an Accessibility setting for using "Hey, Siri" when the iPhone is face down.

PS: I personally don’t like to have features accessible from the Lock Screen. In fact, I hate that phone numbers and emails show up on the Lock Screen after a restart. It doesn’t connect with Contacts, but I feel that a full phone number reveals more info than simply a name like Bob being displayed. To rectify that I lock the SIM.

Siri doesn't understand what you say at all, and understanding is really one of the key definitions of something being AI. You can leave out words and it still works, even if those words are critical to getting the action right. In fact, it works better if you say the absolute minimum, as there's less for it to judge wrongly. "Siri, music," for example.

It used to use Wolfram Alpha if it didn't understand, but Apple apparently abandoned that way back in about 2015. You could ask complex things like "How many buses does a whale weigh?" and it'd give you a correct answer. Now it's just a glorified web search for anything it needs "intelligence" for, which is even worse for devices with small screens or no screen at all. "I can show you that on your iPhone"... great.

And that's where the biggest downside is. The sheer number of "I found some results and can show them to you on your iPhone" or "I cannot answer this while you are driving" responses is mind-boggling for something that should help users do stuff without physical interaction with their devices. I'm as big an Apple fanboy as the next guy, but I hate how slow Apple has been on anything Siri-related, especially the consistency and seamlessness across devices that have been Apple's hallmark.

We are clearly moving in the direction of voice interaction (here's looking at you, Picard), and if Apple would spend half as much time developing Siri properly as it does on super-important stuff like phones that are 0.00001 inch thinner than last year's or teaching its employees ridiculous hand gestures for presentations, it'd be scorching the competition and making all of us much happier.