Future versions of Apple's Siri may interpret your emotions

Future versions of Apple's Siri may go beyond voice recognition to enhance accuracy, tapping into FaceTime cameras in the company's devices to simultaneously analyze facial reactions and emotions as users engage in dialog with the voice assistant.

Apple is developing a way to help interpret a user's requests by adding facial analysis to a future version of Siri or another system. The aim is to cut down the number of times a spoken request is misinterpreted, and to do so by attempting to analyze emotions.

"Intelligent software agents can perform actions on behalf of a user," says Apple in US Patent Application Number 20190348037. "Actions can be performed in response to a natural-language user input, such as a sentence spoken by the user. In some circumstances, an action taken by an intelligent software agent may not match the action that the user intended."

"As an example," it continues, "the face image in the video input... may be analysed to determine whether particular muscles or muscle groups are activated by identifying shapes or motions."

Part of the system entails using facial recognition to identify the user and so provide customized actions such as retrieving that person's email or playing their personal music playlists.

It is also intended, however, to read the emotional state of a user.

"[The] user reaction information is expressed as one or more metrics such as a probability that the user reaction corresponds to a certain state such as positive or negative," continues the patent, "or a degree to which the user is expressing the reaction."

This could help in a situation where the spoken command can be interpreted in different ways. In that case, Siri might calculate the most likely meaning and act on it, then use facial analysis to see whether the user is pleased or annoyed.
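As a rough illustration of that act-then-check idea, here is a minimal Python sketch. It is not Apple's implementation: the names `Interpretation`, `Reaction`, `choose_action`, and the 0.6 threshold are all hypothetical, and the camera analysis is replaced by a stand-in function that returns a probability that the user's reaction is negative.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Interpretation:
    action: str         # what the assistant would do
    probability: float  # how likely this reading of the spoken request is


@dataclass
class Reaction:
    p_negative: float   # probability that the user's reaction is negative


def choose_action(
    candidates: List[Interpretation],
    observe_reaction: Callable[[Interpretation], Reaction],
    negative_threshold: float = 0.6,  # hypothetical cut-off, not from the patent
) -> Optional[str]:
    """Try interpretations from most to least likely; keep the first one
    that the user does not appear to be annoyed by."""
    ranked = sorted(candidates, key=lambda c: c.probability, reverse=True)
    for candidate in ranked:
        reaction = observe_reaction(candidate)
        if reaction.p_negative < negative_threshold:
            return candidate.action
    return None  # every interpretation drew a negative reaction


# Usage with made-up reactions standing in for the camera analysis.
candidates = [Interpretation("call Mom", 0.55), Interpretation("call Tom", 0.45)]
fake_reactions = {"call Mom": Reaction(0.9), "call Tom": Reaction(0.1)}
print(choose_action(candidates, lambda c: fake_reactions[c.action]))
```

In this made-up example the most likely reading draws an annoyed reaction, so the sketch falls back to the second candidate.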

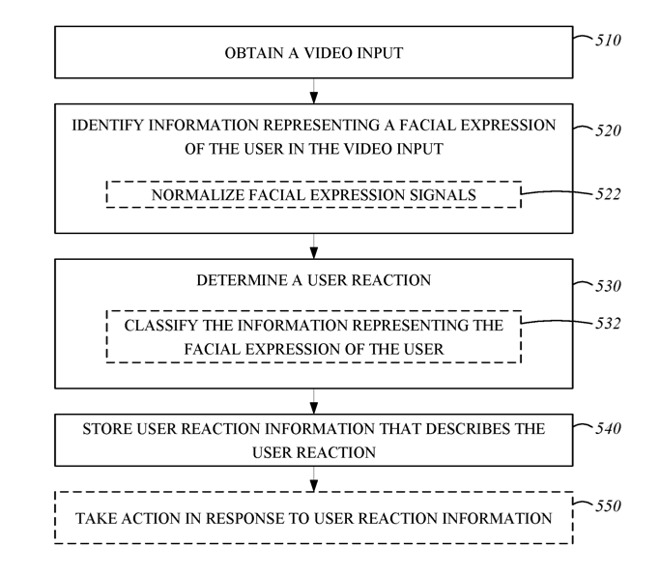

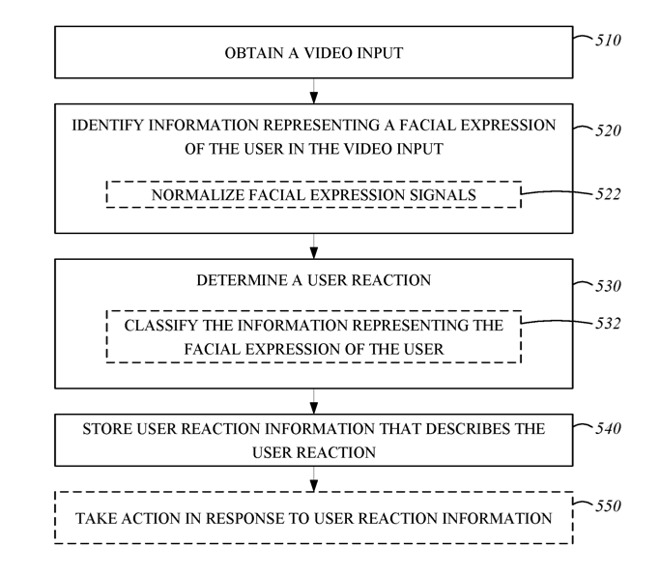

Detail from the patent regarding one process through which facial expressions can be acted upon

The system works by "obtaining, by a microphone, an audio input, and obtaining, by a camera, one or more images." Apple notes that expressions can have different meanings, but its method classifies the range of possible meanings according to the Facial Action Coding System (FACS).

This is a standard for facial taxonomy, first created in the 1970s, which categorizes every possible facial expression into an extensive reference catalog.

Using FACS, Apple's system assigns a score to each possible interpretation to determine which is most likely correct, and Siri can then react or respond accordingly.
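To make the scoring idea concrete, the following Python sketch turns a handful of FACS action units into a single probability that a reaction is positive. The action unit names (AU4 brow lowerer, AU6 cheek raiser, AU12 lip corner puller, AU15 lip corner depressor) come from FACS itself, but the weights, the logistic mapping, and the function name `positive_probability` are assumptions made purely for illustration. The patent does not publish a formula.

```python
import math
from typing import Dict

# Hypothetical weights: positive values lean "pleased", negative lean "annoyed".
AU_WEIGHTS: Dict[str, float] = {
    "AU6": 0.4,    # cheek raiser
    "AU12": 0.6,   # lip corner puller (smile)
    "AU4": -0.6,   # brow lowerer
    "AU15": -0.4,  # lip corner depressor
}


def positive_probability(au_intensities: Dict[str, float]) -> float:
    """Map detected action-unit intensities (each 0..1) to a probability
    that the reaction is positive, using an assumed logistic squashing."""
    score = sum(
        AU_WEIGHTS.get(au, 0.0) * level for au, level in au_intensities.items()
    )
    return 1.0 / (1.0 + math.exp(-4.0 * score))


# Usage: a smile scores above 0.5, a furrowed brow below it.
smile = positive_probability({"AU6": 1.0, "AU12": 1.0})
frown = positive_probability({"AU4": 1.0})
print(smile > 0.5, frown < 0.5)
```

A real system would presumably learn such weights from data rather than hard-code them. The point here is only that muscle-level observations can be reduced to the kind of "positive or negative" metric the filing describes.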

Of the seven inventors credited on Apple's filing, only one has had a previous patent. Jerremy Holland is also listed as sole inventor on a 2014 Apple patent to do with syncing video playback on a media device. However, inventor Nicholas E. Apostoloff is cited in numerous other patents for his work on analysing and manipulating video using machine learning techniques.

Keep up with all the Apple news with your iPhone, iPad, or Mac. Say, "Hey, Siri, play AppleInsider Daily," -- or bookmark this link -- and you'll get a fast update direct from the AppleInsider team.

Comments

Yes I've seen some pretty weird goings on like that. Not related as such but all of a sudden certain artists can't be found in my iTunes Match by HomePods even though they are there on all other devices. I have to assume Apple is hard at work tweaking Siri's back end (pardon the expression lol) and these are anomalies caused by this and hopefully temporary.

"Touchy today, aren't we!"

Unfortunately it was a case of Siri responding to something that wasn't aimed at it in the first place.

Made me laugh out loud, though.

this will end badly.

Maybe Apple needs to scrap Siri completely and start again. It can't even get the basic things right virtually all the time.