HomePod of the future may only answer Siri queries if you look at it

Apple device users may not even have to say the word "Siri" in the future, with Apple researching ways to use gaze detection so that a device knows when it's wanted.

Owners of multiple devices in the Apple ecosystem will be familiar with one of the lesser-known problems of using Siri, namely getting it to work on one device and not another. When you are in a room that contains an iPhone, an iPad, and a HomePod mini, it can be hard to work out which device will actually respond to a query, and it may not be the one you wanted.

Furthermore, not everyone feels comfortable using the "Hey Siri" prompt at all, which is why Apple has recently announced cutting it down to just "Siri," at least on certain devices.

Even so, errant uses of "Siri" or "Hey Siri" -- and trigger phrases of other digital assistants -- on television and radio can still cause queries to be made that users may not want to take place.

Then there is also the possibility of users needing to interact with devices without using their voice at all. There can of course be situations where a command needs to be issued from a distance, or where it could be socially awkward to talk to the device.

In a newly granted patent called "Device control using gaze information," Apple suggests it may be possible to command Siri visually. Specifically, it proposes that devices could detect a user's gaze to determine if that user wants that device to respond.

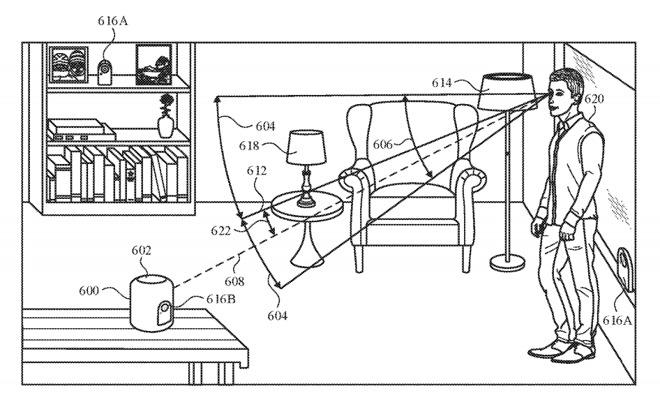

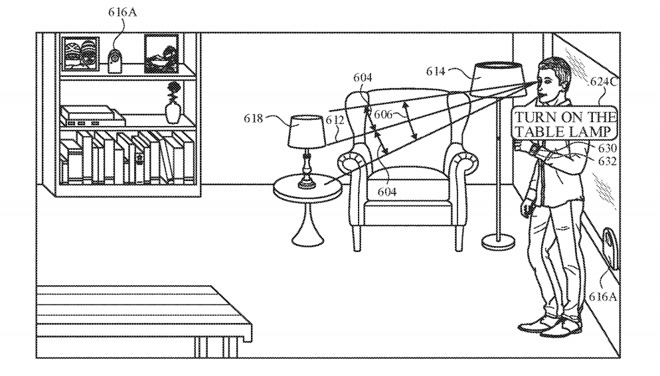

The filing suggests a system that uses cameras and other sensors capable of determining the location of a user and the path of their gaze to work out what they are looking at. This information could be used to automatically put the looked-at device into an instruction-accepting mode where it actively listens, in the expectation that instructions will be given to it.

If the digital assistant hears what could be a command while in this state, it can then carry it out as if the verbal trigger had been said beforehand, saving users a step. Phrases like "Hey Siri" would still function, especially when the user isn't looking at the device.
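The behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Apple's implementation: the function name `extract_command`, the `user_is_gazing` flag, and the simple text matching are all hypothetical, and the patent does not describe how speech is parsed.

```python
from typing import Optional

# Trigger phrases mentioned in the article; longest first so "hey siri" wins.
TRIGGERS = ("hey siri", "siri")


def extract_command(utterance: str, user_is_gazing: bool) -> Optional[str]:
    """Return the command to act on, or None if the speech should be ignored.

    Hypothetical helper: a spoken trigger always works, while gaze lets the
    user skip the trigger entirely.
    """
    text = utterance.strip()
    lowered = text.lower()
    for trigger in TRIGGERS:
        next_char = lowered[len(trigger):len(trigger) + 1]
        # Require a word boundary so that "Sirius" does not count as "Siri".
        if lowered.startswith(trigger) and not next_char.isalnum():
            rest = text[len(trigger):].lstrip(" ,.!")
            return rest or None
    # No trigger phrase: only accept the speech if the user is looking at us.
    if user_is_gazing and text:
        return text
    return None
```

With this sketch, "play Elvis" is treated as a command only when `user_is_gazing` is true, while "Hey Siri, play Elvis" is accepted either way.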

Using gaze as a signal of whether the user wants to give the digital assistant a command is also useful in other ways. For example, detecting that the user is looking at the device could confirm that they actively intend it to follow commands.

A digital assistant for a HomePod could potentially only interpret a command if the user is looking at it, the patent suggests.

In practical terms, this could mean the difference between the device interpreting a sentence fragment such as "play Elvis" as a command or as part of a conversation that it should otherwise ignore.

For owners of multiple devices, gaze detection could allow for an instruction to be made to one device and not others, singled out by looking at it.

The filing mentions that simply looking at the device won't necessarily register as an intention for it to listen for instruction, as a set of "activation criteria" needs to be met. This could merely consist of a continuous gaze for a period of time, like a second, to eliminate minor glances or false positives from a person turning their head.
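A continuous-gaze criterion of this kind could be sketched as follows. This is a minimal illustration, assuming the sensor delivers timestamped yes/no samples; the `GazeActivator` class, its `update` method, and the one-second default are hypothetical and only mirror the "like a second" example above.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GazeActivator:
    """Reports activation once gaze has been held without interruption."""

    required_seconds: float = 1.0  # assumed threshold, per the article's example
    _gaze_started: Optional[float] = field(default=None, init=False)

    def update(self, timestamp: float, looking_at_device: bool) -> bool:
        """Feed one sensor sample; return True once the gaze has lasted long enough."""
        if not looking_at_device:
            # Any break in the gaze resets the timer, filtering out brief glances.
            self._gaze_started = None
            return False
        if self._gaze_started is None:
            self._gaze_started = timestamp
        return timestamp - self._gaze_started >= self.required_seconds
```

A glance that ends before the threshold resets the timer, so a person simply turning their head past the device would not activate it.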

The angle of the head is also important. For example, if the device sits on a bedside cabinet and the user is lying in bed asleep, the device could count the user facing it as a gaze, depending on how they lie, but could discount it on recognizing that the user's head is on its side rather than upright.

In response, a device could provide a number of indicators to the user that the assistant has been activated by a glance, such as a noise or a light pattern from built-in LEDs or a display.

Given the ability to register a user's gaze, it would also be feasible for the system to detect if the user is looking at an object they want to interact with, rather than the device holding the virtual assistant. For example, a user could be looking at one of multiple lamps in the room, and the device could use the context of the user's gaze to work out which lamp the user wants turned on from a command.
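One way to picture the lamp example is to compare the direction of the user's gaze with the direction from the user's eyes to each known object, and pick the closest match. The sketch below assumes the system already knows 3D positions for the user's eyes and for each object; the function `gazed_object`, the coordinate layout, and the 10-degree tolerance are all hypothetical, and the patent does not specify this method.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def _angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in radians between two vectors (pi if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        return math.pi
    # Clamp to guard against rounding slightly outside acos's domain.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def gazed_object(
    eye: Vec3,
    gaze_direction: Vec3,
    objects: Dict[str, Vec3],
    max_angle_degrees: float = 10.0,  # assumed tolerance
) -> Optional[str]:
    """Return the object closest to the gaze direction, or None if none is close."""
    best_name: Optional[str] = None
    best_angle = math.radians(max_angle_degrees)
    for name, position in objects.items():
        to_object = (position[0] - eye[0], position[1] - eye[1], position[2] - eye[2])
        angle = _angle_between(gaze_direction, to_object)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


lamps = {"desk lamp": (2.0, 0.1, 0.0), "floor lamp": (0.0, 3.0, 0.0)}
target = gazed_object((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), lamps)
```

Here a spoken "turn on the lamp" could be resolved to `target`, the lamp the user is looking toward, and a gaze pointing at neither lamp yields `None` so that the command can fall back to another way of choosing.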

Nearby devices could detect the user's gaze at other controllable objects in a room.

Originally filed on August 28, 2019, the patent lists its inventors as Sean B. Kelly, Felipe Bacim De Araujo E Silva, and Karlin Y. Bark.

Apple files numerous patent applications on a weekly basis, but while the existence of a patent indicates areas of interest for its research and development efforts, it does not guarantee the idea will be used in a future product or service.

Being able to remotely operate a device has cropped up in earlier patent filings a few times. For example, a 2015 patent for "Learning-based estimation of hand and finger pose" suggested the use of an optical 3D mapping system for hand gestures, one that could feasibly be similar in concept to Microsoft's Kinect hardware.

Another filing from 2018 for a "Multi media computing or entertainment system for responding to user presence and activity" proposes the introduction of three-dimensional sensor data to produce a depth map of the room, one which could be used for whole-room gesture recognition.

Gaze detection has also been explored extensively, most recently in connection with the Apple Vision Pro headset.

Read on AppleInsider

Comments

Such a feature would be useful for hands-free Apple TV use or easier use of Siri on iPhones (which already happen to include gaze detection features).

I would hope to see this rely fully on Face ID-type tech, rather than a full-vision (AI-based) camera system, else I believe I would be too worried to have one in more personal areas.

Great idea and hope this is the case.

I like that all devices are activated by ONE phrase. The problem is having the wrong device activate. @EsquireCats may be onto something.

Point being: if a visual clue improved upon that for her, I can't imagine it being a bad thing.

We have 13 HomePods. My wife & I each have an iPhone, Apple Watch & iPad. Always only one device answers. Unless the iPhone or iPad are unlocked and in use, a HomePod answers. If you are actively using an iPhone or iPad, they will take priority and only one will answer. This works by all the devices talking on the same reliable WiFi network. Multiple devices hear the "Hey Siri!" and they decide which will answer & inform the others to not answer.

You need to troubleshoot your home infrastructure. This is a problem that Apple solved from the beginning.