Future Mac Pro may use Apple Silicon & PCI-E GPUs in parallel

Despite Apple Silicon currently working solely with its own on-board GPU cores, Apple is researching how to support more options, like PCI-E GPUs, all working in tandem.

One thing Intel Macs had that Apple Silicon ones do not is the ability to use GPUs in external enclosures over Thunderbolt, or internally in a Mac Pro. At present, Apple Silicon simply has no provision for either.

It might not be an issue that concerns most Mac users, but it is a big deal for some, particularly Mac Pro buyers.

Now, however, a series of four newly revealed patent applications appears to show that Apple is at least considering the issue.

For instance, unlike typical RAM chips in a device, the new Unified Memory system saw the RAM installed on the central processor. It means you can't upgrade it later, but it also radically sped up how fast that CPU could use RAM.

Apple Silicon processors come with graphics cores built-in for similar reasons. And in order to support third-party ones, Apple would have to find a way to achieve several things.

Everything else in the list is addressed by one or more of the four newly-revealed patent applications.

"APIs such as Metal and OpenCI give software developers an interface to access the compute power of the GPU for their applications," it continues. "In recent times, software developers have been moving substantial portions of their applications to using the GPU."

Apple uses the term "kick" to refer to the kind of discrete unit of graphics work that a GPU may perform. It then says that there is an issue of getting these kicks to the right GPUs.

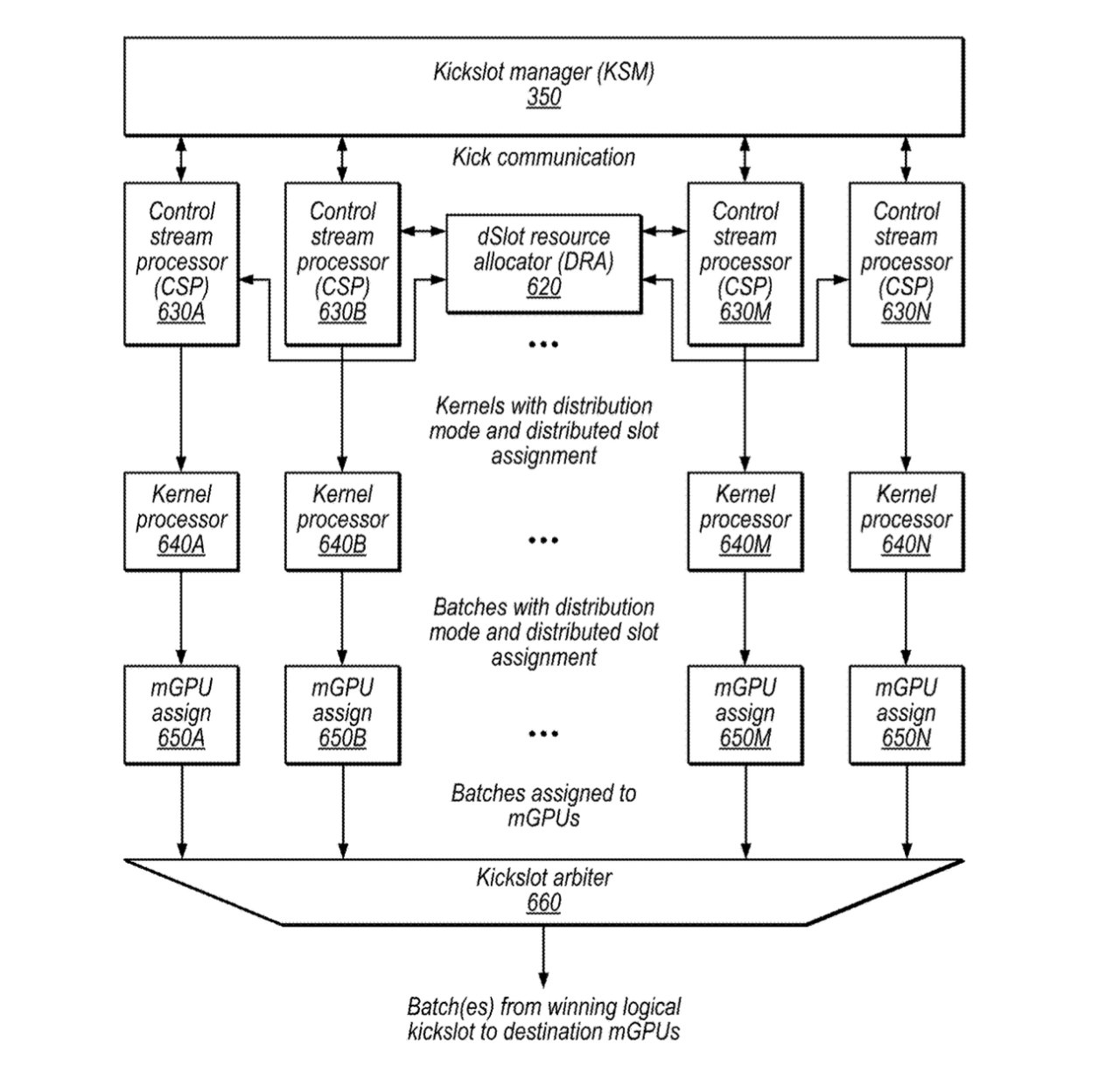

This diagram is repeated in most of the new patent applications.

"Data master circuitry (e.g., a compute data master, vertex data master, and pixel data master) may distribute work from these kicks to multiple replicated shader cores," it says, "e.g., over a communications fabric."

A graphics card may occupy what Apple calls a "kickslot" which appears to be little more than a PCI-E slot either internal or external to the computer. There could be two or more of these, with macOS switching between them.

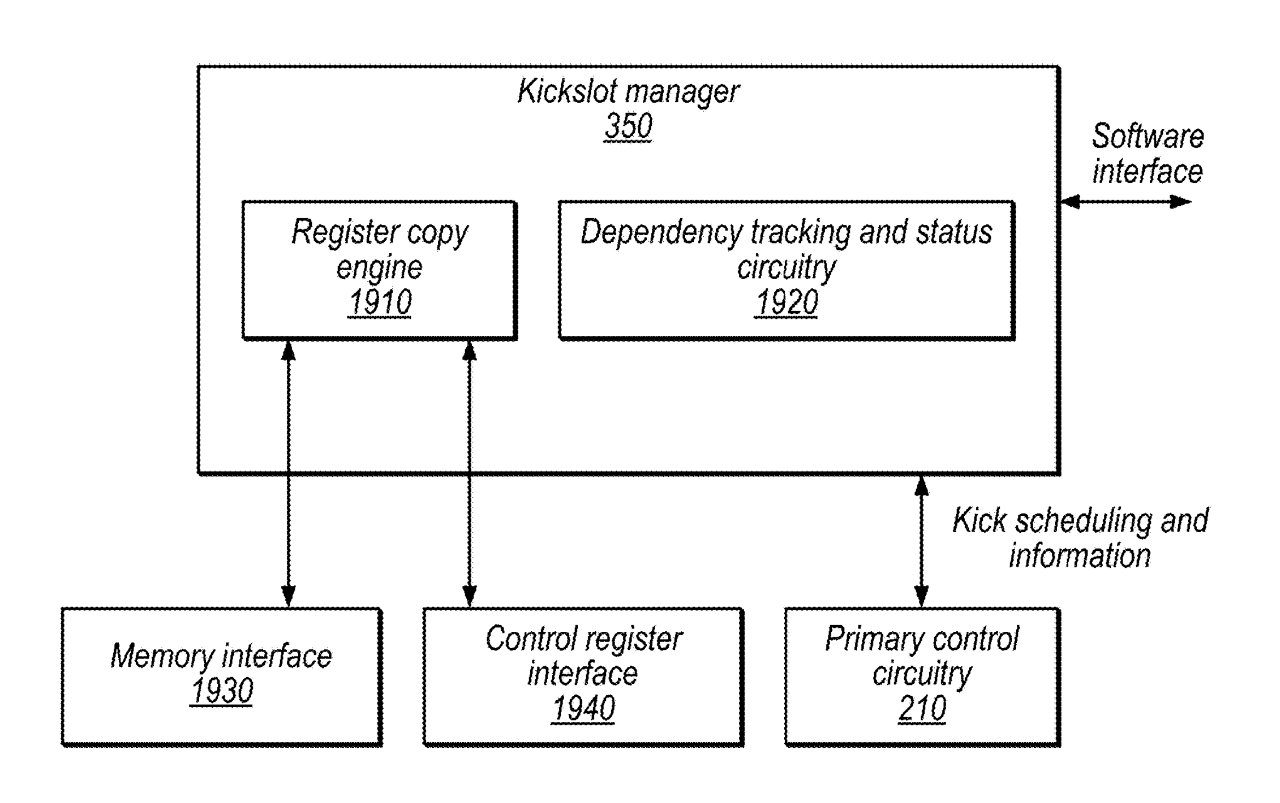

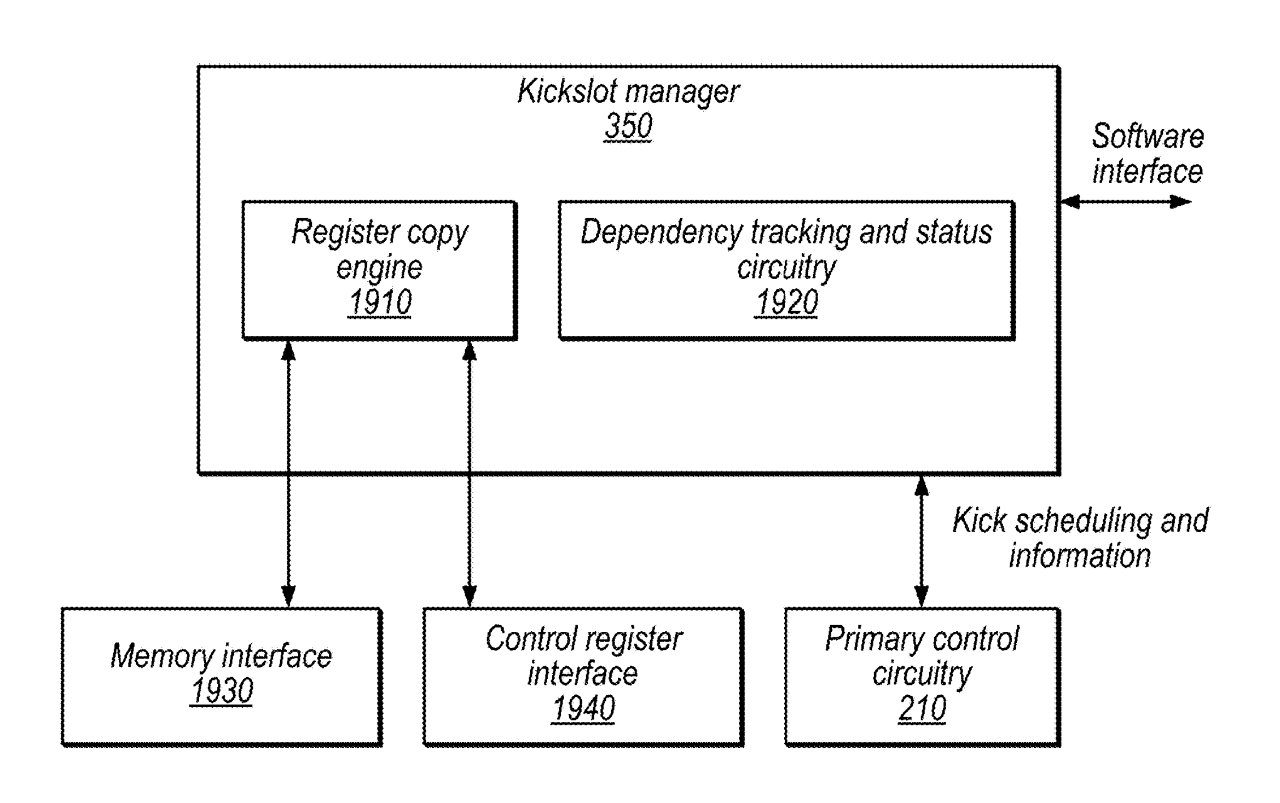

Apple's new patent applications include one called "Kickslot Manager Circuitry For Graphics Processors," which is part of achieving the same result.

"Slot manager circuitry may store, using an entry of the tracking slot circuitry, software-specified information for a set of graphics work," says Apple. "The slot manager circuitry may prefetch, from the location and prior to allocating shader core resources for the set of graphics work, configuration register data for the set of graphics work."

Detail from a patent application concerning the scheduling of data being sent to more than one GPU

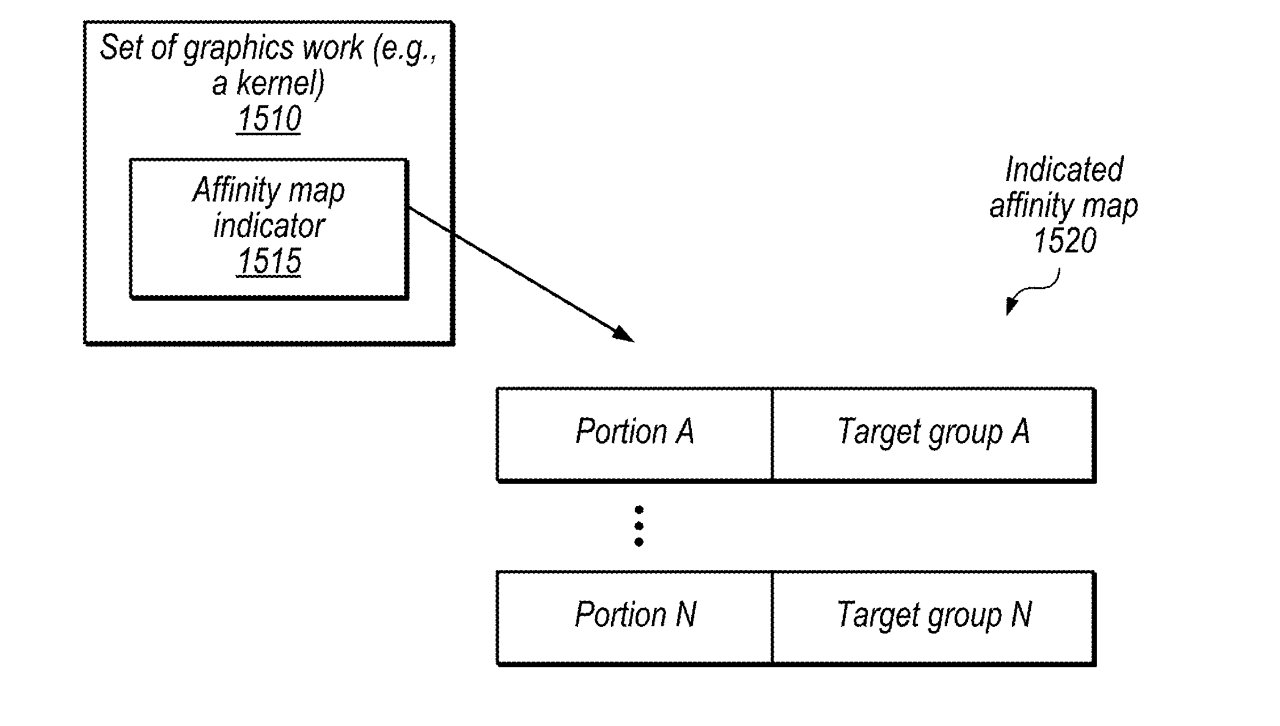

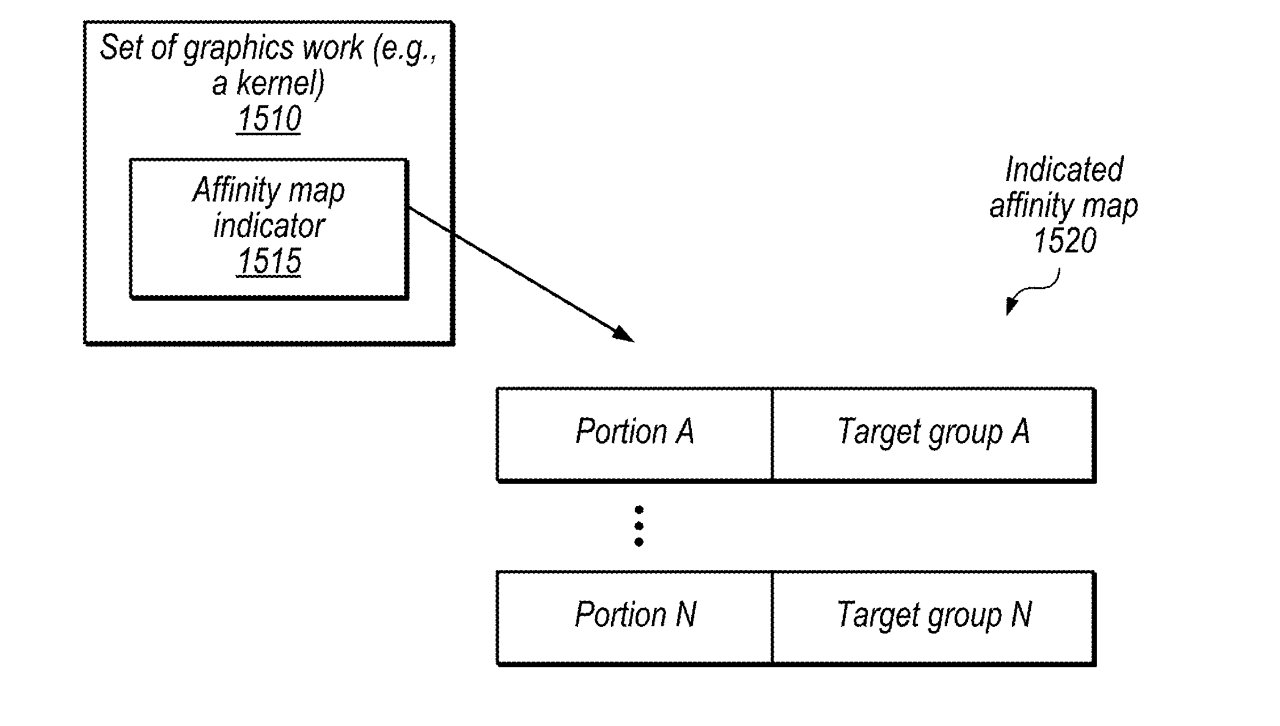

So two or more GPU cards can work together, but that requires scheduling. Hence Apple's third new patent application, "Affinity-Based Graphics Scheduling."

"Distribution circuitry may receive a software-specified set of graphics work," says Apple in this application, "and a software-indicated mapping of portions of the set of graphics work to groups of graphics processor sub-units."

"This may improve cache efficiency, in some embodiments," notes Apple, "by allowing graphics work that accesses the same memory areas to be assigned to the same group of sub-units that share a cache."

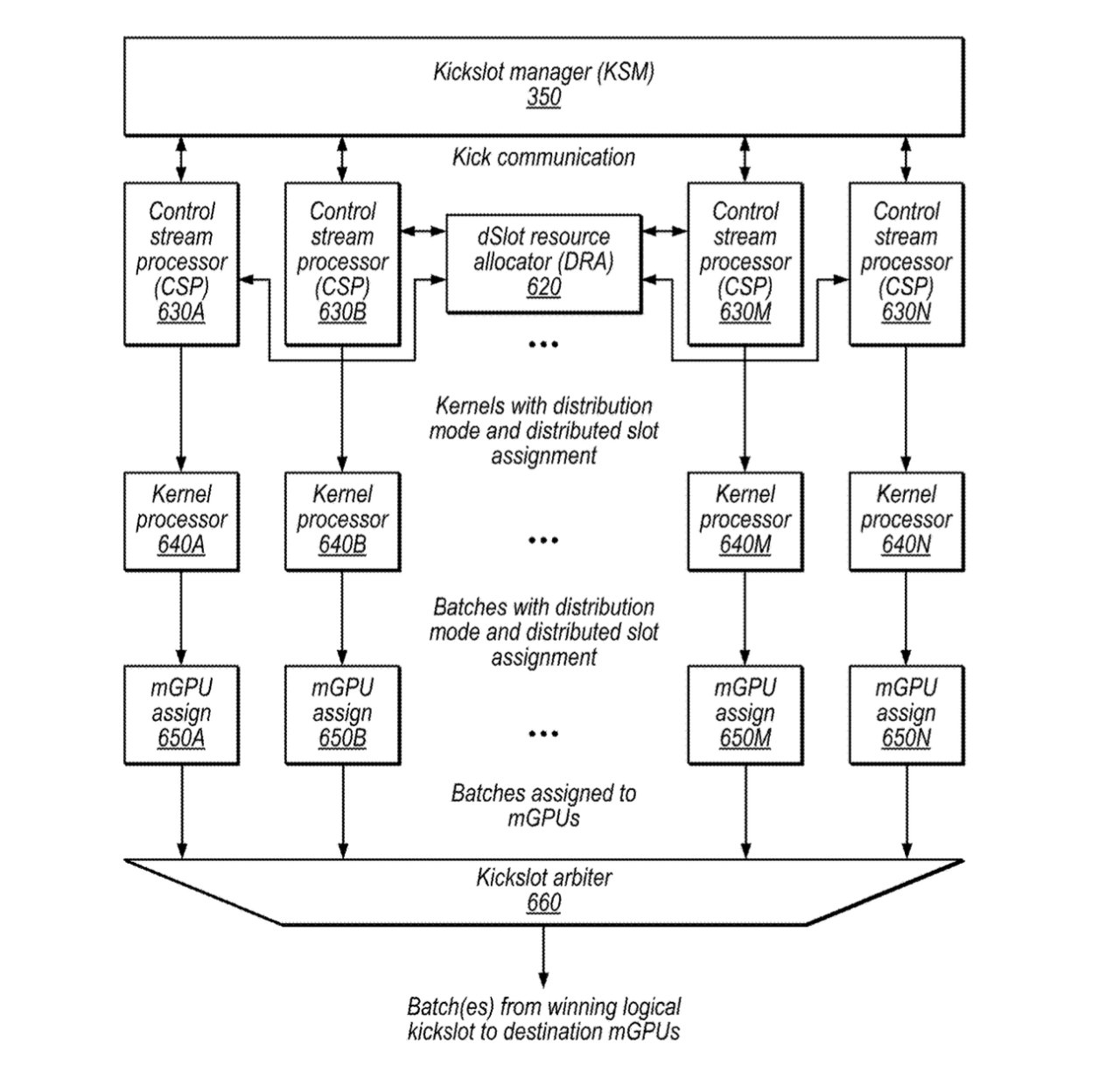

That leaves getting back the data from GPU, and that comes over the more general patent application called, "Software Control Techniques For Graphics Hardware That Supports Logical Slots."

This patent application includes descriptions of how control "circuitry may determine mappings between logical slots and distributed hardware slots for different sets of graphics work."

"Various mapping aspects may be software-controlled," it says. "For example, software may specify one or more of the following: priority information for a set of graphics work, to retain the mapping after completion of the work, a distribution rule, a target group of sub-units, a sub-unit mask, a scheduling policy, to reclaim hardware slots from another logical slot, etc."

Detail from the patent applications showing an overview of the process

It appears that every issue raised by the desire to use multiple graphics cards has at least been investigated by Apple.

So that leaves the obvious question about whether Apple will make a Mac that adds multiple GPU support to Apple Silicon -- and when.

So despite all of the evidence, it is not guaranteed that Apple will support multiple GPUs in Macs -- and in particular, it can't be presumed that the next Mac Pro that's expected soon will.

But the intention is clearly there, and this is not a chance collection of unrelated patents happening to be applied for at the same time. Three of the four patents, for instance, name Andrew M. Havlir as an inventor, and three name Steven Fishwick.

Read on AppleInsider

One thing Intel Macs had that Apple Silicon ones do not, is the ability to use GPUs in external enclosures across Thunderbolt, or internally in a Mac Pro. There are just no provisions with Apple Silicon to do so, at present.

It might not be an issue that concerns most Mac users. It is a big deal for some -- and particularly for Mac Pro buyers.

Now, however, a series of four newly-revealed patent applications appear to show that Apple is at least considering this issue.

Why Apple abandoned multiple GPU support

Apple Silicon brought dramatic, practically unheard-of performance and capability improvements over the earlier Intel processors. Part of that came from how the new Apple-designed processors cut down on previous bottlenecks.

For instance, unlike the separate RAM chips in a typical device, the Unified Memory system places the RAM in the same package as the processor. It means you can't upgrade it later, but it also radically sped up how fast the processor could use RAM.

Apple Silicon processors have graphics cores built in for similar reasons. To support third-party GPUs as well, Apple would have to find a way to achieve several things:

- Physically include space for GPU cards, or connectors for external GPUs

- Determine when a task is better served by another GPU

- Then route data to that GPU

- Handle how it gets data back from the GPU

Beyond the first item, everything on the list is addressed by one or more of the four newly revealed patent applications.

The benefits of multiple GPU support

"Given their growing compute capabilities, graphics processing units (GPUs) are now being used extensively for large-scale workloads," says Apple in the patent application, "Logical Slot To Hardware Slot Mapping For Graphics Processors."

"APIs such as Metal and OpenCL give software developers an interface to access the compute power of the GPU for their applications," it continues. "In recent times, software developers have been moving substantial portions of their applications to using the GPU."

Apple uses the term "kick" for a discrete unit of graphics work that a GPU may perform. It then says there is an issue of getting these kicks to the right GPUs.

This diagram is repeated in most of the new patent applications.

"Data master circuitry (e.g., a compute data master, vertex data master, and pixel data master) may distribute work from these kicks to multiple replicated shader cores," it says, "e.g., over a communications fabric."

A graphics card may occupy what Apple calls a "kickslot," which appears to be little more than a PCI-E slot, either internal or external to the computer. There could be two or more of these, with macOS switching between them.
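The data master passage quoted above is abstract, so as a rough illustration only, here is a toy Python model of data masters handing out a kick's work to a shared pool of replicated shader cores. The `Kick` and `DataMaster` names are hypothetical, and round-robin assignment is an assumption made for the sketch; the application does not say how the work is divided.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Kick:
    """Hypothetical model of a "kick": one discrete unit of graphics work."""
    kind: str         # "compute", "vertex" or "pixel" (labels assumed)
    work_items: list  # opaque pieces of work, e.g. threadgroups or tiles


class DataMaster:
    """Hypothetical data master that spreads one kind of kick over shader cores.

    Round-robin assignment is an illustrative assumption; the application
    only says work may be distributed to replicated shader cores.
    """

    def __init__(self, kind: str, num_cores: int):
        self.kind = kind
        self.num_cores = num_cores

    def distribute(self, kick: Kick) -> dict:
        if kick.kind != self.kind:
            raise ValueError(f"{self.kind} data master cannot handle a {kick.kind} kick")
        assignment = defaultdict(list)  # shader core index -> work items
        cores = cycle(range(self.num_cores))
        for item in kick.work_items:
            assignment[next(cores)].append(item)
        return dict(assignment)


# One data master per kind of work, all sharing the same four shader cores.
masters = {k: DataMaster(k, num_cores=4) for k in ("compute", "vertex", "pixel")}
kick = Kick("compute", [f"tg{i}" for i in range(6)])
result = masters["compute"].distribute(kick)
# result == {0: ["tg0", "tg4"], 1: ["tg1", "tg5"], 2: ["tg2"], 3: ["tg3"]}
```

Keeping one data master per kind of work, all feeding the same cores, mirrors the compute, vertex, and pixel data masters the patent names; how real hardware balances them is not described.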

Switching between GPUs

Switching between these graphics cards requires technology similar to Nvidia's old Scalable Link Interface (SLI), which coordinated multiple graphics cards working together.

Apple's new patent applications include one called "Kickslot Manager Circuitry For Graphics Processors," which is part of achieving the same result.

"Slot manager circuitry may store, using an entry of the tracking slot circuitry, software-specified information for a set of graphics work," says Apple. "The slot manager circuitry may prefetch, from the location and prior to allocating shader core resources for the set of graphics work, configuration register data for the set of graphics work."

Detail from a patent application concerning the scheduling of data being sent to more than one GPU
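One reading of the slot manager passage quoted above is that the manager records where a kick's configuration lives, fetches that configuration early, and only then hands out shader cores, so setup is not left waiting on memory once cores become free. The Python sketch below models that ordering under stated assumptions: `SlotManager`, `TrackingEntry`, and the dictionary standing in for memory are all hypothetical, and this is not Apple's design.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrackingEntry:
    """One entry in the (hypothetical) tracking slot circuitry."""
    kick_id: str
    config_addr: int               # software-specified location of register data
    config: Optional[dict] = None  # filled in by the prefetch step
    cores: Optional[list] = None   # filled in when shader cores are allocated


class SlotManager:
    """Toy model of slot manager behaviour; every name here is hypothetical."""

    def __init__(self, num_slots: int, memory: dict, free_cores: list):
        self.slots = [None] * num_slots  # tracking slot entries (None = free)
        self.memory = memory             # stands in for memory holding register data
        self.free_cores = list(free_cores)

    def store(self, kick_id: str, config_addr: int) -> int:
        """Record software-specified info for a set of work in a free entry."""
        for i, entry in enumerate(self.slots):
            if entry is None:
                self.slots[i] = TrackingEntry(kick_id, config_addr)
                return i
        raise RuntimeError("no free tracking slot")

    def prefetch(self, slot: int) -> None:
        """Fetch configuration register data before any cores are allocated."""
        entry = self.slots[slot]
        entry.config = dict(self.memory[entry.config_addr])

    def allocate(self, slot: int, count: int) -> list:
        """Hand out shader cores; refuses if the config was not prefetched."""
        entry = self.slots[slot]
        if entry.config is None:
            raise RuntimeError("configuration not prefetched yet")
        if count > len(self.free_cores):
            raise RuntimeError("not enough free shader cores")
        entry.cores = [self.free_cores.pop(0) for _ in range(count)]
        return entry.cores

    def release(self, slot: int) -> None:
        """Return the cores and free the tracking entry once the work is done."""
        entry = self.slots[slot]
        self.free_cores.extend(entry.cores or [])
        self.slots[slot] = None


mgr = SlotManager(num_slots=2, memory={0x100: {"threads_per_group": 64}}, free_cores=[0, 1, 2, 3])
slot = mgr.store("kick-A", 0x100)
mgr.prefetch(slot)             # register data is ready before any core is assigned
cores = mgr.allocate(slot, 2)  # cores 0 and 1
```

Making `allocate` refuse un-prefetched entries is just a way to make the ordering in the quote explicit; real circuitry would more likely overlap the fetch with other work than fail.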

So two or more GPU cards can work together, but that requires scheduling. Hence Apple's third new patent application, "Affinity-Based Graphics Scheduling."

"Distribution circuitry may receive a software-specified set of graphics work," says Apple in this application, "and a software-indicated mapping of portions of the set of graphics work to groups of graphics processor sub-units."

"This may improve cache efficiency, in some embodiments," notes Apple, "by allowing graphics work that accesses the same memory areas to be assigned to the same group of sub-units that share a cache."

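To make the cache argument in the affinity quotes above concrete, here is a minimal Python sketch. It assumes a hypothetical software policy that maps every portion of work touching the same memory region to the same group of sub-units, plus a distributor that honours that mapping. The function names, region labels, and round-robin within a group are illustrative assumptions, not details from the application.

```python
from collections import defaultdict


def affinity_by_region(portion_regions: dict, num_groups: int) -> dict:
    """Hypothetical software policy: every portion of work that touches the
    same memory region is mapped to the same sub-unit group."""
    region_to_group = {}
    mapping = {}
    for portion, region in portion_regions.items():
        if region not in region_to_group:
            # Spread distinct regions across groups in first-seen order.
            region_to_group[region] = len(region_to_group) % num_groups
        mapping[portion] = region_to_group[region]
    return mapping


def distribute(mapping: dict, groups: list) -> dict:
    """Toy distribution circuitry: honour the software mapping, then
    round-robin across the sub-units inside each group (an assumption)."""
    assignment = defaultdict(list)  # sub-unit -> portions of work
    next_index = [0] * len(groups)
    for portion, group in mapping.items():
        members = groups[group]
        unit = members[next_index[group] % len(members)]
        next_index[group] += 1
        assignment[unit].append(portion)
    return dict(assignment)


# Portions p0, p1 and p4 read texture A; p2 and p3 read texture B.
portion_regions = {"p0": "texA", "p1": "texA", "p2": "texB", "p3": "texB", "p4": "texA"}
groups = [["su0", "su1"], ["su2", "su3"]]  # each inner list shares one cache
mapping = affinity_by_region(portion_regions, num_groups=len(groups))
assignment = distribute(mapping, groups)
# Every texture-A portion lands on su0 or su1, which share a cache.
```

Without the mapping, a plain round-robin over all four sub-units would scatter texture-A work across both caches, which is the kind of inefficiency the quoted passage suggests affinity mapping can reduce.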
Getting back data from a GPU

So Apple's patent applications cover physically supporting two or more graphics cards, then determining which is best for a particular task. They then describe how work can be divided across the available GPUs.

That leaves getting data back from the GPU, which is covered by the more general patent application, "Software Control Techniques For Graphics Hardware That Supports Logical Slots."

This patent application includes descriptions of how control "circuitry may determine mappings between logical slots and distributed hardware slots for different sets of graphics work."

"Various mapping aspects may be software-controlled," it says. "For example, software may specify one or more of the following: priority information for a set of graphics work, to retain the mapping after completion of the work, a distribution rule, a target group of sub-units, a sub-unit mask, a scheduling policy, to reclaim hardware slots from another logical slot, etc."

Detail from the patent applications showing an overview of the process
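The following Python sketch shows how a few of the software controls quoted above (priority, a sub-unit mask, retaining the mapping after completion, and reclaiming slots from another logical slot) could interact in a logical-to-hardware slot mapper. It is a toy model under loud assumptions: one hardware slot per sub-unit, hypothetical names such as `SlotMapper` and `SlotControls`, and no modelling of the distribution rules or scheduling policies the application also mentions.

```python
from dataclasses import dataclass


@dataclass
class SlotControls:
    """Software-specified controls for one logical slot (field names hypothetical)."""
    priority: int = 0
    subunit_mask: int = 0b1111    # bit i set => sub-unit i may be used
    retain_mapping: bool = False  # keep hardware slots after the work completes
    may_reclaim: bool = False     # may take slots held by lower-priority logical slots


class SlotMapper:
    """Toy logical-to-hardware slot mapper; one hardware slot per sub-unit (assumed)."""

    def __init__(self, num_subunits: int):
        self.owner = [None] * num_subunits  # hardware slot index -> logical slot id
        self.controls = {}                  # logical slot id -> SlotControls

    def map(self, logical: int, ctl: SlotControls) -> list:
        """Claim every permitted hardware slot that is free, already ours,
        or reclaimable from a lower-priority logical slot."""
        self.controls[logical] = ctl
        granted = []
        for hw, current in enumerate(self.owner):
            if not (ctl.subunit_mask >> hw) & 1:
                continue  # sub-unit masked off by software
            free_or_ours = current is None or current == logical
            reclaimable = (
                current is not None
                and ctl.may_reclaim
                and self.controls[current].priority < ctl.priority
            )
            if free_or_ours or reclaimable:
                self.owner[hw] = logical
                granted.append(hw)
        return granted

    def complete(self, logical: int) -> None:
        """Release hardware slots when work finishes, unless told to retain them."""
        if self.controls[logical].retain_mapping:
            return
        self.owner = [None if o == logical else o for o in self.owner]


mapper = SlotMapper(num_subunits=4)
background = mapper.map(0, SlotControls(priority=0))  # takes all four slots
urgent = mapper.map(1, SlotControls(priority=5, subunit_mask=0b0011, may_reclaim=True))
# urgent == [0, 1]: reclaimed from logical slot 0, which keeps slots 2 and 3
mapper.complete(1)  # not retained, so slots 0 and 1 are freed
```

In real hardware, reclaiming a slot would presumably require saving or draining the displaced work; the sketch simply reassigns ownership.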

It appears that every issue raised by the desire to use multiple graphics cards has at least been investigated by Apple.

That leaves the obvious question of whether Apple will make a Mac that adds multiple GPU support to Apple Silicon, and when.

When we'll see multiple graphics cards in a Mac

Apple applies for patents constantly, and there is no guarantee that even granted patents will lead directly to products. Plus, patents may be applied for years before Apple can use them.

So despite all of this evidence, it is not guaranteed that Apple will support multiple GPUs in Macs. In particular, it can't be presumed that the next Mac Pro, which is expected soon, will.

But the intention is clearly there, and this is not a chance collection of unrelated patents happening to be applied for at the same time. Three of the four patents, for instance, name Andrew M. Havlir as an inventor, and three name Steven Fishwick.

Read on AppleInsider

Comments

Optional GPU expansion card(s) augmenting the M2 Ultra’s integrated GPU is the only way I can see an Apple Silicon Mac Pro truly matching or exceeding the capabilities of the current Intel Mac Pro’s dual Radeon Pro W6900X GPU option. At this point my guess would be such an expansion card would use an Apple GPU rather than AMD, but we’ll see.

Thus I think something like this will likely come with the new Mac Pro this year. Very cool to see that indeed Apple is working on this kind of thing.

It's a good overview of what Apple is trying to do. If you think about what he's saying carefully, despite what he says about the Mac joining the "cadence" of the iPhone and iPad, you can see Apple isn't going to release silicon just because: "If we’re not able to deliver something compelling, we won’t engage, right? ... We won’t build the chip."

So, there never has been any reason for Apple Silicon Macs not supporting discrete graphics via M.2, PCIe or Thunderbolt other than Apple simply not wanting to. Which was the same reason why Apple locked Nvidia out of the Mac ecosystem and had people stuck with AMD GPU options only: purely because they wanted to. My guess is that Apple believed that they were capable of creating integrated GPUs that were comparable with Nvidia Ampere and AMD Radeon Pro, especially in the workloads that most Mac Pro buyers use them for. Maybe they are, but the issue may be that it isn't cost-effective to do so for a Mac Pro line that will sell less than a million units a year.

Long-term, there isn’t any reason for Apple to support third-party GPUs from Nvidia, AMD or any other company. Apple has been there, done that, and it is just going to hold Apple back down the road. If Apple supports third-party cards in the Mac Pro, it will just be a short-term solution; long-term it does not work for what Apple may want to build in the future. That’s what we’re not seeing: we don’t know exactly what Apple’s long-term roadmap is, but it definitely isn’t based on being dependent on third-party companies holding them back.

Apple’s lack of AAA games, or of games that can take full advantage of Apple Silicon, is also a short-term problem. It took Apple 13 years to get where they are today with their architecture, so what if it takes another 3 to 5 years to get there? Graphically, most people don’t use, or need, 300 W GPUs; that is also a very small market.

What will probably happen is that Apple will need to roll up its sleeves again and do something in the gaming area. That doesn’t mean buying another company; it means actually getting involved in at least one or two games to show the true potential of Apple Silicon.

I do think that Apple should release their entire range (all form factors) each time they upgrade to a new M-series SoC generation. Apple should not hold back. Apple has never been about absolute performance; it’s always been about how everything works together efficiently. The PC world, however, works on the idea that the highest wattage and highest megahertz solve every problem, like a Dodge Demon, Mustang, or Corvette where the biggest engine wins. That has never been Apple in the computing world: the fit, finish, efficiency, and overall design of the OS have been more important to Apple.

Yes, for some things the bus matters, but for the most part the bus isn’t saturated by normal app-to-GPU communication. TB3/4 has been mostly fine, and TB5 is on the way, right?

That said, Apple went to ridiculous lengths with the M1 Ultra to avoid NUMA concerns. I'm not sure I see them bringing these concerns back just for the Mac Pro.

This has been the case since MacBook Pros with Snow Leopard and beyond.

Drivers have always been the biggest issue with Apple GPU support, and it has always been a hot potato tossed back and forth between Apple's OS group and the 3rd party HW vendor (including Intel for the integrated GPUs). GPU drivers are terribly complex things, and Apple can't/doesn't use the drivers written by AMD/Intel/nVidia... and those vendors aren't likely to put much effort into writing drivers for macOS even if Apple were to start shipping their GPUs in Apple products. They never did before, the market is too small. So will Apple write drivers for any 3rd party devices? Their current direction suggests that the answer is a resounding "no", but that's not definitive and could change. They still have drivers that work on the Intel chip based Macs, and porting to AArch64 may not be terribly difficult. Keeping up with the moving target that is the latest AMD GPUs, though, is a lot of work, on top of supporting Apple's own GPU designs.

The Apple Silicon hardware is almost certainly hardware-compatible with most GPUs from other vendors, thanks to PCI-e / Thunderbolt being standardized in its various flavours. So you can physically install any of the devices, but you need drivers to make them interoperate with macOS, and macOS needs to continue to expose the functionality required to do that (which conceivably it may not on Apple Silicon, since the macOS team may be taking advantage of detailed knowledge of the hardware).