The history -- and triumph -- of Arm and Apple Silicon

Decades ago, Apple started down a path that has revolutionized both its own products and the entire technology industry. Here's where Apple Silicon began, and where it's going.

Apple's M-series chips rejuvenated the Mac range

From the very beginning of Apple Computer, the company has relied on core parts made by other firms. The Apple I had a MOS Technology 6502 chip at its heart, though co-founder Steve Wozniak designed most of the other original hardware, including the circuit boards.

Apple continued to invent and patent many technologies as it grew, but it was always reliant on other companies to provide the primary chip powering the machines, the CPU. For many years, Apple's chip supplier was Motorola, and later Intel.

Looking back, it's fairly clear that this necessary relationship with other firms -- often with companies that Apple competed against on various levels -- was not what co-founder and CEO Steve Jobs wanted. Apple was at the mercy of Motorola's or Intel's timelines, and sometimes shipping delays, which hurt Mac sales.

The prelude to Apple Silicon

Prior to Jobs' return to Apple in 1997, Apple had used a company called Advanced RISC Machines, later formalized as Arm Holdings PLC, to design a chip for the Newton handheld. Jobs promptly killed the device when he took over as CEO, despite the outcry of fans of the PDA.

Luckily, Arm got a second chance to work with Apple on the iPod project, which required lead Tony Fadell to seek a chip supplier specializing in high-efficiency, low-power chips, among other challenges. The first iPod, which debuted in late 2001, used a PortalPlayer PP5502 system-on-a-chip (SOC) powered by dual Arm processor cores.

A few years later, Apple used Samsung-designed Arm-based SOCs for the original iPhone. Apple bought shares in Arm and deepened its relationship with the firm.

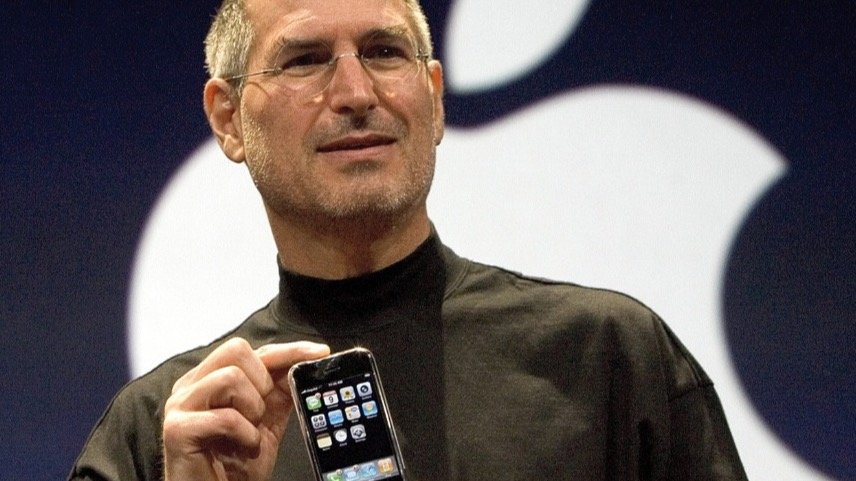

Apple co-founder Steve Jobs

By the mid-2000s, Apple's notebook computers were beginning to seriously outpace the iMac as the computer of choice for consumers. Mobile devices like the Palm Pilot had introduced a new level of access to information on the go, and the iPhone's debut cemented that desire.

It seems obvious now that Apple's notebooks would eventually need to move to much more power-efficient chips, but at the time the only place you could find such research was deep inside Apple's secret "skunkworks" labs.

In June of 2005, Jobs announced Apple's entire Mac lineup would move to Intel-based chips. The Mac had been lagging behind on Motorola chips, and sales were down again.

Long-time customers were worried about the effect of such a major transition, but Apple's behind-the-scenes work paid off with emulation software called Rosetta. It was a key technology that kept customers' existing PPC-based programs running smoothly, even on the new machines.

Apart from some early hiccups, the big transition went pretty well, and customers were excited about Apple again.

The move quickly brought more than just speed to Apple's portables: with the Intel chips being more efficient, it also improved battery life. The new Macs were also able to run Windows more efficiently, a great sales advantage to users who needed or wanted to use both platforms.

Moving towards independence

In hindsight, Jobs and his new executive team had a clear plan to strengthen Apple by reducing dependencies on other companies down to a minimum. The long-term goal was for Apple to design its own chips, and Arm's architecture became key to that mission.

In 2008, just a year after the iPhone debuted, the first prong of Apple's plan to make its own chips began to take shape. In April of that year, the company bought a chip design firm called P.A. Semiconductor, based in Palo Alto, for $278 million.

By June of that year, Jobs publicly declared that Apple would be designing its own iPhone and iPod chips. This was disappointing news to both Samsung and Intel.

Samsung wanted to retain its role as Apple's chip designer and fabricator. Intel tried hard to woo Apple into using their low-power Atom chipsets for the iPhone, and was shocked when Apple declined.

The first iPhone models used Arm-based chips designed and fabricated by Samsung. This changed in 2010 when Apple introduced its first self-designed mobile chip, the A4. It debuted in the first-generation iPad, and then the iPhone 4.

It was also used in the fourth-generation iPod Touch, and second-generation Apple TV. Samsung was still used to fabricate the chips, but not for much longer.

The following year, Apple began using Taiwan Semiconductor Manufacturing Company (TSMC) to fabricate the chips independently. Apple continues to use TSMC as its chip fab to this day, often buying up its entire production capacity of some chips.

The two big factors in Apple's favor in using its own chips are all about control and customizability. They give Apple a significant advantage over rival mobile chips from Qualcomm and Samsung.

First, using Arm's RISC template, Apple now has complete control over the design of the chips it uses. This means it can add, change, and optimize chip features -- like the Neural Engine -- when it needs to, for example, rapidly increase its machine-learning and AI processing capabilities.

Secondly, Apple's chips -- whether for its mobile devices, Macs, or other products like the Apple TV -- are in harmony with the software and operating system used. This is a factor that most Android-based device manufacturers can't compete with.

Google has started designing its own chips for its own Pixel line of smartphones, but Samsung now relies on Qualcomm chips for its mobile devices, which aren't customizable to Samsung-specific features. The same is true for other Android device makers.

This is why Apple's chips usually match or beat rival systems even when the rivals have more RAM, more processor cores, and other seeming advantages. Apple can integrate software innovations with hardware features -- such as optimizing battery life -- on a level almost nobody else can.

On to the Apple Silicon Mac

As early as 2012, rumors began circulating that Apple was planning to eventually introduce Arm-based, Apple-designed chips into its Mac notebook line. It seemed like a logical extension, given the success of the iPhone, but it would likely take years of dedicated engineering work.

It started to become obvious, though unannounced, that such a transition was already in the works.

Apple's developer transition kit for Apple Silicon

As AppleInsider mentioned in 2018, pretty much all smartphone makers, and even Microsoft, had embraced Arm architecture by this point. The rumored transition away from Intel would ironically be as smooth as the migration to Intel had been in 2005.

Sure enough, in 2019 Intel finally got the official word that their chips would soon be no longer required for the Mac. By this point, Apple was on the A12 chip for the iPhone, and its benchmarks suggested that it was desktop-class in terms of performance.

On June 22nd, 2020, Apple CEO Tim Cook announced at WWDC that Apple would move to Apple Silicon chips, designed in-house by Apple itself, over the course of the next two years. Cook stressed that the machines would be able to run Intel-based Mac software using "Rosetta 2" to help with the transition.

Apple CEO Tim Cook in front of a MacBook Pro

The first M1 Macs -- the Mac mini, 13-inch MacBook Pro, and 13-inch MacBook Air -- arrived in November of that year. The Mac Pro was the last machine to make the leap, skipping the M1 generation entirely and finally debuting last June with a new M2 Ultra chip on board.

Apart from the delayed Mac Pro, the rest of the Mac line had moved into the M-class chips within the promised two years. The "growing pains" of moving to Arm-based technology were much more minor than in transitions past.

Users responded very positively to the M1 Apple Silicon Macs, praising the huge boost in speed and power that the line brings compared to the Intel-based machines of 2020. Intel and AMD have worked hard to catch up, but Apple Silicon is now the benchmark by which other consumer machines are measured.

How Apple Silicon keeps its competitive edge

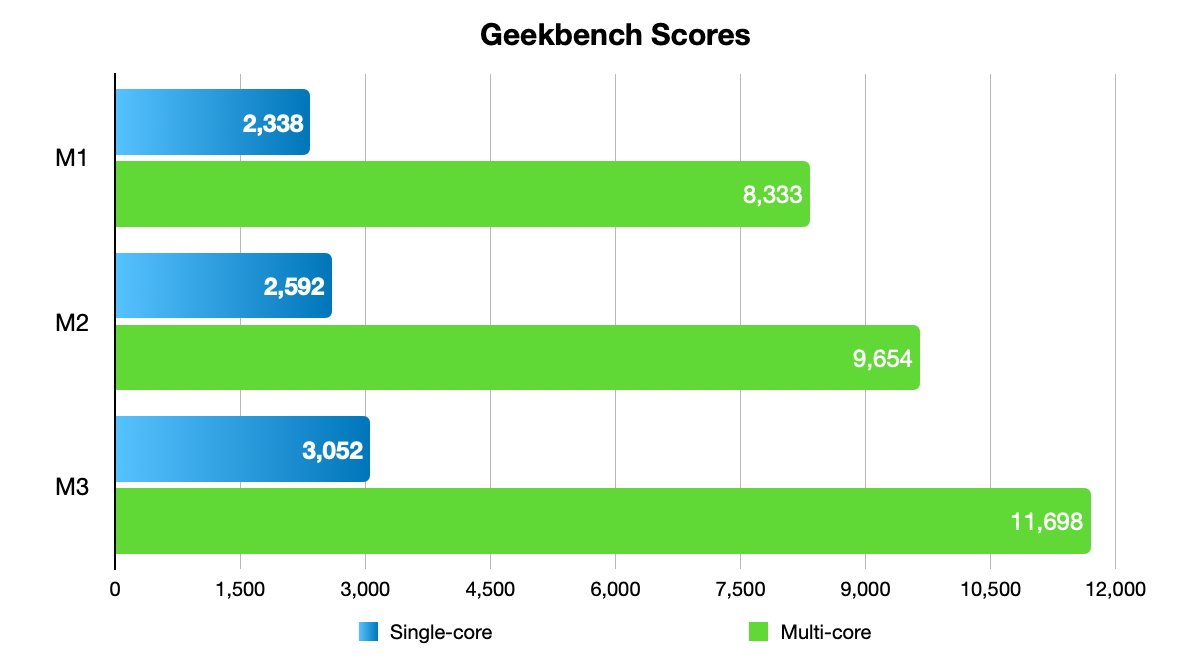

Since the M1, Apple fans have gotten used to routine double-digit improvements every year or so, both in comparing the most recent base M-class chip to its successor and in comparing each variant to the one below it, and then again when a new set shows up.

It can't last forever, but Apple's ability to design chips perfectly customized to its own hardware has proven to be a major advantage.

This means Apple's chip designers can give more emphasis to areas that will need more attention, and we'll be hearing much more about that this coming June. Apple will undoubtedly be expanding the speed, computing power, and complexity of its existing Neural Engine in its next chips, to accommodate the greater use of machine learning and AI technologies.

We've previously noted that Apple's MacBook line maintains the same high speeds regardless of whether the machine is on battery or mains power. Most PC laptops only run at their best speed when plugged in, with noisy fans blazing.

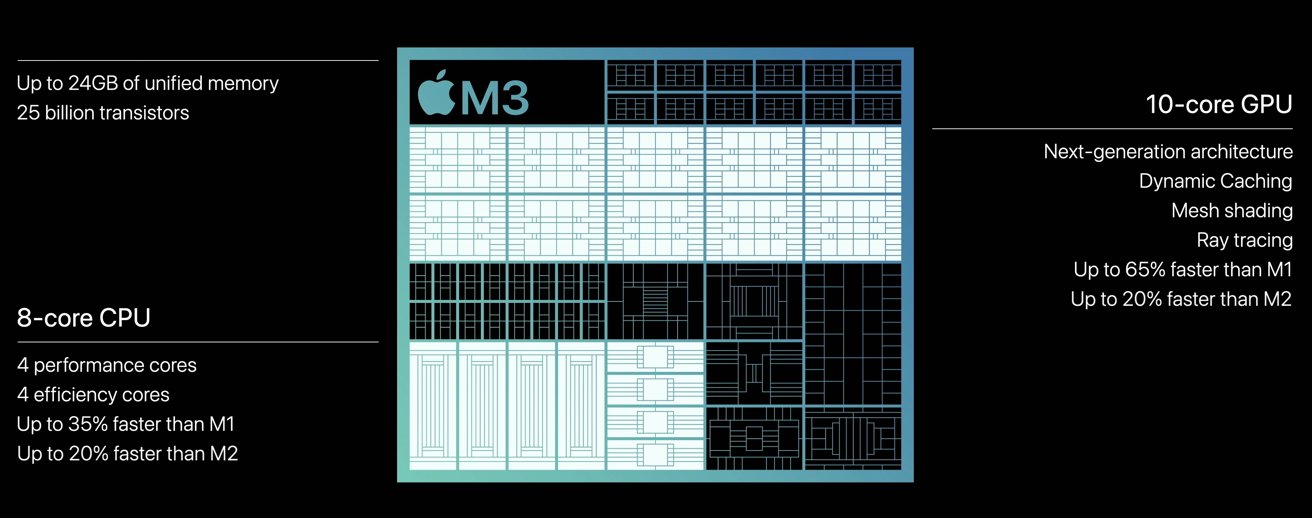

Graphic representation of M3 chip with performance statistics, including CPU and GPU core counts, transistor count, and comparisons to M1 and M2 chips.

YouTube reviewers of the new MacBook Air models have noted, with some astonishment, that even "pro" apps like Photoshop, Lightroom, and Final Cut Pro run just fine for light- and medium-duty jobs -- on a machine that doesn't have a fan. This would have been a nigh-inconceivable notion to these reviewers even two years ago.

Credit should be given to other chipmakers who have made heroic efforts -- albeit not entirely successful -- to catch up. Qualcomm, for example, has brought out the Snapdragon 8 Gen 3, which aims to take on the A17 Pro used in the iPhone 15 Pro and Pro Max.

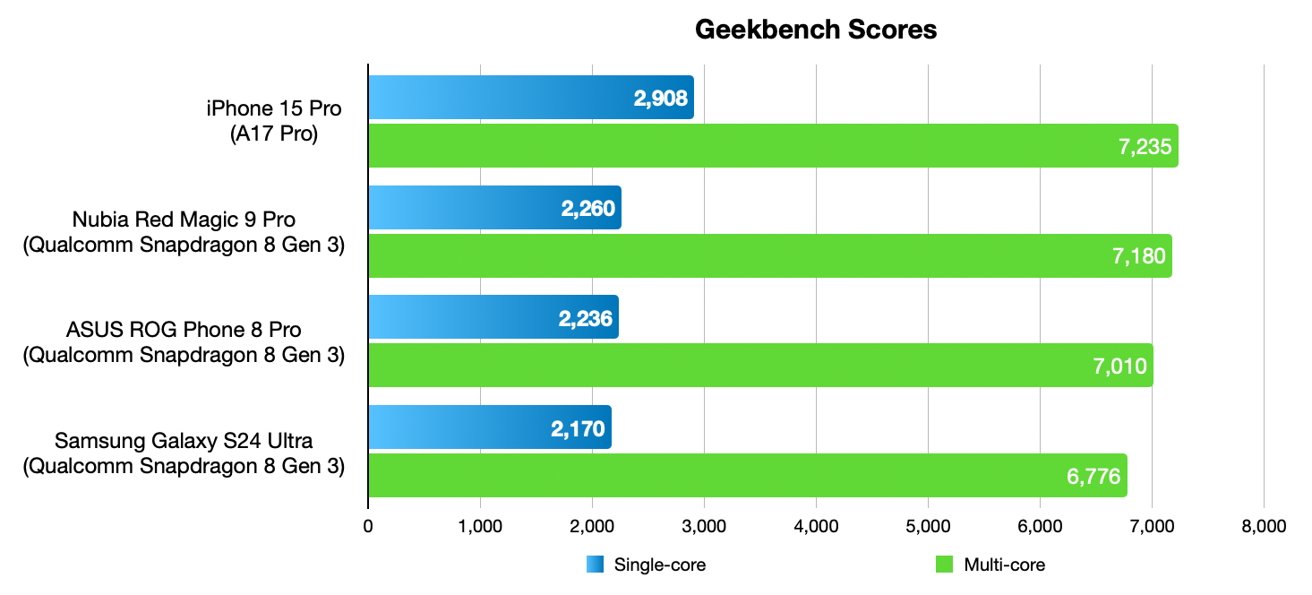

The A17 Pro and M3's nearest current competition: Qualcomm's Snapdragon 8 Gen 3

This is fine, except for the fact that the iPhone 16 will be coming out in the fall, with a new chip that will likely continue the now-tradition of double-digit increases. In particular, we'll note that GPU performance in the M3 has increased 50 percent over the M1 in just three years.

Apple's other big advantage, especially in portable devices, is energy efficiency, which leads to world-beating battery life.

The Snapdragon 8 Gen 3 features eight CPU cores with a mix of performance and efficiency cores, similar to Apple's approach. Snapdragon 8 Gen 3's graphics are handled by the Adreno 750 GPU.

The improvement in the Snapdragon chip from its predecessor is truly impressive. But, in post-release benchmarks, Qualcomm's so-called Apple-beater doesn't quite live up to the hype.

Geekbench benchmark results for the iPhone 15 Pro and smartphones using the Snapdragon 8 Gen 3

Geekbench single-core and multi-core results for new devices like the Nubia Red Magic 9 Pro, the ASUS ROG Phone 8 Pro, and the Samsung Galaxy S24 Ultra get close to what the A17 Pro offers in terms of single-core and multi-core processing. Except "close" is not good enough.

The future's so bright

It's easy to throw massive amounts of power at a chip to make it more performant. It's still astonishing that Intel, AMD, and now Qualcomm's very best consumer-grade desktop and laptop chips struggle to break even with Apple Silicon at the same power-sipping levels.

The M3 typically surpasses them all in single-core tests, and Apple's current chips already come in a very close third to Samsung and Qualcomm's current chips in AI and Machine Learning benchmarks.

Geekbench score changes for single-core and multi-core tests of the base M1, M2, and M3 models

And all of this is before the big AI/Machine Learning boost we expect in the A18 Pro and M4 chips. It's worth repeating that Apple accomplishes all this while offering significantly superior battery life -- vital for smartphone and notebook users -- and in nearly all cases, the same level of performance on the battery as when plugged in.

To be sure, Apple's everything-on-one-chip design has some weak spots for some users. RAM and the on-board GPU can't be upgraded later, and the chips themselves aren't designed to be upgradeable within a given machine. These are advantages the other computer makers can offer that Apple cannot.

Apple also doesn't seem to care about how to integrate eGPUs and other PCI graphics options, even in its Mac Pro tower. It's likely not a high priority for the company, given the low sales of dedicated desktops these days, but it hurts those who really need it.

Apple has recently added more graphics capabilities for the M3 generation, such as ray tracing and mesh shading. This has helped close the gap -- a little -- between Apple devices and PCs with dedicated graphics cards.

Introducing the M4

Given the industry shift to what Apple calls "machine learning" and the rest of the industry shortens to "AI," the forthcoming M4 chip will likely play catch-up in that area. Apple's next-generation chips will likely be announced at WWDC, and begin appearing in new Macs in the fall.

Expectations are that the M4 will be more than just an improvement on the M3, featuring a redesign to accommodate a larger Neural Engine and various other AI enhancements. As with the previous generation, the M4 will come in at least three variants.

The base M4, used in products like the Mac mini and entry-level MacBook Air, is codenamed "Donan." The M4 Pro is currently codenamed "Brava" and will be used in updated higher-end configurations of the MacBooks and Mac mini.

The next version of the Mac Studio is likely to use an M4 Max, while the Mac Pro will eventually be revised to get what is likely to be called the M4 Ultra, codenamed "Hidra." Indications are that the M4 family will boost processing capabilities significantly as part of its "AI" upgrades, even though it is expected to still be created using the 3nm process.

The bottom line

This era marks the second hugely successful transition for Apple that grew out of its Newton and iPod days. Steve Jobs, Tony Fadell, and many others at Apple deserve a lot of credit for their early faith in the potential of Arm-based chips.

In the areas that the vast majority of computer users care about, Apple has become the industry standard to try and beat. There are still some areas where both Apple and its chip rivals could stand to improve, but the competition between them is good for everyone.

Now that Apple has finally had a taste of being on the high end of consumer-level computer performance, it's unlikely that they will ever go back. The heads of hardware development at Apple frequently frame their discussions about the future in optimistic terms, implying an expectation of future innovations for years to come.

This has also been a remarkable journey for Arm, originally leveraging RISC to design infrastructure for efficient handheld devices.

Arm designs are now the basis for all mobile device chips, as well as tablets from Apple, Android, and Microsoft, and of course Apple's notebook and desktop computers.

The current hardware team at Apple has described itself as "giddy" to be able to design custom chips tailored to the particular needs of each of Apple's devices. It is an ability the team never would have had with other chipmakers.

Both classes of Apple products have seen big leaps in capability, right alongside massive improvements in battery life, as a result.

Read on AppleInsider

Comments

The M series chips we have today would not exist without a decade of blunders by senior executives at Intel.

Also, the PPC to Intel transition was not the first heart transplant for Apple; back in 1994 it abandoned Motorola’s 68xxx product line that it started using 10 years prior with the Macintosh and transitioned to PowerPC, and that transition was incredibly smooth and well executed. A 68k emulator was provided and legacy code was supported, and software makers were able to ship “fat binaries” that ran optimally on both architectures.

The transition to OSX/macOS, which is at the core a reskinning of NeXT OS, brought with it NeXT’s own experience in supporting multiple CPUs (it initially shipped on 68k but eventually supported Sun SPARC, HP and Intel) and made the transition from PPC to Intel painless. Apple’s know-how in the field is unique and spectacular.

Apple licensed Imagination IP, for how long I don't know, but the current designs are entirely Apple's, which makes sense since the GPU cores are fully integrated into the M Series SOC architecture, as well as the A series.

If you have a link to support your statement, this would be a great time to respond.

Granted, the 24 GB of embedded RAM probably doesn't hurt things, but my initial impressions are very positive.

I wouldn't say Apple’s know-how in transitions is unique in a general sense. The original Rosetta solution was licenced from a company using technology from the UK. It wasn't an Apple creation.

Designing your own solutions for emulation based on knowledge of your own chip designs is normal though.

I'd give Apple a 'good enough' grade for its transition solutions/implementations. I believe the transition from 68k had FP limitations and so did Rosetta (don't quote me on that though) but by far Apple's biggest problem with transitions has been support (backwards compatibility). They want everyone off one ship and onto the other ASAP and have no qualms in leaving people behind by dropping support. If the Intel transition was completed in 2008, dropping Rosetta in 2010 was too soon IMO. Especially for a company with so much cash available.

Good for Apple but not so great for older users.

It's the number one reason Apple has never been an option in certain areas. Fintech for example.

It might be beneficial if you're always on the latest options and of course it's a classic way to push users onto new hardware but for users it is tiresome and no transition is ever headache free and pushing developers doesn't always work because there are cost and resources constraints to take into account.

I can't speak about the Apple Silicon transition because I have no experience with that but I imagine the same headaches will exist and the same 'cut off' issues will arise sooner rather than later.

So 'relatively smooth' would be a decent description but definitely not 'buttery smooth'.

Hopefully the architecture transitions are done with for a long time to come.

But you never really know.

In terms of OS transitions there was huge push back on the Blue Box. OSX was for 'the next 15 years'. Are we now going to see a major overhaul of the foundations of macOS?

The real problem is how Apple decided to entomb the Mac to deliver performance from Apple Silicon. The performance comes at the cost of an Apple Tax on memory and storage. These computers are fast, stupid, and costly. I do hope Apple will make devices upgradeable. We will probably never get the keyboard from the Pismo back or be allowed to swap network tech again, but Apple should design beyond glue.

3DMark Solar Bay tests some of the latest graphics features such as ray tracing.

I'd also add that Qualcomm hasn't included their NUVIA cores (Oryon) yet either. These will be in both their upcoming Snapdragon X Elite (laptops) and Snapdragon 8 Gen 4 (smartphones).

They already close the gap with Apple by a good margin (shown Oct 2023):

In reality, Apple Silicon has made this worse. Motorola to PPC and PPC to Intel were much smoother transitions. Apple transitioned all Macs to Intel in 270 days. Apple Silicon took THREE years. The Intel Mac Pro was superior to the Power Mac G5 in every way. The Apple Silicon Mac Pro is $3,000 more expensive with zero expansion except for SSD cards. Fixed memory and a fixed GPU, in a Pro tower. It is embarrassing. Apple burned themselves with that one. It is as bad as the 2013 Mac Pro with zero expansion.

Apple makes their own chips, but they cannot even put the current chip in all their products. They shipped a 15" MacBook Air with a CPU that was a year and a half old (M2), and called it new. The iMac, used to be their top selling Mac, had an outdated M1 chip for three years. The Mac mini didn't get upgraded to M3 or M3 Pro. The Mac Studio did not get upgraded to M3 Max or Ultra. What are they waiting for? Why is the mini and Studio ignored? Why didn't they also get the M3? The MacBook Pro with M3 Max is faster than the Mac Pro in a few benchmarks. The Mac Pro should have been upgraded to M3 Ultra for a blazing fast Mac. Nope. The iPad has an M chip, but iPadOS cannot take advantage of it.

Apple is taking more of a hit on sales now more than ever. No one wants to buy a new Mac because the chip is outdated, or they wonder when that Mac will get the latest chip, when it is already available in other Macs, but not the Mac they want. Now there is talk of the M4 coming soon? So now no one will spend top dollar for a Mac with an outdated CPU, especially the mini or the Studio. Many consumers also despise Apple for the very inflated memory and SSD storage upgrade prices. Now you have to buy the upgrades from Apple at very inflated prices because Apple now knows you cannot upgrade later with less expensive options. That is what Apple Silicon gave us. The chips are fast, but not many are happy with the inflated upgrade prices or weird CPU options in different Macs, some new CPUs, some outdated CPUs. It doesn't make any sense. They claim they now control everything, but their decisions don't reflect that. That is the reality.

For me though it is the battery life that is exceptional. There is a game I play which is battery intensive. The old machine would get hot and the battery only lasted an hour. Now I can play up to 4 hours and still have battery left. The same for watching videos, the battery lasts a lot longer on one charge.

This machine is over 2 years old and still going strong. I would love to get an M3 or M4 when it comes out but I really can't justify the new spend when this machine works so well. Amazing.

Apple is right here. Artificial intelligence is far broader than machine learning, broadly summarised as 'the simulation of successful, external human behaviour'. Neural networks are one tiny tool in the vast field of AI. Machine learning lies in the sub-field of AI classed as 'sub-symbolic techniques', along with evolutionary algorithms (e.g. genetic algorithms). Most AI is symbolic and nothing to do with machine learning.

One minor nitpick.... in the sentence:

"The first iPod, which debuted in late 2001, used a Portplayer PP5502 system-on-a-chip (SOC) powered by dual Arm processor cores."

It is "PortalPlayer PP5502", not "PortPlayer PP5502".