MacBook Pro Touch Bar could be revived as a strip that supports Apple Pencil

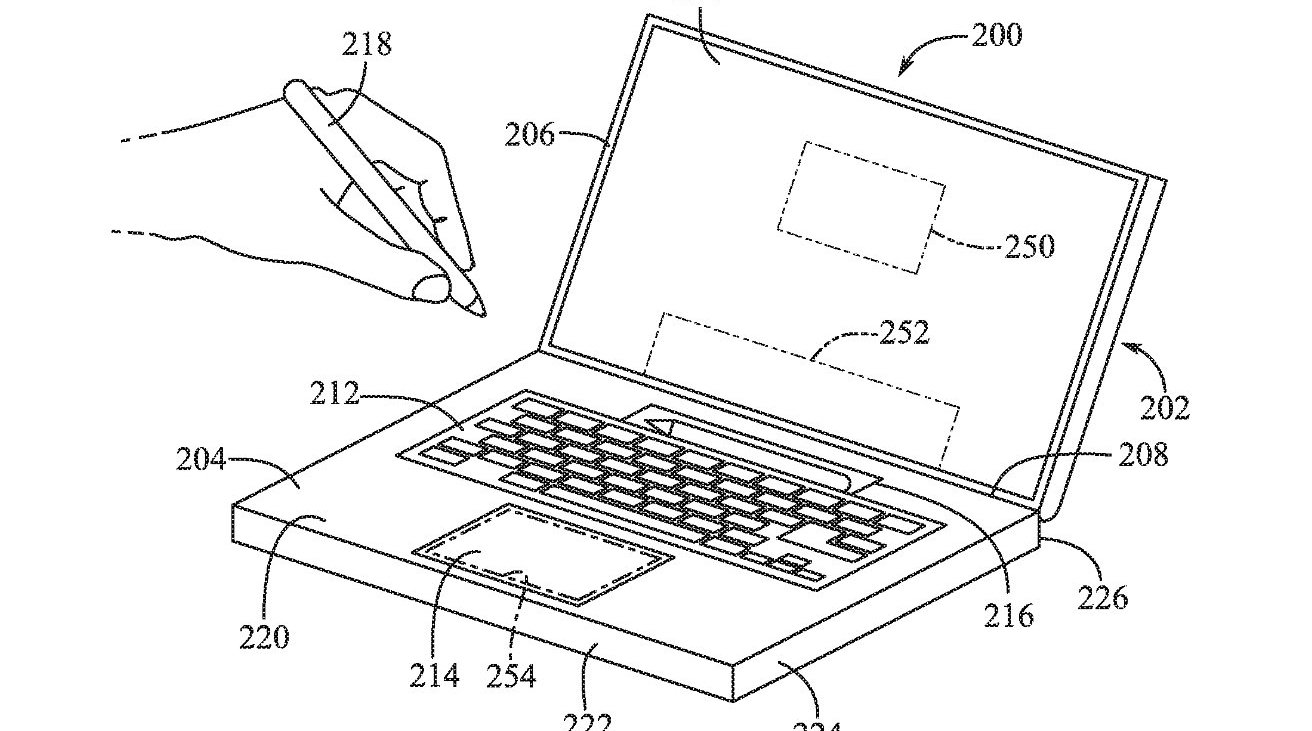

Apple keeps researching how to have the iPad-centric Apple Pencil do the work of the old Touch Bar on the surface of a future MacBook Pro.

The patent doesn't appear to rule out a touch screen Mac, but it's focused on the Touch Bar-like strip

It's just a patent application, and not only may it never be granted, it may never lead to an actual product even if it is. But this patent application, made public on May 9, 2024, is an expansion of a series of previous ones -- including some that have actually been granted.

So this is not a skunkworks project by a few Apple people, it is a project that has, at the least, been under continuous development. Mildly hidden under the dull title of "Mountable tool computer input," it's really about how an Apple Pencil could be used with a MacBook Pro.

That sounds as if it obviously means how it could be used to draw and write on a MacBook Pro screen. But perhaps with a mind to Steve Jobs's famous criticism of how "you have to get 'em, put 'em away, you lose 'em, yuck," it is more about storing the Apple Pencil.

There's a chance that Apple is only thinking of a MacBook Pro for very tidy people, as just like the previous versions of the patent application, this new one talks of people having a separate device for drawing on.

"[Some] computing devices, such as laptop computers, can have a touch screen positioned in or adjacent to a keyboard of the device that can be configured to provide many more functions than a set of traditional keys," begins the patent.

"However, an ancillary touch screen can be difficult to use in some cases," it continues. "Touch typists may dislike using the touch screen because it lacks tactile feedback as compared to a set of mechanical, moving keys."

Specifically, the idea is that users would use the Pencil in at least roughly the area where Apple used to include a Touch Bar.

"The touch screen is also generally positioned near the user's hands and therefore may be prone to being obscured from the user's vision by their own hands," says the patent. "Also, even when the user looks at the touch screen, it is positioned at a different focal distance from the user as compared to the main display, so the user must readjust their head or eyes to effectively read and interact with the touch screen..."

It's a wonder Apple ever bothered with a Touch Bar. Yet the company wants to do something with that space, and it persists. The Bar may be replaced by a touch panel.

"The touch panel may include a touch-sensitive surface that, in response to detecting a touch event, generates a signal that can be processed and used by other components of the electronic device," continues Apple. "A display component of the electronic device may display textual and/or graphical display elements representing selectable virtual buttons or icons, and the touch sensitive surface may allow a user to navigate and change the content displayed on the display screen."

None of this would immediately seem to fix Apple's criticisms of the Touch Bar. A user would have to break off typing, look for the Pencil to pick it up out of the holder, then write or tap with it on the touch sensitive strip.

A stylus may be more natural than a Touch Bar

Yet that is more natural than the Touch Bar. While it stops the user typing, it feels more natural to look away from the screen to find the Pencil.

And rather than trying to remember a control that is a small spot on the Touch Bar -- which also moves -- picking up a Pencil is a lot easier.

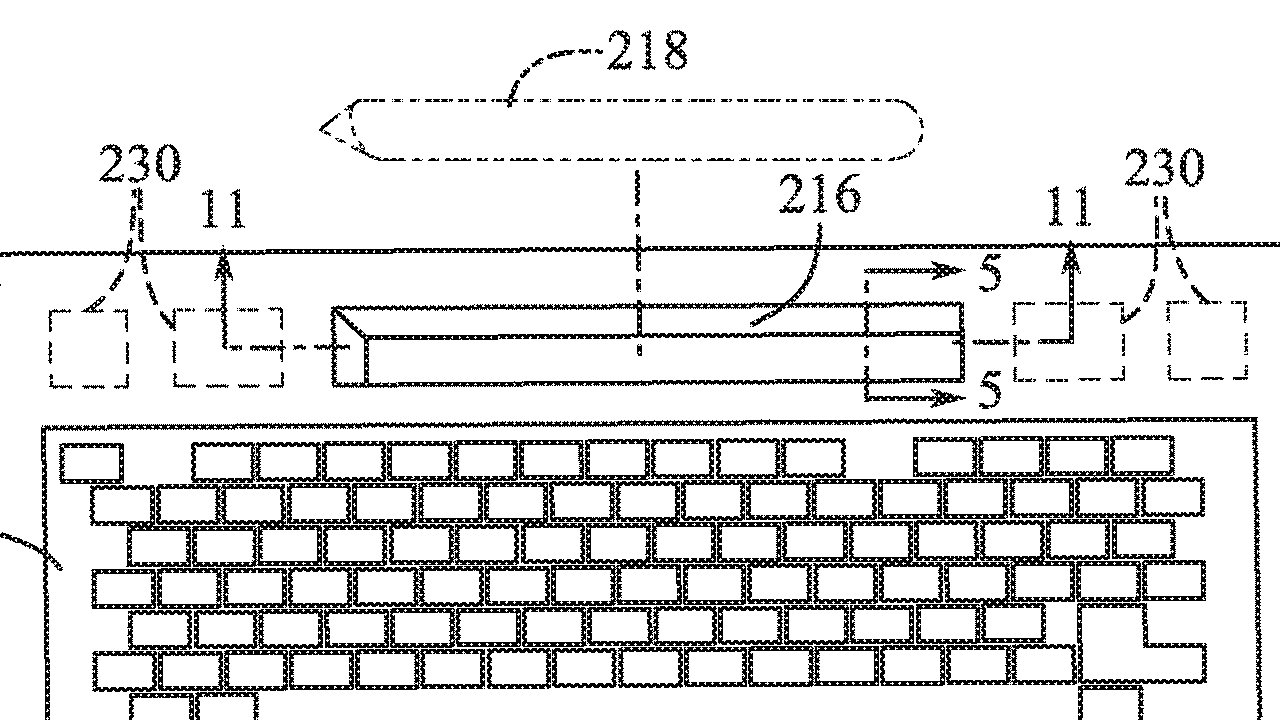

Detail from the patent showing a housed Apple Pencil being swiped across

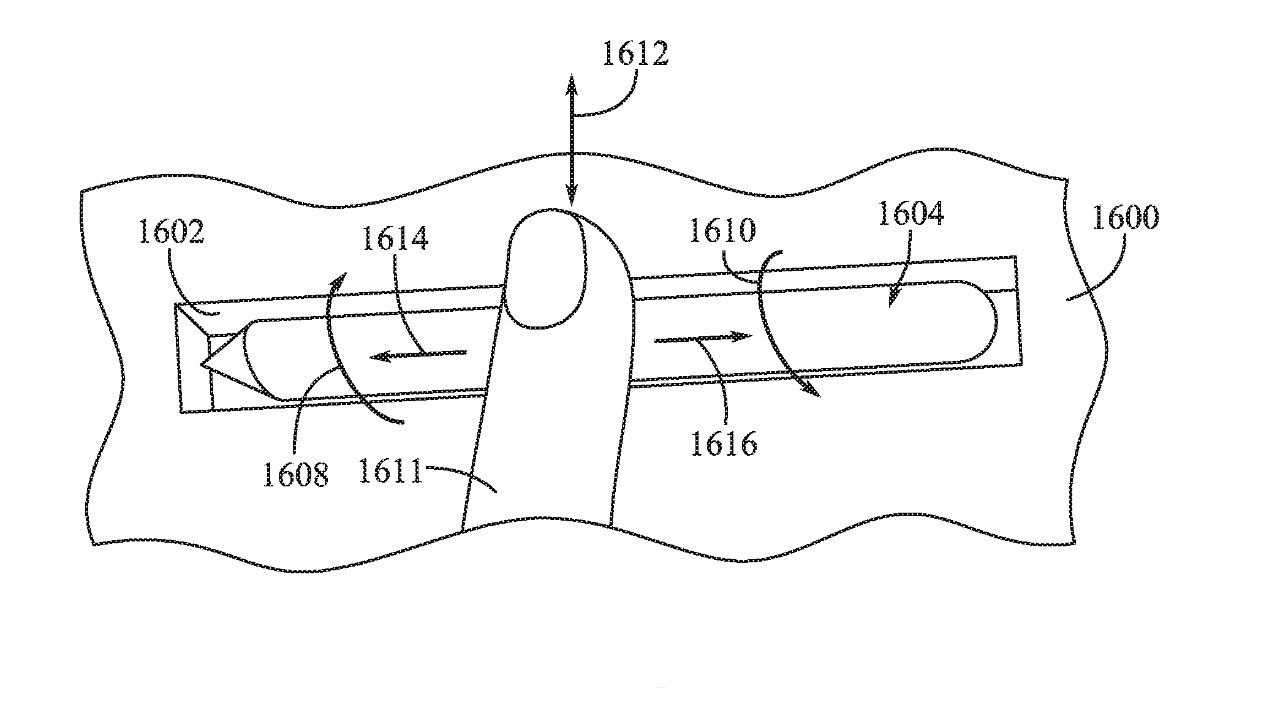

There is one more thing, though. In a few patent drawings, a user is shown tapping on the Pencil, or touching it, or swiping across it.

The Apple Pencil could then show Touch Bar-like controls while it's in the holder.

This patent application, like its previous granted versions, is credited to Paul X. Wang, Dinesh C. Mathew, and John S. Camp. Wang is listed on many previous patents for Apple, including more on user input devices.


Comments

I'm not convinced. Part of the Touch Bar's downfall was that it didn't do anything that standard macOS features ('cos not everyone has a Touch Bar) didn't do at least as well: I can't see this being any different.

For me function keys are completely useless and never used.

Like the control key, function keys are only necessary for Windows compatibility.

Apple’s trackpads are unusually large. It’s conceivable that certain regions of their massive trackpad could be used on-demand and in an app-centric context to place additional controls directly on the trackpad, e.g., a vertical or horizontal slider/fader along an edge of the trackpad that would pop up when the user selects an on-screen control that requires a variable value. Using the trackpad as both a control and display surface may make sense, or it might be a complete disaster, i.e., Touch Bar Fail v2.0.

I’m actually not trying to suggest any kind of UI concept, all I’m saying is that Apple has painted themselves into a corner by insisting “fingers off” when it comes to the primary display surface. Adding a secondary display surface with touch by eliminating the function keys didn’t work out so well in the long run. Doing something similar with the trackpad may be a repeat of that previous failure.

Popping up touch controls, fully in-context with an app’s workflow, directly on the screen and within the un-averted sight lines of the user, is an obvious solution to the problems the Touch Bar was trying to solve. I hate fingerprint smudges as much as anyone does, and I’ll never like typing on glass, but how many more crazy ways of dancing around the “no fingers on the screen” edict will Apple have to come up with before they finally admit that the obvious solution is probably the right solution?

To avoid some of the complaints about the previous Touch Bar, it need not replace the top row of control keys, and it could be located elsewhere, for example by (or on) the touchpad on a MacBook, or on a bezel below the actual screen.

Signed,

An Anonymous Member of Apple's Post-Jony Ive Design Team

Apple doesn't want to implement touch on the MacBook Pro screen because

a) macOS is not designed for touch. Tapping at menu-driven options with your finger doesn't work well because, for instance, menus are small, your fingers aren't and your hand would always be in front of things you need to see. Now that's something that will interrupt your workflow.

and

b) macOS is designed to run not just MacBooks, but desktop Macs all the way up to Mac Pro. Doing something like changing macOS to look more like iPadOS to improve a touch experience on a MacBook Pro would degrade the UI experience on desktop Macs, which would never be ideal for a touchscreen experience. There are already ergonomic and engineering issues with tapping at a MBP screen, but desktop Macs use larger screens at varying distances and elevations and in varying screen multiples that would make trying to tap away at them with your finger a complete ergonomic mess. That problem then leads to the idea of having both a touch-optimized UI as well as a cursor-optimized UI, depending on the user's current interaction - in essence a hybridized macOS and iPadOS. That of course describes Windows, the all-things-for-all-people-on-all-occasions operating system that has for decades served as a great example to remind Apple what not to do.

Anything that Apple does that takes your attention and focus away from the work being done on-screen is only going to reduce productivity. But I think that Apple has actually articulated a possible solution to the problem that addresses many of our concerns, including losing focus by having to look down at the keyboard area, clumsiness and fatigue of using touch screens, fingers/hands blocking the view and sightline to onscreen elements, and a lingering need to visually navigate around on-screen menus using pointing devices that move your focus. We need to look no further than the Apple Vision Pro to consider some potential ways of dealing with user focus, UI control, text selection, etc.

If Apple could bring some of the eye tracking, finger tracking, and voice control innovation from Vision Pro and other products to their intelligent displays they could potentially provide a way to coordinate a user's focus with the control of specific on-screen actions. For example, if the user focused on the start of a block of text or selectable content, they could invoke a voice command to initiate an action, like "Begin Selection" (or "Control KB" for WordStar fans), move their focus to the end of the block to select and say "End Selection" (or "Control KK") followed by the action word "Copy", "Cut", "Delete", "Move", "Make Bold", "Make Italic", "Underline", etc. The user would then move their focus to the insertion point and give the action command, like "Paste", or say "Menu", to bring up a choice of options including things like "Mail", "Print", "Send to", etc. With a keyboard key tied to the action, the user's focus would never leave the screen. Same thing with accessing menus or form controls, i.e., use eye tracking and either audible commands, finger clicking (would require hand tracking like on Vision Pro), or quick keyboard shortcuts overlaid on an on-screen popup list, to initiate the action, or to revert a previous action with an audible "Undo" or by visually clicking a main toolbar or a context-aware floating toolbar containing action buttons, much like what is used on Vision Pro and Apple Watch. If the user wanted to empty the trash they would focus on the trash bucket and say "Empty the trash". Likewise things like "Open Folder", "Rename Folder", "Create Folder", and similar file management functions could be done visually with voice, focus based context menus, or using keyboard shortcuts. In a large office you probably don't want everyone blabbing away, so all commands would have a non-audible equivalent.

The goal here is to provide a "virtual touchscreen" or "visual touchscreen" that does not require physically touching the screen. Action controls that were previously delegated to the Touch Bar could be overlaid on the monitor's display surface (perhaps with translucency) and controlled via voice, virtual clicking, or keyboard shortcuts. Controls could be provided for scrolling left/right, VCR style controls, up/down numerical controls, etc., all of which use the voice recognition, visual control, and eye tracking and hand/gesture tracking built into the display itself. This would definitely require adding a lot more intelligence to Apple's displays, not only the built-in ones but also Studio Display and Pro Display XDR. Finally Apple would have a way to encourage monitor buyers to go with Apple's "Intelligent Displays" rather than the dumb/generic displays from third party vendors that only provide a display surface. Much of the required functionality could be put into the intelligent display itself. Hopefully the functionality would be orders of magnitude better than Apple's current Studio Display. I thought the Studio Display had at least some of the guts of an iPad, but somehow all of its processing potential has not been utilized to any great extent.