tht

About

- Username: tht
- Joined
- Visits: 195
- Last Active
- Roles: member
- Points: 8,021
- Badges: 1
- Posts: 6,020

Reactions

-

Apple ends its Qualcomm dependency with the new C1 modem chip

"When Apple launched the first iPhone in 2007, Qualcomm's modem chips were a critical component. The collaboration seemed straightforward -- Qualcomm provided the technology, and Apple integrated it into its iPhones."

This is incorrect. Apple used Infineon modems from 2007 to about 2011, when the Verizon iPhone 4 came out. Qualcomm had a patent lock on CDMA cellular technologies in the USA, with Verizon being a big adopter. If an OEM wanted its phone on Verizon, it had to use Qualcomm modems. Qualcomm was also a big contributor to LTE networking, and it continued to have a patent lock in the USA, so most OEMs needed to use its modems there.

Infineon couldn't transition from 3G to 4G (HSPA, LTE) on its own? It ran into trouble? Intel bought out Infineon's wireless business and tried its hand at modem chips.

"But for Intel, the outcome was bleak. Since Apple was its only modem customer, the new arrangement meant Intel was out of the picture."

By 2019, Intel knew they were cooked. Intel's fabrication process advantage was a crutch for their chip designs. They always counted on it to recover from their design mistakes, either by enabling them to make the cheapest chips, chips with the most margin, or simply using a lot of power to maintain performance. If they had a good design, like Yonah and the Bridges, they dominated. By 2019, however, TSMC had overtaken Intel in fabrication technology, with Intel having failed for about 4 years to get its 10nm fab to mass-produce chips.

Chips are only as good as the fabrication process they are made on. To stay competitive with chips fabbed on a leading-edge node, a chip has to be no more than half a node behind. If it is, say, one full node behind, the numbers are bleak: half the number of transistors, power consumption 30% to 100% higher, and cost per chip 50% to 100% higher.

Without being able to compete on fab with TSMC (and even Samsung has caught up), a power-sensitive chip like a cell modem just isn't going to be competitive. It's not hard for a CEO to look at the basic facts of what their fab can do, what their competitors' fabs can do, and begin to jettison all the chips that they know won't be competitive. Modems were definitely in that category. x86 had a lot of legacy while being able to tolerate bigger power envelopes, but modems? No.

Apple has the advantage of having a great relationship with TSMC, a very symbiotic one, and can use TSMC's fabs for modem chips.

Intel's other issue was a business one. They wanted 60% margins for their chips. Cell phone SoC and modem chips are targeted to cost about $30 to $50 to OEMs. Intel was in the business of selling $300 to $500 chips to OEMs. Their business model debt blinded them to where technology was going. And oddly, they saw things like HPC on a card and AI accelerators coming for a long time. They just couldn't execute on the chip design side for high-margin chips either. So, fuckups all around for Intel.

"The C1 modem is significant as Apple's first in-house modem used in a production iPhone."

It would be interesting to hear the story, or read the book, on what Apple was trying to do from 2019, when they bought Intel's modem assets, to the shipping of the C1 modem in the iPhone 16e in 2025.

Did they start from a blank piece of paper for a new modem design? 5 years isn't a bad timeline for a modem chip going from nothing to shipping millions. Or did they plan on modifying an existing Intel design? At a minimum, it had to be reworked from being fabbed on Intel 7/10nm to TSMC 4/5nm.

There will be patent royalty lawsuit campaigns to come from patent pool holders.

-

Apple's C1 modem signals the end of its Qualcomm dependence

Takes a bit of study here:

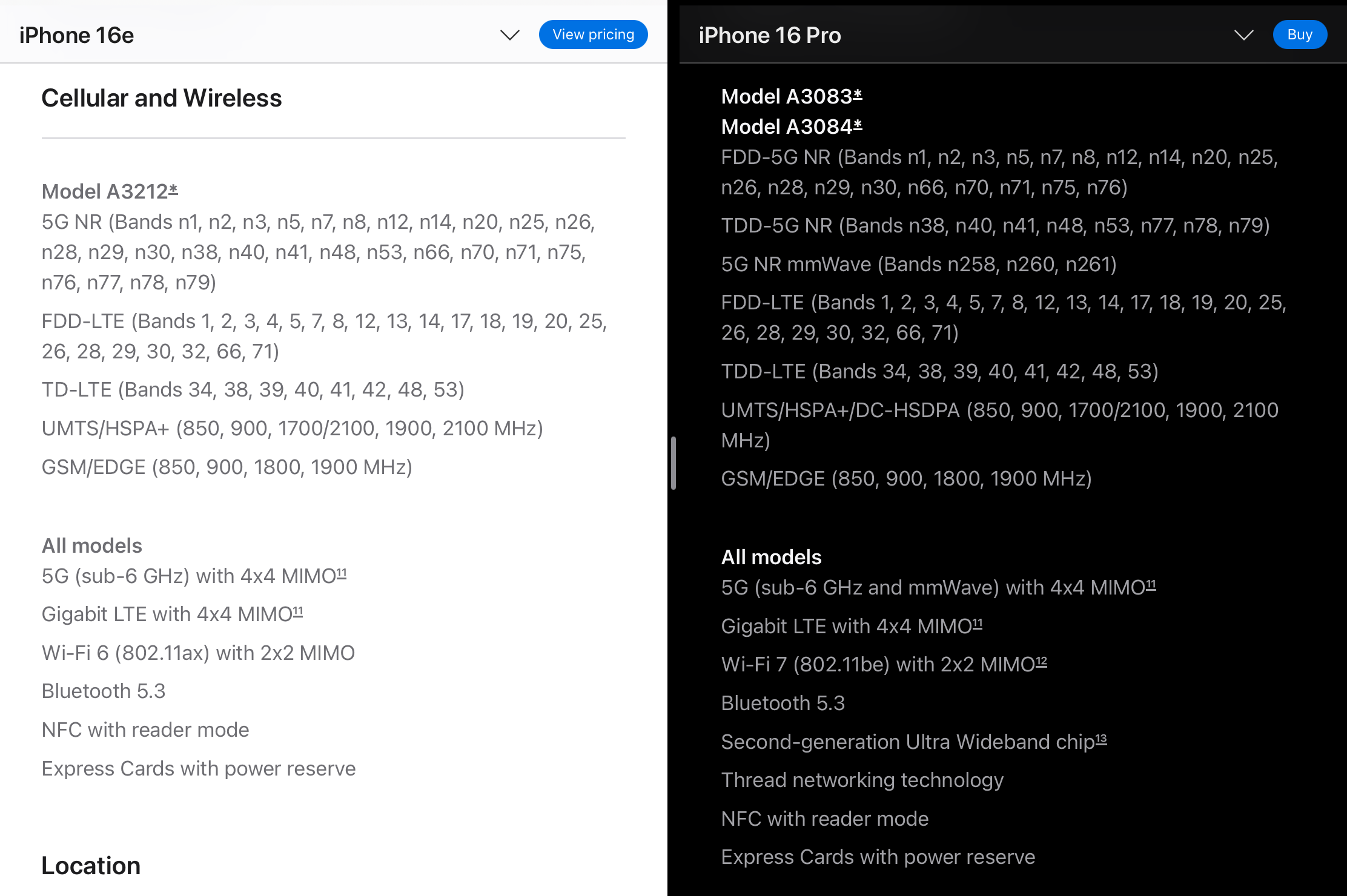

5G band n53 is the GlobalStar sat-comm band. No UWB in the iP16e. Apple's cellular modem has about 8 more 5G bands supported than the iP16P. GPS is single band.

The single most important thing about Apple's C1 modem is power efficiency and performance at low signal levels. Hopefully tests will be done.

-

New Apple Studio Display rumors: MiniLED, ProMotion, and more

"The current backlighting system of the Apple Studio Display uses rows of LEDs, which shine through a TFT LCD layer. For a second-gen model, Apple is believed to be switching the tech used for backlighting from LED to MiniLED."

The typical TFT LCD should be characterized as having a monolithic backlight. There is a display-sized chamber that forms the backlight. LEDs on the edge of the display light up the chamber. The chamber's job is to turn the light from the rows of LEDs on the edge into a uniform backlight across the entire LCD. The matrix of LCD pixels (red, green, blue crystals) and the film layers then turn the backlight into pixels of color.

To create high contrast visuals, the LCD crystals have to block the backlight for black while letting as much light through as possible for white. There is the same amount of light under both black and white areas of an LCD display. Blocking the light completely for a deep black is not possible for a TFT LCD and it really ends up being a dark gray.

"The idea is that Apple could use many hundreds of MiniLEDs for the backlighting, allowing for a more even level of light being emitted to the user's eyes. With the strategic use of localized dimming, the display could feasibly make sections of the screen much darker than an LED backlight could manage, allowing for much deeper blacks and dark shades of color to be used."

To me, local dimming or FALD is TV-oriented marketing and doesn't say what Apple is doing with miniLED. Apple doesn't really employ these features in the way a lot of TVs implement them. Instead of a monolithic backlight across the entire display, Apple's miniLED implementation discretizes the monolithic backlight into 576 (?) backlights for the Pro Display XDR or over 2500 for the iPad Pros and MacBook Pros, arranged in a grid behind the LCD. For the iPP and MBP, there are 4 LEDs in each of those backlight zones; not sure about the XDR.

With the 2500 backlight zones in the iPad Pro 12.9 miniLED, each zone is only 0.032 in², or 0.2 cm², or 20 mm². They could turn these zones off and on at will, and generally create a completely black color by turning off the backlight in one zone while having 1600 nits of brightness in the next zone, thus creating a high contrast image. Blacks would be black in Apple's miniLED and you would have to look very carefully to differentiate them from the bezels.
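The zone size quoted above can be sanity-checked with a little geometry. This is only a rough sketch: it assumes a 12.9-inch diagonal, a 4:3 aspect ratio, the round figure of 2500 zones from the comment, and zones that tile the whole panel evenly. The function name `zone_area_mm2` is made up for illustration.

```python
import math

IN2_TO_MM2 = 25.4 ** 2  # square inches to square millimetres

def zone_area_mm2(diagonal_in, aspect_w, aspect_h, zones):
    """Average area of one backlight zone, assuming zones tile the panel evenly."""
    diag_units = math.hypot(aspect_w, aspect_h)
    width_in = diagonal_in * aspect_w / diag_units
    height_in = diagonal_in * aspect_h / diag_units
    return width_in * height_in / zones * IN2_TO_MM2

# Assumed inputs: 12.9-inch diagonal, 4:3 panel, 2500 zones (round number from the comment).
area_mm2 = zone_area_mm2(12.9, 4, 3, 2500)
side_mm = math.sqrt(area_mm2)  # side length if each zone were a square

print(f"zone area: {area_mm2 / IN2_TO_MM2:.3f} in^2 = {area_mm2:.1f} mm^2")
print(f"zone side if square: {side_mm:.1f} mm")
```

Under those assumptions a zone comes out at roughly 20 mm², or about 4.5 mm on a side, which is much wider than the strokes of typical on-screen text.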

Where it is inferior to OLED is in static images of thin lines, such as in fonts. Font strokes are smaller than 20 mm², and hence you can see blooming when displaying white text on a black background. OLEDs don't have this type of problem because each subpixel is an LED that emits light.

"Thunderbolt 5 can deal with multiple 8K displays at once, or three 4K screens at 144Hz. When pushed, it could even support a single display at up to 540Hz."

You should just explicitly say it. Thunderbolt 5 is an explicit requirement for a 5K 120 Hz Apple display. Thunderbolt 4 doesn't have the bandwidth for it. In order for Apple to actually sell a 5K 120 Hz display, there needs to be a market of users with Thunderbolt 5 Macs that can actually drive it at 120 Hz.

Apple isn't going to use compression. They are not going to make an HDMI display. They want to make a Thunderbolt display with a dock in it, webcam, microphone, speakers, anything else they want to put in there. So, 5K 120 Hz or higher wasn't going to happen until Thunderbolt 5 is on Macs. It is now, so, a waiting game in 2025 and 2026.
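The bandwidth argument above can be checked with back-of-the-envelope arithmetic. This is a rough sketch, not a link-budget calculation: it assumes 5K means 5120 x 2880, 10 bits per color channel (30 bits per pixel), and no compression, and it ignores blanking intervals and protocol overhead, which only push the real requirement higher. The 40 and 80 Gbit/s figures are the nominal Thunderbolt 4 and Thunderbolt 5 link rates, not guaranteed display payload.

```python
def raw_video_gbps(width, height, refresh_hz, bits_per_pixel):
    """Uncompressed active-pixel data rate in Gbit/s (no blanking, no link overhead)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

# Nominal total link rates in Gbit/s; usable display bandwidth is lower in practice.
THUNDERBOLT_4_GBPS = 40
THUNDERBOLT_5_GBPS = 80

# Assumed panel: 5120 x 2880 at 30 bits per pixel (10 bits per channel).
rate_60 = raw_video_gbps(5120, 2880, 60, 30)
rate_120 = raw_video_gbps(5120, 2880, 120, 30)

for hz, rate in ((60, rate_60), (120, rate_120)):
    print(f"5K at {hz} Hz: {rate:.1f} Gbit/s, "
          f"fits TB4: {rate < THUNDERBOLT_4_GBPS}, fits TB5: {rate < THUNDERBOLT_5_GBPS}")
```

Under these assumptions, 5K at 60 Hz fits within a 40 Gbit/s link, while 5K at 120 Hz needs more than 40 Gbit/s of raw pixel data and only fits on the 80 Gbit/s link.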

-

Updated Apple Studio Display with miniLED, ProMotion expected in late 2025

Ross Young is unreliable. He was reliable in the 2015 to 2020 time frame or so. Lately? Not so much.

I think the "rumor score" should be a bullshit score, not a likelihood score. Almost anything is possible, which makes saying something is possible not worth much. On a bullshit scale however, I'd call this 75% bullshit.

-

Services buoy slumping iPhone sales in record-breaking holiday quarter earnings

charlesn said:

"Well, never underestimate the marketing prowess of a company that sold people on a piece of titanium trim being a reason to upgrade their phones! I don't think Apple broke them out separately, but I would have been curious to know how U.S. iPhone sales did this quarter since it was the one market that actually had these early stages of Apple Intelligence implemented for the whole three months. Not that what we have is all that compelling--if people are upgrading for Apple Intelligence, I'd say it's due more to the relentless marketing for it than for what it actually does at present. Personally, I'm waiting most for "brain transplant Siri" and hoping that Apple's voice assistant will finally not be an embarrassment."

tht said:

"What could change it are LLM models requiring 8 to 16 GB RAM. This could drive a quicker replacement cycle, but I currently don't think so. The mass market is pretty happy with what they have, the new features have a fair bit of complication to use, and therefore, people will wait."

Apple said the iP16 models performed better than iP15 models, YoY. They said the 2 to 4 weeks of data for when Apple Intelligence was available hints that 18.2’s LLM features resulted in better relative sales than the 15 models.

Obviously too early to tell. I don’t think you can separate whether AI features drove sales, if the marketing campaign drove sales, or if it was some other feature like 8 GB of RAM that drove sales. And it wasn’t much of a sales difference anyway.

Looking at AI another way, I have not heard of LLM features driving sales of any device from any OEM. AI pendants and AI gadgets? Abject failure. Snapdragon X Elite AI PCs? Sales have been disappointing. Intel AI PCs? No success there either, though Intel has structural issues impacting them.

Amazon Alexa devices? Not really? They only sell because they are cheap. Or perhaps LLM chatbot services haven’t arrived there yet, so unknown. Either way, I think devices with audio-only interfaces will fail until proven otherwise.

Where LLM chatbots have done well is in advanced search-style services, with keyboard input and large displays. They currently answer questions and requests, as opposed to current search, which has devolved into SEO shit. Search used to answer questions well, which should be a portent for where LLM chatbots are going.

I would defend titanium and the steel before it. Your comment is like saying “it’s just gold”. Society puts a value on uncommon metals, or materials that people perceive as “premium” over the everyday materials they experience. Steel and titanium imparted value. The precision of Apple’s devices imparts value. So, their marketing did its job. Perhaps your comment was an unintended compliment?