Apple details user privacy, security features built into its CSAM scanning system

Apple has published a new document that provides more detail about the security and privacy of its new child safety features, including how the system is designed to prevent misuse.

Credit: Apple

For one, Apple says in the document that the system will be auditable by third parties such as security researchers or nonprofit groups. The company says that it will publish a Knowledge Base article containing the root hash of the encrypted CSAM hash database used for iCloud photo scanning.

The Cupertino tech giant says that "users will be able to inspect the root hash of the encrypted database present on their device, and compare it to the expected root hash in the Knowledge Base article." It added that security researchers will also be able to review the accuracy of the database.

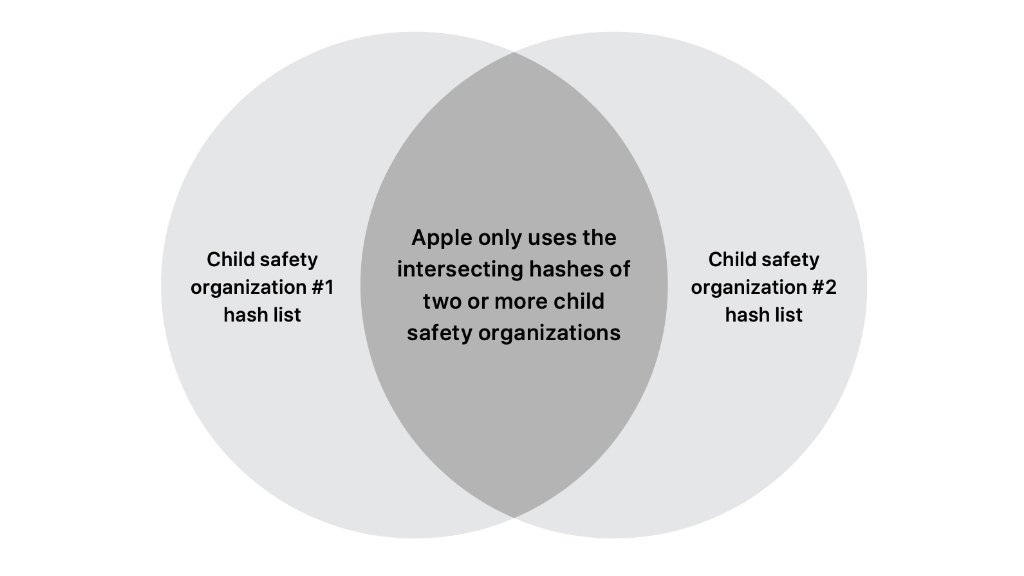

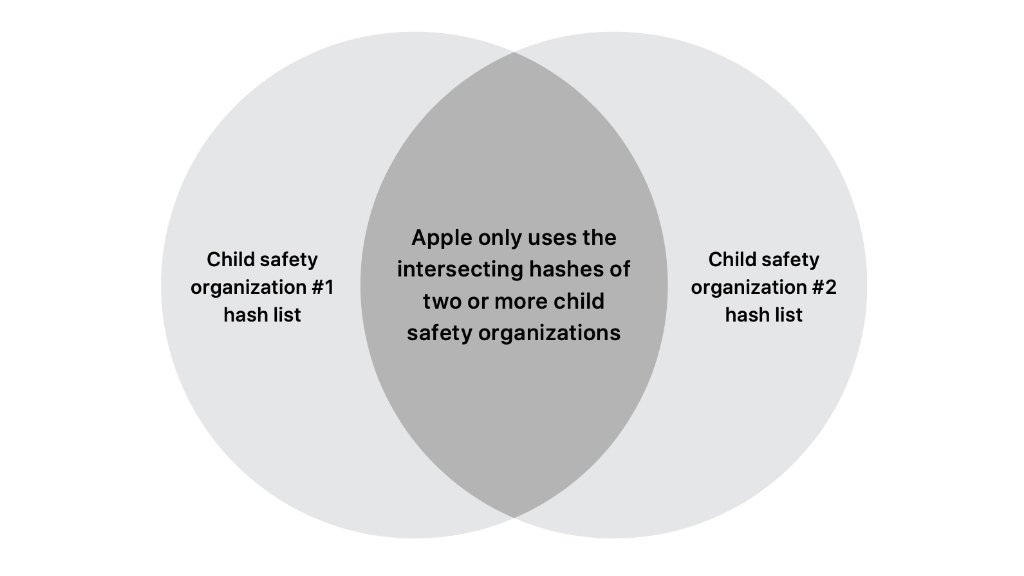

"This approach enables third-party technical audits: an auditor can confirm that for any given root hash of the encrypted CSAM database in the Knowledge Base article or on a device, the database was generated only from an intersection of hashes from participating child safety organizations, with no additions, removals, or changes," Apple wrote.
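The audit Apple describes comes down to two checks: the database should contain only hashes that every participating organization supplied, and its root hash should match both the published Knowledge Base value and the value on the device. The Python sketch below illustrates that idea under loudly stated assumptions. The article does not say how Apple computes its root hash, so a plain binary Merkle tree over SHA-256 stands in for it, and the short hex strings are placeholders rather than real image hashes. The names `build_database`, `merkle_root`, and `audit` are hypothetical.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(entries: list[str]) -> str:
    """Hypothetical root hash: a binary Merkle tree over SHA-256 leaves.

    This is an assumed construction for illustration, not Apple's format.
    """
    if not entries:
        return sha256(b"").hex()
    level = [sha256(entry.encode("utf-8")) for entry in entries]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd-sized levels
        # Hash each adjacent pair to form the next level up the tree.
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0].hex()


def build_database(*org_lists: set[str]) -> list[str]:
    """Keep only hashes present in every organization's list (the intersection).

    Sorting makes the result deterministic, so anyone rebuilding it gets the same root.
    """
    if len(org_lists) < 2:
        raise ValueError("at least two organizations are required")
    common = set(org_lists[0]).intersection(*org_lists[1:])
    return sorted(common)


def audit(org_lists: list[set[str]], published_root: str, device_root: str) -> bool:
    """Rebuild the database and check it against the published and on-device roots."""
    expected = merkle_root(build_database(*org_lists))
    return expected == published_root and expected == device_root


if __name__ == "__main__":
    org_a = {"1f3a", "9bc2", "d047"}
    org_b = {"9bc2", "d047", "e5aa"}
    db = build_database(org_a, org_b)
    print(db)  # ['9bc2', 'd047']
    published = merkle_root(db)  # stand-in for the Knowledge Base value
    print(audit([org_a, org_b], published, published))  # True
```

Because only the intersection survives, a hash slipped into a single organization's list never reaches the database, and any addition, removal, or change to the database changes the root hash an auditor would compare.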

Apple also describes mechanisms to prevent abuse by any single child safety organization or government. Apple says that it's working with at least two child safety organizations to generate its CSAM hash database, and that those organizations will not be under the jurisdiction of the same government.

If multiple governments and child safety organizations somehow collude to insert non-CSAM hashes into the database, Apple says there's a protection for that, too: the company's human review team would recognize that an account was flagged for something other than CSAM and respond accordingly.

Separately, on Friday, it was revealed that the threshold for triggering an alert is a collection of 30 pieces of abuse material. Apple says the number is flexible, however, and it has committed to that figure only for launch.
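A threshold like this can be pictured as a per-account tally that stays silent until it reaches the limit. The sketch below is a simplification and an assumption: a plain counter stands in for whatever mechanism Apple actually uses to enforce the threshold, which this article does not describe, and `MatchTracker` is a hypothetical name. The threshold is a parameter rather than a fixed constant because Apple says the launch figure of 30 may change.

```python
from collections import Counter

# Figure reported for launch; Apple says the number is flexible.
LAUNCH_THRESHOLD = 30


class MatchTracker:
    """Hypothetical per-account tally of database matches (a simplification)."""

    def __init__(self, threshold: int = LAUNCH_THRESHOLD) -> None:
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        self._counts = Counter()

    def record_match(self, account_id: str) -> bool:
        """Record one match; return True once the account has reached the threshold."""
        self._counts[account_id] += 1
        return self._counts[account_id] >= self.threshold

    def matches(self, account_id: str) -> int:
        """Number of matches recorded so far for an account (0 if none)."""
        return self._counts[account_id]


if __name__ == "__main__":
    tracker = MatchTracker()
    flagged = [tracker.record_match("account-1") for _ in range(30)]
    print(flagged.index(True) + 1)  # 30: the first match that reaches the threshold
```

In this simplified picture, nothing about an account is surfaced until `record_match` returns True, and only then would the human review step come into play.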

Bracing for an expected onslaught of questions from customers, the company instructed retail personnel to use a recently published FAQ as a resource when discussing the topic, according to an internal memo seen by Bloomberg. The memo also said Apple plans to hire an independent auditor to review the system.

Apple's child safety features have stirred controversy among privacy and security experts, as well as regular users. On Friday, Apple software chief Craig Federighi admitted that the messaging has been "jumbled" and "misunderstood."

Read on AppleInsider

Comments

I will NEVER accept that my data will be scanned on my OWN DEVICES, and I certainly won't PAY FOR A DEVICE to spy on me.

iOS 15, and therefore the iPhone 13, is now dead to me.

I don’t think this is an issue of Apple’s “messaging” or understanding by users. I still have three serious concerns that don’t seem to be addressed.

#1 Apple has acknowledged the privacy impact of this technology if it is misapplied by totalitarian governments. The response has been, “we’ll say no”. In the past, the answer hasn’t been no with China and Saudi Arabia, and that happened when Apple was already powerful and wealthy. If a government compels Apple, or if Apple is one day no longer in a dominant position, it may not be able to say no even if it wants to.

#2 We’ve recently seen zero-day exploits used by multiple private companies to bypass the protections in Apple’s platforms. Interfaces like this increase the attack surface that malicious actors can exploit.

#3 Until now, users have expected that the data on their device was private and that on-device processing was used to keep data from being uploaded to cloud services. The new system turns that expectation around: on-device processing is now being used as a means to upload to the cloud. Though narrowly tailored to illegal content at this time, this system changes the operating system’s role from the user’s perspective and places the device itself in a policing and policy-enforcement role. This breaks a trust that computer users have had since the beginning of computing: that the device is “yours” in the same way that your car or home is your property.

Ultimately, I think solving a human-nature problem with technology isn’t a true solution. I think Apple is burning a hard-won reputation with this move. In my opinion, crime should be dealt with through law enforcement and the judicial process, not by technology providers like Apple.

No user data is shared with other orgs until a very concerning threshold has been met, and Apple has every right to audit and monitor its own servers for abuse. It would be irresponsible to allow anybody to use its servers for any purpose without some protections in place.

This is not an invasion of privacy, no matter how people want to spin it.

https://www.missingkids.org/theissues/csam#bythenumbers

This system could be abused locally to search for or collect data. At the very least, I want Apple to state that it will never do that, and that if it ever does, it accepts the worldwide legal implications and liability.

Secondly, it’s MY device that I paid good money for, and Apple gives me ZERO options to replace Photos with another app. I can’t seamlessly switch so that my camera and file browsing default to a new app (another topic, but still relevant here).

Of course nobody wants child porn, but Apple is not the police, nor did I choose to have my photos scanned.

Screw this company and their hypocritical culture. I’m heavily invested in their hardware and software, but they are simply no longer the company I used to respect.