AppleInsider · Kasper's Automated Slave

About

- Username: AppleInsider
- Visits: 52
- Roles: administrator
- Points: 10,953
- Badges: 1
- Posts: 66,634

-

Apple Music expands annual subscription option beyond gift cards

People with an existing Apple Music subscription can now switch to a cheaper annual plan without having to buy a gift card -- though the option is relatively hidden.

Image Credit: TechCrunch

To find it, subscribers must go into the iOS 10 App Store, scroll to the bottom of the Featured tab, and select their Apple ID, TechCrunch noted on Monday. This will prompt for a password, after which people can tap on "View Apple ID," then finally on the Subscriptions button to check their Apple Music membership.

A one-year individual plan is $99 in the U.S., a significant discount off the nearly $120 it costs to go month-by-month. Unfortunately, there are no annual tiers for student or family accounts, and the option is invisible to people who aren't already using Apple Music.
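For a rough sense of the discount, here is a small Python sketch of the arithmetic. It assumes the U.S. individual price of $9.99 a month at the time (the article itself only says "nearly $120" a year) and works in whole cents so currency values don't pick up floating-point rounding.

```python
# Assumption: $9.99/month individual price; the article only says "nearly $120" a year.
MONTHLY_CENTS = 999
ANNUAL_CENTS = 9_900  # the $99 one-year individual plan


def yearly_savings(monthly_cents: int, annual_cents: int) -> tuple[int, float]:
    """Return (savings in cents, savings as a percentage of 12 monthly payments)."""
    month_by_month = monthly_cents * 12
    saved = month_by_month - annual_cents
    return saved, 100 * saved / month_by_month


saved, pct = yearly_savings(MONTHLY_CENTS, ANNUAL_CENTS)
print(f"Month-by-month for a year: ${MONTHLY_CENTS * 12 / 100:.2f}")
print(f"Annual plan saves ${saved / 100:.2f} ({pct:.1f}%)")
```

On those assumptions, paying monthly comes to $119.88 a year, so the annual plan saves $20.88, or roughly 17 percent.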

In fact, Apple has so far downplayed the annual option in marketing. The gift card first appeared in September, but is only highlighted in the company's online store.

Offering a discounted plan may nevertheless help Apple compete with the market leader in on-demand streaming, Spotify. The latter is still selling 12-month gift cards for the same price it costs to go month-to-month.

Apple is also aiming to compete with Spotify by offering more original video content. Recently it premiered "Planet of the Apps," and hired two former Sony executives to spearhead future efforts.

-

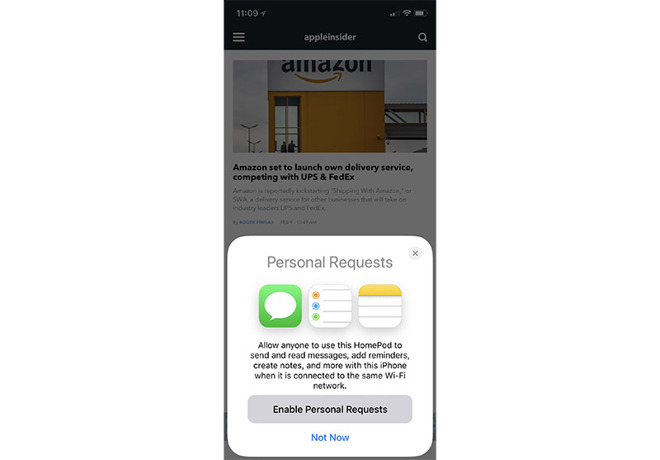

How to trigger Siri on your iPhone instead of HomePod

With HomePod, accessing Apple's Siri technology is more convenient than ever. But users might want to trigger the virtual assistant on an iPhone rather than a speaker that blasts answers to anyone within earshot. Here's how to do it.

As we discovered in our first real-world hands-on, HomePod is an extremely compact, yet undeniably loud home speaker. At home in both small apartments and large houses, HomePod's place-anywhere design and built-in beamforming technology generate great sound no matter where it's set up.

Siri comes baked in, taking over main controls like content playback, volume management, HomeKit accessory commands and more. As expected, the virtual assistant retains its usual assortment of internet-connected capabilities, including ties to Apple services like Messages and various iCloud features.

However, having Siri read your text messages out loud might not be ideal for some users, especially those with cohabitants.

Apple lets you deny access to such features, called "personal requests," during initial setup, but doing so disables a number of utilities. Alternatively, users can tell HomePod, "Hey Siri, turn off Hey Siri" or turn off "Listen for 'Hey Siri'" in HomePod settings in the iOS Home app. Both methods, however, make HomePod a much less useful device.

Luckily, there is a way to enjoy the best of both worlds, but it might take some practice. The method takes advantage of Apple's solution for triggering "Hey Siri" when multiple Siri-capable devices are in the same room. More specifically, users can manually trigger "Hey Siri" on an iPhone or iPad rather than HomePod, allowing for more discreet question and response sessions.

According to Apple, when a user says "Hey Siri" near multiple devices that support the feature, the devices communicate with each other over Bluetooth to determine which should respond to the request.

"HomePod responds to most Siri requests, even if there are other devices that support 'Hey Siri' nearby," Apple says.

There are exceptions to Apple's algorithm, however. For example, the device that heard a user's request "best" or was recently raised will respond to a given query. Use this knowledge to your advantage to create exceptions to the HomePod "Hey Siri" rule.

When you desire a bit of privacy, simply raise a device to wake it and say "Hey Siri." A more direct method involves pressing the side or wake/sleep button on an iPhone or iPad to trigger the virtual assistant, which will then interact with the user from that device, not HomePod.

On the other hand, if you want to ensure HomePod gets the message rather than an iOS device, place your smartphone or tablet face down. Doing so disables the "Hey Siri" feature, meaning all "Hey Siri" calls are routed to HomePod.
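To make the rules above easier to follow, here is a deliberately simplified Python model of which device answers. It is not Apple's algorithm, which isn't public; the `Device` fields, the `heard_level` score, and the order in which the rules are checked are hypothetical, chosen only to mirror the behavior described in this article: a button press or a recently raised device wins, a face-down iPhone or iPad stops listening, and otherwise HomePod takes the request.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    name: str
    is_homepod: bool = False
    face_down: bool = False        # a face-down iPhone or iPad stops listening for "Hey Siri"
    recently_raised: bool = False  # raise to wake makes this device an exception to the HomePod rule
    button_pressed: bool = False   # side or wake/sleep button invokes Siri on this device directly
    heard_level: float = 0.0       # hypothetical "how well it heard the request" score, 0 to 1


def pick_responder(devices: list[Device]) -> Optional[Device]:
    """Return the device that answers, under the simplified rules in this article."""
    # A button press skips the "Hey Siri" election entirely.
    for device in devices:
        if device.button_pressed:
            return device

    # Face-down devices don't take part in the "Hey Siri" election.
    listening = [d for d in devices if not d.face_down]
    if not listening:
        return None

    # A recently raised device takes priority over HomePod.
    raised = [d for d in listening if d.recently_raised]
    if raised:
        return max(raised, key=lambda d: d.heard_level)

    # Otherwise HomePod responds to most requests when one is in the room.
    homepods = [d for d in listening if d.is_homepod]
    if homepods:
        return max(homepods, key=lambda d: d.heard_level)

    # With no HomePod, fall back to whichever device heard the request best.
    return max(listening, key=lambda d: d.heard_level)


if __name__ == "__main__":
    phone = Device("iPhone", heard_level=0.9)
    homepod = Device("HomePod", is_homepod=True, heard_level=0.6)
    print(pick_responder([phone, homepod]).name)  # HomePod: the default rule

    phone.recently_raised = True
    print(pick_responder([phone, homepod]).name)  # iPhone: raised to wake

    phone.face_down = True
    print(pick_responder([phone, homepod]).name)  # HomePod: face-down phone isn't listening
```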

If you're having trouble with "Hey Siri" or if more than one device is responding to the voice trigger, ensure that all devices are running the most up-to-date version of their respective operating system.

-

Apple's Crash Detection saves another life: mine

Of all the new products I've reviewed across 15 years of writing for AppleInsider, Apple Watch has certainly made the biggest impact on me personally. A couple of weeks ago, it literally saved my life.

Apple's Crash Detection can save lives, and already has

I'm not the first person to be saved by paramedics alerted by an emergency call initiated by Crash Detection. There have also been complaints of emergency workers inconvenienced by false alert calls related to events including roller coasters, where the user didn't cancel the emergency call in time.

But I literally have some skin in the game with this new feature, because Crash Detection called in an emergency response for me as I was unconscious and bleeding on the sidewalk, alone and late at night. Based on the record of calls it made, I was picked up and on my way to an emergency room within half an hour.

Because my accident occurred in a potentially dangerous and somewhat secluded area, I would likely have bled to death if the call hadn't been automatically placed.

Not just for car crashes

Apple created the feature to watch for evidence of a "severe car crash," using data from its devices' gyroscopes and accelerometers, along with other sensors and analysis that determine that a crash has occurred and that a vehicle operator might be disabled or unable to call for help themselves.

More than five hours later, I was shocked at how much blood was on the back of my ER mattress, and later at how much I saw on the sidewalk!

In my case, there was no car involved. Instead, I had checked out a rental scooter intending to make a quick trip back to where I'd parked my car.

But after just a couple blocks, my trip was sidelined by a crash. I was knocked unconscious on the side of a bridge crossing over a freeway.

A deep gash above my eye was bleeding heavily. I began losing a lot of blood.

I didn't regain consciousness for another five hours, leaving me at the mercy of my technology and the health workers Crash Detection was able to contact on my behalf.

Crash Detection working as intended

Even though I wasn't driving a conventional vehicle, Crash Detection determined that I had been involved in a serious accident and that I wasn't responding. Within 20 seconds, it called emergency services with my location. Within thirty minutes I was loaded in an ambulance and on the way to the emergency room.

When I came to, I had to ask what was happening. That's how I first found out that I was getting my eyebrow stitched up and had various scrapes across the half of my face that I had apparently used to break my fall. I couldn't remember anything.

Even later, after reviewing the circumstances, I had no recollection of the accident. When I visited the scene of the crash, I could only see the aftermath. Blood was everywhere, but there was not enough there to piece together exactly what had happened.

The experience was a scary reminder of how quickly things can happen and how helpless we are in certain circumstances. Having wearable technology watching over us and providing an extra layer of protection and emergency response is certainly one of the best features we can have in a dangerous world.

Almost always, I find myself in the position of making difficult decisions and figuring out how to get out of predicaments. But on the rare occasions when I've been knocked out, which has only happened a few times in my entire life, there's the more difficult realization that I'd be completely powerless in the face of whatever problems might occur.

With the amount of blood I was losing, I couldn't have lain there very long before I would have died. Losing consciousness and blood at the same time also carries the threat of brain damage.

I am grateful that I'm living in the current future, where we have trusted mobile devices that volunteer to jump in to save us if we are knocked out.

Who would opt in to Crash Detection

Last year, Apple's introduction of Crash Detection on iPhone 14 models, Apple Watch Series 8, and Apple Watch Ultra, was derided by some who worried that the volume of false alerts would be a bigger problem than the few extra lives that might be saved by such a tool.

False alerts were reported at ski lifts and on roller coasters, and emergency responders noted an uptick in calls reporting incidents where the person involved didn't respond to explain that it wasn't actually an emergency.

Several observers insisted at the time that the Crash Detection system should be "opt-in," similar to the Fall Detection feature Apple had introduced on Apple Watch to report less dramatic accidents suffered by people over 55.

However, it's impossible to have the system only ever working when it is essential. In my situation, I wouldn't have thought to turn on a system to watch me ride a scooter a few blocks. I probably would have assumed that a scooter ride was less risky than driving, despite having no seatbelt, no airbag and no other protective gear.

So I'm also particularly glad Apple doesn't restrict Crash Detection only to car accidents!

My Apple Watch still works but was scratched up pretty well

My watch and phone had been monitoring me for over a year without incident before a situation arose where they could literally spring into action to save me. That depends entirely on the idea that they work in the background, rather than being something I'd have to anticipate needing. That's the right assumption to make. It literally saved me.

Crash Detection is a prime example of a new, innovative iOS feature update that adds tremendous value to the products I already use, without any real thinking on my part. It just works. And more importantly, when it counted, it saved my life.

Exercise your Emergency Contacts

Despite having an iPhone that's set up with a European phone number and home address, Crash Detection "just worked" here in the United States. It dialed the right number for the location where I crashed and got me help quickly and efficiently. That's great.

However, after I woke up, I realized that two emergency contacts should also have been notified. My phone did its job correctly, but I'd listed both my partner and a family member with old phone numbers they no longer use. That meant Crash Detection had called the police for me, but wasn't able to notify my designated emergency contacts.

If you haven't taken a recent look at your emergency contact data, now might be a good time to check to make sure that everything is in order. Note that when you update a phone number, it doesn't necessarily "correct" your defined emergency contacts.

You may need to delete and reestablish your desired emergency contact and their phone number, as the system only calls the specific contact number you've supplied. It doesn't run through your contact trying each number you've ever entered for that person.
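The pitfall is easier to see as data. In this hypothetical Python sketch (the `Contact` and `EmergencyContact` types and the 555 numbers are illustrative only, not Apple's actual data model), each emergency contact stores the one number chosen when it was added, so updating the contact card later leaves the emergency entry pointing at the old number. The `stale_entries` helper shows the kind of check worth doing by hand on your own emergency contacts.

```python
from dataclasses import dataclass, field


@dataclass
class Contact:
    name: str
    numbers: list[str] = field(default_factory=list)


@dataclass
class EmergencyContact:
    contact: Contact
    number: str  # the single number picked when the emergency contact was added


def numbers_to_notify(emergency_contacts: list[EmergencyContact]) -> list[str]:
    # Only the stored number is used; other numbers on the card are never tried.
    return [ec.number for ec in emergency_contacts]


def stale_entries(emergency_contacts: list[EmergencyContact]) -> list[str]:
    # Flag entries whose stored number no longer appears on the contact card.
    return [ec.contact.name for ec in emergency_contacts
            if ec.number not in ec.contact.numbers]


partner = Contact("Partner", ["+1 555 0100"])
entry = EmergencyContact(partner, partner.numbers[0])

partner.numbers = ["+1 555 0199"]  # the contact card gets a new number...
print(numbers_to_notify([entry]))  # ...but the old "+1 555 0100" is still what gets called
print(stale_entries([entry]))      # ['Partner']
```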

In my case, there was another Apple service that jumped in to help. Because I was sharing my location with iCloud, it was easy for my partner in another time zone, far away, to find out where I had been taken by using the Find My app, and then to call the hospital to find out my condition.

But if Crash Detection hadn't been working, I might not have survived, or things could have ended up much worse: badly injured, or even mugged while lying unconscious in a sketchy area in the middle of the night.

I'm not the only AppleInsider staffer whose life was saved by the Apple Watch, and I probably won't be the last. So thanks, Apple!


-

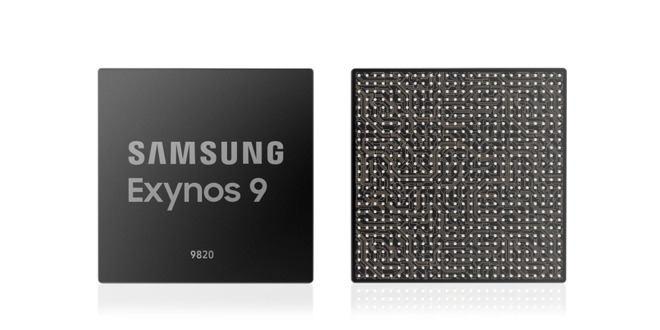

Editorial: As Apple A13 Bionic rises, Samsung Exynos scales back its silicon ambitions

A decade after Apple and Samsung partnered to create a new class of ARM chips, the two have followed separate paths: one leading to a family of world-class mobile silicon designs, the other limping along with work that it has now canceled. Here's why Samsung's preoccupation with unit sales and market share failed to compete with Apple's focus on premium products.

Apple's custom "Thunder" and "Lightning" cores used in its A13 Bionic continue to embarrass Qualcomm's custom Kryo cores used in its Snapdragon 855 Plus, currently the leading premium-performance SoC available to Android licensees.

The A13 Bionic is a significant advancement of Apple's decade-long efforts in designing custom CPU cores that are both faster and more efficient than those developed by ARM itself while remaining ABI compatible with the ARM instruction set. Other companies building "custom" ARM chips, including LG's now-abandoned NUCLUN and Huawei's HiSilicon Kirin chips, actually just license ARM's off-the-shelf core designs.

To effectively compete with Apple and Qualcomm, and stand out from licensees merely using off-the-shelf ARM core designs, Samsung's System LSI chip foundry attempted to develop its own advanced ARM CPU cores, initiated with the 2010 founding of its Samsung Austin R&D Center (SARC) in Texas. This resulted in Samsung's M1, M2, M3, and M4 core designs used in a variety of its Exynos-branded SoCs.

The company's newest Exynos 9820, used in most international versions of its flagship Galaxy S10, pairs two of its M4 cores along with six lower power ARM core designs. Over the past two generations, Samsung's own core designs have, according to AnandTech, delivered "underwhelming performance and power efficiency compared to its Qualcomm counter-part," including the Snapdragon 855 that Samsung uses in U.S. versions of the Galaxy S10.

The fate of Samsung's SARC-developed M-series ARM cores appeared to be sealed with reports earlier this month that Samsung was laying off hundreds of staff from its chip design team, following in the footsteps of Texas Instruments' OMAP, Nvidia's Tegra, and Intel's Atom in backing out of the expensive, high-risk mobile SoC design space. It is widely expected that Samsung will join Huawei in simply repackaging ARM designs for future Exynos SoCs.

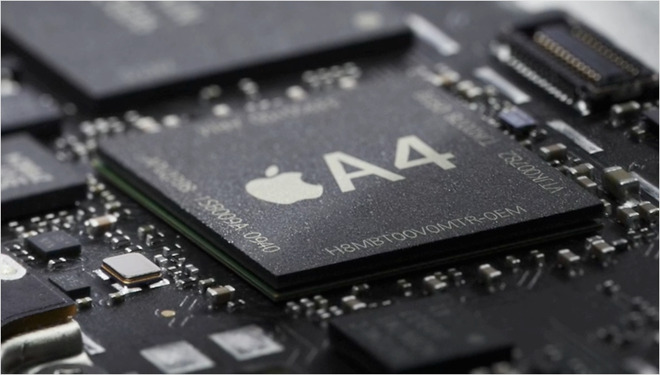

This development highlights the vast gap between what Apple has achieved with its A-series chips since 2010 compared to Samsung's parallel Exynos efforts over the same period. This is particularly interesting because in 2010 Apple and Samsung were originally collaborators on the same ancestral chip of both product lines.

Partners for a new Apple core

In 2010, Samsung and Apple were two of the world's closest tech partners. They had been collaborating to deliver a new ARM super-chip capable of rivaling Intel's Atom, which scaled-down Chipzilla's then-ubiquitous x86 chip architecture from the PC to deliver an efficient mobile processor capable of powering a new generation of tablets.

ARM processors had been, by design, minimally powerful. ARM had originated in a 1990 partnership between Apple, Acorn, and chip fab VLSI to adapt Acorn's desktop RISC processor into a mobile design Apple could use in its Newton MessagePad tablet. Across the 1990s, Newton failed to gain much traction, but its ARM architecture processor caught on as a mobile-efficient design popularized in phones by Nokia and others.

Starting in 2001, Apple began using Samsung-manufactured ARM chips in its iPods, and since 2007, iPhone. The goal to make a significantly more powerful ARM chip was launched by Apple in 2008 when it bought up P.A. Semi. While it was widely reported that Apple was interested in the company's PWRficient architecture, in reality, Apple just wanted the firm's chip designers to build the future of mobile chips, something Steve Jobs stated at the time. Apple also partnered with Intrinsity, another chip designer specializing in power-efficient design, and ended up acquiring it as well.

Samsung's world-leading System LSI chip fab worked with Apple's rapidly assembled design team, drawn from P.A. Semi and Intrinsity, to deliver ambitious silicon design goals, resulting in the new Hummingbird core design, capable of running at 1 gigahertz, much faster than ARM's reference designs. Apple shipped its new chip as A4 and used it to launch its new iPad, the hotly anticipated iPhone 4, and the new Apple TV, followed by a series of subsequent Ax chip designs that have powered all of its iOS devices since.

Frenemies: from collaborators to litigants

The tech duo had spent much of the previous decade in close partnership, with Samsung building the processors, RAM, disks and other components Apple needed to ship vast volumes of iPods, iPhones and now iPad. But Samsung increasingly decided that it could do what Apple was doing by itself, simply by copying Apple's existing designs and producing more profitable, finished consumer products of its own.

Samsung's System LSI founded SARC and went on to create its independent core design and its own line of chips under its Exynos brand. That effectively forced Apple to scale up its chip design work, attempt to second-source more of its components and work to find an alternative chip fab.

Increasing conflicts between the two tangled them in years of litigation that ultimately resulted in little more than a very minor windfall for Apple and an embarrassing public view into Samsung's ugly copy-cat culture that respected its own customers about as little as its criminal executives respected bribery laws.

As Samsung copied Apple's work to an increasingly shameless extent, it quickly gained positive media attention for selling knockoffs of Apple's work at cut-rate prices. But it took years for Apple to cultivate alternative suppliers to Samsung, and it was particularly daunting for Apple to find a silicon chip fab partner that could rival Samsung's System LSI chip fab.

Samsung takes its act solo

By 2014, Samsung had delivered years of nearly identical copies of Apple's iPhones and iPads, and its cut-rate counterfeiting had won the cheering approval and endorsement of CNET, the Verge and Android fan-sites that portrayed Samsung's advanced components as the truly innovative technology in mobile devices, while portraying Apple's product design, OS engineering, App Store development, and other work as all just obvious steps that everyone else should be able to copy verbatim to bring communal technology to the proletariat. Apple was disparaged as a rent-seeking imperialist power merely taking advantage of component laborers and fooling customers with propaganda.

What seemed to escape the notice of pundits was the fact that while Samsung appeared to be doing an okay job of copying Apple's work, as it peeled away to create increasingly original designs, develop its own technical direction, and pursue software and services, the Korean giant began falling down.

Samsung repeatedly shipped fake or flawed biometric security, loaded up its hardware with a mess of duplicated apps that didn't work well at all, splashed out technology demos of frivolous features that were often purely ridiculous, and repeatedly failed to create a loyal base of users for its attempted app stores, music services, or its own proprietary development strategies.

And despite the vertical integration of its mobile device, display, and silicon component divisions that should have made it easier for Samsung to deliver competitive tablets and create desirable new categories of devices including wearables, its greatest success had merely been creating a niche of users who liked the idea of a smartphone with a nearly tablet-sized display, and were willing to pay a premium for this.

Despite vast shipments of roughly 300 million mobile devices annually, Samsung was struggling to develop its custom mobile processors. In part, that was because another Samsung partner, Qualcomm, was leveraging its modem IP to prevent Samsung from even using its own Exynos chips cost-effectively. For some reason, the communist rhetoric that tech journalists used to frame Apple as a villainous corporation pushing "proprietary" technology wasn't also applied to Qualcomm.

Apple's revenge on a cheater

Unlike Samsung, Apple wasn't initially free to run off on its own. There simply were not any alternatives to some of Samsung's critical components-- especially at the scale Apple needed.

In particular, it was impossible for Apple to quickly yank its chip designs from Samsung's System LSI and take them to another chip fab, in part because a chip design and a particular fab process are extremely interwoven, and in part because there are very few fabs on earth capable of performing state-of-the-art work at scale. There were perhaps four in total, and each of those fabs was booked up, because fabs can't afford to sit idle.

It wasn't until 2014, after several years of exploratory work, that Apple could begin mass producing its first chip with TSMC, the A8. That new processor was used to deliver Apple's first "phablet" sized phones, the iPhone 6 and iPhone 6 Plus. These new models absolutely eviscerated the profits of Samsung's Mobile IM group. The fact that their processors were built by Samsung's chip fab arch-rival was a particularly vicious twist of the same blade.

Apple debuted the iPhone 6 alongside a preview of Apple Watch. A few months later, Apple Watch went on sale and rapidly began devastating Samsung's flashy experiment with Galaxy Gear -- the wearable product line it had been trying to sell over the past two years. Galaxy Gear had been the only significant product category that Samsung appeared capable of leading in, simply because Apple hadn't entered the space. Now that it had, Samsung's watches began to look as commercially pathetic as its tablets, notebooks, and music players.

Apple's silicon prowess helped Apple Watch to destroy Samsung's Galaxy Gear

Samsung's inability to maintain its premium sales and its attempts to drive unit sales volume instead with downmarket offerings hasn't resulted in the kind of earnings that Apple achieves by selling buyers premium products that are supported longer and deliver practical advancements.

As a result, Apple is now delivering custom silicon not just for tablets and phones, but also for wearables and a series of specialized applications ranging from W2 and H1 wireless chips that drive AirPods and Beats headphones, to its T2 security chips that handle tasks like storage encryption, media encoding, and Touch ID for Macs.

-

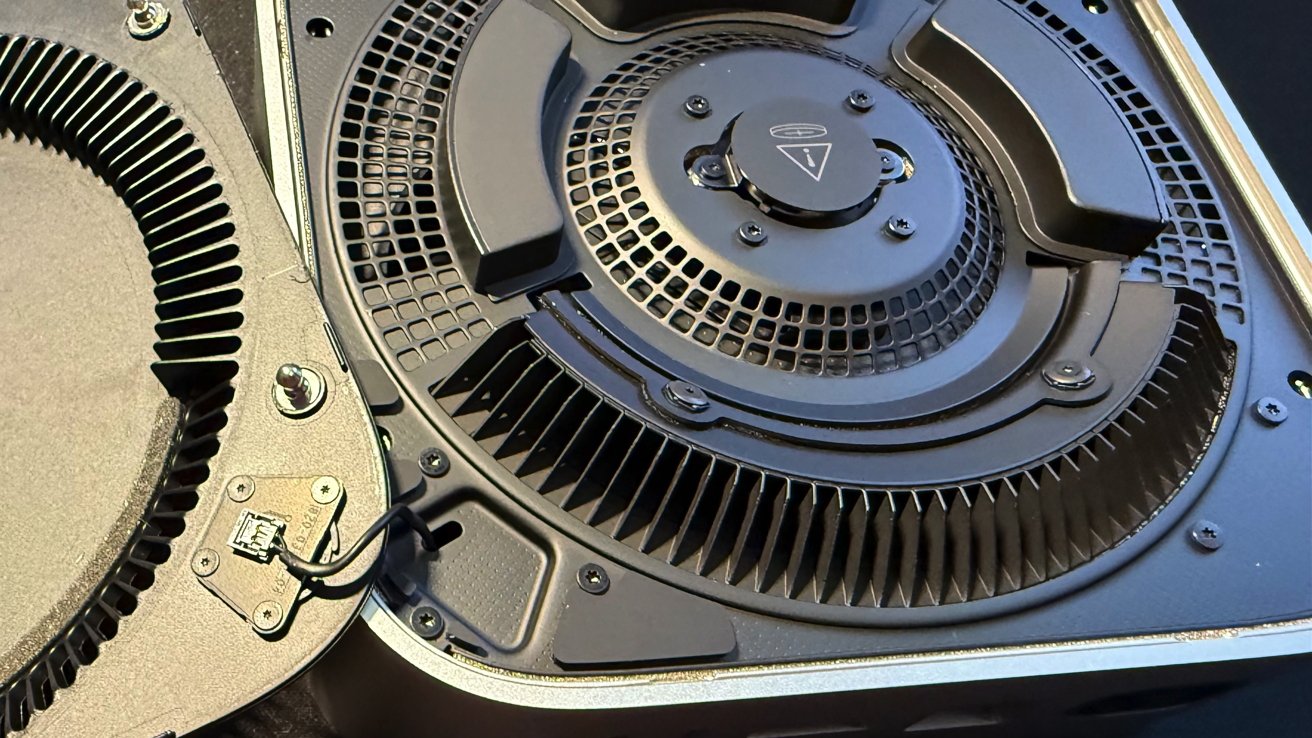

How to fix weak Wi-Fi on an M4 Mac mini when connected to a drive or dock

Sporadic reports on social media say that hooking up an external hard drive to the new Mac mini is dramatically cutting Wi-Fi speeds. Here's why it's happening, and how to reduce the impact of it, or stop it entirely.

The new M4 Mac mini Wi-Fi module is marked by that exclamation point

Hours after the new Mac mini hit customers' desks, some users started complaining that when they hooked up a hard drive or SSD to the unit, they'd see Wi-Fi speeds drop, or be cut entirely. The reports were almost always followed up with the realization that when disconnected, the Wi-Fi speeds would go back to normal.

I've been looking into this for a few months, since I did the first reviews of the hardware here at AppleInsider. While there are an incredible number of variables, I can confirm that I've seen this.

But, it's not every drive, dock, or cable that induces the problem.

Let's look at why Wi-Fi speeds can be hit by attaching something like a hard drive, and what you can do about it.

What can cause Wi-Fi signal strength drops

While I'm not going to get into the physics of it, or too much into the inverse square law, Wi-Fi, like any form of electromagnetic radiation, can weaken -- or be attenuated -- between the transmitter and the recipient. In this case, those are the Wi-Fi base station and the new M4 Mac mini.

Normally, the main attenuator is building materials. As a general rule, the denser the material, the more attenuation. Folks with very old plaster lath walls, for instance, or cinder block construction, have more issues with attenuation than plywood and drywall.

Another factor is wavelength. For the most part, the 2.4 gigahertz band on a Wi-Fi router is less impacted by materials, with the 5 gigahertz band hit more.
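Wavelength follows directly from frequency: wavelength equals the speed of light divided by frequency. This short Python sketch computes the free-space wavelength of the two bands. The general rule of thumb that longer wavelengths lose less energy passing through walls is consistent with the 2.4 gigahertz band being less affected, though real-world attenuation depends heavily on the specific material.

```python
SPEED_OF_LIGHT = 299_792_458  # metres per second, in a vacuum


def wavelength_cm(freq_ghz: float) -> float:
    """Free-space wavelength in centimetres for a frequency given in gigahertz."""
    return SPEED_OF_LIGHT / (freq_ghz * 1e9) * 100


for band in (2.4, 5.0):
    print(f"{band} GHz -> {wavelength_cm(band):.1f} cm")
```

The 2.4 gigahertz band works out to roughly 12.5 cm and the 5 gigahertz band to roughly 6 cm, about half as long.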

And, RF interference can cause a problem too. This is the disruption of radio signals by other electronic devices emitting electromagnetic radiation in the same frequency range as the broadcast. It's the main reason why, back in the day, some baby monitors would crackle badly when near an early Wi-Fi router, or why a wireless house phone would sometimes knock a base station off the air entirely.

And, RF interference is why you should avoid putting a Wi-Fi router near a microwave oven.

What we're dealing with in the case of the Wi-Fi speed drops with a drive attached to an M4 Mac mini is a combination of Apple's design, materials, and RF interference.

Apple and peripheral design

The case of the M4 Mac mini is aluminum, with a plastic lower case. There are no breaks in the aluminum for the Wi-Fi antenna, so Apple has placed it underneath that very thin plastic base of the unit.

For the most part, this is fine. The desks and surfaces a Mac mini is generally placed on are wood, which causes almost no Wi-Fi attenuation.

Since that plastic is so thin on the base, things get a little dicier when the unit is placed on top of a metal-enclosure hard drive, or dock, for instance.

After a month of testing the first docks to arrive, mostly from iffy brands cranked out quickly after release, I found that putting the Mac mini on top of a dock dramatically cuts the Wi-Fi signal the unit receives, with the impact varying mostly with how much metal surrounds the Wi-Fi module or sits close to it.

A number of docks are about to ship after the 2025 Chinese New Year, and AppleInsider as a whole will be looking at this more as we go. Our dock reviews will address Wi-Fi attenuation.

A shorter-term solution for any of these docks is to not put them underneath the Mac mini. They fit just as well on top of the unit as they do underneath it.

Mac mini RF interference with low-quality cabling

This has been trickier to test. Initially, I didn't have any problems at all with Wi-Fi attenuation when just plugging in a cable.

Ultimately I found a combination of an off-brand USB-A to USB-B cable, paired with an inexpensive USB-C to female USB-A adapter that could cause the problem. It didn't matter which enclosure I used -- when paired with the cursed cable combo, I could reliably get Wi-Fi signal attenuation when in use.

The cursed cable combo in question. Note the gap between the adapter and cable plastic body

I also tried this same cable combo on an M2 Mac mini and a Mac Studio. Both had Wi-Fi attenuation with the adapter and cable.

Both were less impacted than the new Mac mini, though. This is likely because of a combination of the Wi-Fi module being farther away and thicker case materials on both.

A similar problem showed up on an Asus NUC that I tried. The interference also happened on a PC tower that I have for gaming, but to a lesser degree, because the cable was plugged in much farther from the Wi-Fi antenna than is possible on the Mac mini.

I talked about the inverse square law above. The flip side of the inverse square law is that the farther away you can put a source of interference from an RF transmitter, the less interference you'll get.

This practically manifests in the cable combo's lesser interference with the PC tower, and the Asus NUC, since the Wi-Fi antenna in both cases was farther away from the source of that RF interference.
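The inverse square law says that, for an idealized point source in free space, intensity falls with the square of distance: doubling the distance leaves a quarter of the power, about 6 dB less. The Python sketch below tabulates that relationship. Treat it as a direction rather than precise numbers: a cable or adapter a few millimeters from an antenna is not an ideal point source, and coupling at such short distances doesn't follow the law exactly.

```python
import math


def relative_intensity(r: float, r_ref: float = 1.0) -> float:
    """Intensity at distance r relative to distance r_ref for an ideal point source (1/r^2)."""
    if r <= 0 or r_ref <= 0:
        raise ValueError("distances must be positive")
    return (r_ref / r) ** 2


def to_db(ratio: float) -> float:
    """Express a power ratio in decibels."""
    return 10 * math.log10(ratio)


for r in (1, 2, 4, 10):
    ratio = relative_intensity(r)
    print(f"{r:>2}x distance: {ratio:.4f} of the original ({to_db(ratio):.1f} dB)")
```

Each doubling of distance costs roughly another 6 dB, which is why even a small gap between an interference source and the Wi-Fi module can help.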

So, this is a clear case of RF interference generated by the cable combo. It was exacerbated by putting the Mac mini on top of the metal hard drive enclosure, adding material attenuation to the problem.

How to stop getting Wi-Fi signal cuts on the M4 Mac mini

In my previous life, working in part in exposure control on a US Navy submarine, we had a mantra: Time, distance, shielding.

The first term, time, doesn't apply so much here. This was about minimizing personnel exposure times when it was necessary to be exposed to ionizing or RF radiation.

Distance does matter here, though. Except in this case, we don't want to reduce exposure to the Wi-Fi signal; we want to increase it. So, when a hard drive enclosure or dock is cutting Wi-Fi strength, keeping some distance from the potential shield, sometimes only millimeters, makes all the difference in the world.

Distance isn't really changeable in the case of the cursed cable combination. That connection has to be close, given the nature of USB.

What's changeable here is the shielding on the cable.

I am frequently mocked for my cable walls in the house. I have two pegboards holding daily-use cables for this job, and a standby bucket.

A sample of one of the cable walls

When I haven't used a cable in a while, it gets bound up with a velcro tie and put in that bucket with a date for purging at some point down the line.

Cables on that wall and in that bucket vary in quality. Sometimes, the cables that are included with a drive or peripheral aren't the greatest.

For example, the USB-A to USB-C adapter I used for the bad cable that I fished from the bottom of that bucket came from a peripheral. The USB-A to USB-B cable is about four years old, and came from a drive that I shucked to put in my storage array.

Put simply, inexpensive cables aren't always your best bet here. Adapters also add another potential point of failure. In this case, they specifically add another possibility for poor shielding to cause a problem.

The wall also holds cables ranging from just-charging to higher-quality Thunderbolt 3, Thunderbolt 4, and USB4 cables. In every case, I was able to eliminate the impact of the cursed cable combo by simply not using it, and using a USB4 or Thunderbolt cable.

Do you have Lego in the house? In a pinch, lift the Mac mini off of a metal enclosure with a single Lego plate to get some distance between the metal and the Wi-Fi module. Or, just keep them side-by-side, instead of in a stack.

Not a flaw in the Mac mini -- but it could have been better

There doesn't appear to be a hardware design flaw, or a software issue, with the new Mac mini causing the Wi-Fi problems when connected to a drive. Arguably, Apple could have shielded the USB-C cable penetrations slightly better, but every design of anything, everywhere, is a series of compromises.

Thinner and smaller designs, like the M4 mini redesign, mean that there is less metal to shield the unit from RF. It also means that the components are in closer proximity than they've been in before, aggravating the impact of sources of interference.

Sometimes, millimeter-separation makes all the difference in the world when you're talking about material shielding, or RF interference. That's the main takeaway here.

Better cables mean better shielding. Moving a metal-cased enclosure away from the RF module on the bottom center of the case helps too.

Combine the two, and your problem is probably solved.


-

What is Display Scaling on Mac, and why you (probably) shouldn't worry about it

Display scaling makes the size of your Mac's interface more comfortable on non-Retina monitors but incurs some visual and performance penalties. We explain these effects and how much they matter.

A MacBook connected to an external monitor.

In a world where Apple's idea of display resolution is different from that of the PC monitor industry, it's time to make sense of how these two standards meet and meld on your Mac's desktop.

A little Mac-ground...

Apple introduced the Retina display to the Mac with the 15-inch MacBook Pro in June 2012, followed by a 13-inch model on the 23rd of October 2012, each packing in four times as many pixels as its predecessor. From that point onward, Apple gradually brought the Retina display to all its Macs with integrated screens.

This was great, but it appeared Apple had abandoned making its standalone monitors, leaving that task to LG in 2016. So, if you needed a standalone or a second monitor, going officially Retina wasn't an option.

However, now Apple has re-entered the external monitor market, and you have to decide whether to pair your Mac with either a Retina display, such as the Pro Display XDR or Studio Display, or some other non-Retina option.

And part of that decision depends on whether you're concerned about matching macOS's resolution standard.

Enter display scaling

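Doubling a display's pixel density in each direction, which packs in four times as many pixels, presents a problem: if you don't adjust anything, every element in the user interface will be half as wide and half as tall, occupying a quarter of its former area. This makes for uncomfortably tiny viewing. So, when Apple introduced the Retina standard, it also scaled up the user interface by a factor of two in each direction, drawing every element with four times as many pixels.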

The result is that macOS is designed for a pixel density of 218 ppi, which Apple's Retina monitors provide. And if you deviate from this, you run into compromises.

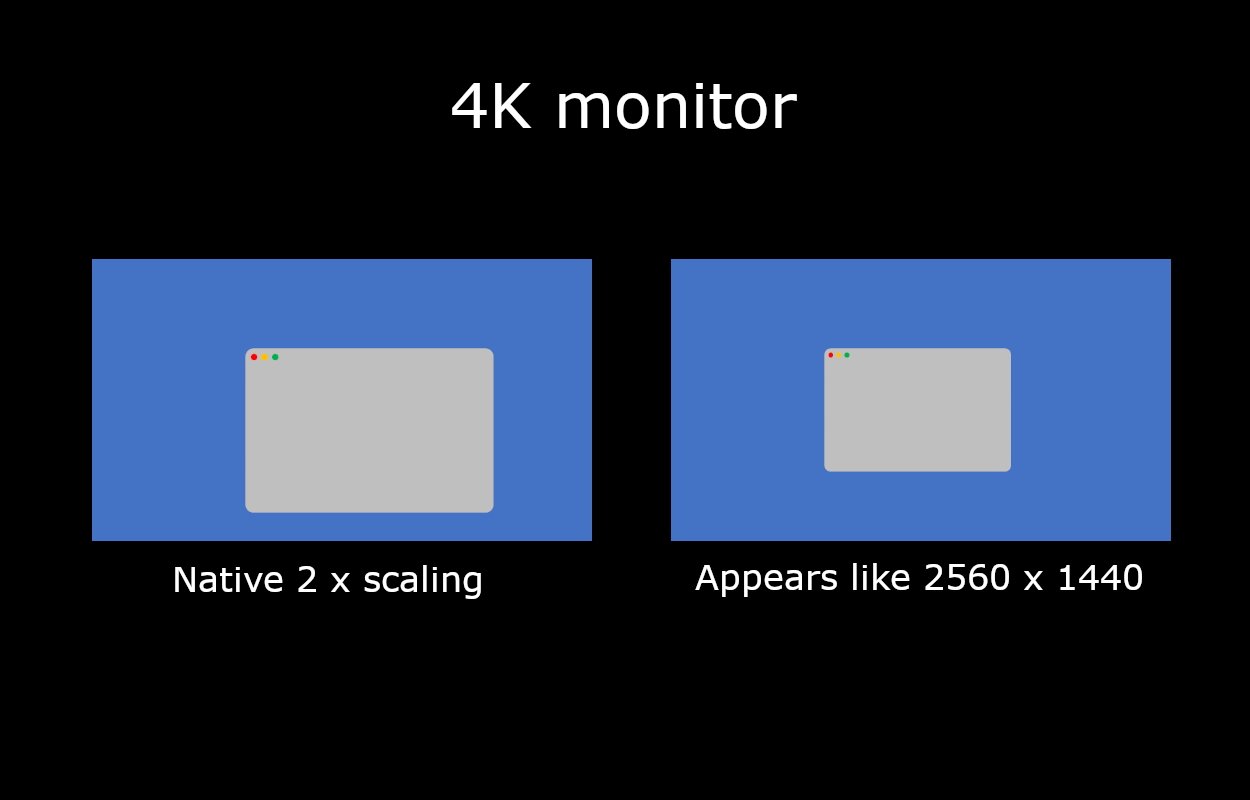

For example, take the Apple Studio Display, which is 27 inches in size. It has a resolution of 5120 x 2880, so macOS will double the horizontal and vertical dimensions of the UI, thus rendering your desktop at the equivalent of 2560 x 1440. Since macOS has been designed for this, everything appears at its intended size.

Then consider connecting a 27-inch 4K monitor to the same Mac. This has a resolution of 3840 x 2160, so the same 2 x scaling factor will result in an interface size the equivalent of 1920 x 1080.

Since both 5K and 4K screens are physically the same size with the same scaling factor, the lower pixel density of the 4K one means that everything will appear bigger. For many, this makes for uncomfortably large viewing.
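To put numbers on why the same 2 x scaling looks bigger on a 4K panel, here is a small sketch. It assumes the nominal 27-inch diagonal for both monitors, and the helper names and the 100-point button are purely illustrative.

```python
import math

def ppi(width_px, height_px, diagonal_in):
    """Pixel density: the panel's diagonal in pixels divided by its diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

def looks_like(width_px, height_px, scale=2):
    """Desktop size ("looks like" resolution) at an integer HiDPI scale factor."""
    return width_px // scale, height_px // scale

def element_width_in(points, width_px, height_px, diagonal_in, scale=2):
    """Physical width of a UI element `points` wide, drawn at `scale` pixels per point."""
    return points * scale / ppi(width_px, height_px, diagonal_in)

for name, (w, h) in [("27-inch 5K", (5120, 2880)), ("27-inch 4K", (3840, 2160))]:
    print(f"{name}: {ppi(w, h, 27):.0f} ppi, looks like {looks_like(w, h)}, "
          f"100-pt button = {element_width_in(100, w, h, 27):.2f} in")
```

This prints roughly 218 ppi for the 5K panel and 163 ppi for the 4K one. At the same 2 x factor, the 100-point button comes out about a third wider on the 4K screen (about 0.92 inches versus 1.23 inches).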

The difference between native scaling and display scaling on a 4K monitor.

If you go to System Settings --> Displays, you can change the scaling. However, if you set a 4K monitor to look like 2560 x 1440, macOS has to handle scaling differently, because 3840 x 2160 divided by 2560 x 1440 is 1.5, not 2. In this case, macOS renders the UI at 2 x into a 5120 x 2880 virtual buffer, then scales that buffer down by a factor of 4/3 (about 1.33) to fit the panel's native 3840 x 2160 pixels.
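The arithmetic behind a scaled mode can be sketched as follows. This assumes the model described above, in which the UI is drawn at 2 x into an off-screen buffer that is then resampled to the panel. The function name `scaled_mode` is hypothetical, and this is not a macOS API.

```python
from fractions import Fraction

def scaled_mode(native, looks_like, hidpi=2):
    """Backing-buffer size and resampling ratios for a scaled display mode.

    Assumes the UI is drawn at `hidpi` pixels per point into an off-screen
    buffer, which is then resampled to the panel's native resolution.
    """
    buffer = (looks_like[0] * hidpi, looks_like[1] * hidpi)
    downsample = Fraction(buffer[0], native[0])    # buffer px per panel px
    ui_scale = Fraction(native[0], looks_like[0])  # panel px per point
    pixel_ratio = Fraction(buffer[0] * buffer[1], native[0] * native[1])
    return buffer, downsample, ui_scale, pixel_ratio

buffer, down, ui, ratio = scaled_mode(native=(3840, 2160), looks_like=(2560, 1440))
print(buffer)                 # (5120, 2880)
print(down, ui)               # 4/3 3/2
print(f"{float(ratio):.2f}")  # 1.78
```

In this mode, the Mac draws 16/9 as many pixels as the panel actually has, and then runs a resampling pass on top of that. How much extra pixel work that adds in practice depends on the workload. At the native 2 x setting ("looks like" 1920 x 1080), the downsample ratio is exactly 1, so no resampling is needed.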

Display scaling -- this method of rendering the screen at a higher resolution and then scaling it down to the panel's native resolution -- is how macOS can render smoothly at many different display resolutions. But it does come with a couple of caveats.

Mac(ular) degeneration

Applying a 2 x scaling factor to the respective native resolutions of both a 5K and a 4K monitor will result in a pixel-perfect image. But display scaling squeezes a 5120 x 2880 buffer, representing a 2560 x 1440 desktop, onto a 3840 x 2160 display. This will naturally produce visual artifacts, because it's no longer a 1:1 pixel mapping: in each direction, every four buffer pixels have to be blended into three physical pixels.
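To show why a non-integer mapping blurs fine detail, here is a one-row sketch. It assumes a simple area-averaging (box) filter. Apple doesn't document the exact resampling filter macOS uses, so treat this only as an illustration. `box_downsample` is a hypothetical helper.

```python
from fractions import Fraction

def box_downsample(row, factor):
    """Area-average one row of buffer pixels onto a narrower row of panel pixels.

    `factor` is buffer pixels per panel pixel, e.g. Fraction(4, 3) for a
    5120-pixel-wide buffer shown on a 3840-pixel-wide panel.
    """
    factor = Fraction(factor)
    n_out = int(len(row) / factor)
    out = []
    for i in range(n_out):
        start, end = i * factor, (i + 1) * factor
        total = Fraction(0)
        j = int(start)  # first buffer pixel touching this panel pixel
        while j < end:
            # Portion of buffer pixel [j, j + 1) that falls inside [start, end)
            overlap = min(end, j + 1) - max(start, j)
            total += overlap * row[j]
            j += 1
        out.append(total / factor)
    return out

# One-pixel black/white stripes drawn into the buffer (0 = black, 1 = white)
stripes = [j % 2 for j in range(12)]

print(box_downsample(stripes, 1))               # unchanged at 1:1
print(box_downsample(stripes, Fraction(4, 3)))  # repeating 1/4, 1/2, 3/4 greys
```

At 1:1, the crisp stripes survive untouched. At 4/3, they turn into a repeating run of 25 %, 50 %, and 75 % grey that spans three physical pixels. This is the kind of softening and moire-like pattern described below.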

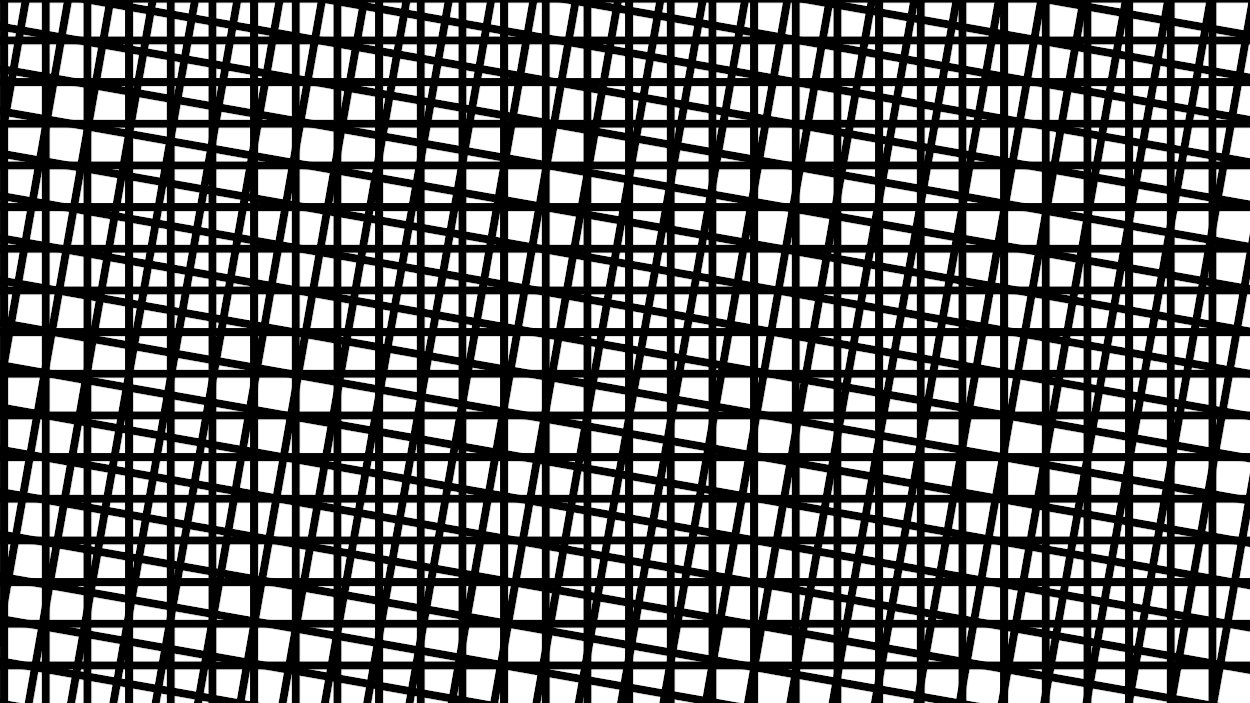

Therefore, display scaling results in deformities in image quality, including blurriness, moire patterns, and shimmering while scrolling. It also removes dithering, so gradients may appear less smooth.

An example of a Moire pattern with two superimposed grids.

Although these visual artifacts are undoubtedly present, the principle of non-resolvable pixels still applies.

The angular resolution, in pixels per degree (ppd), is ppd = 2 × d × r × tan(0.5°), where d is the viewing distance in inches and r is the pixel density in ppi. Rearranging it gives d = ppd / (2 × r × tan(0.5°)), which is the viewing distance beyond which individual pixels can no longer be resolved. For example, at the Retina angular resolution of 63 ppd and the 163 ppi pixel density of a 27-inch 4K monitor, the result is about 22 inches.

This means a person with average eyesight won't see individual pixels on a 4K screen when viewed at this distance and farther.
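The rearranged formula is easy to check numerically. This sketch uses the article's 63 ppd threshold and the 163 ppi and 218 ppi densities of 27-inch 4K and 5K panels. The function names are illustrative.

```python
import math

def viewing_distance_in(ppd, ppi):
    """Distance (inches) at which a `ppi` panel delivers `ppd` pixels per degree.

    Rearranged from ppd = 2 * d * ppi * tan(0.5 degrees).
    """
    return ppd / (2 * ppi * math.tan(math.radians(0.5)))

def pixels_per_degree(distance_in, ppi):
    """Angular resolution of a `ppi` panel viewed from `distance_in` inches."""
    return 2 * distance_in * ppi * math.tan(math.radians(0.5))

print(f"{viewing_distance_in(63, 163):.1f}")  # 22.1 (27-inch 4K)
print(f"{viewing_distance_in(63, 218):.1f}")  # 16.6 (27-inch 5K)
```

Since distance scales inversely with pixel density, the 5K panel reaches the same threshold roughly five and a half inches closer.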

So, you may not notice any deformities, depending on the viewing distance, your eyesight, and the quality of the display panel. (If your nose is pressed against the screen while you look for visual artifacts, you may be looking too closely.)

He ain't heavy, he's my buffer

The other caveat of display scaling is performance. If your device renders the screen at a higher resolution than the display into a buffer and then scales it down, it needs to use some extra computational resources. But, again, this may not have as significant an impact as you might think.

For example, Geekbench tests on an M2 MacBook Air showed a drop in performance of less than 3 % at a scaled resolution, compared with the native one, when using OpenCL, and less than 1 % when using Metal. On the same machine, Blender performance dropped by about 1.1 %.

How significant this is depends on your usage. If you need to squeeze every last processor cycle out of your Mac, you'd be better off choosing your monitor's native resolution or switching to a Retina display. However, most users won't even notice.

The overall takeaway from display scaling is that it's designed to make rendering on your non-Retina monitor better, not worse. For example, suppose you do detailed visual work where a 1:1 pixel mapping is essential or long video exports where a 1 % time saving is critical. In that case, chances are that you already own a standalone Retina display or two. Otherwise, you can use display scaling on a non-Retina monitor without noticing any difference.


-

M4 Mac minis in a computing cluster is incredibly cool, but not hugely effective

There is a way to use a collection of M4 Mac minis in a cluster, but the benefits only really exist when you use high-end Macs.

A cluster of M4 Mac minis - Image credit: Alex Ziskind/YouTube

While most people think that getting a more powerful computer means buying a single expensive device, there are other ways to perform large amounts of number crunching. One concept that has been around for decades is using multiple computers to share the processing on a project.

The concept of cluster computing revolves around sharing a task with lots of calculations between two or more processing units. Because the units work together in parallel, the time needed to finish the task can be cut dramatically.
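The basic pattern is to split the data, have each node work on its own share, and then combine the partial results. Here is a minimal single-machine sketch of that pattern. The function names are hypothetical, this is not MLX's API, and the "nodes" here run one after another instead of on separate Macs.

```python
def scatter(data, n_nodes):
    """Split `data` into `n_nodes` contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(data), n_nodes)
    chunks, start = [], 0
    for rank in range(n_nodes):
        end = start + size + (1 if rank < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def node_work(chunk):
    """Work done on one node: a sum of squares over its share of the data."""
    return sum(x * x for x in chunk)

def all_reduce_sum(partials):
    """Combine every node's partial result, like an MPI sum reduction."""
    return sum(partials)

data = list(range(100_000))
# Five simulated nodes, standing in for the five Mac minis; here they run one
# after another, whereas a real cluster would run them at the same time.
partials = [node_work(chunk) for chunk in scatter(data, 5)]
total = all_reduce_sum(partials)
print(total == node_work(data))  # True: same answer as a single machine
```

On a real cluster, the speedup comes from running the `node_work` calls at the same time. Shipping chunks and results between machines is the overhead that fast interconnects try to keep small.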

In a video published to YouTube on Sunday, Alex Ziskind demonstrates a cluster computing setup using the M4 Mac mini. Using a collection of five Mac minis stacked in a plastic frame, he sets a task that is then distributed between them for processing.

While typical home cluster computing setups rely on Ethernet networking for communications between the nodes, Ziskind is instead taking advantage of the speed of Thunderbolt by using Thunderbolt Bridge. This speeds up communication between the nodes considerably and allows larger packets of data to be sent, which reduces processing overhead.
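To get a feel for what the extra bandwidth means, this sketch computes ideal transfer times over the link rates commonly quoted for Gigabit Ethernet, 10Gb Ethernet, Thunderbolt 4, and Thunderbolt 5. The 10 GB payload is a hypothetical figure. Real Thunderbolt Bridge throughput will be lower than the raw line rate because of protocol overhead.

```python
# Nominal link rates in gigabits per second
LINKS_GBPS = {
    "Gigabit Ethernet": 1,
    "10Gb Ethernet": 10,
    "Thunderbolt 4 bridge": 40,
    "Thunderbolt 5 bridge": 80,
}

def transfer_seconds(gigabytes, gbps):
    """Ideal time to move `gigabytes` over a `gbps` link (8 bits per byte, no overhead)."""
    return gigabytes * 8 / gbps

payload_gb = 10  # hypothetical: e.g. a chunk of model weights or intermediate results
for name, gbps in LINKS_GBPS.items():
    print(f"{name:>22}: {transfer_seconds(payload_gb, gbps):5.1f} s")
```

Under these idealized assumptions, a transfer that takes 80 seconds over Gigabit Ethernet drops to 2 seconds over a 40Gb/s link.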

Ethernet can run at 1Gb/s normally, or up to 10Gb/s if you paid for the Ethernet upgrade in some Mac models. The Thunderbolt Bridge method can instead run at 40Gb/s for Thunderbolt 4 ports, or 80Gb/s on Thunderbolt 5 in M4 Pro and M4 Max models when run bi-directionally.

Better than GPU processing

Ziskind points out that there can be benefits to using Apple Silicon rather than a PC using a powerful graphics card for cluster computing.

For a start, processing using a GPU relies on having considerable amounts of video memory available. On a graphics card, this could be 8GB on the card itself, for example.

Apple's use of Unified memory on Apple Silicon means that the Mac's memory is used by the CPU and the GPU. The Apple Silicon GPU therefore has access to a lot more memory, especially when it comes to Mac configurations with 32GB or more.

Then there's power draw, which can be considerable for a graphics card. High power usage translates into a higher ongoing cost of operation.

By contrast, the Mac minis were found to use very little power, and a cluster of five Mac minis running at full capacity used less power than one high-performance graphics card.

MLX, not Xgrid

To get the cluster running, Ziskind used a project we've already talked about. It uses MLX, an Apple open-source project described as an "array framework designed for efficient and flexible machine learning research on Apple Silicon."

This is vaguely reminiscent of Xgrid, Apple's long-dead distributed computing solution, which could control multiple Macs for cluster computing. That system also allowed a Mac OS X Server to take advantage of workgroup Macs on a network to perform processing when they weren't being used for anything else.

However, while Xgrid worked for large-scale operations that were very well funded at a corporate or federal level, as AppleInsider's Mike Wuerthele can attest to, it didn't translate well to smaller projects. Under perfect and specific conditions, with specific code, it worked fantastically, but home-made clusters tended not to perform very well, and were sometimes slower than a single computer doing the work.

MLX does change that quite a bit, as it uses MPI (Message Passing Interface), the standard distributed computing methodology. It can also be run on a few Macs of varying performance, without necessarily shelling out for hundreds or thousands of them.

Unlike Xgrid, MLX seems to be geared a lot more towards smaller clusters, which suits the crowd that wanted to use Xgrid but kept running into trouble.

A useful cluster for the right reasons

While combining the performance of multiple Mac minis in a cluster seems attractive, it's not something that everyone can benefit from.

For a start, you're not going to see benefits for typical Mac uses, like running an app or playing a game. This is intended for processing massive data sets or for high intensity tasks that benefit from parallel processing.

This makes it ideal for purposes like creating LLMs for machine learning research, for example.

It's also not exactly easy to use for the typical Mac user.

Also, the performance gains aren't necessarily going to be that beneficial for the usual Mac owner. In Ziskind's tests, simply buying an M4 Pro model offered more performance with LLMs than two M4 units working together.

Clusters can be really worth it when using multiple high-spec Macs together

Where a cluster like this comes into play is when you need more performance than you can get from a single powerful Mac. If a model is too big to run on a single Mac, for example because of memory constraints, a cluster can offer more total memory for the model to use.
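A back-of-the-envelope sketch shows how pooled memory decides whether a model fits. All of the figures here are hypothetical: the model sizes, the bytes per parameter, and especially the assumed 75 % of each machine's RAM being usable for weights. The estimate also ignores working memory such as caches and activations, and it ignores how a given framework actually splits a model.

```python
import math

def nodes_needed(params_billion, bytes_per_param, node_mem_gb, usable_fraction=0.75):
    """Rough count of machines whose pooled memory can hold a model's weights.

    Assumption: only `usable_fraction` of each machine's RAM is available for
    weights; the rest goes to macOS and other working memory.
    """
    weights_gb = params_billion * bytes_per_param  # 1e9 params x bytes = GB
    usable_per_node = node_mem_gb * usable_fraction
    return math.ceil(weights_gb / usable_per_node)

# Hypothetical 70-billion-parameter model at 4-bit (0.5 bytes) and 16-bit (2 bytes)
for bpp in (0.5, 2):
    print(bpp, nodes_needed(70, bpp, 16), nodes_needed(70, bpp, 128))
```

On these assumptions, the 4-bit model would need three 16GB machines but only a single 128GB one. The 16-bit version would need twelve 16GB machines or two 128GB ones. This lines up with the point that one high-memory Mac often beats a stack of smaller ones.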

Ziskind suggests that, at this stage, a high-end M4 Max Mac with vast amounts of memory is better than a cluster of lower-performance machines. But even so, if your requirements somehow go beyond the highest single Mac configuration, a cluster can help out here.

However, there are still some limitations to consider. While Thunderbolt is fast, Ziskind had to resort to using a Thunderbolt hub to connect the nodes to the host Mac, which reduced the available bandwidth.

Directly connecting the Macs together solved this, but that runs into other limits, such as the number of Thunderbolt ports available for linking multiple Macs. This can make scaling the cluster problematic.

He also ran into thermal oddities, where the host Mac mini was running especially hot, while nodes ran at a more reasonable level.

Ultimately, Ziskind found the Mac mini cluster tower experiment was interesting, but he doesn't intend to use it long-term. However, it's still relatively early days for the technology, and in cases where you use multiple high-end Macs for a sufficiently tough model, it can still work very well.


-

Is Apple Intelligence artificial?

In the days before WWDC, it was widely believed that Apple would be playing catch-up to everyone else in the consumer tech industry by rushing to bolt a copy of ChatGPT onto its software and checking off the feature box of "Artificial Intelligence." That's clearly not what happened.

Apple Intelligence is on the way.

Rather than following the herd, Apple laid out its strategy for expanding its machine learning capabilities into the world of generative AI, covering writing, graphic illustrations, and iconic emoji-like representations of ideas dreamed up by the user. Most of this could occur on device or, in some cases, be delegated to a secure cloud instance private to the individual user.

In both cases, Apple Intelligence, as the company coined its product, would be deeply integrated into the user's own context and data. This is instead of it just being a vast Large Language Model trained on all the publicly scrapeable data some big company could appropriate in order to generate human-resembling text and images.

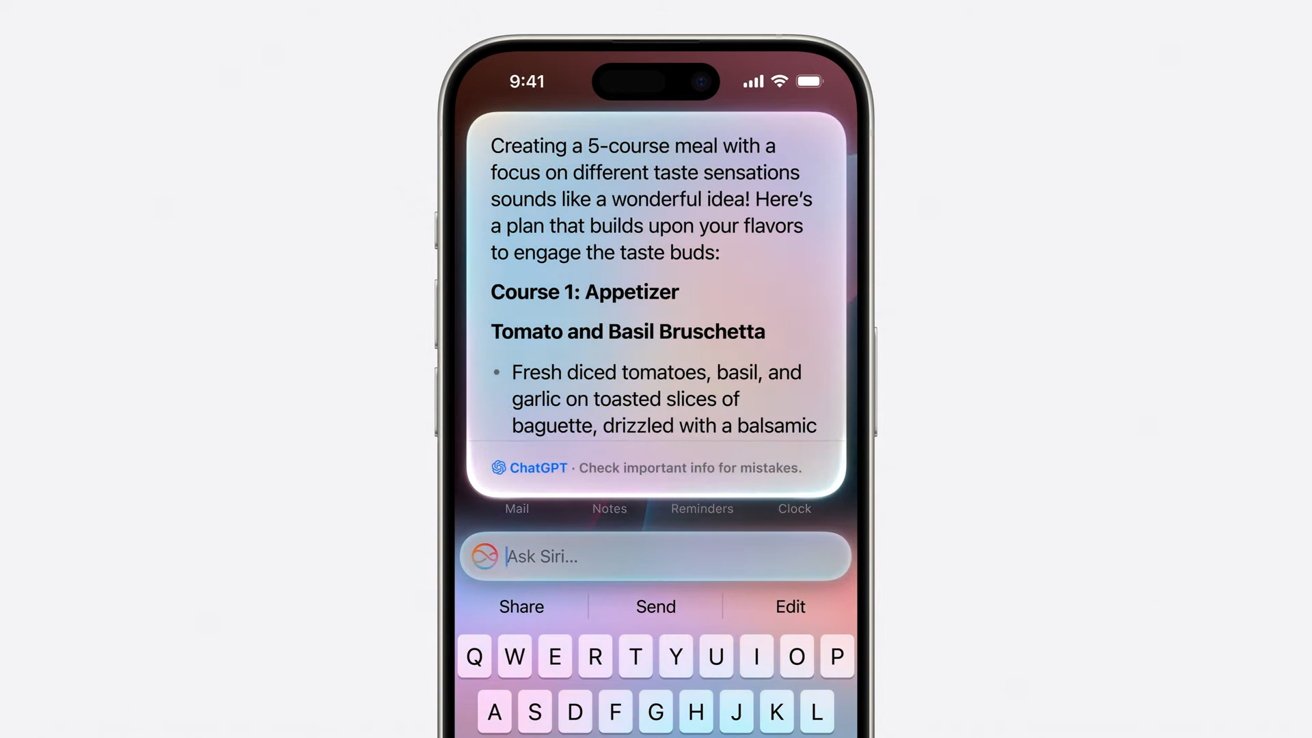

At the same time, Apple also made it optional for iOS 18 and macOS Sequoia users to access such a vast LLM from OpenAI. It did this by letting users grant permission to send requests to ChatGPT (GPT-4o), and even take advantage of any subscription plan they'd already bought to cover such power-hungry tasks without limits.

Given that Apple delivered something vastly different than the AI tech pundits predicted, it raises the question: is it possible that Apple Intelligence isn't really delivering true AI, or is it more likely that AI pundits were really failing to deliver Apple intelligence?

The Artificial Intelligence in the tech world

Pundits and critics of Apple long mused that the company was desperately behind in AI. Purportedly, nobody at Apple had ever thought about AI until the last minute, and the cash-strapped, beleaguered company couldn't possibly afford to acquire or create its own technology in house.

Obviously, they told us for months, the only thing Apple could hope to do is crawl on its knees to Google to offer its last few coins in exchange for the privilege of bolting Google's widely respected Gemini LLM onto the side of the Mac to pretend it was still in the PC game.

It could also perhaps license Copilot from Microsoft, which had just shown off how far ahead the company was in rolling out a GPT-like chat-bot of its own. Microsoft had also paid AI leader OpenAI extraordinary billions to monopolize any commercial productization of its work, with the actual ChatGPT leading the industry in chat-bottery.

Apple does allow users to use ChatGPT on their iPhone if they want.

A few months ago, when OpenAI's unique non-profit status appeared on the verge of institutional collapse, Microsoft's CEO was hailed as a genius for offering to gut the startup for brains and leadership by hiring its people out from under its stifling non-profit chains.

That ultimately didn't materialize, but it did convince everyone in the tech world that building an AI chat bot would cost incredible billions to develop, and further that the window of opportunity to get started on one had already closed and one way or another, Microsoft had engineered a virtual lock on all manner of chat-bot advancements promised to drive the next wave of PC shipments.

Somehow, at the same time, everyone else outside of Apple had already gained access to an AI chat-bot, meaning that just Apple and only Apple would be behind in a way that it could never hope to ever gain any ground in, just like with Apple Maps.

Or like the Voice First revolution led by Amazon's Alexa that would make mobile apps dry up and blow away while we delightfully navigate our phones with the wondrous technology underlying a telephone voicemail tree.

Or the exciting world of folding cell phones that turned into either an Android tablet or just a smaller but much thicker thing to stuff in your pocket because iPhones don't fit in anyone's pockets.

Or smart watches, music streaming, or the world of AR/VR being exclusively led by Microsoft's HoloLens in the enterprise and Meta and video game consoles among consumers. It keeps going but I think I've exhausted the limits of sarcasm and should stop.

Anyway, Apple was never going to catch up this time.

Apple catches up and was ahead all along

Just as with smart watches and Maps and Alexa and HoloLens, it turns out that being first to market with something doesn't mean you're really ahead of the curve. It often means you're the beginning of the upward curve.

If you haven't thought out your strategy beyond rushing to launch desperately in order to be first, you might end up a Samsung Gear or vastly expensive Voice First shopping platform nobody uses for anything other than changing the currently playing song.

It might be time to lay off your world-leading VR staff and tacitly admit that nobody in management had really contemplated how HoloLens or the Meta Quest Pro could possibly hope to breathe after Apple walked right in and inhaled the world's high end oxygen.

There's reason to believe that everyone should have seen this coming. And it's not because Apple is just better than everyone else. It's more likely because Apple looks down the road further and thinks about the next step.

That's obvious when looking at how Apple lays its technical foundations.

Siri was Apple's first stab at offering an intelligent agent to consumers.

Pundits and an awful lot of awful journalists seem entirely uninterested in how anything is built, caring only about the talking points used to market some new thing. Is Samsung promising that everyone will want a $2000 cell phone that magically transforms into an Android tablet with a crease? They were excited about that for years.

When Apple does all the work behind the scenes to roll out its unique strategic vision for how something new should work, they roll their eyes and say it should have been finished years ago. It's because people who have only delivered a product that is a string of words representing their opinions imagine that everything else in the world is also just an effortless salad tossing of their stream of consciousness.

They don't appreciate that real things have to be planned and designed and built.

And while people who just scribble down their thoughts actually believed that Apple hadn't imagined any use for AI before last Monday, and so would just deliver a button crafted by marketing to invoke the work delivered by the real engineers at Google, Microsoft, or OpenAI, the reality was that Apple had been laying the foundations of a system-wide intelligence via AI for years, right out in public.

Apple Intelligence started in the 1970s

Efforts to deliver a system wide model of intelligent agents that could spring into action in a meaningful way relevant to regular people using computers began shortly after Apple began making its first income hawking the Apple I nearly 50 years ago.

One of the first things Apple set upon doing in the emerging world of personal computing is fundamental research into how people used computers, in order to make computing more broadly accessible.

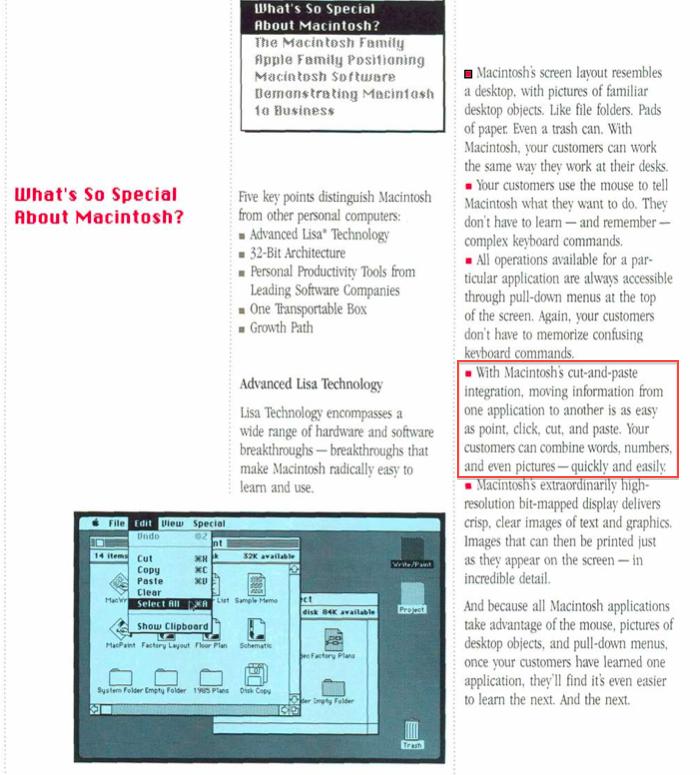

That resulted in the Human User Interface Guidelines, which explained in intricate detail how things should work and why. They did this first in order for regular people to be able to make sense of computing at a time when you had to be a full time nerd hacker in order to load a program off tape and enable it to begin to PEEK and POKE at memory cells just to generate specific characters on your CRT display.

Apple Intelligence started with Lisa Technology

In Apple's HUIG, it was mandated that the user needed consistency of operation, the ability to undo mistakes, and a direct relationship between actions and results. This was groundbreaking in a world where most enthusiasts only cared about megahertz and BASIC. A lot of people, including developers, were upset that Apple was asserting such control over the interface and dictating how things should work.

Those that ignored Apple's guidelines have perished.

Not overnight, but with certainty, personal computing began to fall in line with the fundamental models of how things should work, largely created by Apple. Microsoft based Windows on it and eventually even the laggards of DOS came kicking and screaming into the much better world of graphical computing first defined by the Macintosh, where every application printed the same way, and copy/pasted content and open/saved documents using the same shortcuts, and where Undo was always right there when you needed it.

An era later, when everyone in the tech world was convinced that Microsoft had fully plundered Apple's work and that the Macintosh would never again be relevant and that Windows would now take over and become the interface of everything, Apple completely refreshed the Mac and made it more like the PC (via Intel) and less like the PC (with OS X). Apple incrementally clawed its way back into relevance, to the point where it could launch the next major revolution in personal computing: the truly usable mobile smartphone.

Apple crushed Microsoft's efforts at delivering a Windows Phone by stopping once again to determine -- after lengthy research -- not just how to make the PC "pocket sized," but how to transform it so that regular people could figure it out and use it productively as a mobile tool.

iPhone leaped over the entire global industry of mobile makers, not because it was just faster and more sophisticated, but largely because it was easy to use. And that was a result of a lot of invisible work behind the scenes.

Windows Phone failed in the face of iPhone

Pundits liked to chalk this up to Apple's marketing, as if the company had pulled some simple con over the industry and tricked us all into paying too much for a cell phone. The reality was that Apple put tremendous work into designing a new, mobile Human User Interface that your mom-- and her dad-- could use.

Across the same decades of way-back-when, Apple and the rest of the industry were also employing elements of AI to deliver specific features. At the height of Apple's actual beleaguerment in the early '90s, the company strayed from its focus on building products for real people and instead began building things that were just new.

Apple's secret labs churned out ways to relate information together in networks of links, how to summarize long passages of text into the most meaningful synopsis, how to turn handwriting into text on the MessagePad, and how to generate beautiful ligatures in fonts -- tasks that few saw any real use for, especially as the world tilted towards Windows.

What saved Apple was a strict return to building usable tools for real people, which started with the return of Steve Jobs. The iMac wasn't wild new technology; it was approachable and easy to use. That was also the basis of iPod, iPhone, iPad and Apple's subsequent successes.

At the same time, Apple was also incorporating the really new technology it had already developed internally. VTwin search was delivered as Spotlight, and other language tools and even those fancy fonts and handwriting recognition were morphed into technologies regular people could use.

Rather than trying to copy the industry in making a cell phone with a Start button, or a phone-tablet, or a touch-PC or some other kind of MadLib engineered refrigerator-toaster, Apple has focused on simplicity, and making powerful things easy to use.

In the same way, rather than just bolting ChatGPT on its products and enabling its users to experience the joys of seven-finger images and made-up Wikipedia articles where the facts are hallucinated, Apple again distilled the most useful applications of generative AI and set to work making this functionality easy to use, as well as private and secure.

This didn't start happening the day Craig Federighi first saw Microsoft Copilot. Apple's been making use of AI and machine learning for years, right out in the open.

It's used in finding people in images, and text throughout the interface for search indexing and even in authenticating via Face ID. Apple made so much use of machine learning that they started building Neural Engine cores into Apple Silicon with the release of iPhone X in 2017.

Apple was talking about privacy and machine intelligence for years

Artificial Intelligence gets a euphemism in AI

Apple didn't really call any of its work with the Neural Engine "AI." Back then, the connotation of "Artificial Intelligence" was largely negative.

It still is.

To most people, especially those of us raised in the '80s, the notion of "artificial" usually pertained to fake flowers, unnatural flavorings, or synthetic food colors that might cause cancer and definitely seemed to set off hyperactivity in children.

Artificial Intelligence was the Terminator's Skynet, and the dreary, bleak movie by Steven Spielberg.

Some time ago when I was visiting a friend in Switzerland, where the three main official languages render all consumer packaging a Rosetta Stone of sorts between French, Italian and German, I noticed that the phrase "Artificially Flavored" on my box of chocolatey cereal was represented with a version of English's "artificial" in the Latin languages, but in German used the word künstlich, which is built on Kunst, meaning "art."

I wondered to myself why German uses "like an artist" rather than the word for "fake" in my native language before suddenly coming to the realization that artificial doesn't literally mean "fake," but rather an "artistic representation."

The box didn't say I was eating fake chocolate puffs; it was instead just using somebody's artistic impression of the flavor of chocolate. It was a revelation to my brain. The negative connotation of artificial was so strongly entrenched that I had never before realized that it was based on the same root as artistic or artifice.

It wasn't fraud, it was a sly replacement with something to trick the eye, or in this case, the tastebuds.

Artificial Intelligence is similarly not just trying to "fake" human intelligence, but is rather an artistic endeavor to replicate facets of how our brains work. In the last few years, the negative "fake" association of artificial intelligence has softened as we have begun to not just rebrand it as the initials AI, but also to see more positive aspects of using AI to do some task, like to generate text that would take some effort otherwise. Or to take an idea and generate an image a more accomplished artist might craft.

The shift in perception on AI has turned a dirty word into a hype cycle. AI is now expected to revive sagging PC sales, speed up the prototyping of all manner of things, and glean meaningful details out of absurd mountains of text or other Big Data. Some people are still warning that AI will kill us all, but largely the masses have accepted that AI might save them from some busy-work.

But Apple isn't much of a follower in marketing. When Apple began really talking about computer "Intelligence" on its devices at WWDC, it didn't blare out the term "Artificial Intelligence." It had its own phrase.

I'm not talking about last week. I'm still talking about 2017, the year Apple introduced iPhone X, and when Kevin Lynch introduced the new watchOS 4 watch face "powered by Siri intelligence."

Phil Schiller also talked about HomePod delivering "powerful speaker technology, Siri intelligence, and wireless access to the entire Apple Music library." Remember Siri Intelligence?

It seems like nobody does, even as pundits were lining up over the last year to sell the talking point that Apple had never had any previous brush with anything related to AI before last Monday. Or, supposedly, before Craig experienced his transformation on Mount Microsoft upon being anointed by the holy Copilot and ripped off all of its rival's work -- apart from the embarrassment of Recall.

Apple isn't quite ashamed of Siri as a brand, but it has dropped the association -- or maybe personification -- of its company wide "intelligent" features with Siri as a product. It has recently decided to tie its intelligence work to the Apple brand instead, conveniently coming up with branding for AI that is both literally "A.I." and also proprietary to the company.

Apple Intelligence also suavely sidesteps the immediate and visceral connotations still associated with "artificial" and "artificial intelligence." It's perhaps one of the most gutsy appropriations of a commonplace idea ever pulled off by a marketing team. What if we take a problematic buzzword, defuse the ugly part, and turn it into a trademark? Genius.

The years of prep-work behind Apple Intelligence

It wasn't just fresh branding that launched Apple Intelligence. Apple's Intelligence (nee Siri) also had its foundations laid in Apple Silicon, long before it was commonplace to be trying to sell the idea that your hardware could do AI without offloading every task to the cloud. Beyond that, Apple also built the tools to train models and integrate machine learning into its own apps-- and those of third party developers-- with Core ML.

The other connection between Apple's Intelligence and Siri: Apple also spent years promoting Siri Intents among its developers. These are effectively footholds developers could build into their code to enable Siri to pull results from their apps in response to the questions users asked Siri.

All this systemwide intelligence Apple was integrating into Siri answers also now enables Apple Intelligence to connect and pool users' own data in ways that are secure and private, without sucking up all their data and pushing it through a central LLM cloud model where it could be factored into AI results.

With Apple Intelligence, your Mac and iPhone can take the infrastructure and silicon Apple has already built to deliver answers and context that other platforms haven't thought to do. Microsoft is just now trying to begin pooping out some kind of AI engines in ARM chips that can run Windows, even though there are still issues with Windows on ARM, most Windows PCs are not running ARM, and little effort in AI has been made outside of just rigging up another chat-bot.

Apple Intelligence stands to be more than a mere chat bot

The entire industry has been chasing cloud-based AI in the form of mostly generative AI based on massive models trained on suspect material. Google dutifully scraped up so much sarcasm and subterfuge on Reddit that its confident AI answers are often quite absurd.

Further, the company tried so hard to force its models to generate inoffensive yet stridently progressive AI that it effectively forgot that the world has been horrific and racist, and instead just dreamed up history where the founding fathers were from all backgrounds and there were no controversies or oppression.

Google's flavor of AI was literally artificial in the worst senses.

And because Apple focused on the most valuable, mainstream uses of AI to demonstrate its Apple Intelligence, it doesn't have to expose itself to the risk of generating whatever the most unpleasant corners of humanity would like it to cough up.

What Apple is selling with today's AI is the same as its HUIG sold with the release of Macintosh: sales of premium hardware that can deliver the value of its work in imagining useful, approachable, clearly functional and easy to demonstrate features that are easy to grasp and use. It's not just throwing up a new AI service in hopes that there will someday be a business model to support it.

For Apple Intelligence, the focus isn't just catching up to the industry's "artificial" generation of placeholder content, a sort of party trick lacking a clear, unique value.

It's the state-of-the-art in intelligence and machine learning.


-

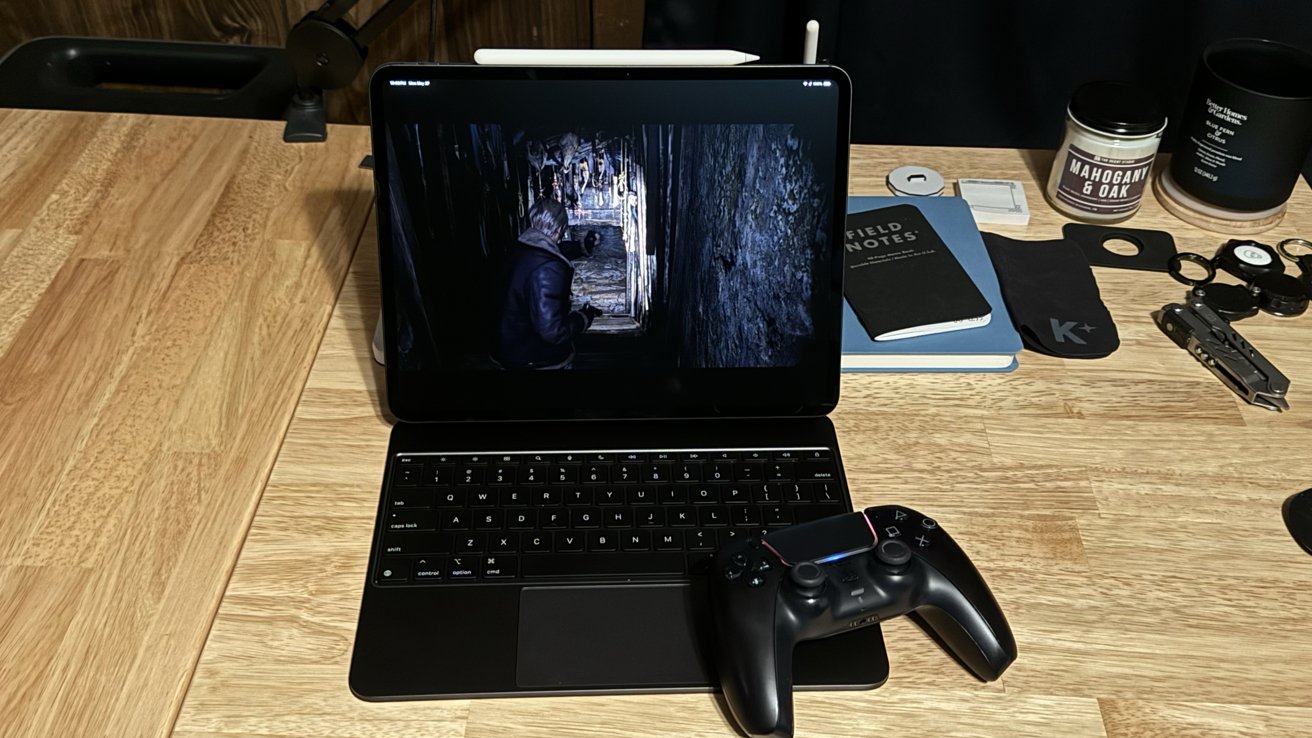

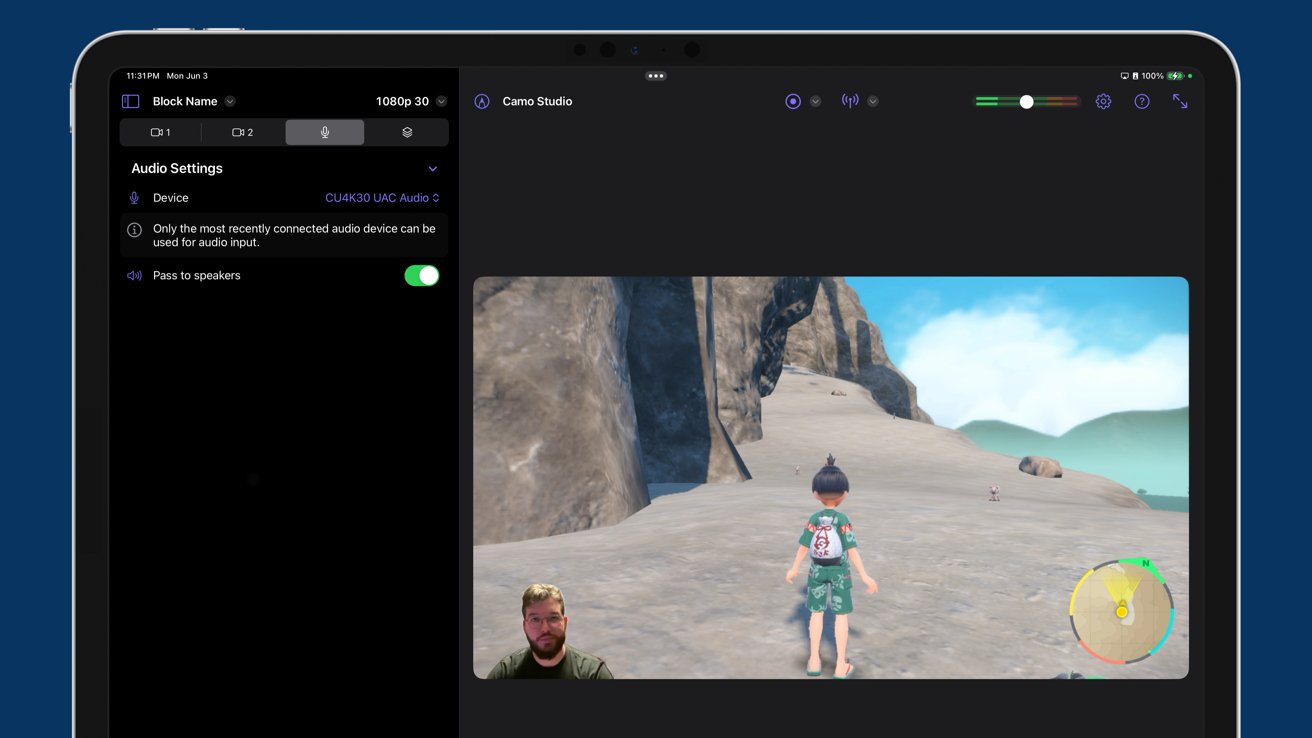

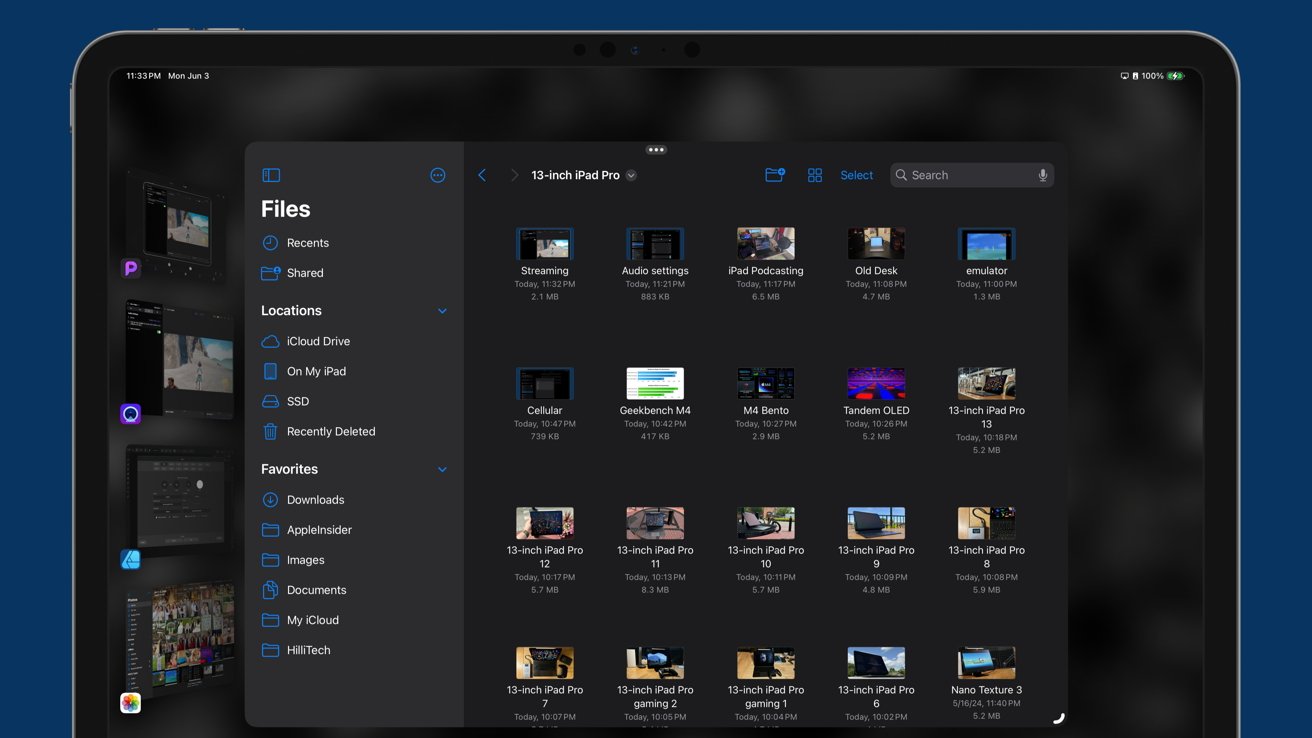

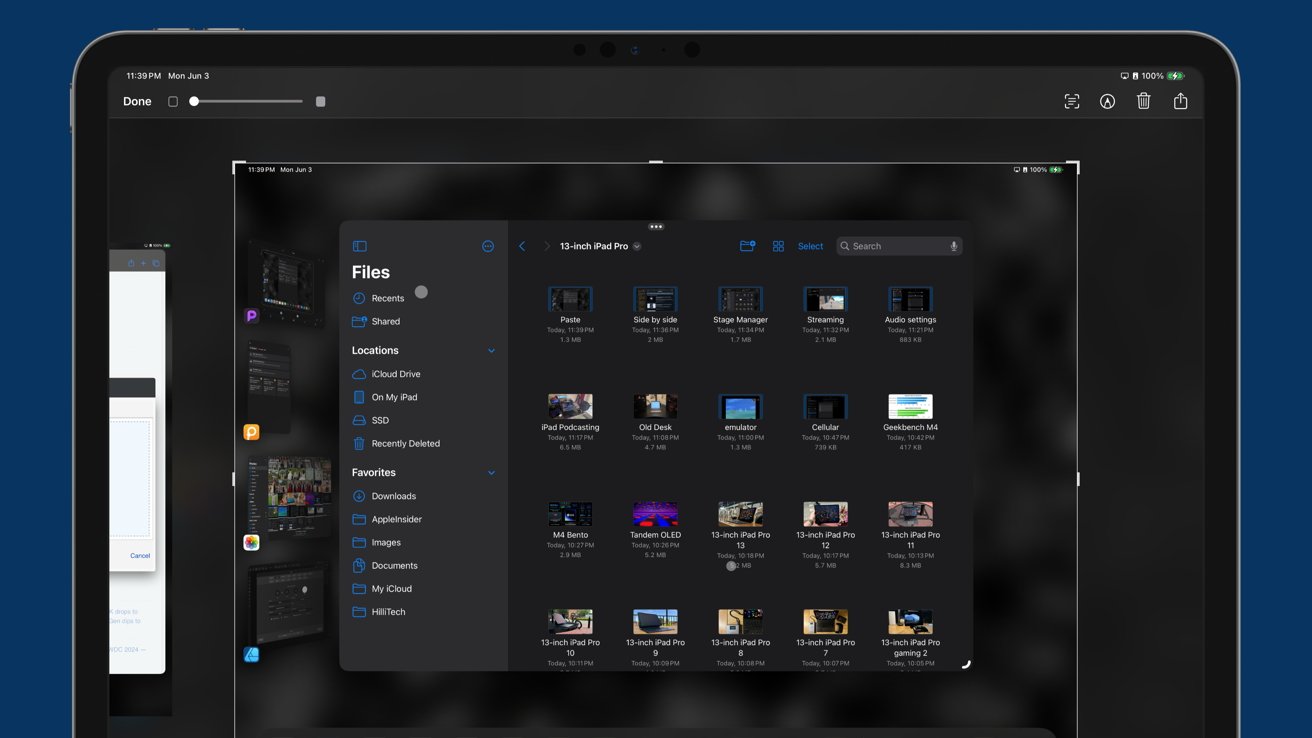

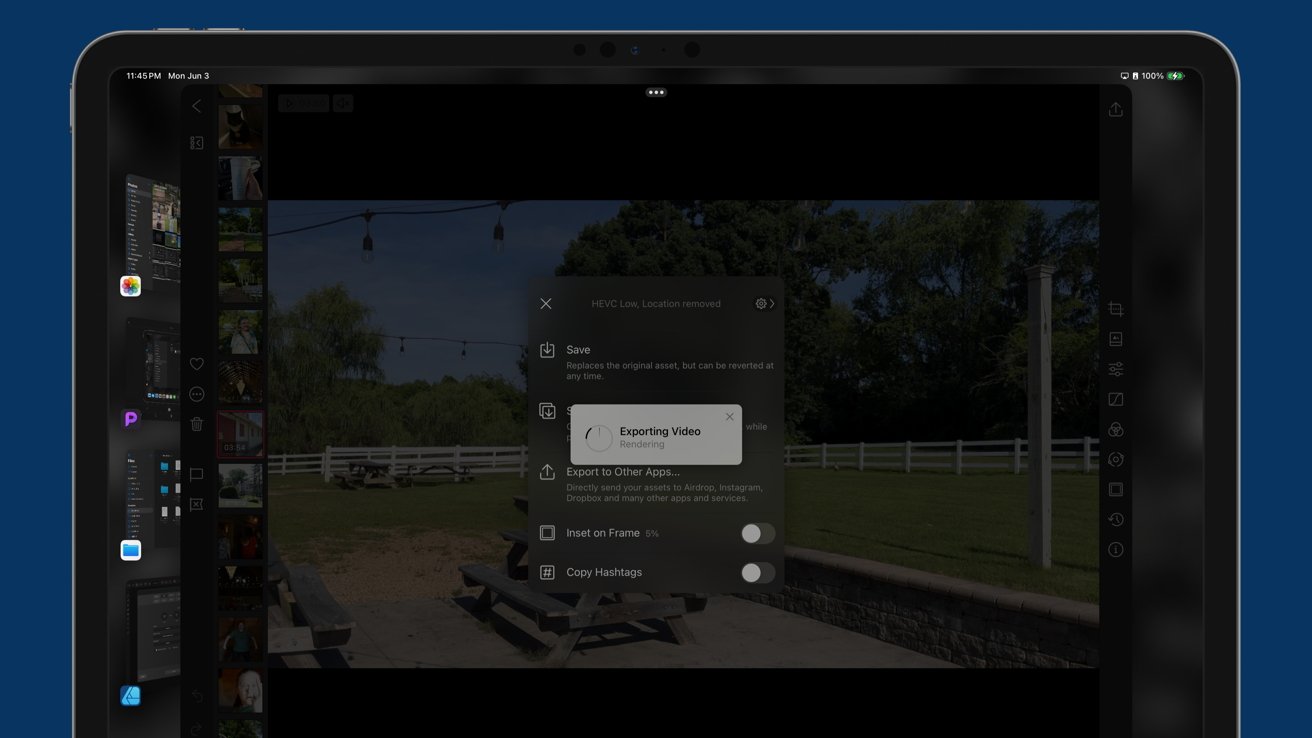

13-inch iPad Pro review: hardware of the future running software of the past

Apple's 13-inch iPad Pro is a testament to the power and efficiency of Apple Silicon, but WWDC has to address at least some of the shortcomings of iPadOS for those hardware upgrades to mean anything.

13-inch iPad Pro review

As Apple shaves away every millimeter from the iPad, it gets closer to realizing the dream of offering information on a sheet of glass. At 5.1 millimeters, there's not much else Apple can do to the hardware without physics getting in the way.

However, the only thing in the way of improving iPad software is Apple and its philosophy surrounding the tablet. While iPad is the perfect work device for some, there are obvious limitations and shortcomings that need to be addressed.

This review is a snapshot of the state of iPad Pro, with new hardware and good-enough software on June 4, 2024. At publication time, WWDC is just days away. There is some hope that Apple will be able to push past good enough and prove why M4 is in iPad Pro.

If these statements feel like deja vu, it's because they are nearly identical to statements made about the iPad Pro when it first received M1. Despite the initial fumble, Apple took a big leap with Stage Manager, but little else.

Window management is table stakes in an operating system running on a so-called pro device.

An iPad Pro conundrum

On review here is the 13-inch iPad Pro with 1TB storage, which includes 16GB of RAM. I chose to add Nano Texture and Cellular.

Let's get one thing straight and incredibly clear at the top -- I love iPad Pro. I've worked primarily from iPad since I got this job in 2019, with a brief stint working from a 14-inch MacBook Pro.

13-inch iPad Pro review: iPad Pro remains a favorite in spite of Apple Vision Pro's futuristic promises

Apple Vision Pro introduces a new bit of complication to my computing lifestyle. One thing is clear -- iPad Pro is my go-to computer for my work, play, and everything in between.

The criticisms I share about iPadOS are from a good place, hoping Apple will one day eliminate these issues. I'd love to see the day when I don't need a Mac for anything beyond screenshots of macOS.

Many people already find themselves in this position with iPad. They can get everything done and don't even notice what may be missing from the platform because it isn't part of their workflows from the start.

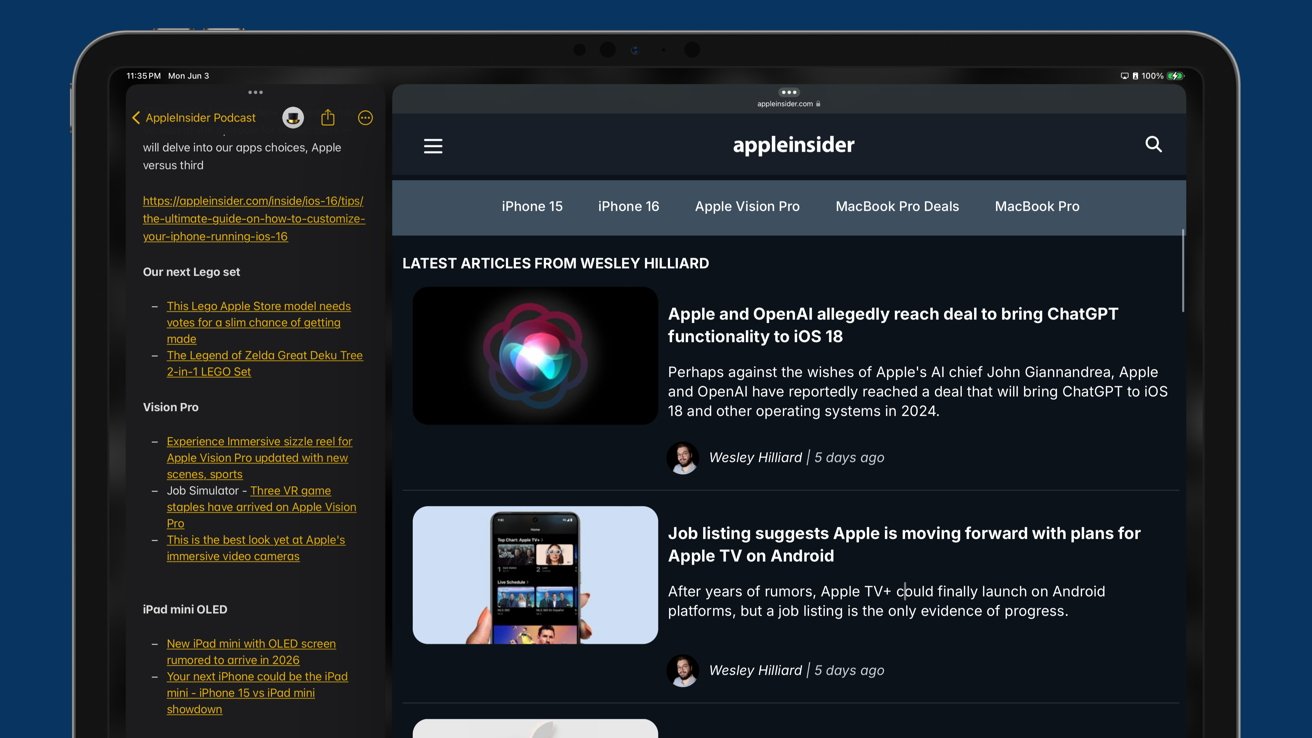

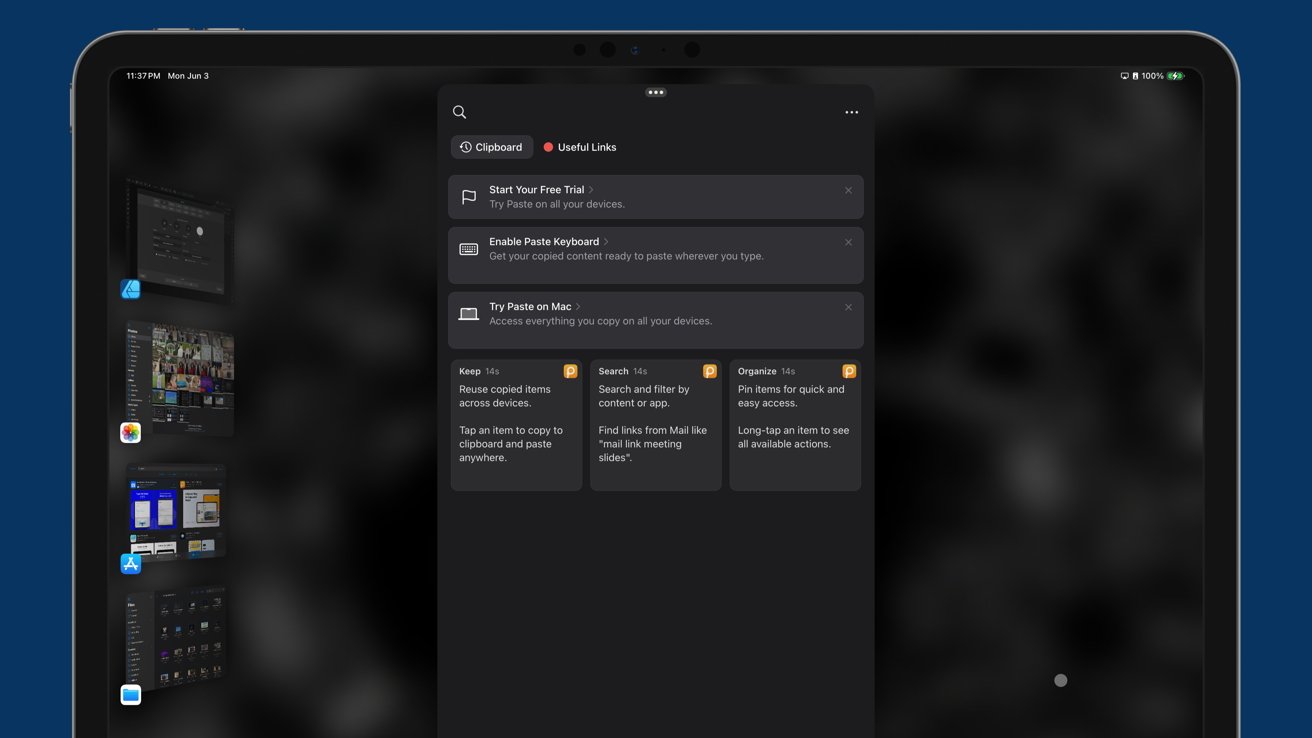

13-inch iPad Pro review: a truly modular platform

The list of things missing gets smaller every year, but it does seem odd which features Apple decides to prioritize and which are ignored perpetually. Podcasting, for example, feels like one that should have been there from the start, given its importance to the Apple brand.

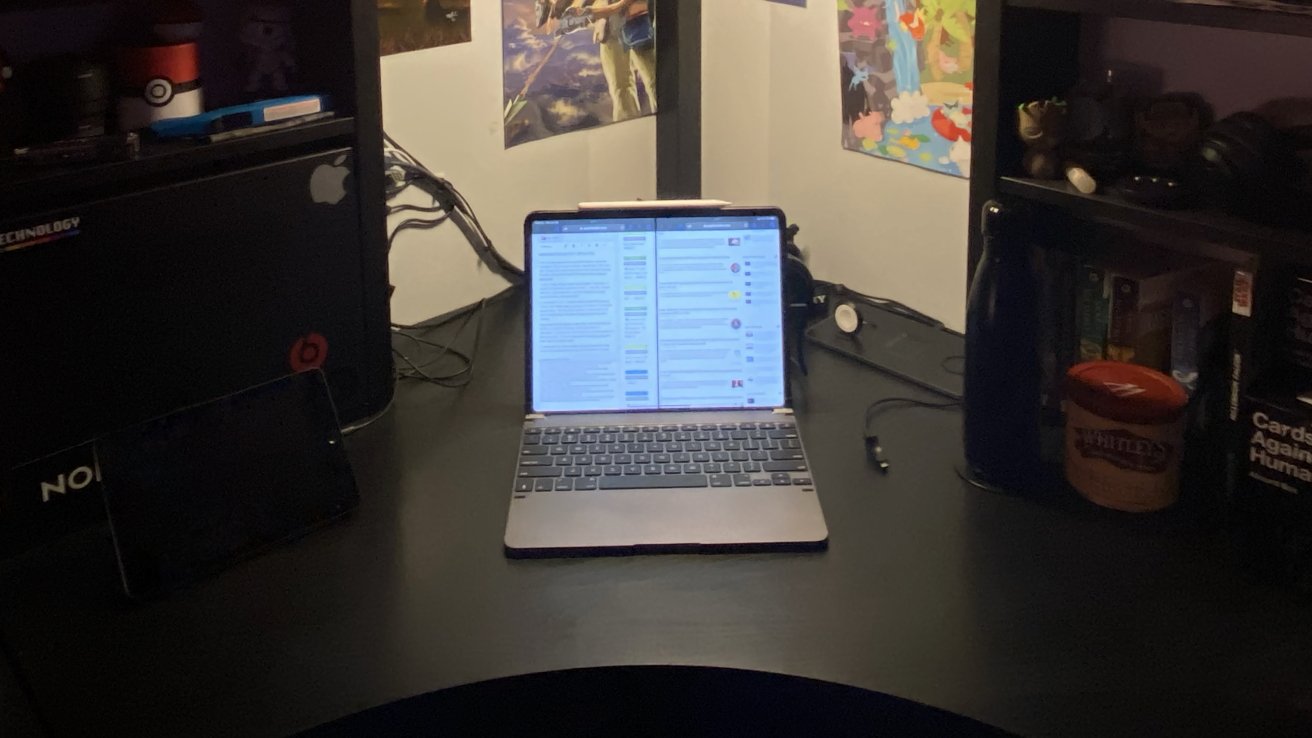

A truly desktop-class Safari, a reliable, hierarchical file system, and background task execution all feel like obvious next steps.

Every iPad Pro release has been accompanied by a series of cries for macOS on iPad. Across the entire AppleInsider staff, even the Mac-devout, we don't think that's a good idea. Instead, we all want iPadOS to be better, and its own thing.

We want this instead of macOS shoehorned onto a platform that will lead to a sub-par experience not just for macOS, but for the iPad too. That would be the worst of both worlds.

You can't divorce the software from the hardware when you review a new iPad. More on iPadOS in a while, though.

13-inch iPad Pro review - Design & Features

Obviously, nothing has changed in design and features since the initial impressions I shared in our 13-inch iPad Pro hands-on. It remains an impossibly thin and light device that's all-new while remaining familiar.

13-inch iPad Pro review: an impossibly thin and light design

If you look at the 13-inch iPad Pro head-on, there's little chance you could tell the difference between it and the 12.9-inch models that came before. As usual, a small bezel surrounds the all-screen display.

Three aspects might give it away -- OLED, the front-facing camera, and Nano Texture if you've opted for it. The relocated camera, lack of bloom, and a slight film-like texture are apparent when viewed side-by-side with any other iPad.

Apple's 13-inch iPad Pro is 5.1 mm thick, weighs 1.28 pounds, and has a thin camera bump that reduces wobble when lying flat. The design changes required new magnet arrangements, so none of the previous magnetic accessories are compatible.

13-inch iPad Pro review: new versus old

I am quite happy to have learned that Apple changed the internal design of the iPad Pro to account for heat dissipation and resistance to bending. Apple says the central skeleton helps prevent bends along the landscape side, and it also houses the logic board.

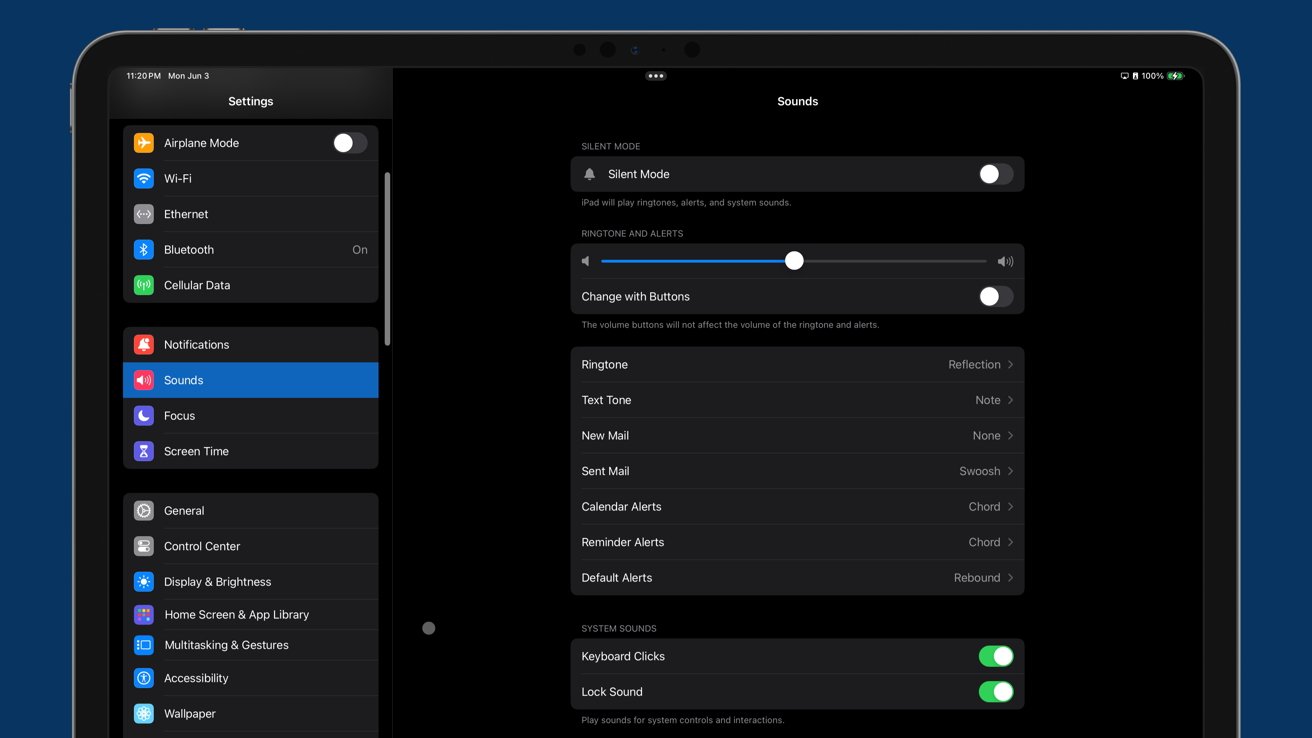

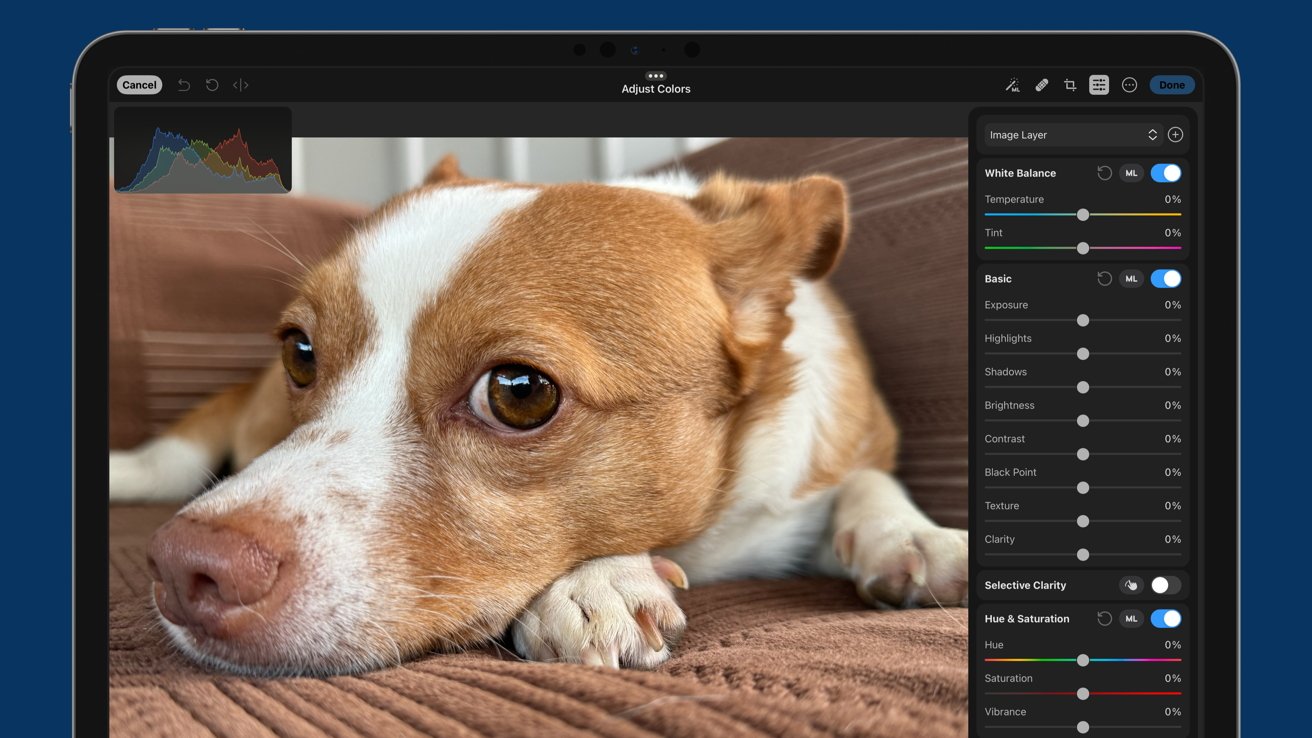

That means the M4 processor is directly below the Apple logo, which acts as a better heat sink now, thanks to copper infusion. Having the hot chip in the center also gives heat more space to dissipate and keeps heat away from your hands.

OLED display

One of the best things that has happened to the iPad since cursor support is OLED. The display is just gorgeous in how it reproduces color and black levels. Creative users will benefit greatly from the upgrade from mini-LED backlighting.

13-inch iPad Pro review: OLED enables perfect black levels and near infinite contrast

The 13-inch iPad Pro has a 2,752-by-2,064-pixel display at the expected 264 ppi density. Tandem OLED panels enable up to 1,000 nits of sustained brightness for SDR and HDR content.
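As a quick sanity check on those numbers, the Pythagorean theorem turns the pixel dimensions into a diagonal measured in pixels, and dividing by the pixel density gives the diagonal in inches. This is a minimal sketch that assumes only the 2,752 by 2,064 resolution and 264 ppi quoted in the review; the function name is our own, chosen for illustration.

```python
import math

# Figures as stated in the review; nothing else is assumed.
WIDTH_PX = 2752
HEIGHT_PX = 2064
PPI = 264


def diagonal_inches(width_px: int, height_px: int, ppi: float) -> float:
    """Return a panel's diagonal in inches from its pixel dimensions and density."""
    diagonal_px = math.hypot(width_px, height_px)  # Pythagorean diagonal, in pixels
    return diagonal_px / ppi


if __name__ == "__main__":
    d = diagonal_inches(WIDTH_PX, HEIGHT_PX, PPI)
    print(f"{d:.2f} inches")  # prints "13.03 inches"
```

The diagonal works out to exactly 3,440 pixels, or roughly 13.03 inches at 264 ppi, which matches the 13-inch name. The resolution also reduces to exactly 4:3, the same aspect ratio as earlier large iPad Pro models.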

The display can reach 1,600 nits for specific areas of HDR content. The super bright OLED is stunning in all lighting conditions, even direct sunlight.

Apple shifted to OLED panels with iPhone X, and people have been begging for OLED in iPad ever since. A half-step arrived in 2021 with mini-LED backlighting in the 12.9-inch model, but it still produced bloom around bright objects on black backgrounds, so people weren't too impressed.

13-inch iPad Pro review: tandem OLED enables a much brighter display

Tandem OLED addresses every complaint by offering superior blacks and incredible sustained brightness. The 2,000,000 to 1 contrast ratio is very noticeable with some content.

OLED still isn't ubiquitous, so some content and video games aren't designed with near-infinite contrast and perfect black in mind. As on the iPhone, I favor a true-black UI in apps that offer one, like Ivory.

The new panel doesn't just look great -- it's more power efficient. Any true-black element on the display is simply pixels that aren't turned on, so the more black pixels on the display, the better for battery life.

13-inch iPad Pro review: black levels stay consistent, even in bright sunlight

For example, if you're reading a book and have it in the true black mode with white text, you will likely maximize battery life. However, since the display can get incredibly bright, you will likely counteract the energy savings if the brightness stays too high.

That said, our testing bears out Apple's estimate of about 10 hours of battery life. Coming from the M1 iPad Pro, I haven't noticed any change in my charging habits.

Nano Texture

The Nano Texture option was always going to be controversial, especially on a portable device. After years of Apple selling Pro Display XDR and Studio Display with a "do not touch" Nano Texture, suddenly it's fine on iPad.

Confusion sometimes reigns when Apple uses the same term for two different processes. The Nano Texture on the Pro Display XDR is a mechanical process, whereas on the iPad it's a chemical one.

13-inch iPad Pro review: Nano Texture removes all reflections with some tradeoffs

Apple recommends using the included polishing cloth to clean the display, but otherwise, it's just a normal iPad display. You can touch it without noticing any difference in feel, and it's easy enough to clean with the cloth.

Drawing with the Apple Pencil Pro on the Nano Texture display doesn't feel different to my hand. The tip glides along the glass without any resistance -- I believe any suggestion otherwise might be a placebo.

That's the point of the Nano Texture for iPad Pro. The chemical etching I mentioned, done on a microscopic scale, is less a series of grooves like on Pro Display XDR and more a surface-level change in optical characteristics. This is why you don't feel it with your finger or when you run an Apple Pencil across the surface. It's "etched" just enough to diffuse glare.

The Nano Texture works amazingly. Since getting this option, I've consistently noticed that there aren't any visible reflections or light sources, no matter where I sit -- even in direct sunlight.

But there is a "film" effect, exaggerated by direct light sources, where light refracted inward causes colors and black levels to wash out. The effect is pronounced in places like the Apple Store, or in direct sunlight when viewing the device at an unnatural angle.

It is pretty challenging to capture the effect properly with a camera, and it doesn't exist at all on the Pro Display XDR surface. Product photos are often taken at extreme angles, not from natural viewing angles. I have shown various examples of direct light sources reflected by the different display types, but these images still don't do the effect justice.

13-inch iPad Pro review: the 'fog' effect made by reflecting light isn't as drastic in real life at normal viewing angles

The camera will capture light with a more pronounced effect than the naked eye, showing the Nano Texture in exaggerated detail. It is a display you'll have to see in person to understand whether Nano Texture is a good option for you.

The tandem OLED is bright enough on its own to compensate for sunlight and other harsh lighting conditions. However, intense light turns any black area on the display into a mirror, which is why Nano Texture is useful. In some situations, you might sacrifice black levels ever so slightly, but the result is a perfectly visible display.

I love the Nano Texture, but it is clearly a highly subjective feature -- and opinions differ across the staff. Mike Wuerthele, our managing editor, is not a fan, as he prefers those blacks on the screen to be fully black. Those deep blacks are one of the main tenets of OLED, and blunting them makes no sense, according to him.

He's also mostly an indoor user, and his "bunker," as he calls it, has little natural light.

This all said, you'll know if you need it or not, and if you're in doubt at all, the only way to know for sure is to try it at home. Photos, videos, anecdotes from reviewers, and in-person viewings at the Apple Store won't tell you what it's like to use in practice.

13-inch iPad Pro review: working outside with bright OLED and Nano Texture is a game changer

There are some concerns about the Nano Texture's longevity, as it is a surface treatment. It is something I'll revisit in future reviews.

New camera systems

Apple rearranged the 13-inch iPad Pro cameras to account for the new thinner device and default orientation. The Ultra Wide Camera was removed, and the front-facing camera is now on the landscape side of the device.

13-inch iPad Pro review: the camera bump is thin and houses only one camera

Both the front-facing camera and the Main Camera are 12MP. The front-facing camera has an ultra-wide field of view and uses Center Stage to focus on subjects.

There isn't much to say about the rear camera. It hasn't changed, though the image signal processor is likely more powerful in the M4. Apple also showed off a special document scanner upgrade that helps eliminate shadows using the flash and multiple exposures.

So far, we haven't seen much -- if any -- difference between the new document scanner tech and how it worked before in most environments where you'd typically scan books. We'll look at this more closely in the future, including sub-optimal conditions for book scanning.

The front-facing camera is paired with the TrueDepth system for Face ID. All the components are centered on the landscape side, but the front-facing camera is slightly off center to the left.

13-inch iPad Pro review: the front-facing camera moved to the landscape orientation

The change in camera position makes FaceTime calls feel much more natural when the iPad is docked. The camera is in a much better position, whether it is being used with the Magic Keyboard, in a magnetic stand, or simply held in landscape mode.

I also love the new position for the TrueDepth system. When it was on the portrait side of the iPad, I found it would often be obscured by my hand.

The new orientation also makes it easier to angle the tablet for Face ID, like when lying down. On the portrait side, the TrueDepth system sat at an awkwardly high position and required a much more dramatic shift to bring the camera level with my face.

Speakers, connectivity, and more

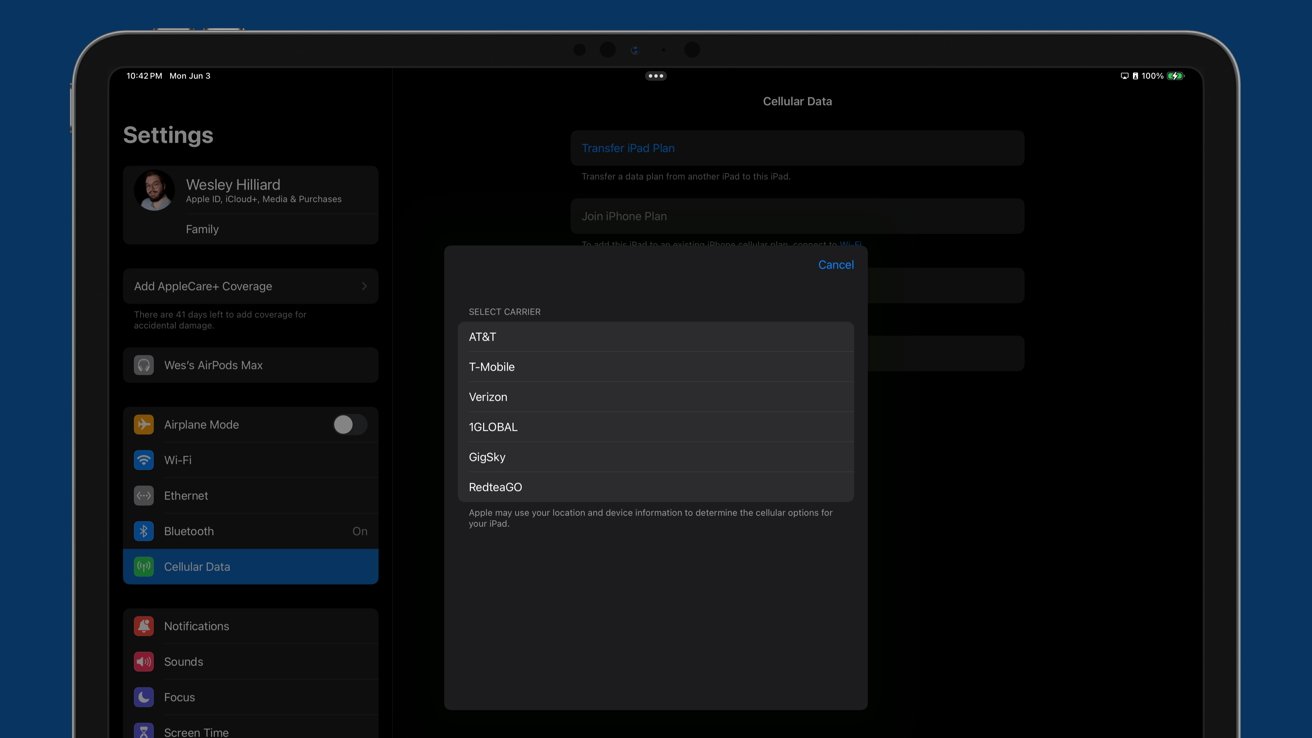

Outside of the obvious flagship features, not much else has changed. There are four speakers, four microphones, a Wi-Fi 6E radio, and 5G cellular.

13-inch iPad Pro review: eSIM makes getting cellular coverage much easier

Apple didn't opt for the nascent Wi-Fi 7 radio as expected. It's hardly a loss as many users, even pros, are likely still running Wi-Fi 6 or even Wi-Fi 5.

This may eventually become a future-proofing issue, but it remains to be seen when. Wi-Fi 7 systems are still ludicrously expensive and not worth it for the foreseeable future for nearly everybody.

The cellular 5G option only has sub-6GHz, not mmWave, but I doubt anyone will notice given the technical limitations of mmWave. Apple's use case of finding empty football stadiums to play multiplayer games never made much sense.

The exclusion of mmWave may say more about poor carrier rollout and availability than about its usefulness.

13-inch iPad Pro review - Accessories

The new device design and magnet layout render many existing accessories incompatible. Any magnetic mount, keyboard, case, stylus, or other device purpose-built for previous iPad Pros won't work on the newer generation.

The Thunderbolt/USB4 port works identically. It connected to my existing setup without any problems and delivers the same speeds as before, since the specs haven't changed one iota. Drives, displays, and other tools will work just as they did.

Apple Pencil Pro