AppleInsider · Kasper's Automated Slave


Apple Intelligence may be coming to Vision Pro -- but not soon

The roll-out of Apple Intelligence in the fall is passing over the Apple Vision Pro -- but a report on Sunday claims that it could arrive on the existing hardware as soon as 2025.

Apple Vision Pro: hardhat optional

Apple Intelligence is arriving in test in the next few weeks. It will ship to everybody with a compatible device in the fall, with iPadOS 18, macOS Sequoia, and iOS 18.

Not on that list at present is the Apple Vision Pro. But, a new report on Sunday from Bloomberg discusses the possibility of it arriving on Apple's headset.

The specs of the headset as it stands can support Apple Intelligence. After all, the headset has an M2 processor and 16GB of RAM, which is more than what's required on a Mac for the feature.

The Apple Vision Pro's operating system, visionOS, is also a variant of iPadOS, so that's another point in favor of the technology coming to the headset.

A potential roadblock cited in the report is the user experience. The report claims that the mixed reality environment may be a challenge.

But, the report makes sense. Apple is positioning the Apple Vision Pro as a productivity device, and the Apple Intelligence features make sense for the platform.

What's more questionable is the concept that it might tax Apple's cloud computing infrastructure, which is alluded to and dismissed in the report. As compared to the number of Macs that can use the tech, and the volume of iPhone 15 and iPhone 16 models that will be available when it may arrive, Apple Vision Pro quantities are a drop in the bucket.

Apple Vision Pro sales numbers are several orders of magnitude less than the other hardware that will run Apple Intelligence.

And even so, many Apple Intelligence features aren't going to arrive until 2025 anyway. And, they'll be limited to US English at first.

Rumor Score: Likely

Read on AppleInsider

-

How Apple's software engineering teams manage and test new operating system features ahead...

Internally, Apple engineers rely on a dedicated app to view, manage, and toggle in-development features and user interface elements within pre-release versions of new operating systems. Here's what the app is called and what it can do.

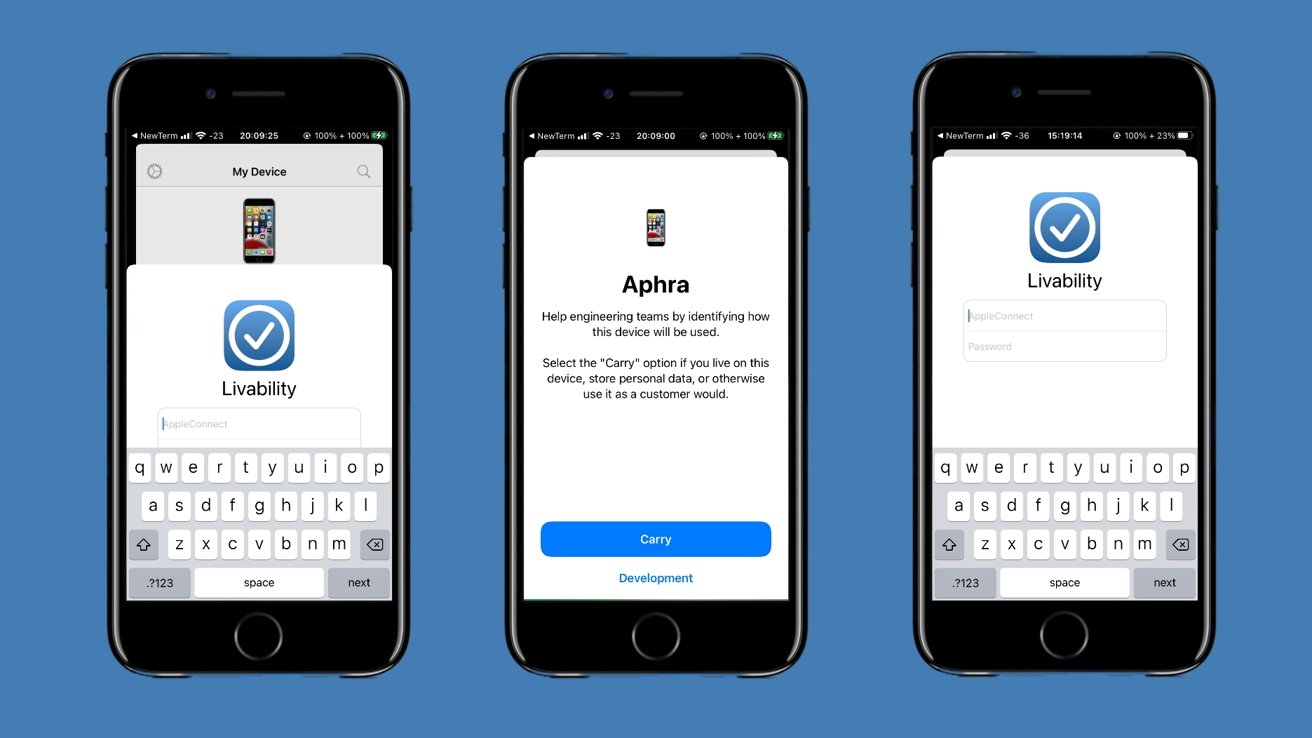

Livability is an internal app used by Apple engineers to manage new features

An essential internal-use application known as Livability lets the company's software engineers keep track of individual operating system features and test devices as a whole. It can be found in InternalUI builds of iOS, a specialized variant of the iPhone's operating system that's used internally for software development.

The application itself is a core component of Apple's pre-release operating systems. With Livability, Apple's software development and engineering teams can enable, disable, and debug upcoming features to make sure they are fully functional before their inevitable release to the general public.

Speaking to people familiar with the matter, AppleInsider has received information about the core functionality of the Livability application, as well as the specific options and settings the app contains.

Settings and features available within the Livability app

Livability provides Apple employees with the following information and settings related to development devices:

- Essential device information (serial number, hardware model, codename)

- Information on fusing -- development or production

- Details about VPN and MDM profiles currently installed

- Options for software updates, backups, custom boot arguments, and Carry status adjustment

- Feature Flag viewer -- lets users manage and view individual features or groups of features

- Command Center -- gives employees a way of making changes to multiple development devices simultaneously

Device information and settings within Livability

The Livability app contains an overview of essential information and options for development devices. Through the app, its users can see the serial numbers, codenames, hardware models, and marketing names of development devices, among other things.

The application also contains information about device fusing -- a key hardware characteristic of all Apple devices. The devices Apple sells to its customers are "production-fused," meaning that they have significant hardware-level security measures in place, preventing the device from running certain types of code.

"Development-fused" devices are the exact opposite of this. Pre-PVT type prototype units, such as EVT or DVT devices are in most cases development-fused, meaning it is possible to use the JTAG testing standard through specialized cables.

Apple uses both development-fused and production-fused devices to test different things, which is why Livability has an indicator for this key hardware characteristic.

The application also allows employees to specify whether or not their device is a so-called "carry" device, a daily driver, in other words. According to people familiar with the application, this information is primarily of use to Apple's engineering teams.

In addition to this, Livability features options to set custom boot arguments for the operating system kernel. With this feature, the user can force the device to boot into verbose mode or a special diagnostics menu, among other things.

Livability's feature management system

Livability provides Apple's software development teams with a comprehensive overview of all features available on the operating system currently installed. The application displays and organizes feature flags -- which are toggles that can be used to disable or enable software features.

Feature flags are organized primarily by date. In speaking to people familiar with the development of Apple's latest operating systems, we have learned that feature flags are sorted into the following categories for each year:

- Spring

- WWDC

- Fall

- Winter

These categories indicate the intended release date for new operating system features. Apple generally releases updates for its latest operating systems throughout the year, and such updates often introduce new features that were announced or previewed at an earlier point in time.

Apple's internal-use operating systems can have features, or early code for features, that are scheduled for release years into the future. The same operating system could have feature flags meant for release during WWDC 2024 and WWDC 2026, for instance.

Within these time-based categories, feature flags are further divided according to the app or aspect of the operating system they affect. This means that within WWDC 2024, for instance, employees would see categories such as Notes, Music, Spotlight Search, and so on.

Individual operating system features, or features part of a larger initiative, are often developed under codenames known only to select Apple employees. While some codenames can present a vague indication of the feature's overall goal, the exact purpose of codenamed features cannot be discerned without people who have direct knowledge of the matter.

Greymatter, a reference to a type of tissue within the human brain, was the codename for Apple Intelligence. Apple's new universal calculator app was codenamed GreyParrot -- a nod to the African Gray species of parrot, known for its high intelligence compared to other bird species.

Features are also classified according to their current development status, which changes as time moves on. There are four categories that indicate the degree of completion:

- Under Development

- Code Complete

- Preview Ready

- Feature Complete

Within Livability, it's possible to activate all features with a specific development status through a dedicated subscription setting. We were told that Apple employees could use this to, for example, activate all features marked "Under Development."
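The organization described above can be pictured with a small data model. What follows is only an illustrative sketch, not Apple's implementation: the `FeatureFlag` class, its fields, the helper functions, and the example flag names are all hypothetical, and only the four status labels and the release and area categories come from the description above.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    """The four development statuses named in the article."""
    UNDER_DEVELOPMENT = "Under Development"
    CODE_COMPLETE = "Code Complete"
    PREVIEW_READY = "Preview Ready"
    FEATURE_COMPLETE = "Feature Complete"


@dataclass
class FeatureFlag:
    # All field names are assumptions made for illustration.
    name: str        # feature or codename (hypothetical values below)
    release: str     # time-based category, e.g. "WWDC 2024"
    area: str        # app or OS aspect, e.g. "Notes"
    status: Status
    enabled: bool = False


def enable_by_status(flags, status):
    """Enable every flag with the given status; return the names that changed."""
    changed = []
    for flag in flags:
        if flag.status is status and not flag.enabled:
            flag.enabled = True
            changed.append(flag.name)
    return changed


def group_flags(flags):
    """Group flag names by release window first, then by affected area."""
    grouped = {}
    for flag in flags:
        grouped.setdefault(flag.release, {}).setdefault(flag.area, []).append(flag.name)
    return grouped


# Hypothetical example data.
flags = [
    FeatureFlag("ExampleNotesFeature", "WWDC 2024", "Notes", Status.UNDER_DEVELOPMENT),
    FeatureFlag("ExampleMusicFeature", "WWDC 2024", "Music", Status.FEATURE_COMPLETE),
    FeatureFlag("ExampleSearchFeature", "Fall 2024", "Spotlight Search", Status.UNDER_DEVELOPMENT),
]
```

Calling `enable_by_status(flags, Status.UNDER_DEVELOPMENT)` would switch on the two in-development example flags and leave the finished one untouched, which mirrors the bulk activation setting described above.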

The application will display different warnings depending on the category selected. These warnings serve to inform the users of the potential effects a new feature may have on their machine.

Generally, features other than those marked "Feature Complete" have not been fully tested and may be incomplete in some way. Features labeled "Under Development" could cause devices or specific applications to behave in unexpected ways.

Practically, this means that in-development features may prevent system applications or UI elements from working properly, causing them to crash upon launch. Alternatively, visual glitches such as misplaced text, images, or toggles can also sometimes occur.

What is Livability used for?

Livability's feature flag viewer is of use in situations where debugging is necessary. If a new feature causes major issues, Apple's engineers can disable it until it has been fixed, then activate it later on to confirm its functionality.

With the app, Apple's employees can disable so-called sensitive UI elements so that they are not accidentally exposed to unauthorized individuals. An example of this use case was seen in a pop-up message uncovered by users of social media platform X in June of 2024.

At WWDC 2024, Apple previewed a new user interface for Apple Intelligence and Siri but kept the UI disabled in the initial developer beta of iOS 18 released on June 10. Users quickly found a way to activate it, however, which is how the pop-up message was accidentally discovered.

The message warned employees that sensitive UI and sounds were enabled and that they were not to be used within 50 feet of undisclosed individuals. Instructions on how to disable the sensitive UI elements were also included in the message.

As mentioned earlier, the application also lets users install software updates and create backups, manage VPN settings, and much more. This makes Livability an all-around device management application for Apple's software teams.

The information we acquired about the Livability app provides useful insights into Apple's development process, how software teams manage and organize new features, and how they keep track of development units.

Read on AppleInsider

-

Apple's 'longevity by design' initiative is a balance between repairability & secure engin...

Apple has published its list of principles it follows in product design to keep items like the iPhone durable, while simultaneously balancing the need for repairability.

An iPhone being repaired

Apple has frequently been praised for the designs of its products, ranging from the iPhone to the MacBook Pro. It has created quite a few iconic items in its history, with it relying on teams following the same design ethos.

In a document titled "Longevity, by Design" published in June, Apple offers what it works towards when it creates its hardware. It also discusses what can and should happen after said hardware gets into consumer hands.

Approach to longevity

The 24-page document, including cover and sourcing pages, goes over quite a few areas, but starts off by insisting Apple is working to create the "best experience" for customers.

"Designing for longevity is a company-wide effort, informing our earliest decisions long before the first prototype is built and guided by historical customer-use data and predictions on future usage," it states.

This requires Apple to constantly balance durability, design aspects, and repairability, while maintaining safety, security, and privacy.

"Our approach is working. Apple leads the industry in longevity as measured by the value of our secondhand products, increasing product lifespans, and decreasing service rates."

As evidence of this, the document points out that the value of its iPhone on the second-hand market is at least 40% more compared to Android devices. That valuation also increases with the age of the iPhone.

The lifespan of the iPhone is also continuing to grow. Hundreds of millions of iPhones have been in use for more than five years, it claims.

As for service rates, newer devices are apparently less likely to require repairs than older ones. Out of warranty repairs from 2015 to 2022 were down 38%, with accidental damage repairs for iPhone down 44% since the iPhone 7.

Liquid damage repairs also went down 75% with ingress protection in the iPhone 7.

A drop-tested iPhone [YouTube/MKBHD]

Apple goes on to spotlight its reliability testing, which it says is used to accompany the design process. Reliability is "intrinsically part of the entire product development life cycle," the document states.

It also covers ways Apple tests its products, some of which were raised in May on social media.

Operating system support is also "a key pillar of product longevity," thanks to security updates and bug fixes. Support for operating systems "extend well beyond the historic industry norm," with up to six years of support from the device's original release.

Principles on repairability

Apple's hardware is often criticized for being hard to repair, but the company has been trying hard to fix that. While it still suffers from criticism over parts pairing, Apple does try to appease electronics enthusiasts who want to repair their own hardware by sending out self-service repair kits.

Part of this is caused by the way Apple designs its products in the first place.

"Apple strives to improve the longevity of devices by following a set of design principles that help resolve tensions between repairability and other important factors," the document reads. This includes the impact to the environment, access to repair services, preserving safety and security, and enabling transparency in repair.

Apple insists that while it designs products for everyday use and minimizing repairs, it has to design in the possibility for repair, while maintaining durability. This is said to excuse its use of advanced adhesives to secure batteries, which can also be released when pulled in a specific direction.

The iPhone 16 is said to be the most repairable ever with 11 key modules.

A person repairing a MacBook Pro

The first principle is Environmental Impact, which includes Apple's carbon neutral goals, and using more recycled and renewed materials. It believes that prioritizing product longevity instead of repairability creates "meaningful reductions in environmental impact."

Access to Repair Services is the second principle, be it from Apple itself, third-party repair outfits, or via the consumer's own effort. Apple has doubled the size of its service and repair network in the last five years.

The company also says that it is committed to supporting third-party repair services and repair tools.

Safety, Security, and Privacy

Principle three is Safety, Security, and Privacy, which touches upon touchstones of the Apple ethos. A user's privacy should not be compromised during or after a repair, it states.

The section explains that there's the use of cloud-based diagnostic systems that reduce the need for requests for customer passwords by support staff.

It also discusses biometric security, and the need to maintain it to a high level. Due to the potential risks involved, Apple justifies its use of genuine Apple parts for repairs.

An Apple self-service repair kit

Transparency in Repair

The fourth principle is Transparency in Repair. Customers have the right to know that repairs of crucial parts were performed using Apple-sourced parts.

Repairers in Apple's IRP network can offer third-party parts alongside genuine Apple parts. However, Apple will disable a third-party part in cases involving Face ID or Touch ID sensors.

The document then goes on to discuss parts sourcing and the use of third-party parts in repairs.

The road ahead

Using data and its commitments to guide its production, Apple aims to ensure products "exceed expectations" on durability and performance.

"This journey is one that will never be over, because as materials, testing, and technology advance, so too will the ways that we use them to make our products stand the test of time." It reiterates that this means durable and reliable products, which are also repairable with privacy and transparency in mind.

"That's our commitment to our customers, to future generations, and to the planet we call home."

Read on AppleInsider

-

Inside Apple hardware prototype and development stages

Whether a device is a Mac, iPhone, or iPad, every piece of hardware Apple makes needs to go through several prototype and development stages. Here's what that looks like, and what Apple calls each stage.

Proto2 units of the iPhone 15 Pro featured a unified haptic volume button, developed under the codename Project Bongo

Each year, Apple's hardware prototypes undergo multiple rounds of testing to ensure that they are of sufficient quality. This can include durability testing, drop testing, water resistance testing, reliability, and design viability testing, among other things.

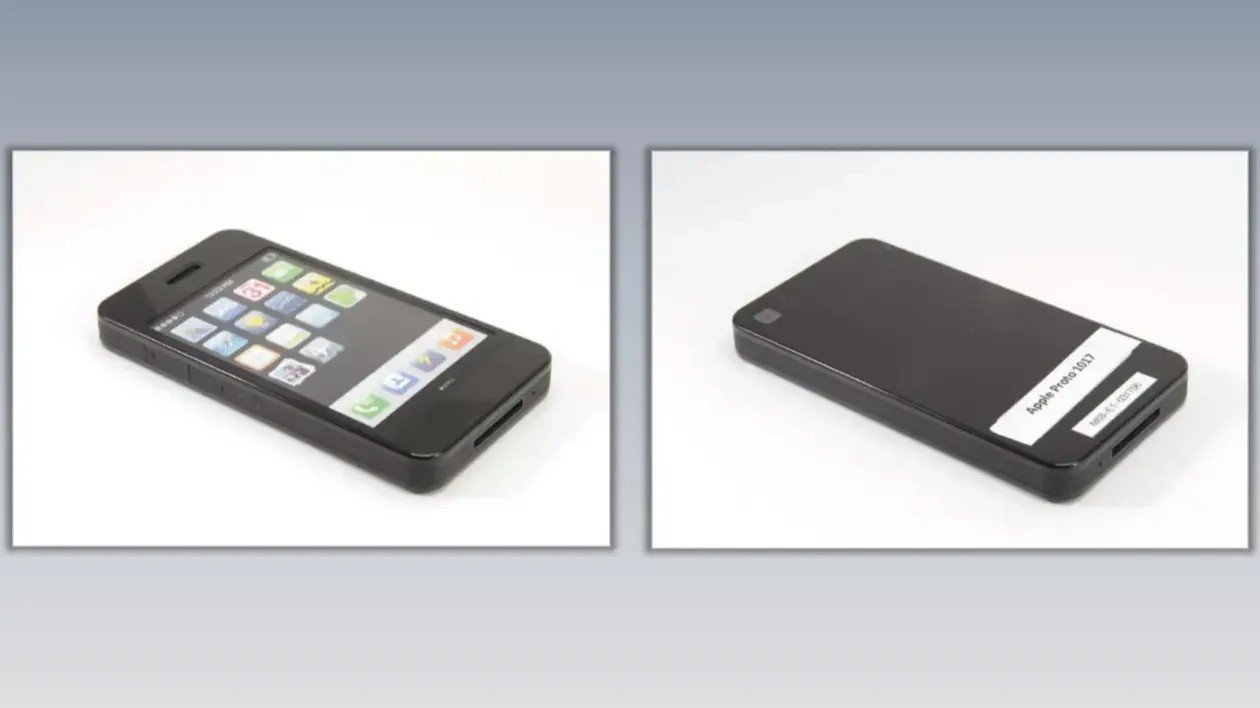

Apple's prototyping processes are undoubtedly complex, as tests are performed on nearly every core hardware component as a standalone item. Nonetheless, the development of Apple devices as a whole follows a more predictable pattern that is significantly easier to explain.

Initial design mock-ups

Before the prototyping and testing of individual components can begin, Apple first needs to settle on a design for its new hardware project. To accomplish this, the company's development teams create many different mockups, serving as examples of what the finished product could one day look like.

Many early iPhone mockups were revealed in 2012 during Apple's lawsuit with Samsung

The earliest mockups are typically non-functional, as their sole purpose is to accurately represent a design concept. Because of this, they have no dedicated hardware components and generally only consist of an outside casing.

The materials used for this development stage can vary depending on the unit in question, the selected design, and its complexity. Some early mockups of the original iPhone were made of polycarbonate, for instance, while the final mass-production units were made largely of aluminum and glass.

The authenticity of early Apple mockups is often difficult to ascertain unless the units themselves have an unquestionable connection to the company or one of its employees. If a mockup has an authentic Apple asset tag or a sticker or appears in a book, for instance, then it is likely to be original.

Many of Apple's early iPhone mockups were unveiled through court documents during the company's lawsuit with Samsung in 2012.

Drop test stages

Once Apple settles on an initial design for its next product, detailed documentation, product schematics, CAD files, and measurements are sent to select manufacturing facilities and factories. These designs are then checked multiple times to ensure that there is no interference between individual components.

Apple develops dedicated prototype stages for drop testing

In some instances, brackets, mounting points, or other minor details may be altered to ensure that the components can fit together once they are produced and assembled. Once a viable test design has been created, preparation for initial drop testing begins.

In specialized areas of factories, dedicated drop-testing environments with slow-motion cameras and specialized robots are set up. To calibrate the equipment before testing, engineers sometimes use plastic slabs with the same dimensions as an upcoming iPhone or iPad.

To make initial testing possible, factories begin producing so-called "Drop1" stage devices. These consist of core structural components, such as the device housing and glass backplate, where applicable.

These core components are sometimes created in multiple colors. This is done when a key production process changes, such as when a new coloring technique or material is used for the initial batch, and the durability of it needs to be tested.

Rather than using fully functional hardware components, Drop1 test units feature placeholder parts. These are often made of machined metal and can substitute the rear cameras, the battery, or even the glass backplate in some instances.

Once the Drop1 units are assembled, they are then subjected to testing. Devices are dropped from specific height levels at specific angles, with the results being recorded and analyzed later on.

Other tests performed include dropping the device into a plastic tube with metal obstacles on the inside or submerging the device into liquid to test water resistance. For all tests, parameters and key data points are recorded and analyzed after the fact.

The devices themselves are also photographed upon the completion of drop testing so that the resulting damage can then be analyzed. Major defects, structural problems, or issues with the PVD coating process on the casing are all noted, and feedback is sent to the necessary teams.

As changes can occur due to information gathered from the Drop1 stage, Drop2 devices are often produced with alterations or added components on the inside. Similar tests are then conducted on Drop2 stage devices, and relevant information is collected.

Proto - the first attempt at functional hardware

Once the initial drop test stages are complete, the hardware project moves into the Proto stage of development. This stage marks the first attempt at delivering a fully functional device with all the necessary hardware components.

Although Proto-stage units feature working hardware, they are merely the first incarnation of it. This means that Proto devices can have significant differences compared to their mass-production counterparts.

These differences can pertain to the outside casing, in some instances, or they can impact ports and connectors located on the inside of the device. This means that a Proto-stage device might be incompatible with mass-production parts such as screens or buttons.

Because of their differences relative to the final product, Proto-stage units are often highly collectible. They are also difficult to repair, though, as a result of said differences and incompatibility with commonly available parts and components.

As this development stage involves functional hardware, even more testing is required to ensure proper functionality and long-term durability. Because of this, factories have dedicated machines, known as test stations, which test individual hardware components and sensors within the devices.

As a result, the Proto stage often encompasses two sub-stages, known as Proto1 and Proto2, to account for any design changes and alterations that may occur due to information obtained from test results.

Proto1 and Proto2 development stage units often run the NonUI variant of iOS. This type of iOS is largely intended for hardware testing and is often used by Apple's hardware engineers and calibration equipment.

EVT - Engineering Validation Testing

The next major step after Proto is known as EVT, an acronym for Engineering Validation Testing. This development stage primarily focuses on perfecting the hardware and ensuring that no hardware-related problems persist.

EVT prototypes of the iPod Touch 3rd generation featured a rear camera, which is not present on mass-production units. Image Credit: DongleBookPro on YouTube

The EVT stage can encompass multiple substages, which are designated numerically, such as EVT-1, EVT-2, and so on. These exist to account for specific changes made during development, which are not large enough to constitute a separate development stage.

For some devices, Apple creates letter-based EVT substages. This can happen if a major problem is noticed later in development or even on mass-production devices. This forces Apple to revert to EVT devices for additional testing and development.

In the case of the iPod Touch 3rd generation, for instance, the mass production units Apple initially shipped featured a bootrom exploit that could not be easily patched or fixed. Because of this, the company created a new batch of EVT devices with modifications and eventually shipped a new version of the iPod with an updated bootrom.

As is the case with Proto1 and Proto2 stage devices, EVT prototypes can often have noticeable design differences compared to final mass production units. EVT units also run the same general operating system variant -- iOS NonUI.

In the case of the iPhone 15 Pro, for instance, EVT prototypes featured haptic buttons developed under the codename Project Bongo. Similarly, the iPod Touch 3G mentioned earlier also featured a rear camera at one point in development.

Neither of these features can be found on the final mass-production units, as the hardware was scrapped in both instances for one reason or another.

DVT - Design Validation Testing

After EVT, the hardware project moves into the next stage, known as DVT, which is short for design validation testing. This stage builds upon EVT and ensures that the product has both functional hardware as well as a viable design with no defects or structural problems.

DVT-stage iPhone SE prototype. Image credit: AppleDemoYT on YouTube

The DVT stage consists of the same numerical (DVT-1) and letter-based (DVT-a) substages. There are no set limits as to how many substages can fall under Design Validation Testing, so it is possible to encounter prototype devices with designations such as DVT-4.
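To make the naming scheme concrete, here is a minimal sketch of how such stage labels could be split into a stage and a substage. It is an assumption-laden illustration rather than any tool Apple uses: the `parse_stage` function and its regular expression are hypothetical, and they only cover the label shapes mentioned in this article (for example Proto2, EVT-1, DVT-a, DVT-4).

```python
import re

# Hypothetical pattern: a stage name, then either an optional-dash number
# (Proto2, EVT-1) or a dash followed by a single letter (DVT-a).
_LABEL = re.compile(r"^(Proto|EVT|DVT|PVT)(?:-?(\d+)|-([A-Za-z]))?$")


def parse_stage(label):
    """Return (stage, substage_kind, substage) for a prototype stage label."""
    match = _LABEL.match(label.strip())
    if match is None:
        raise ValueError(f"unrecognized stage label: {label!r}")
    stage, number, letter = match.groups()
    if number is not None:
        return (stage, "numeric", number)
    if letter is not None:
        return (stage, "letter", letter)
    return (stage, None, None)


for label in ["Proto2", "EVT-1", "DVT-a", "DVT-4", "PVT"]:
    print(label, "->", parse_stage(label))
```

Labels that do not follow these shapes are rejected with a `ValueError` instead of being guessed at.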

DVT-stage devices often bear a significant resemblance to their mass-production counterparts, with only minor exterior differences being present, if any. Because of this, Apple sends out DVT-stage devices to regulatory authorities, such as the FCC, to confirm compliance with existing standards and regulations pertaining to electronic devices.

Similarly, Apple also sends out DVT-stage devices to phone carriers and network providers worldwide to ensure compatibility with major cellular networks. Such devices are labeled as CRB, short for "Carrier Build," even though they are functionally identical to DVT units in every way.

CRB devices often run a unique variant of iOS known as CarrierOS. In most cases, it's a stripped-down version of the InternalUI variant. This means it includes the standard iOS user interface but with network-related test applications such as E911Tester.

In general, DVT-stage devices can run either InternalUI or NonUI versions of iOS, depending on the type of tests they're used for. The hardware of DVT prototypes differs from production units at the board level.

Unlike mass production devices, DVT and earlier devices are "development-fused." This ultimately means that they can run additional code, and they are easier to debug compared to production units, which is why they are used in multiple development processes.

PVT - Production Validation Testing

By the PVT stage, the complete design of the new hardware project has been finalized. PVT-stage prototypes are virtually identical to mass production units, apart from the fact that they run the NonUI variant of iOS.

The filesystems found on PVTE devices can sometimes contain references to in-development software features

The PVT stage of development primarily focuses on perfecting manufacturing procedures. With every product, Apple needs to ensure that the device can be mass-produced without any issues with the units themselves or factory equipment.

Another variant of PVT device is the so-called PRQ unit, short for "Post Ramp Qualification." These devices are tested when Apple introduces a minor change, such as a new color option after release, and they are identical to PVT and mass production units.

Because PVT stage units are effectively identical to the final product, these devices are worth significantly less to collectors. Their only redeeming quality is the fact that they run iOS NonUI.

The one exception to this rule would be PVTE stage devices, with the acronym standing for Production Validation Testing - Engineering.

Unlike standard PVT units, which are tested to ensure that mass production can proceed without issues, PVTE devices are used by Apple's software engineers. This means that they run the InternalUI variant of iOS, which features the standard iOS user interface, along with in-development features and internal settings.

As a result, PVTE units can contain early implementations of software features years away. A PVTE-stage iPhone Xs Max, for instance, contained an early version of Apple's on-device email categorization feature which only debuted five years later.

MP - Mass Production devices

Once all prototyping is completed, factories will begin mass-producing the new device ahead of launch. To ensure that the units shipped are fully functional, the hardware of each device is tested before it is sent out.

Every mass-produced iPhone unit sold by Apple is an MP-type device

To facilitate the final test process, Apple first installs a NonUI version of iOS onto mass production units so that employees and test equipment can interact with the devices. Once quality control testing is complete, release (stock) iOS is installed, and the units are shipped out to be sold.

Every device sold legally by Apple to customers or other third parties can be considered a mass-production device. Devices produced with this purpose in mind are also considered mass-production units and are known as MP-intent.

Some of these devices inevitably fail the quality control tests due to hardware malfunctions or major defects that cannot be repaired. They are considered quality control rejects, and the defective components are generally sent out for recycling.

MP devices that fail quality control testing can still have the NonUI variant of iOS installed, making the logic board somewhat more valuable to a collector than a standard one with the release version of iOS.

An MP-stage device running iOS NonUI is not worth nearly as much as a DVT or earlier device, however, because it features mass-production hardware that can be found with relative ease.

What does all of this ultimately mean?

Prototypes offer unique insight as to how devices can change during development. In some instances, Apple abruptly removed key hardware components from upcoming devices, replacing them with more conventional parts.

Apple's many different prototype stages also serve as an indication of the company's quality control practices and high manufacturing standards. New products are tested extensively, through and through, to ensure that no hardware-related problems reach end consumers.
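As a compact recap, the stages covered in this article can be written down as an ordered list with the iOS variants attributed to each. This is only a summary sketch of what is described above: the names `STAGES`, `IOS_VARIANTS`, `comes_before`, and `usually_development_fused` are made up for illustration, and the fusing helper encodes the article's "in most cases" generalization, not a hard rule.

```python
# Main development stages in the order described in the article.
STAGES = ["Drop1", "Drop2", "Proto1", "Proto2", "EVT", "DVT", "PVT", "MP"]

# iOS variants the article attributes to each stage or variant.
# Drop-stage units use placeholder parts, so they are omitted here.
IOS_VARIANTS = {
    "Proto1": ["NonUI"],
    "Proto2": ["NonUI"],
    "EVT": ["NonUI"],
    "DVT": ["InternalUI", "NonUI"],
    "CRB": ["CarrierOS"],        # carrier build, functionally a DVT unit
    "PVT": ["NonUI"],
    "PVTE": ["InternalUI"],      # PVT units used by software engineers
    "MP": ["NonUI", "release"],  # NonUI for factory tests, then release iOS
}


def comes_before(first, second):
    """True if `first` precedes `second` in the main stage sequence."""
    return STAGES.index(first) < STAGES.index(second)


def usually_development_fused(stage):
    """Functional stages up to and including DVT are, in most cases,
    development-fused according to the article."""
    return stage in ("Proto1", "Proto2", "EVT", "DVT")


for stage in STAGES:
    variants = ", ".join(IOS_VARIANTS.get(stage, ["none (placeholder parts)"]))
    print(f"{stage}: {variants}")
```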

Read on AppleInsider

-

Is Apple Intelligence artificial?

In the days before WWDC, it was widely believed that Apple would be playing catch-up to everyone else in the consumer tech industry by rushing to bolt a copy of ChatGPT onto its software and checking off the feature box of "Artificial Intelligence." That's clearly not what happened.

Apple Intelligence is on the way.

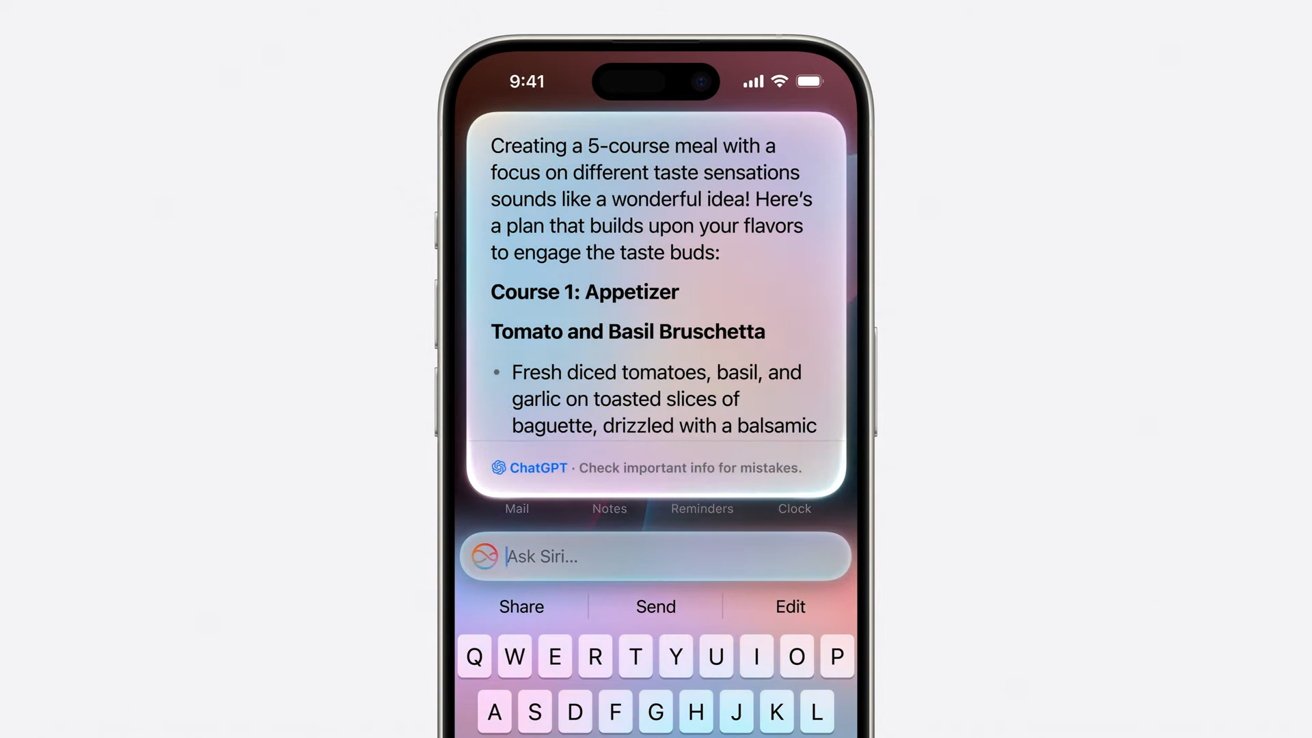

Rather than following the herd, Apple laid out its strategy for expanding its machine learning capabilities into the world of generative AI in both writing and creating graphic illustrations as well as iconic emoji-like representations of ideas, dreamed up by the user. Most of this could occur on device, or in some cases, delegated to a secure cloud instance private to the individual user.

In both cases, Apple Intelligence, as the company coined its product, would be deeply integrated into the user's own context and data. This is instead of it just being a vast Large Language Model trained on all the publicly scrapeable data some big company could appropriate in order to generate human-resembling text and images.

At the same time, Apple also made it optional for iOS 18 and macOS Sequoia users to access such a vast LLM from OpenAI. They did this by enabling the user to grant permission to send requests to ChatGPT 4o, and even take advantage of any subscription plan they'd already bought to cover such power hungry tasks without limits.

Given that Apple delivered something vastly different than the AI tech pundits predicted, it raises the question: is it possible that Apple Intelligence isn't really delivering true AI, or is it more likely that AI pundits were really failing to deliver Apple intelligence?

The Artificial Intelligence in the tech world

Pundits and critics of Apple long mused that the company was desperately behind in AI. Purportedly, nobody at Apple had ever thought about AI until the last minute, and the cash strapped, beleaguered company couldn't possibly afford to acquire or create its own technology in house.

Obviously, they told us for months, the only thing Apple could hope to do is crawl on its knees to Google to offer its last few coins in exchange for the privilege of bolting Google's widely respected Gemini LLM onto the side of the Mac to pretend it was still in the PC game.

It could also perhaps license Copilot from Microsoft, which had just shown off how far ahead the company was in rolling out a GPT-like chat-bot of its own. Microsoft had also paid AI leader OpenAI extraordinary billions to monopolize any commercial productization of its work, with the actual ChatGPT leading the industry in chat-bottery.

Apple does allow users to use ChatGPT on their iPhone if they want.

A few months ago, when OpenAI's unique non-profit status appeared on the verge of institutional collapse, Microsoft's CEO was hailed as a genius for offering to gut the startup for brains and leadership by hiring its people away from under its stifling non-profit chains.

That ultimately didn't materialize, but it did convince everyone in the tech world that building an AI chat-bot would cost incredible billions to develop, and that the window of opportunity to get started on one had already closed. One way or another, Microsoft had engineered a virtual lock on all manner of chat-bot advancements promised to drive the next wave of PC shipments.

Somehow, at the same time, everyone else outside of Apple had already gained access to an AI chat-bot, meaning that just Apple and only Apple would be behind in a way that it could never hope to ever gain any ground in, just like with Apple Maps.

Or like the Voice First revolution led by Amazon's Alexa that would make mobile apps dry up and blow away while we delightfully navigate our phones with the wondrous technology underlying a telephone voicemail tree.

Or the exciting world of folding cell phones that turned into either an Android tablet or just a smaller but much thicker thing to stuff in your pocket because iPhones don't fit in anyone's pockets.

Or smart watches, music streaming, or the world of AR/VR being exclusively led by Microsoft's HoloLens in the enterprise and Meta and video game consoles among consumers. It keeps going but I think I've exhausted the limits of sarcasm and should stop.

Anyway, Apple was never going to catch up this time.

Apple catches up and was ahead all along

Just as with smart watches and Maps and Alexa and HoloLens, it turns out that being first to market with something doesn't mean you're really ahead of the curve. It often means you're the beginning of the upward curve.

If you haven't thought out your strategy beyond rushing to launch desperately in order to be first, you might end up a Samsung Gear or vastly expensive Voice First shopping platform nobody uses for anything other than changing the currently playing song.

It might be time to lay off your world-leading VR staff and tacitly admit that nobody in management had really contemplated how HoloLens or the Meta Quest Pro could possibly hope to breathe after Apple walked right in and inhaled the world's high end oxygen.

There's reason to believe that everyone should have seen this coming. And it's not because Apple is just better than everyone else. It's more likely because Apple looks down the road further and thinks about the next step.

That's obvious when looking at how Apple lays its technical foundations.

Siri was Apple's first stab at offering an intelligent agent to consumers.

Pundits and an awful lot of awful journalists seem entirely disinterested in how anything is built, but rather only in the talking points used to market some new thing. Is Samsung promising that everyone will want a $2000 cell phone that magically transforms into an Android tablet with a crease? They were excited about that for years.

When Apple does all the work behind the scenes to roll out its unique strategic vision for how something new should work, they roll their eyes and say it should have been finished years ago. It's because people who have only delivered a product that is a string of words representing their opinions imagine that everything else in the world is also just an effortless salad tossing of their stream of consciousness.

They don't appreciate that real things have to be planned and designed and built.

And while people who just scribble down their thoughts actually believed that Apple hadn't imagined any use for AI before last Monday, and so would just deliver a button crafted by marketing to invoke the work delivered by the real engineers at Google and Microsoft or OpenAI, the reality was that Apple had been delivering the foundations of a system-wide intelligence via AI for years, right out in public.

Apple Intelligence started in the 1970s

Efforts to deliver a system wide model of intelligent agents that could spring into action in a meaningful way relevant to regular people using computers began shortly after Apple began making its first income hawking the Apple I nearly 50 years ago.

One of the first things Apple set about doing in the emerging world of personal computing was fundamental research into how people used computers, in order to make computing more broadly accessible.

That resulted in the Human User Interface Guidelines, which explained in intricate detail how things should work and why. They did this first in order for regular people to be able to make sense of computing at a time when you had to be a full time nerd hacker in order to load a program off tape and enable it to begin to PEEK and POKE at memory cells just to generate specific characters on your CRT display.

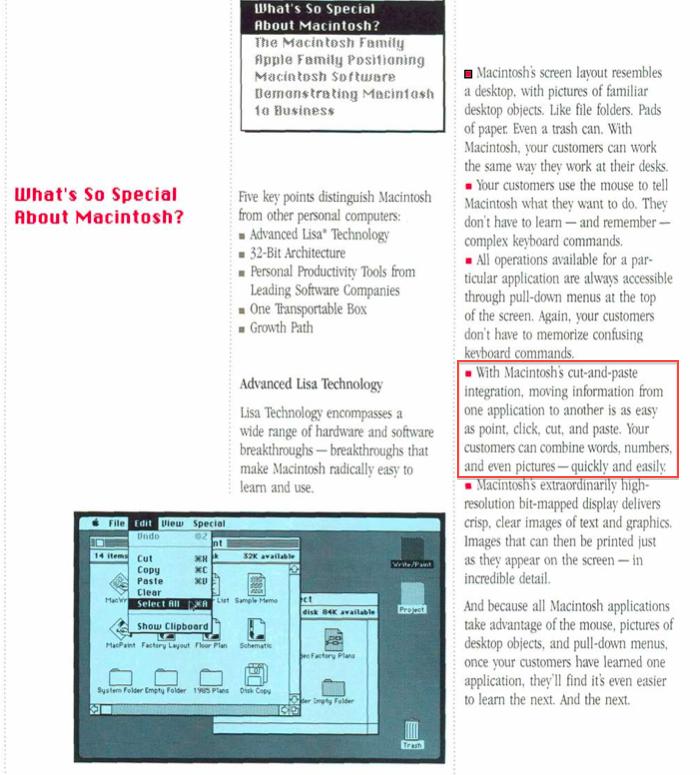

Apple Intelligence started with Lisa Technology

In Apple's HUIG, it was mandated that the user needed consistency of operation, the ability to undo mistakes, and a direct relationship between actions and results. This was groundbreaking in a world where most enthusiasts only cared about megahertz and BASIC. A lot of people, including developers, were upset that Apple was asserting such control over the interface and dictating how things should work.

Those that ignored Apple's guidelines have perished.

Not overnight, but with certainty, personal computing began to fall in line with the fundamental models of how things should work, largely created by Apple. Microsoft based Windows on it and eventually even the laggards of DOS came kicking and screaming into the much better world of graphical computing first defined by the Macintosh, where every application printed the same way, and copy/pasted content and open/saved documents using the same shortcuts, and where Undo was always right there when you needed it.

An era later, when everyone in the tech world was convinced that Microsoft had fully plundered Apple's work and that the Macintosh would never again be relevant and that Windows would now take over and become the interface of everything, Apple completely refreshed the Mac and made it more like the PC (via Intel) and less like the PC (with OS X). Apple incrementally clawed its way back into relevance, to the point where it could launch the next major revolution in personal computing: the truly usable mobile smartphone.

Apple crushed Microsoft's efforts at delivering a Windows Phone by stopping once again to determine -- after lengthy research -- not just how to make the PC "pocket sized," but how to transform it so that regular people could figure it out and use it productively as a mobile tool.

iPhone leaped over the entire global industry of mobile makers, not because it was just faster and more sophisticated, but largely because it was easy to use. And that was a result of a lot of invisible work behind the scenes.

Windows Phone failed in the face of iPhone

Pundits liked to chalk this up to Apple's marketing, as if the company had pulled some simple con over the industry and tricked us all into paying too much for a cell phone. The reality was that Apple put tremendous work into designing a new, mobile Human User Interface that your mom -- and her dad -- could use.

Across the same decades of way-back-when, Apple and the rest of the industry were also employing elements of AI to deliver specific features. At the height of Apple's actual beleaguerment in the early '90s, the company strayed from its focus on building products for real people and instead began building things that were just new.

Apple's secret labs churned out ways to relate information together in networks of links, how to summarize long passages of text into the most meaningful synopsis, how to turn handwriting into text on the MessagePad, and how to generate beautiful ligatures in fonts -- tasks that few saw any real use for, especially as the world tilted towards Windows.

What saved Apple was a strict return to building usable tools for real people, which started with the return of Steve Jobs. The iMac wasn't wild new technology; it was approachable and easy to use. That was also the basis of iPod, iPhone, iPad and Apple's subsequent successes.

At the same time, Apple was also incorporating the really new technology it had already developed internally. VTwin search was delivered as Spotlight, and other language tools and even those fancy fonts and handwriting recognition were morphed into technologies regular people could use.

Rather than trying to copy the industry in making a cell phone with a Start button, or a phone-tablet, or a touch-PC or some other kind of MadLib engineered refrigerator-toaster, Apple has focused on simplicity, and making powerful things easy to use.

In the same way, rather than just bolting ChatGPT onto its products and enabling its users to experience the joys of seven-fingered images and made-up Wikipedia articles where the facts are hallucinated, Apple again distilled the most useful applications of generative AI and set to work making this functionality easy to use, as well as private and secure.

This didn't start happening the day Craig Federighi first saw Microsoft Copilot. Apple's been making use of AI and machine learning for years, right out in the open.

It's used to find people in images, to recognize text throughout the interface for search indexing, and even to authenticate via Face ID. Apple made so much use of machine learning that it started building Neural Engine cores into Apple Silicon with the release of iPhone X in 2017.

Apple was talking about privacy and machine intelligence for years

Artificial Intelligence gets a euphemism in AI

Apple didn't really call any of its work with the Neural Engine "AI." Back then, the connotation of "Artificial Intelligence" was largely negative.

It still is.

To most people, especially those of us raised in the '80s, the notion of "artificial" usually pertained to fake flowers, unnatural flavorings, or synthetic food colors that might cause cancer and definitely seemed to set off hyperactivity in children.

Artificial Intelligence was the Terminator's Skynet, and the dreary, bleak movie by Steven Spielberg.

Some time ago, when I was visiting a friend in Switzerland, where the three official languages render all consumer packaging a Rosetta Stone of sorts between French, Italian and German, I noticed that the phrase "Artificially Flavored" on my box of chocolatey cereal was represented with a version of English's "artificial" in the Latin languages, but in German used the word künstlich, literally "Artistic."

I wondered to myself why German uses "like an artist" rather than the word for "fake" in my native language before suddenly coming to the realization that artificial doesn't literally mean "fake," but rather an "artistic representation."

The box didn't say I was eating fake chocolate puffs; it was instead just using somebody's artistic impression of the flavor of chocolate. It was a revelation to my brain. The negative connotation of artificial was so strongly entrenched that I had never before realized that it was based on the same root as artistic or artifice.

It wasn't fraud, it was a sly replacement with something to trick the eye, or in this case, the tastebuds.

Artificial Intelligence is similarly not just trying to "fake" human intelligence, but is rather an artistic endeavor to replicate facets of how our brains work. In the last few years, the negative "fake" association of artificial intelligence has softened as we have begun to not just rebrand it as the initials AI, but also to see more positive aspects of using AI to do some task, like to generate text that would take some effort otherwise. Or to take an idea and generate an image a more accomplished artist might craft.

The shift in perception on AI has turned a dirty word into a hype cycle. AI is now expected to respark the doldrums of PC sales, or speed up the prototyping of all manner of things, and glean meaningful details out of absurd mountains of text or other Big Data. Some people are still warning that AI will kill us all, but largely the masses have accepted that AI might save them from some busy-work.

But Apple isn't much of a follower in marketing. When Apple began really talking about computer "Intelligence" on its devices at WWDC, it didn't blare out the term "Artificial Intelligence." It had its own phrase.

I'm not talking about last week. I'm still talking about 2017, the year Apple introduced iPhone X and where Kevin Lynch introduced the new watchOS 4 watch face "powered by Siri intelligence."

Phil Schiller also talked about HomePod delivering "powerful speaker technology, Siri intelligence, and wireless access to the entire Apple Music library." Remember Siri Intelligence?

It seems like nobody does, even as pundits were lining up over the last year to sell the talking point that Apple had never had any previous brush with anything related to AI before last Monday. Or, supposedly, before Craig experienced his transformation on Mount Microsoft upon being anointed by the holy Copilot and ripped off all of its rival's work -- apart from the embarrassment of Recall.

Apple isn't quite ashamed of Siri as a brand, but it has dropped the association -- or maybe personification -- of its company wide "intelligent" features with Siri as a product. It has recently decided to tie its intelligence work to the Apple brand instead, conveniently coming up with branding for AI that is both literally "A.I." and also proprietary to the company.

Apple Intelligence also suavely sidesteps the immediate and visceral connotations still associated with "artificial" and "artificial intelligence." It's perhaps one of the most gutsy appropriations of a commonplace idea ever pulled off by a marketing team. What if we take a problematic buzzword, defuse the ugly part, and turn it into a trademark? Genius.

The years of prep-work behind Apple Intelligence

It wasn't just fresh branding that launched Apple Intelligence. Apple's Intelligence (née Siri) also had its foundations laid in Apple Silicon, long before it was commonplace to try to sell the idea that your hardware could do AI without offloading every task to the cloud. Beyond that, Apple also built the tools to train models and integrate machine learning into its own apps -- and those of third-party developers -- with Core ML.

The other connection between Apple's Intelligence and Siri: Apple also spent years deploying the idea among its developers of Siri Intents, which are effectively footholds developers could build into their code to enable Siri to pull results from their apps to return in response to the questions users asked Siri.

All this systemwide intelligence Apple was integrating into Siri answers also now enables Apple Intelligence to connect and pool users' own data in ways that are secure and private, without sucking up all their data and pushing it through a central LLM cloud model where it could be factored into AI results.

With Apple Intelligence, your Mac and iPhone can take the infrastructure and silicon Apple has already built to deliver answers and context that other platforms haven't thought to offer. Microsoft is just now trying to begin pooping out some kind of AI engines in ARM chips that can run Windows, even though there are still issues with Windows on ARM, most Windows PCs are not running ARM, and little effort in AI has been made outside of just rigging up another chat-bot.

Apple Intelligence stands to be more than a mere chat bot

The entire industry has been chasing cloud-based AI in the form of mostly generative AI based on massive models trained on suspect material. Google dutifully scraped up so much sarcasm and subterfuge on Reddit that its confident AI answers are often quite absurd.

Further, the company tried so hard to force its models to generate inoffensive yet stridently progressive AI that it effectively forgot that the world has been horrific and racist, and instead just dreams up a history where the founding fathers were from all backgrounds and there were no controversies or oppression.

Google's flavor of AI was literally artificial in the worst senses.

And because Apple focused on the most valuable, mainstream uses of AI to demonstrate its Apple Intelligence, it doesn't have to expose itself to the risk of generating whatever the most unpleasant corners of humanity would like it to cough up.

What Apple is selling with today's AI is the same thing its HUIG sold with the release of Macintosh: premium hardware that can deliver the value of its work in imagining useful, approachable, clearly functional and easy to demonstrate features that are easy to grasp and use. It's not just throwing up a new AI service in hopes that there will someday be a business model to support it.

For Apple Intelligence, the focus isn't just catching up to the industry's "artificial" generation of placeholder content, a sort of party trick lacking a clear, unique value.

It's the state-of-the-art in intelligence and machine learning.

Read on AppleInsider