Apple could have used pinhole-sized sensors in display to keep Touch ID on the iPhone X

Before the introduction of the iPhone X and Face ID, Apple was considering ways to implement Touch ID on a smartphone without using an externally visible fingerprint reader, with one technique involving a series of pinholes in the display panel that could allow a fingerprint to be captured through the screen.

The introduction of the iPhone X in 2017 brought with it a fundamental change in the way Apple designed the iPhone, eliminating the famous home button in favor of an edge-to-edge display. By removing the home button, Apple also had to reconsider how it secured the iPhone, as Touch ID was previously housed in the now-eliminated component.

Apple's ultimate answer was to replace it with Face ID, using the TrueDepth camera array to authenticate the user instead of their fingerprint. While other device producers simply shifted the fingerprint reader to elsewhere on the device, typically on the rear, Apple opted to fundamentally change its security processes instead.

However, a patent application published by the US Patent and Trademark Office on Thursday reveals Apple was still considering how to retain Touch ID while using a larger display with seemingly no available space for a reader. The application, titled "Electronic device including pin hole array mask above optical image sensor and laterally adjacent light source and related methods," was filed on May 23, 2016, over a year before the iPhone X's launch, suggesting it was still a consideration at that point.

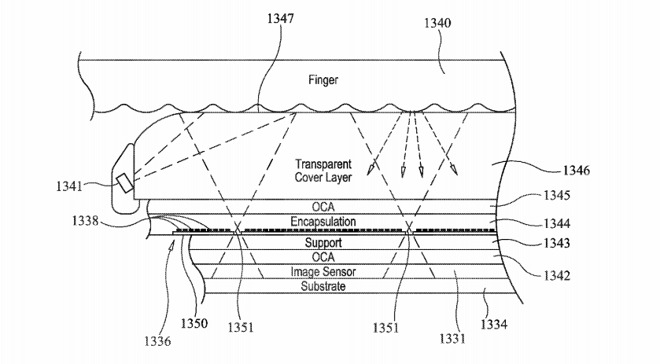

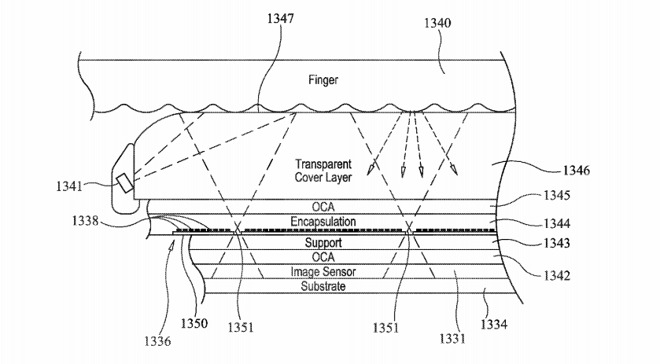

In short, the filing suggests the use of many small holes in the display panel to allow light to pass through to an optical image sensor below. By shining light onto the user's finger, reflected light could pass through the holes to the optical sensor, and could be used to produce an image of the fingerprint.

A cross-section of the display, showing pinholes allowing light to pass through the display for fingerprint reading

There would be a large number of holes in order to cover a wide enough area, and they would be equally spaced between pixels on the display panel so as not to be easily visible to the user. A light source laterally adjacent to the imaging sensor is also used to shine light through the holes onto the user's finger. While light from the display panel could do a similar job, a separate light source leaves the display available for other tasks and enables the use of infrared or ultraviolet light for fingerprint reading.

A transparent layer may also be used between the display panel and the pin hole array mask layer, which can give space for light to reflect against the user's finger and bounce back to the holes, passing through to the sensor.

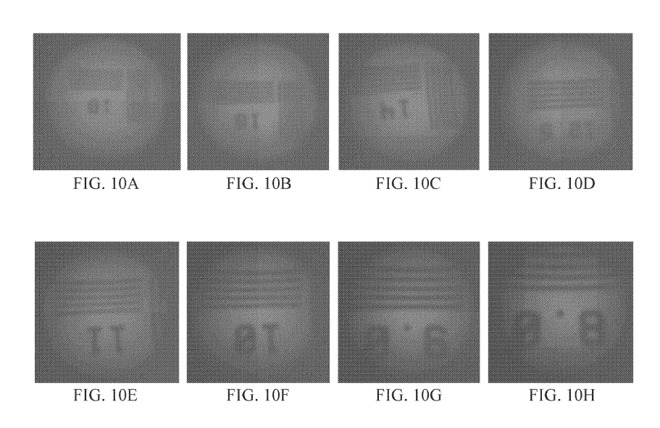

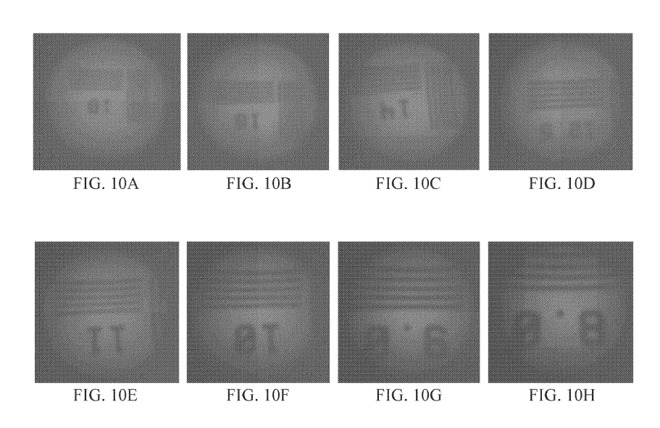

Despite relying on pinholes, using a plurality of them gives the sensor enough data to form an image of the user's finger. Prototype test images show the concept working with text and lines down to a micron level, making it more than sufficient for fingerprints.

Apple suggests the system could also save users time, as it could eliminate a separate authentication step by simply reading the finger as it touches the display whenever authentication is required.

An example of the images that could be captured by the technique on prototype hardware

Apple files numerous patents and applications with the USPTO on a weekly basis. While there is no guarantee that the concepts described will make their way into a future product or service, they do indicate areas of interest for the company's research and development efforts.

Using holes in the display is not the only way Apple has come up with for reading a fingerprint. Patents granted to the company in April relate to the use of acoustic transducers to vibrate the surface of the display and to monitor for waves altered by coming into contact with fingerprint ridges.

If adopted, the technique could effectively turn the entire display into a fingerprint reader, capturing the biometric element regardless of where the finger touches the display, and at any angle.

Comments

FaceID is not perfect, but it’s pretty damned good, and on balance has fewer flaws than TouchID did. After using an iPhone XS for 6 months, my only complaint with the notch is that I can’t see the battery percentage without swiping down (and reachability is not nearly as convenient as it was with the home button).

Turns out that a very common use scenario is for my phone to be on the table next to my laptop, and for me to reach over and use it sort of "calculator style" without picking it up. So I have to pick it up and look at the stupid thing and then put it back down and blah blah… also I'll sometimes try to use it while walking or standing around on the subway and use it at hip-height instead of pulling it up to my face.

Apple made the right call, they shouldn't be designing around the target audience of Me, but… le sigh.

Also it's gonna be too hot for jackets soon… I may switch back to my old SE for the rest of the summer.

Oh, after reading additional comments, others have pointed out what I said..... that'll learn me to sign up and repeat others lol